Javier Ibanez-Guzman

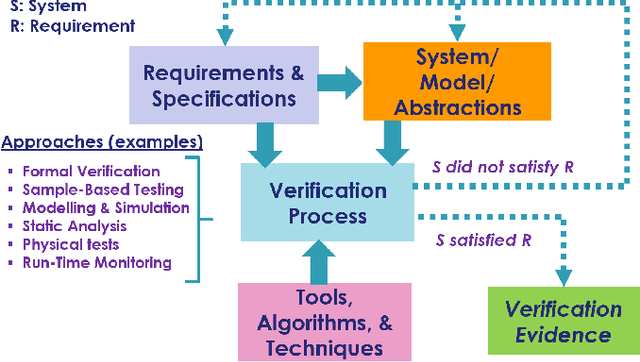

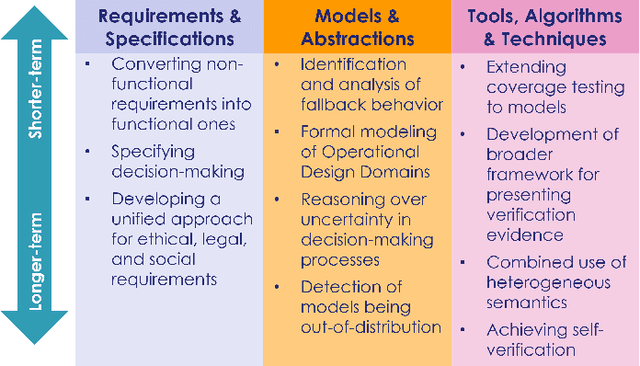

Grand Challenges in the Verification of Autonomous Systems

Nov 21, 2024

Abstract: Autonomous systems use independent decision-making with only limited human intervention to accomplish goals in complex and unpredictable environments. As the autonomy technologies that underpin them continue to advance, these systems will find their way into an increasing number of applications in an ever wider range of settings. If we are to deploy them to perform safety-critical or mission-critical roles, it is imperative that we have justified confidence in their safe and correct operation. Verification is the process by which such confidence is established. However, autonomous systems pose challenges to existing verification practices. This paper highlights viewpoints of the Roadmap Working Group of the IEEE Robotics and Automation Society Technical Committee for Verification of Autonomous Systems, identifying these grand challenges, and providing a vision for future research efforts that will be needed to address them.

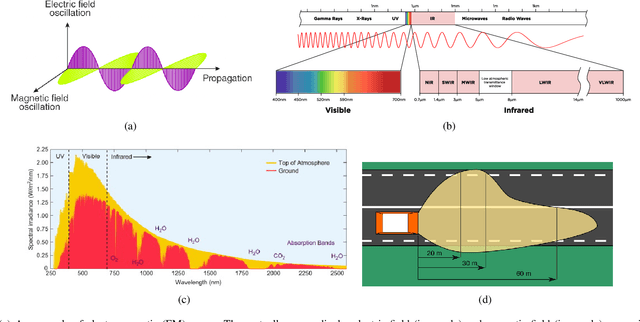

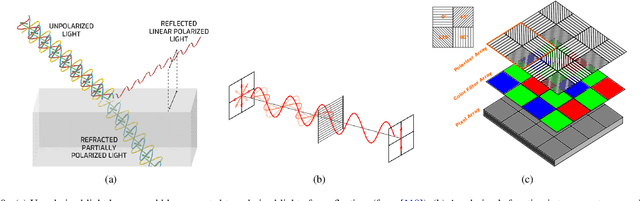

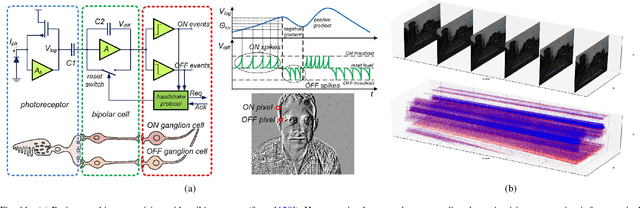

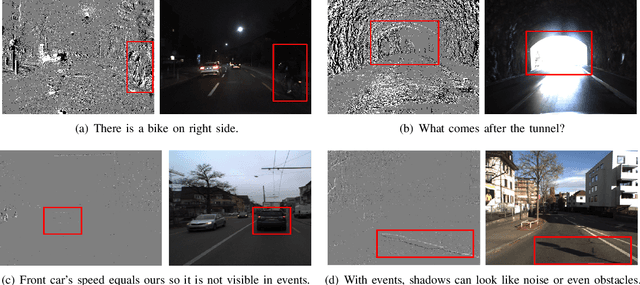

Unconventional Visual Sensors for Autonomous Vehicles

May 19, 2022

Abstract: Autonomous vehicles rely on perception systems to understand their surroundings for subsequent navigation tasks. Cameras are essential to perception systems because modern computer vision algorithms give them an advantage in object detection and recognition over other sensors such as LiDARs and radars. However, because of its inherent imaging principle, a standard RGB camera may perform poorly in a variety of adverse scenarios, including low illumination, high contrast, and bad weather such as fog, rain, or snow. Meanwhile, estimating 3D information from 2D image detections is generally more difficult than with LiDARs or radars. Several new sensing technologies have emerged in recent years to address the limitations of conventional RGB cameras. In this paper, we review the principles of four novel image sensors: infrared cameras, range-gated cameras, polarization cameras, and event cameras. Their comparative advantages, existing or potential applications, and corresponding data processing algorithms are presented systematically. We expect that this study will give practitioners in the autonomous driving community new perspectives and insights.

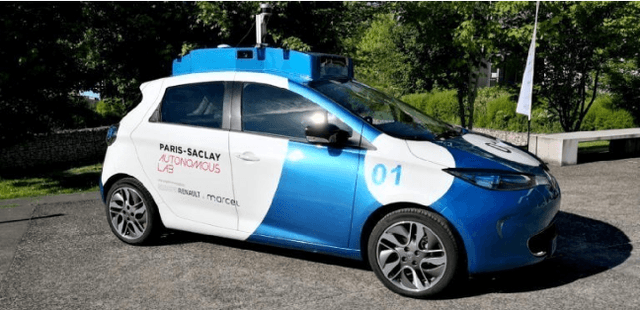

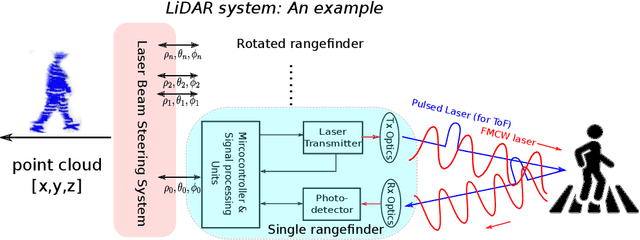

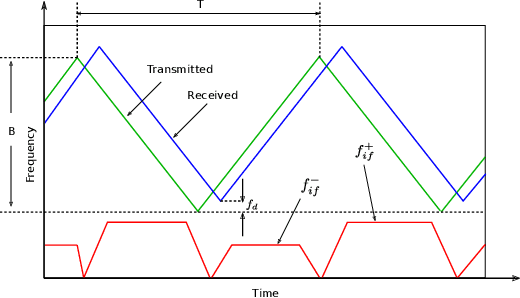

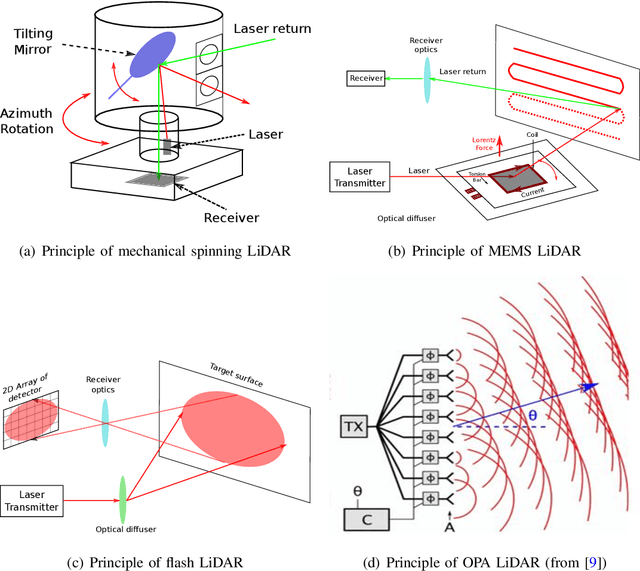

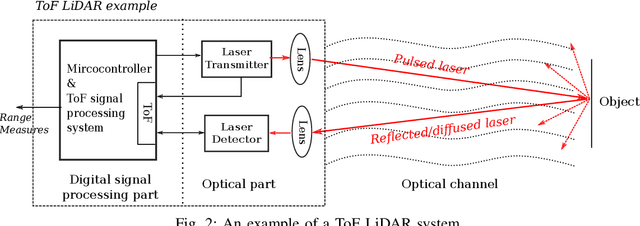

Lidar for Autonomous Driving: The principles, challenges, and trends for automotive lidar and perception systems

Apr 17, 2020

Abstract: Autonomous vehicles rely on their perception systems to acquire information about their immediate surroundings. They must detect the presence of other vehicles, pedestrians, and other relevant entities. Safety concerns and the need for accurate estimations have led to the introduction of Light Detection and Ranging (LiDAR) systems to complement camera- or radar-based perception systems. This article reviews state-of-the-art automotive LiDAR technologies and the perception algorithms used with them. LiDAR systems are introduced first by analyzing their main components, from the laser transmitter to the beam-scanning mechanism. The advantages, disadvantages, and current status of various solutions are presented and compared. Then, the perception pipeline for LiDAR data processing is detailed from an autonomous vehicle perspective. Both model-driven approaches and emerging deep learning solutions are reviewed. Finally, we provide an overview of the limitations, challenges, and trends for automotive LiDARs and perception systems.

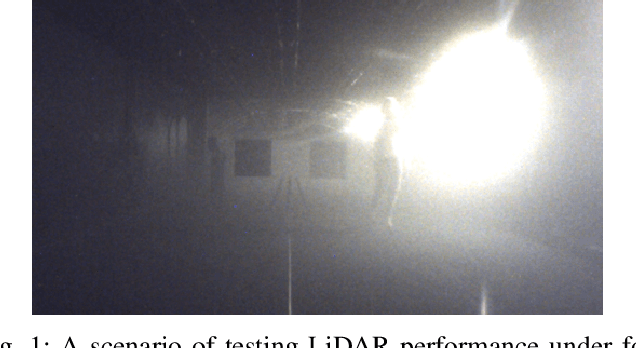

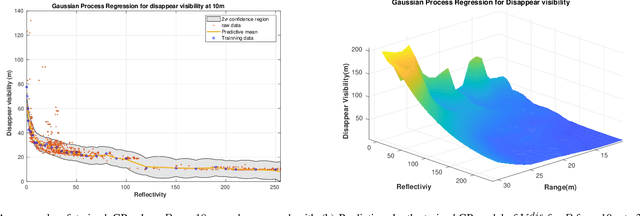

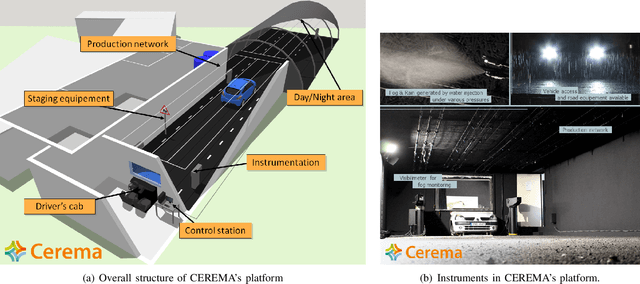

What happens for a ToF LiDAR in fog?

Mar 14, 2020

Abstract: This article analyzes the performance of a typical time-of-flight (ToF) LiDAR in fog. By controlling the fog density within the CEREMA Adverse Weather Facility, the relationship between ranging performance and fog is investigated both qualitatively and quantitatively. Furthermore, a machine-learning-based model is trained on the collected data to predict the minimum fog visibility that still allows successful ranging for this type of LiDAR. The experimental results and methods presented are useful for defining ToF LiDAR specifications in the automotive industry.
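For readers unfamiliar with the quantities involved, the sketch below uses a simplified textbook model. It is not the method of the article, which trains a machine-learning model on measured data. Range follows from the pulse round-trip time, d = c·Δt/2. Fog is modelled with Beer-Lambert attenuation over the two-way path, and the extinction coefficient is taken from meteorological visibility via the Koschmieder relation (σ ≈ 3.912/V for a 2% contrast threshold). Reusing that visible-light relation at a near-infrared LiDAR wavelength is an assumption. The function names and the detection threshold `t_min` are hypothetical illustrations, and a real sensor's limit also depends on target reflectivity, receiver noise, and fog backscatter.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_range(round_trip_s: float) -> float:
    """Range from a pulsed time-of-flight measurement (pulse travels out and back)."""
    return C * round_trip_s / 2.0


def extinction_from_visibility(visibility_m: float, contrast_threshold: float = 0.02) -> float:
    """Koschmieder relation: sigma = -ln(eps) / V, about 3.912 / V for eps = 0.02.

    Assumption: the visible-light relation is reused unchanged at the LiDAR wavelength.
    """
    return -math.log(contrast_threshold) / visibility_m


def two_way_transmission(range_m: float, visibility_m: float) -> float:
    """Beer-Lambert attenuation over the out-and-back path: exp(-2 * sigma * R)."""
    sigma = extinction_from_visibility(visibility_m)
    return math.exp(-2.0 * sigma * range_m)


def min_visibility_for_range(range_m: float, t_min: float, contrast_threshold: float = 0.02) -> float:
    """Smallest visibility at which two-way transmission to range_m still reaches t_min.

    t_min is a hypothetical detection threshold (0 < t_min < 1).
    Solving exp(-2 * sigma * R) = t_min with sigma = -ln(eps) / V gives
    V = 2 * R * ln(eps) / ln(t_min).
    """
    return 2.0 * range_m * math.log(contrast_threshold) / math.log(t_min)


if __name__ == "__main__":
    r = tof_range(200e-9)  # a 200 ns round trip, roughly 30 m
    print(f"range: {r:.2f} m")
    print(f"two-way transmission at 50 m visibility: {two_way_transmission(r, 50.0):.4f}")
    print(f"min visibility for T >= 0.01: {min_visibility_for_range(r, 0.01):.1f} m")
```

Because the two-way path doubles the attenuation exponent, halving the visibility roughly squares the transmission loss at a fixed range. This is why a closed-form model like this one can only bound, not replace, the measured behaviour the article reports.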