Pierre Duthon

Cerema

Sparse LiDAR and Stereo Fusion for Depth Estimation and 3D Object Detection

Mar 05, 2021

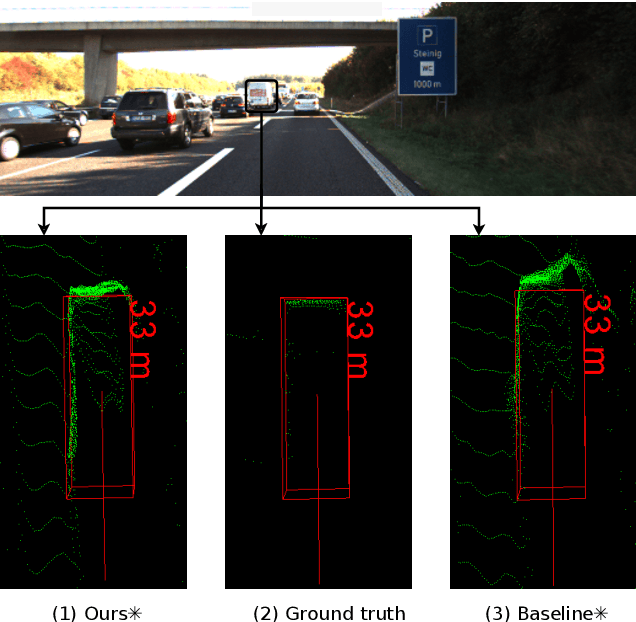

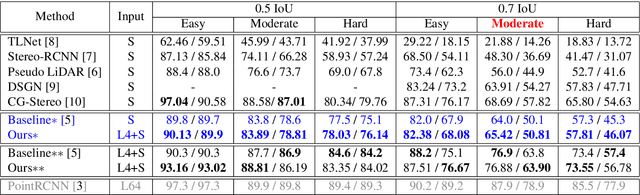

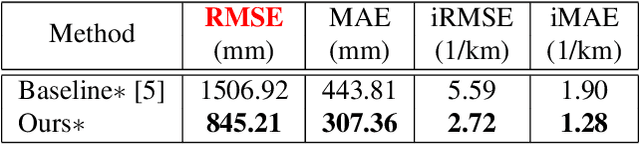

Abstract: The ability to accurately detect and localize objects is recognized as the most important capability for the perception of self-driving cars. Moving from 2D to 3D object detection, the most difficult part is determining the distance from the ego-vehicle to objects. Expensive technology like LiDAR can provide precise and accurate depth information, so most studies have tended to focus on this sensor, revealing a performance gap between LiDAR-based methods and camera-based methods. Although many authors have investigated how to fuse LiDAR with RGB cameras, as far as we know there are no studies that fuse LiDAR and stereo in a deep neural network for the 3D object detection task. This paper presents SLS-Fusion, a new approach that fuses data from a 4-beam LiDAR and a stereo camera via a neural network for depth estimation, producing better dense depth maps and thereby improving 3D object detection performance. Since a 4-beam LiDAR is cheaper than the well-known 64-beam LiDAR, this approach is also classified as a low-cost sensor-based method. Evaluation on the KITTI benchmark shows that the proposed method significantly improves depth estimation performance compared to a baseline method. When applied to 3D object detection, it also achieves a new state of the art among low-cost sensor-based methods.
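
The link between a dense depth map and 3D detection rests on two standard pinhole-camera relations: stereo disparity converts to depth as Z = f·B/d, and each pixel (u, v) with depth Z back-projects to a 3D point. The sketch below illustrates those two generic steps only; it is not the SLS-Fusion network, and the function names, intrinsics (fx, fy, cx, cy) and example values are hypothetical, chosen for illustration.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def disparity_to_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Rectified pinhole stereo: Z = f * B / d (hypothetical helper).

    Non-positive disparity carries no depth, so infinity is returned.
    """
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


def backproject(depth: List[List[float]], fx: float, fy: float,
                cx: float, cy: float) -> List[Point3D]:
    """Turn a dense depth map (rows = v, columns = u) into camera-frame 3D points.

    Pixels with zero or infinite depth are skipped as invalid.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0 or z == float("inf"):
                continue
            x = (u - cx) * z / fx  # horizontal offset from the optical axis
            y = (v - cy) * z / fy  # vertical offset from the optical axis
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Assumed example: f = 700 px, B = 0.5 m, d = 35 px gives Z = 10 m.
    print(disparity_to_depth(35.0, 700.0, 0.5))
    print(backproject([[0.0, 2.0], [4.0, 0.0]], fx=2.0, fy=2.0, cx=0.0, cy=0.0))
```

Because Z is inversely proportional to d, a one-pixel disparity error costs far more depth accuracy at long range than at short range. This is one reason sparse but accurate LiDAR returns are a natural complement to stereo.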

What happens for a ToF LiDAR in fog?

Mar 14, 2020

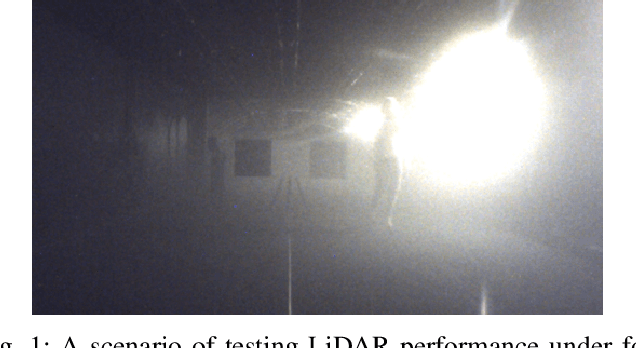

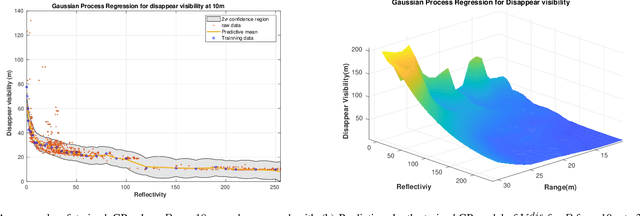

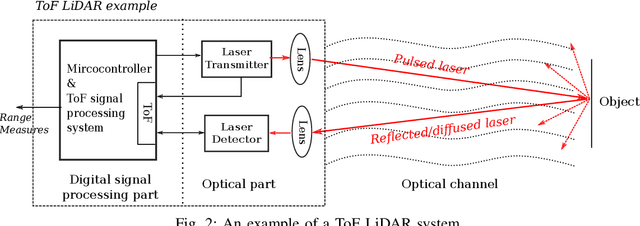

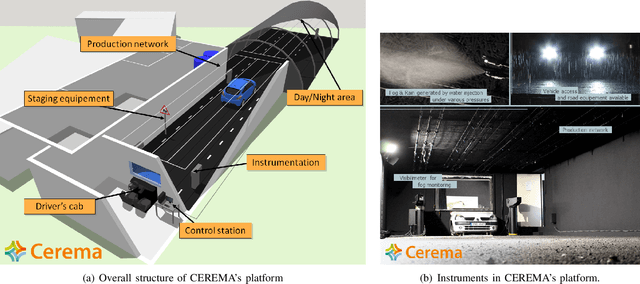

Abstract: This article analyzes the performance of a typical time-of-flight (ToF) LiDAR in fog. By controlling the fog density within the CEREMA Adverse Weather Facility, the relationship between ranging performance and fog is investigated both qualitatively and quantitatively. Furthermore, based on the collected data, a machine-learning-based model is trained to predict the minimum fog visibility that allows successful ranging for this type of LiDAR. The experimental results and methods presented here can help the automotive industry specify ToF LiDARs.
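
To make "minimum fog visibility that allows successful ranging" concrete, the sketch below uses a simplified physical model rather than the machine learning model described in the abstract. It makes three assumptions. First, visibility V maps to an extinction coefficient through Koschmieder's law with the 5% contrast threshold of the meteorological optical range, σ = -ln(0.05)/V ≈ 3/V. Second, the returned signal follows a simplified LiDAR equation, P ∝ ρ/R² · exp(-2σR), with a two-way Beer-Lambert loss. Third, ranging succeeds when P meets a fixed threshold. The function names and threshold values are hypothetical.

```python
import math

# Assumption: 5% contrast threshold (meteorological optical range convention).
CONTRAST_THRESHOLD = 0.05


def extinction_from_visibility(visibility_m: float) -> float:
    """Koschmieder's law: sigma = -ln(0.05) / V, roughly 3 / V (per metre)."""
    return -math.log(CONTRAST_THRESHOLD) / visibility_m


def relative_return(range_m: float, visibility_m: float, reflectivity: float = 1.0) -> float:
    """Simplified LiDAR equation: P ~ rho / R^2 * exp(-2 * sigma * R), unitless."""
    sigma = extinction_from_visibility(visibility_m)
    return reflectivity / range_m ** 2 * math.exp(-2.0 * sigma * range_m)


def min_visibility(range_m: float, detection_threshold: float, reflectivity: float = 1.0,
                   v_lo: float = 1.0, v_hi: float = 1e5, iters: int = 100) -> float:
    """Bisect for the smallest visibility whose return at range_m meets the threshold.

    The return grows monotonically with visibility, so bisection applies.
    Returns inf if the target is undetectable even at v_hi (near-clear air).
    """
    if relative_return(range_m, v_hi, reflectivity) < detection_threshold:
        return math.inf
    if relative_return(range_m, v_lo, reflectivity) >= detection_threshold:
        return v_lo
    for _ in range(iters):
        mid = 0.5 * (v_lo + v_hi)
        if relative_return(range_m, mid, reflectivity) >= detection_threshold:
            v_hi = mid  # mid still works, so search lower
        else:
            v_lo = mid  # mid fails, so visibility must be higher
    return v_hi


if __name__ == "__main__":
    # Hypothetical threshold: the return a 20 m target gives at 100 m visibility.
    threshold = relative_return(20.0, 100.0)
    print(min_visibility(20.0, threshold))  # close to 100 m by construction
```

A data-driven model, like the one trained in the article, can capture effects this toy model ignores, such as receiver saturation, backscatter from the fog itself, and sensor-specific detection logic.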

Baselines and a datasheet for the Cerema AWP dataset

Jun 11, 2018

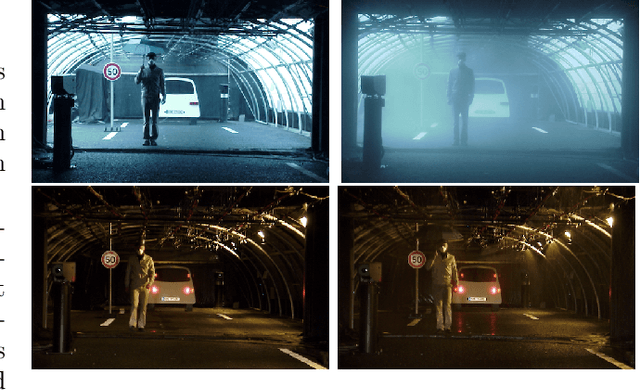

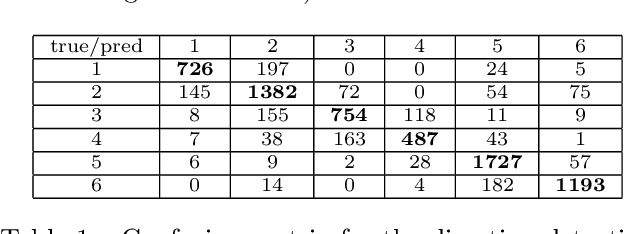

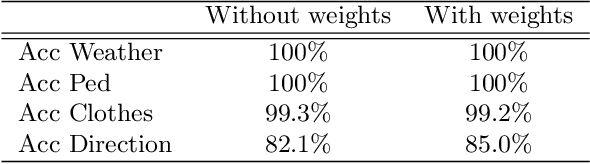

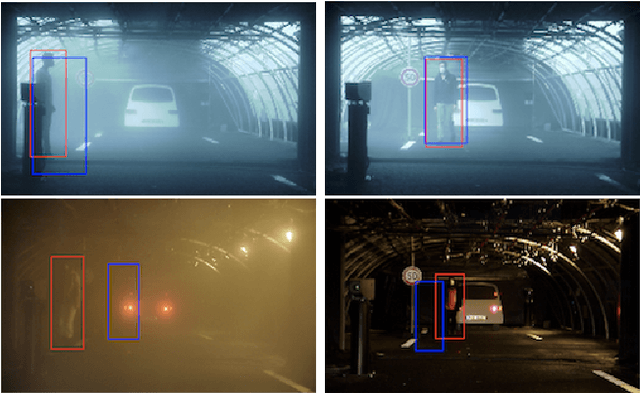

Abstract: This paper presents the recently published Cerema AWP (Adverse Weather Pedestrian) dataset for various machine learning tasks, along with its exports in machine-learning-friendly formats. We explain why this dataset can be interesting (mainly because it is a highly controlled and fully annotated image dataset) and present baseline results for various tasks. Moreover, we decided to follow the very recent proposal of datasheets for datasets, standardizing all the available information about the dataset with transparency as the objective.
