Xuan Zheng

Detecting Stimuli with Novel Temporal Patterns to Accelerate Functional Coverage Closure

Jun 19, 2024

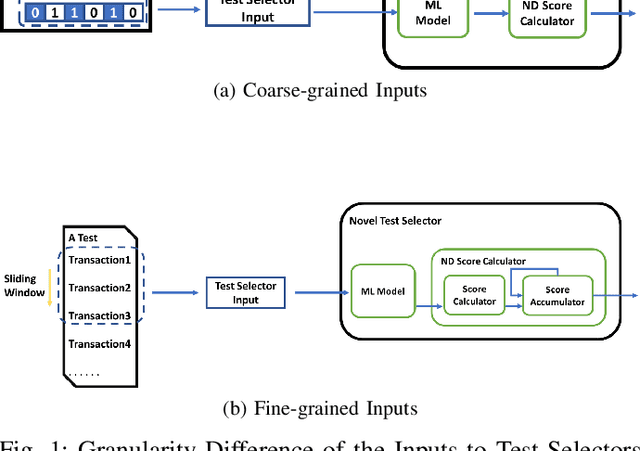

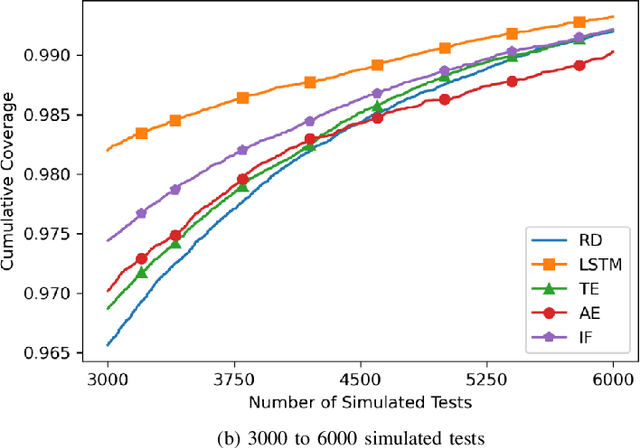

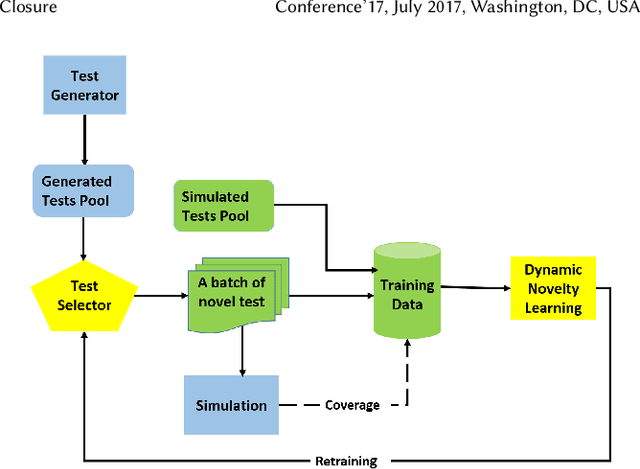

Abstract: Novel test selectors have demonstrated their effectiveness in accelerating functional coverage closure for various industrial digital designs in simulation-based verification. Their primary advantages include performance that is not impacted by coverage holes, straightforward implementation, and relatively low computational expense. However, the detection of stimuli with novel temporal patterns remains largely unexplored. This paper introduces two novel test selectors designed to identify such stimuli. Experiments show that both test selectors accelerate functional coverage closure for a commercial bus bridge compared to random test selection. Specifically, one selector achieves a 26.9% reduction in the number of simulated tests required to reach 98.5% coverage, outperforming the savings achieved by two previously published test selectors by factors of 13 and 2.68, respectively.

Using Neural Networks for Novelty-based Test Selection to Accelerate Functional Coverage Closure

Jul 07, 2022

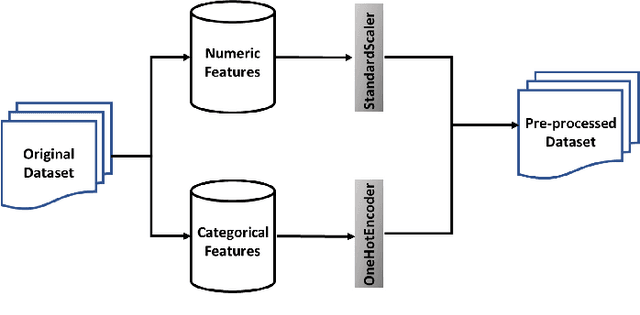

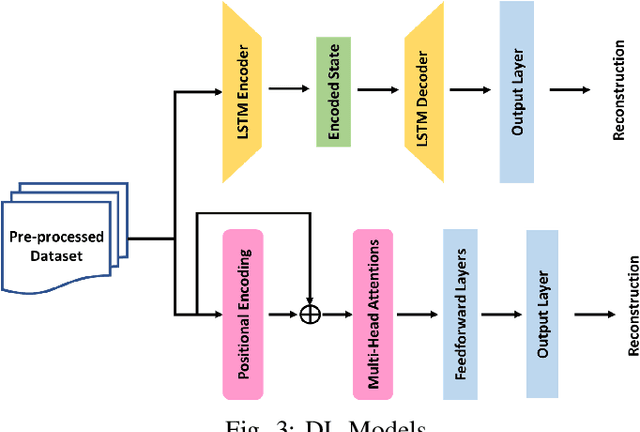

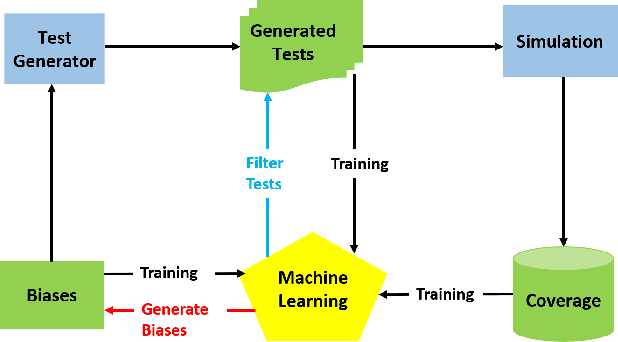

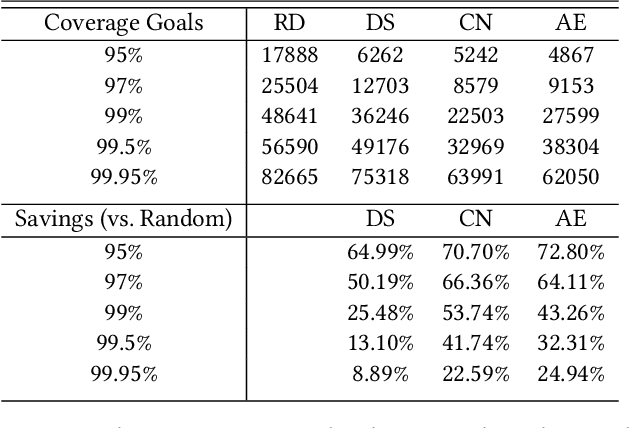

Abstract: Machine learning (ML) has been used to accelerate the closure of functional coverage in simulation-based verification. Supervised ML algorithms, the prevalent option in previous work, are used to bias test generation or to filter the generated tests. However, for coverage events that have not yet been hit, these algorithms lack positive examples to learn from in the training phase. Therefore, the tests they generate or filter cannot effectively fill the coverage holes. The problem is more severe when verifying large-scale designs, because the coverage space is larger and the functionality is more complex. This paper presents a configurable framework for test selection based on neural networks (NN), which, under three configurations, achieves a coverage gain similar to that of random simulation with far less simulation effort. Moreover, the performance of the framework is not limited by the number of coverage events being hit. A commercial signal processing unit is used in the experiment to demonstrate the effectiveness of the framework. Compared to random simulation, the framework reduces the simulation time needed to reach the 99% coverage level by up to 53.74%.
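The idea of selecting tests by novelty rather than by predicted coverage can be illustrated with a small sketch. This is not the framework from the paper: the abstract does not specify the neural network or its inputs, so the sketch swaps in a plain nearest-neighbour distance as the novelty score. The function names `novelty` and `select_novel_tests`, and the representation of each test as a numeric feature tuple, are assumptions made for illustration only.

```python
import math

def novelty(candidate, simulated):
    """Novelty score (assumed): distance to the nearest already-simulated test."""
    if not simulated:
        return math.inf  # nothing simulated yet, so every test counts as novel
    return min(math.dist(candidate, s) for s in simulated)

def select_novel_tests(candidates, simulated, k):
    """Greedily pick up to k candidates, taking the most novel one each round."""
    simulated = list(simulated)   # copy so the caller's list is not modified
    remaining = list(candidates)
    chosen = []
    for _ in range(min(k, len(remaining))):
        best = max(remaining, key=lambda c: novelty(c, simulated))
        remaining.remove(best)
        chosen.append(best)
        # Treat the pick as simulated so its near-duplicates score low next round.
        simulated.append(best)
    return chosen

# Hypothetical feature vectors for tests that were already simulated
# and for generated tests waiting to be selected.
simulated = [(0.0, 0.0), (1.0, 1.0)]
candidates = [(0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (3.0, 0.0)]
picked = select_novel_tests(candidates, simulated, k=2)
print(picked)
```

Because the score depends only on how different a test is from what has already been simulated, it needs no positive examples for unhit coverage events, which is the property the abstract highlights.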

Operational Adaptation of DNN Classifiers using Elastic Weight Consolidation

Apr 30, 2022

Abstract: Autonomous systems (AS) often use Deep Neural Network (DNN) classifiers to allow them to operate in complex, high-dimensional, non-linear, and dynamically changing environments. Due to the complexity of these environments, DNN classifiers may misclassify inputs when they encounter new tasks in their operational environments that were not identified during development. Removing a system from operation and retraining it to include the newly identified task becomes economically infeasible as the number of such autonomous systems increases. Additionally, such misclassifications may cause financial losses and safety threats to the AS or to other operators in its environment. In this paper, we propose to reduce such threats by investigating whether DNN classifiers can adapt their knowledge to learn new information in the AS's operational environment, using only a limited number of observations encountered sequentially during operation. This allows the AS to adapt to newly encountered information and hence increases the reliability of its classifications. However, retraining DNNs on observations that differ from those used in prior training is known to cause catastrophic forgetting or significant model drift. We investigate whether this problem can be controlled by using Elastic Weight Consolidation (EWC) whilst learning from limited new observations. We carry out experiments using original and noisy versions of the MNIST dataset to represent known and new information to DNN classifiers. Results show that using EWC makes the process of adaptation to new information considerably more controlled, thus allowing for reliable adaptation of ASs to new information in their operational environment.
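For readers unfamiliar with EWC, the following is a minimal sketch of its regularisation term in the commonly cited form: the loss on the new observations plus (λ/2) · Σᵢ Fᵢ (θᵢ − θᵢ*)², where θ* are the parameters learned before adaptation and F is a diagonal Fisher information estimate. The abstract does not give the authors' exact formulation or hyperparameters, so the function names, the flat-list parameter representation, and the example values below are assumptions for illustration.

```python
def ewc_penalty(params, anchor_params, fisher, lam):
    """Quadratic EWC penalty: (lam / 2) * sum_i F_i * (theta_i - theta*_i)^2."""
    return 0.5 * lam * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher)
    )

def ewc_loss(new_task_loss, params, anchor_params, fisher, lam):
    """Total loss: fit the new observations while staying near the old weights."""
    return new_task_loss + ewc_penalty(params, anchor_params, fisher, lam)

# Hypothetical values: two parameters, the first judged more important
# (larger Fisher value) for the previously learned task.
params = [1.0, 2.0]          # current weights during adaptation
anchor_params = [0.0, 2.0]   # weights learned before deployment
fisher = [4.0, 1.0]          # diagonal Fisher information estimate
total = ewc_loss(0.25, params, anchor_params, fisher, lam=0.5)
print(total)
```

Parameters with a large Fisher value are penalised heavily for moving away from their earlier values, which is how EWC is meant to limit catastrophic forgetting while the classifier learns from a small number of new observations.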
