Using Neural Networks for Novelty-based Test Selection to Accelerate Functional Coverage Closure

Jul 07, 2022

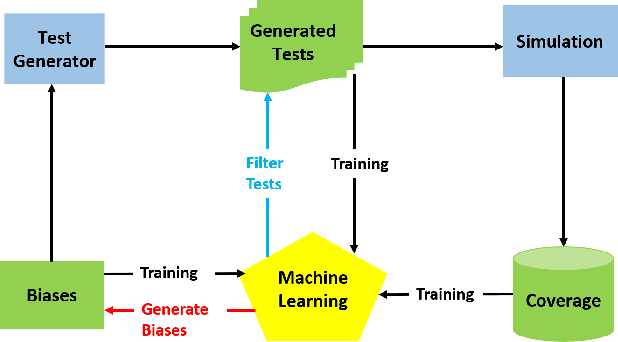

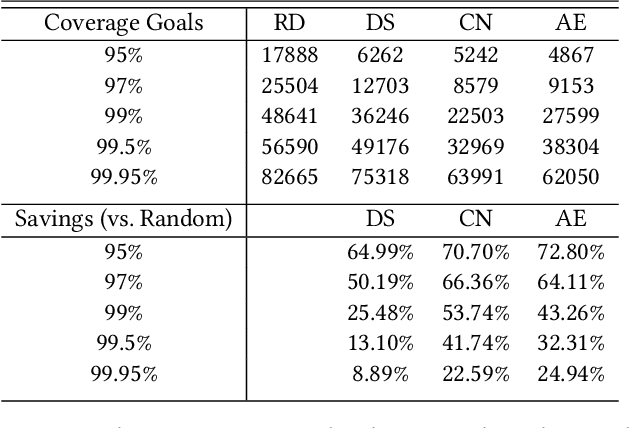

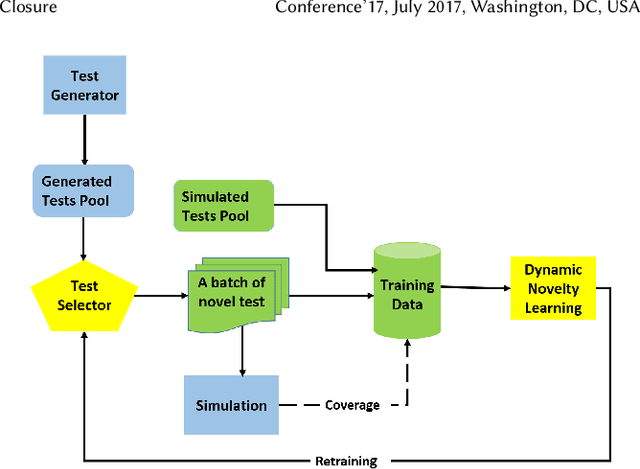

Machine learning (ML) has been used to accelerate the closure of functional coverage in simulation-based verification. Supervised ML algorithms, the prevalent choice in previous work, are used to bias test generation or to filter the generated tests. For coverage events that have not yet been hit, however, these algorithms have no positive examples to learn from in the training phase, so the tests they generate or filter cannot effectively fill the coverage holes. The problem is more severe when verifying large-scale designs, because the coverage space is larger and the functionality is more complex. This paper presents a configurable framework for test selection based on neural networks (NN). Under three configurations, the framework achieves a coverage gain similar to that of random simulation with far less simulation effort. Moreover, the performance of the framework is not limited by the number of coverage events being hit. A commercial signal processing unit is used in the experiment to demonstrate the effectiveness of the framework. Compared to random simulation, the framework reduces the simulation time needed to reach the 99% coverage level by up to 53.74%.
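To make the idea of novelty-based selection concrete, here is a minimal sketch. It is not the paper's implementation: the abstract does not specify the network architecture or how novelty is scored. The sketch assumes that each test is encoded as a numeric feature vector, that a small autoencoder is trained on tests that have already been simulated, and that reconstruction error serves as the novelty score. The names `train_autoencoder`, `novelty_scores`, and `select_tests`, as well as the synthetic data, are hypothetical.

```python
import numpy as np

def train_autoencoder(X, hidden=2, epochs=300, lr=0.05, seed=0):
    """Fit a tiny autoencoder (tanh encoder, linear decoder) with plain
    gradient descent on already-simulated tests X of shape (n, d)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0.0, 0.1, (d, hidden)); b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.1, (hidden, d)); b2 = np.zeros(d)
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)           # encode
        R = H @ W2 + b2                    # decode (reconstruction)
        G = 2.0 * (R - X) / n              # d(loss)/dR for squared error averaged over tests
        GH = (G @ W2.T) * (1.0 - H ** 2)   # backpropagate through tanh
        W2 -= lr * (H.T @ G); b2 -= lr * G.sum(axis=0)
        W1 -= lr * (X.T @ GH); b1 -= lr * GH.sum(axis=0)
    return W1, b1, W2, b2

def novelty_scores(params, X):
    """Assumed novelty measure: mean squared reconstruction error per test."""
    W1, b1, W2, b2 = params
    R = np.tanh(X @ W1 + b1) @ W2 + b2
    return ((R - X) ** 2).mean(axis=1)

def select_tests(params, candidates, k):
    """Return the indices of the k candidates with the highest novelty score."""
    scores = novelty_scores(params, candidates)
    return np.argsort(scores)[::-1][:k]

# Synthetic stand-in data (hypothetical): simulated tests lie near a 2-D
# subspace of a 6-D feature space; some candidates fall outside it.
rng = np.random.default_rng(1)
A = rng.normal(size=(2, 6))
simulated = 0.5 * rng.normal(size=(200, 2)) @ A
candidates = np.vstack([
    0.5 * rng.normal(size=(20, 2)) @ A,   # similar to simulated tests
    2.0 * rng.normal(size=(5, 6)),        # dissimilar tests
])
params = train_autoencoder(simulated)
selected = select_tests(params, candidates, k=5)
print("selected candidate indices:", selected.tolist())
```

The score needs no coverage labels, so it does not depend on positive examples for unhit coverage events. Only the selected candidates would then be passed to the simulator, and the model could be retrained as new tests are simulated.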
