Luca Lach

Bio-Inspired Grasping Controller for Sensorized 2-DoF Grippers

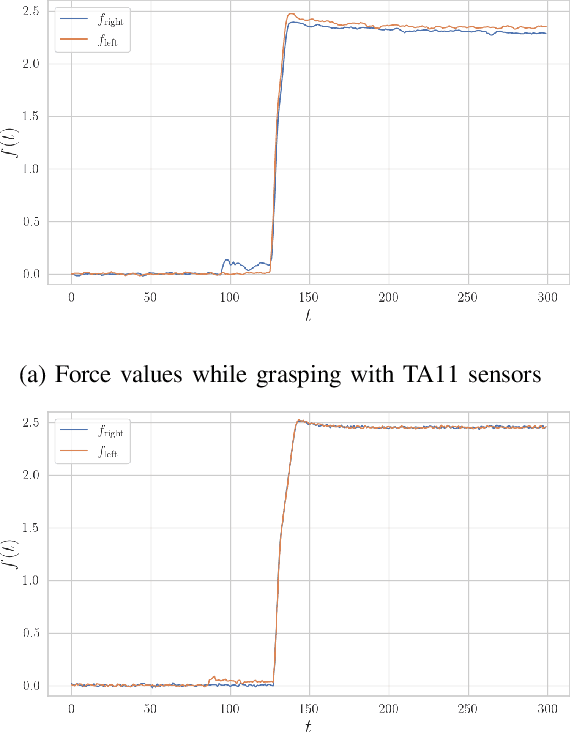

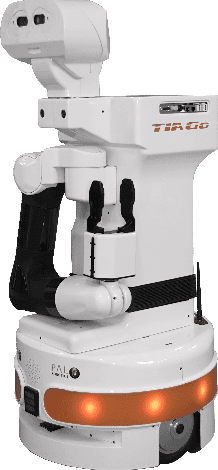

Nov 13, 2023

Abstract: We present a holistic grasping controller, combining free-space position control and in-contact force-control for reliable grasping given uncertain object pose estimates. Employing tactile fingertip sensors, undesired object displacement during grasping is minimized by pausing the finger closing motion for individual joints on first contact until force-closure is established. While holding an object, the controller is compliant with external forces to avoid high internal object forces and prevent object damage. Gravity as an external force is explicitly considered and compensated for, thus preventing gravity-induced object drift. We evaluate the controller in two experiments on the TIAGo robot and its parallel-jaw gripper proving the effectiveness of the approach for robust grasping and minimizing object displacement. In a series of ablation studies, we demonstrate the utility of the individual controller components.
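The contact-gated closing behavior described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the `Finger` class, the `closing_step` function, the force threshold, and the step size are all hypothetical, and "every finger reports contact" is used here only as a simple stand-in for the force-closure condition.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finger:
    """Hypothetical state of one gripper joint (not taken from the paper)."""
    position: float           # joint position in metres; smaller means more closed
    force: float = 0.0        # latest tactile fingertip reading in newtons
    in_contact: bool = False  # latched once first contact is detected


def closing_step(fingers: List[Finger],
                 force_threshold: float = 0.1,
                 step: float = 0.001) -> bool:
    """Run one control tick of the closing phase.

    A finger that has touched the object is paused, while the others keep
    closing. Returns True once every finger is in contact, which this sketch
    treats as a proxy for force closure (an assumption).
    """
    for finger in fingers:
        if finger.force >= force_threshold:
            finger.in_contact = True
        if not finger.in_contact:
            finger.position -= step  # keep closing in free space
    return all(f.in_contact for f in fingers)
```

Pausing each joint independently means the finger that reaches the object first does not push it toward the other finger, which is the displacement the abstract aims to minimize.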

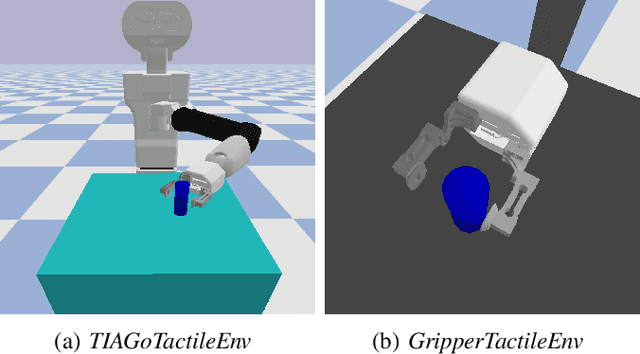

TIAGo RL: Simulated Reinforcement Learning Environments with Tactile Data for Mobile Robots

Nov 13, 2023

Abstract: Tactile information is important for robust performance in robotic tasks that involve physical interaction, such as object manipulation. However, with more data included in the reasoning and control process, modeling behavior becomes increasingly difficult. Deep Reinforcement Learning (DRL) has produced promising results for learning complex behavior in various domains, including tactile-based manipulation in robotics. In this work, we present our open-source reinforcement learning environments for the TIAGo service robot. They produce tactile sensor measurements that resemble those of a real sensorised gripper for TIAGo, encouraging research in transfer learning of DRL policies. Lastly, we show preliminary training results of a learned force control policy and compare it to a classical PI controller.
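For readers unfamiliar with the classical baseline mentioned above, a generic discrete-time PI force controller might look like the following sketch. The class name, gains, and time step are illustrative assumptions; the abstract does not specify how the authors' PI controller is parameterized or what its output commands.

```python
class PIForceController:
    """Generic discrete PI controller on grasp force (illustrative only)."""

    def __init__(self, kp: float, ki: float, dt: float) -> None:
        self.kp = kp          # proportional gain (assumed value, not from the paper)
        self.ki = ki          # integral gain (assumed value, not from the paper)
        self.dt = dt          # control period in seconds
        self.integral = 0.0   # accumulated force error

    def update(self, target_force: float, measured_force: float) -> float:
        """Return a control command from the current force error."""
        error = target_force - measured_force
        self.integral += error * self.dt
        return self.kp * error + self.ki * self.integral


# Example: one tick with a 1 N target and no measured force yet.
controller = PIForceController(kp=2.0, ki=0.5, dt=0.1)
command = controller.update(target_force=1.0, measured_force=0.0)
```

The integral term removes steady-state force error that a purely proportional controller would leave behind, which is why PI is a common hand-tuned baseline for learned force control policies.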

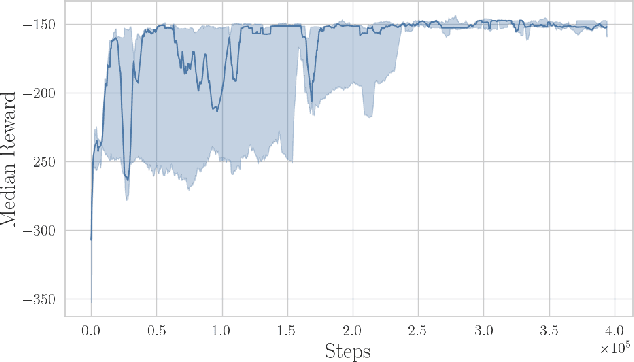

Towards Transferring Tactile-based Continuous Force Control Policies from Simulation to Robot

Nov 13, 2023

Abstract: The advent of tactile sensors in robotics has sparked many ideas on how robots can leverage direct contact measurements of their environment interactions to improve manipulation tasks. An important line of research in this regard is that of grasp force control, which aims to manipulate objects safely by limiting the amount of force exerted on the object. While prior works have either hand-modeled their force controllers, employed model-based approaches, or have not shown sim-to-real transfer, we propose a model-free deep reinforcement learning approach trained in simulation and then transferred to the robot without further fine-tuning. We therefore present a simulation environment that produces realistic normal forces, which we use to train continuous force control policies. An evaluation in which we compare against a baseline and perform an ablation study shows that our approach outperforms the hand-modeled baseline and that our proposed inductive bias and domain randomization facilitate sim-to-real transfer. Code, models, and supplementary videos are available on https://sites.google.com/view/rl-force-ctrl

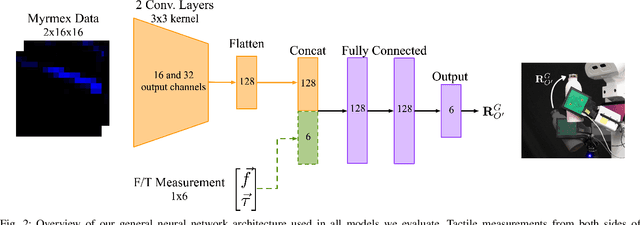

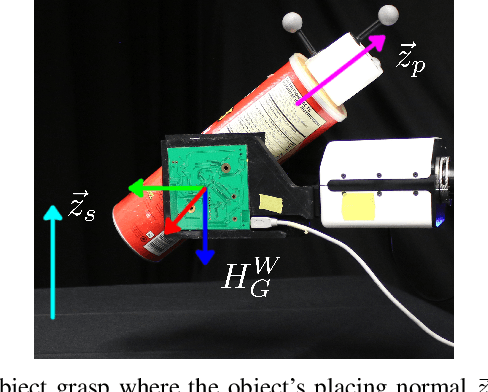

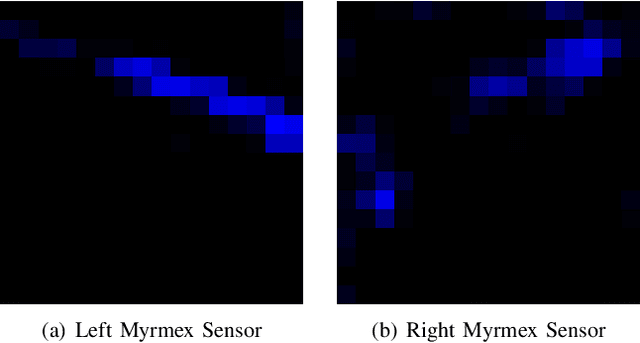

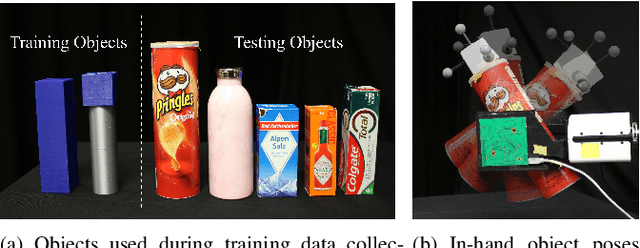

Placing by Touching: An empirical study on the importance of tactile sensing for precise object placing

Oct 05, 2022

Abstract: Tactile sensors are promising tools for endowing robots with embodied intelligence and increased dexterity. These sensors can provide robotic systems with direct information about physical interactions with the world, which is difficult to obtain from extrinsic perception systems. This work deals with a practical everyday living problem: stable object placement on flat surfaces starting from unknown initial poses. Common approaches for object placing either require complete scene specifications or indirect sensor measurements, such as cameras, which are prone to suffer from occlusions. Instead, this work proposes a novel approach for stable object placing that combines tactile feedback and proprioceptive sensing. We devise a neural architecture that estimates a rotation matrix which results in a corrective gripper movement that aligns the object with the table and paves the way for the subsequent stable object placement. We compare models with different sensing modalities, such as force-torque and an external motion capture system, in real-world object placement tasks with different objects. Our experimental evaluation of the placing policies with a set of unknown everyday objects reveals an impressive generalization of the tactile-based pipeline and suggests that tactile sensing plays a vital role in the intrinsic understanding of dexterous object manipulation. Videos of our approach are available at https://sites.google.com/view/placing-by-touching.

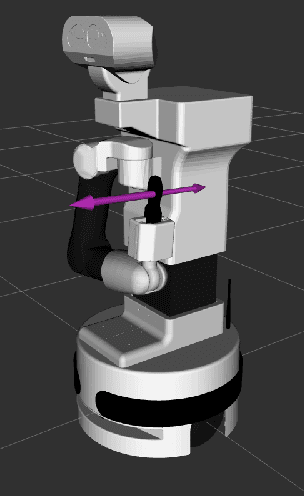

Leveraging Touch Sensors to Improve Mobile Manipulation

Oct 21, 2020

Abstract: Despite many advances in service robotics, successful and secure object manipulation on mobile platforms is still a challenge. In order to come closer to human grasping performance, it is natural to provide robots with the same capability that humans have: the sense of touch. This abstract presents novel, tactile-equipped end-effectors for the service robot TIAGo that are currently being developed. Their primary goal is to improve reliability and success of mobile manipulation, but they also enable further research in related fields such as learning by human demonstration, object exploration and force control algorithms.
