Júlia Borràs

Ontological foundations for contrastive explanatory narration of robot plans

Sep 26, 2025

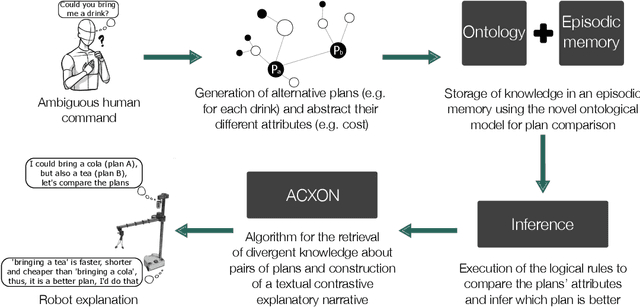

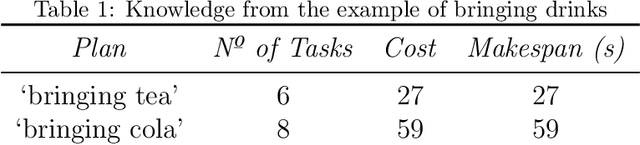

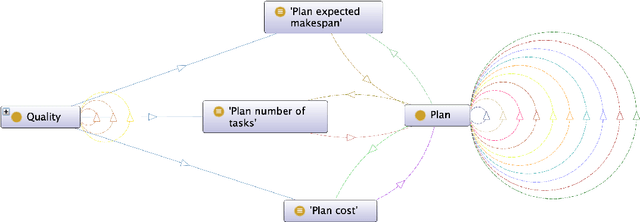

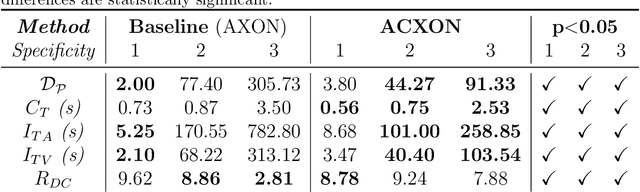

Abstract: Mutual understanding of artificial agents' decisions is key to ensuring a trustworthy and successful human-robot interaction. Hence, robots are expected to make reasonable decisions and communicate them to humans when needed. This article focuses on an approach to modeling and reasoning about the comparison of two competing plans, so that robots can later explain the divergent result. First, a novel ontological model is proposed to formalize and reason about the differences between competing plans, enabling the classification of the most appropriate one (e.g., the shortest, the safest, or the closest to human preferences). This work also investigates the limitations of a baseline algorithm for ontology-based explanatory narration. To address these limitations, a novel algorithm is presented that leverages divergent knowledge between plans and facilitates the construction of contrastive narratives. An empirical evaluation shows that the resulting explanations outperform those of the baseline method.

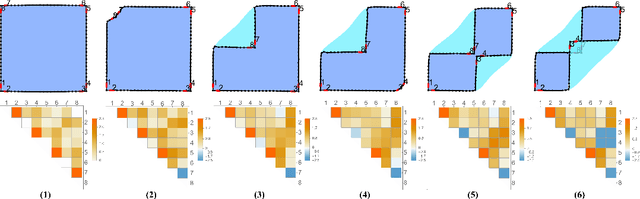

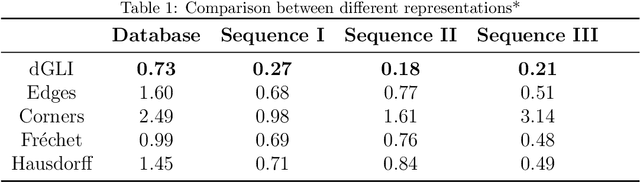

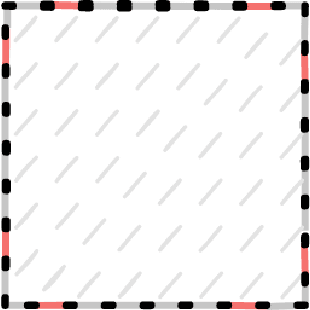

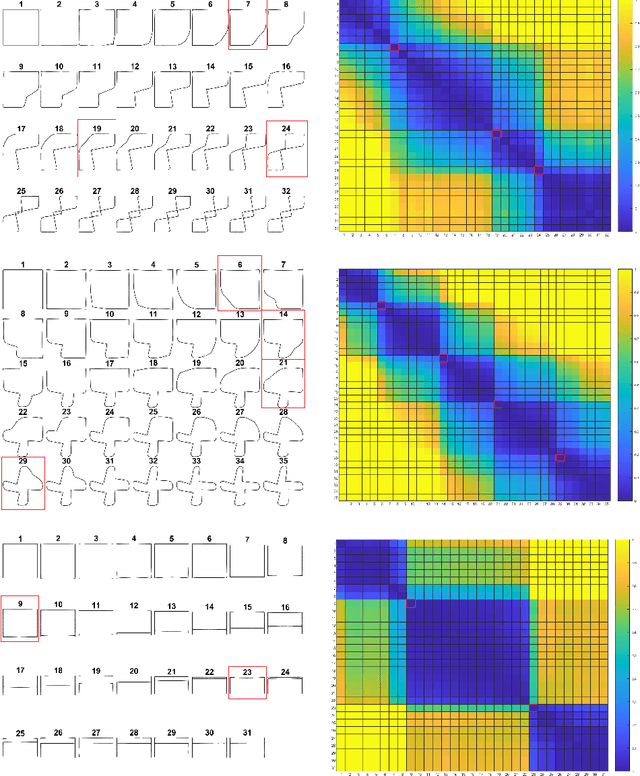

CloSE: A Compact Shape- and Orientation-Agnostic Cloth State Representation

Apr 07, 2025

Abstract: Cloth manipulation is a difficult problem mainly because of the non-rigid nature of cloth, which makes a good representation of deformation essential. We present a new representation for the deformation state of cloth. First, we propose the dGLI disk representation, based on topological indices computed for segments on the edges of the cloth mesh border that are arranged on a circular grid. The heat map of the dGLI disk uncovers patterns that correspond to features of the cloth state, such as the corners and the fold locations, that are consistent across different shapes, sizes, or positions of the cloth. We then abstract these important features from the dGLI disk onto a circle, calling it the Cloth StatE representation (CloSE). This representation is compact, continuous, and general for different shapes. Finally, we show the strengths of this representation in two relevant applications: semantic labeling and high- and low-level planning. The code, the dataset, and the video can be accessed at: https://jaykamat99.github.io/close-representation

Unfolding the Literature: A Review of Robotic Cloth Manipulation

Jul 01, 2024

Abstract: The realm of textiles spans clothing, households, healthcare, sports, and industrial applications. The deformable nature of these objects poses unique challenges that prior work on rigid objects cannot fully address. The increasing interest within the community in textile perception and manipulation has led to new methods that aim to address challenges in modeling, perception, and control, resulting in significant progress. However, this progress is often tailored to one specific textile or a subcategory of these textiles. To understand what restricts these methods and hinders current approaches from generalizing to a broader range of real-world textiles, this review provides an overview of the field, focusing specifically on how and to what extent textile variations are addressed in modeling, perception, benchmarking, and manipulation of textiles. We finally conclude by identifying key open problems and outlining grand challenges that will drive future advancements in the field.

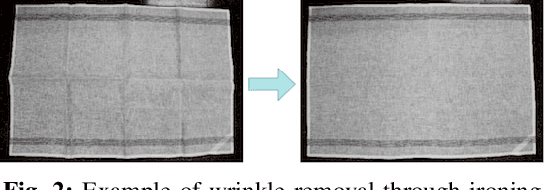

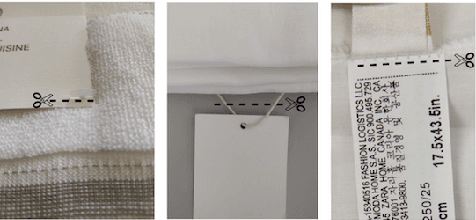

Standardization of Cloth Objects and its Relevance in Robotic Manipulation

Mar 07, 2024

Abstract: The field of robotics faces inherent challenges in manipulating deformable objects, particularly in understanding and standardising fabric properties like elasticity, stiffness, and friction. While the significance of these properties is evident in the realm of cloth manipulation, accurately categorising and comprehending them in real-world applications remains elusive. This study sets out to address two primary objectives: (1) to provide a framework suitable for robotics applications to characterise cloth objects, and (2) to study how these properties influence robotic manipulation tasks. Our preliminary results validate the framework's ability to characterise cloth properties and compare cloth sets, and reveal the influence that different properties have on the outcome of five manipulation primitives. We believe that, in general, results on the manipulation of clothes should be reported along with a better description of the garments used in the evaluation. This paper proposes a set of these measures.

* 2024 ICRA International Conference on Robotics and Automation (ICRA)

Towards Transferring Tactile-based Continuous Force Control Policies from Simulation to Robot

Nov 13, 2023

Abstract: The advent of tactile sensors in robotics has sparked many ideas on how robots can leverage direct contact measurements of their environment interactions to improve manipulation tasks. An important line of research in this regard is grasp force control, which aims to manipulate objects safely by limiting the amount of force exerted on the object. While prior works have either hand-modeled their force controllers, employed model-based approaches, or have not shown sim-to-real transfer, we propose a model-free deep reinforcement learning approach trained in simulation and then transferred to the robot without further fine-tuning. To this end, we present a simulation environment that produces realistic normal forces, which we use to train continuous force control policies. An evaluation in which we compare against a baseline and perform an ablation study shows that our approach outperforms the hand-modeled baseline and that our proposed inductive bias and domain randomization facilitate sim-to-real transfer. Code, models, and supplementary videos are available at https://sites.google.com/view/rl-force-ctrl

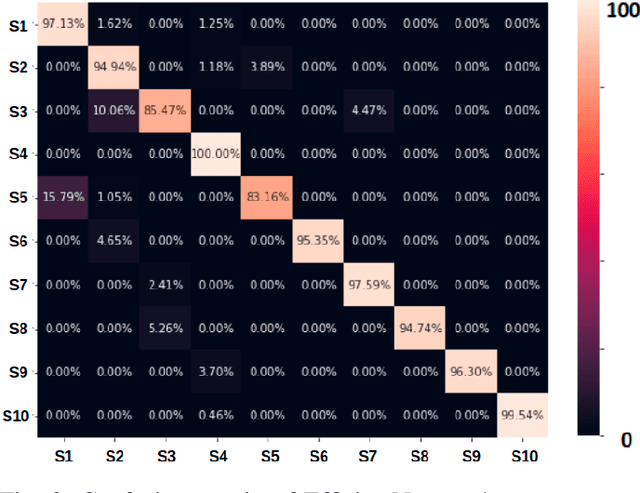

The dGLI Cloth Coordinates: A Topological Representation for Semantic Classification of Cloth States

Sep 14, 2022

Abstract: Robotic manipulation of cloth is a highly complex task because its infinite-dimensional shape-state space makes cloth state estimation very difficult. In this paper we introduce the dGLI Cloth Coordinates, a low-dimensional representation of the state of a rectangular piece of cloth that makes it possible to efficiently distinguish key topological changes in a folding sequence, opening the door to efficient learning methods for cloth manipulation planning and control. Our representation is based on a directional derivative of the Gauss Linking Integral and allows us to represent both planar and spatial configurations in a consistent, unified way. The proposed dGLI Cloth Coordinates are shown to be more accurate in the classification of cloth states and significantly more sensitive to changes in grasping affordances than other classic shape distance methods. Finally, we apply our representation to real images of a cloth, showing that we can identify the different states using a simple distance-based classifier.
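To give a sense of what a simple distance-based classifier over a low-dimensional state representation can look like, here is a minimal nearest-prototype sketch. It is not the paper's implementation: the three-component vectors, the state labels, and the `classify` helper are hypothetical placeholders, and the real dGLI Cloth Coordinates are computed from the cloth border rather than written by hand.

```python
import math

# Hypothetical low-dimensional coordinates for a few labelled cloth states.
# These numbers are illustrative only; they are not dGLI values.
PROTOTYPES = {
    "flat": [0.0, 0.0, 0.0],
    "folded_half": [1.0, 0.2, 0.0],
    "folded_diagonal": [0.1, 1.0, 0.3],
}


def classify(coords, prototypes):
    """Return the label whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(coords, prototypes[label]))


# Classify an observed (hypothetical) coordinate vector.
label = classify([0.9, 0.1, 0.05], PROTOTYPES)
print(label)
```

The only design decision in such a classifier is the distance function, so its accuracy depends almost entirely on how well the representation separates the states.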

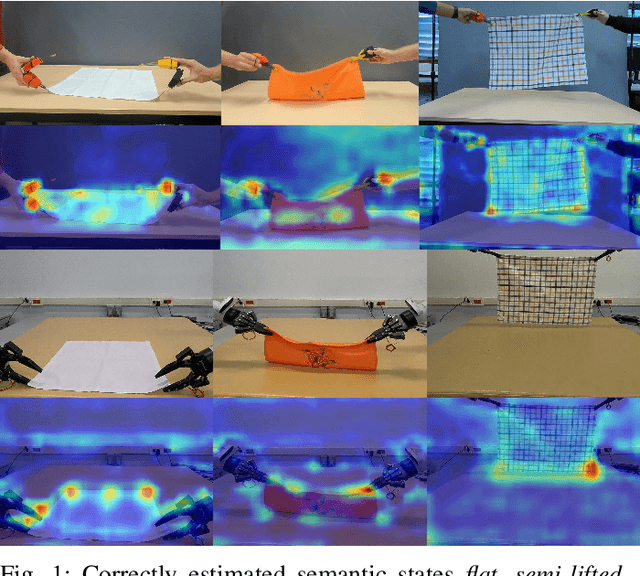

Semantic State Estimation in Cloth Manipulation Tasks

Mar 22, 2022

Abstract: Understanding manipulations of deformable objects such as textiles is challenging due to the complexity and high dimensionality of the problem. In particular, the lack of a generic representation of semantic states (e.g., *crumpled*, *diagonally folded*) during a continuous manipulation process makes it difficult to identify the manipulation type. In this paper, we aim to solve the problem of semantic state estimation in cloth manipulation tasks. For this purpose, we introduce a new large-scale, fully annotated RGB image dataset showing various human demonstrations of different complicated cloth manipulations. We provide a set of baseline deep networks and benchmark them on the problem of semantic state estimation using our proposed dataset. Furthermore, we investigate the scalability of our semantic state estimation framework in robot monitoring tasks of long and complex cloth manipulations.

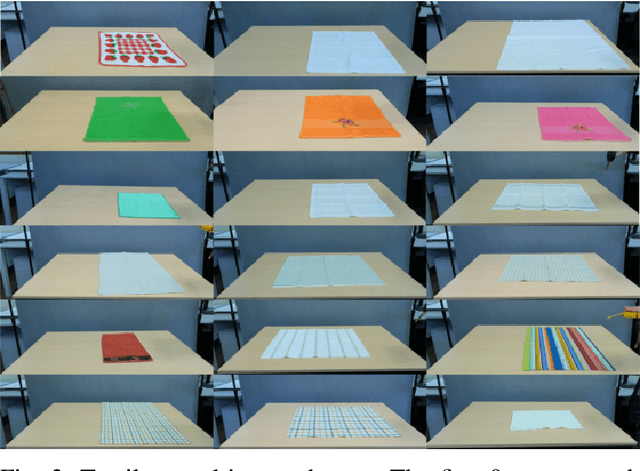

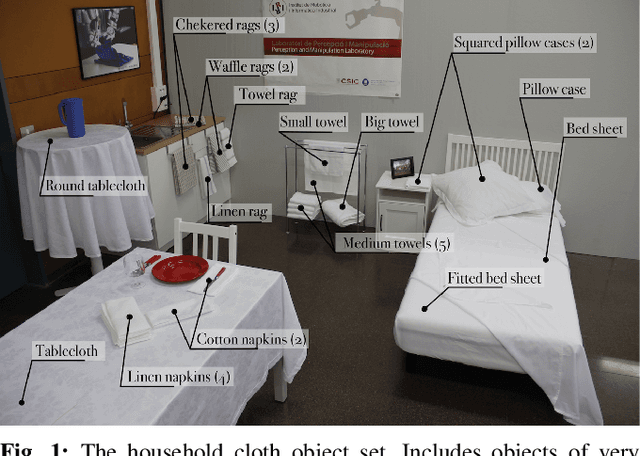

Household Cloth Object Set: Fostering Benchmarking in Deformable Object Manipulation

Nov 02, 2021

Abstract: Benchmarking of robotic manipulations is one of the open issues in robotic research. An important factor that has enabled progress in this area in the last decade is the existence of common object sets that have been shared among different research groups. However, the existing object sets are very limited when it comes to cloth-like objects, which have unique particularities and challenges. This paper is a first step towards the design of a cloth object set to be distributed among research groups from the robotic cloth manipulation community. We present a set of household cloth objects and related tasks that serve to expose the challenges related to gathering such an object set, and we propose a roadmap to the design of common benchmarks in cloth manipulation tasks, with the intention of setting the grounds for a future debate in the community that will be necessary to foster benchmarking for the manipulation of cloth-like objects. Some RGB-D and object scans are also collected as examples of the objects in relevant configurations. More details about the cloth set are available at http://www.iri.upc.edu/groups/perception/ClothObjectSet/HouseholdClothSet.html.

Task-Adaptive Robot Learning from Demonstration under Replication with Gaussian Process Models

Oct 15, 2020

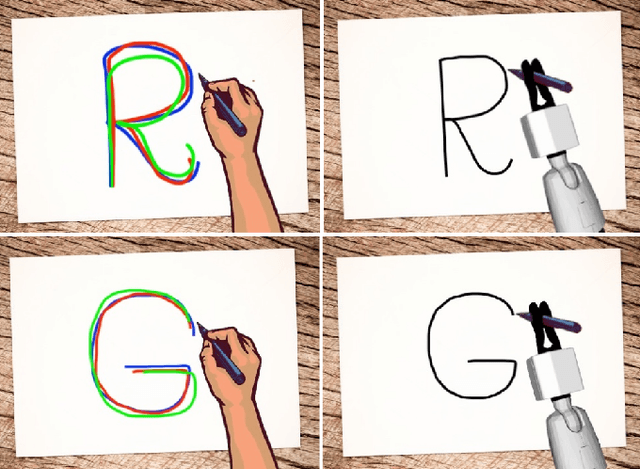

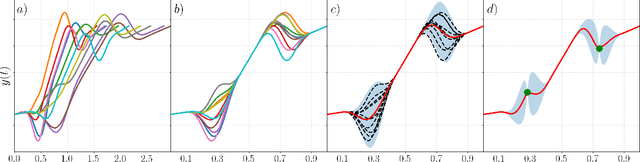

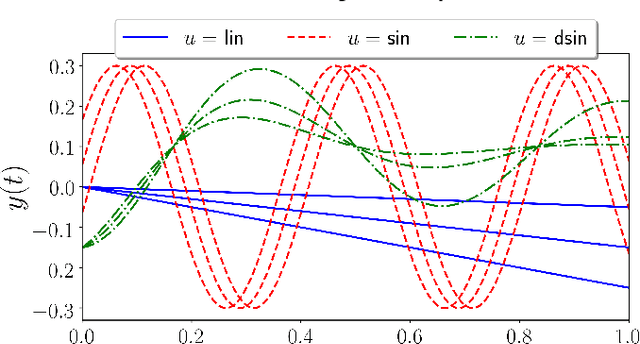

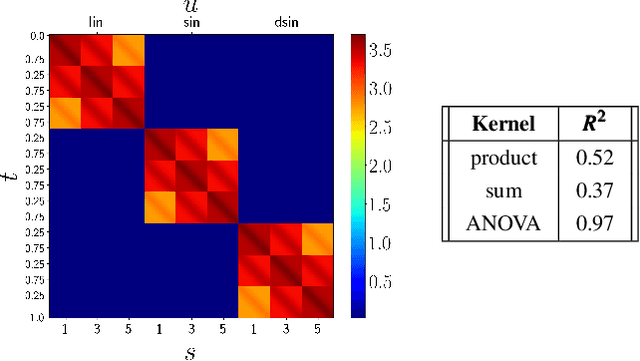

Abstract: Learning from Demonstration (LfD) is a paradigm that allows robots to learn complex manipulation tasks that cannot be easily scripted but can be demonstrated by a human teacher. One of the challenges of LfD is to enable robots to acquire skills that can be adapted to different scenarios. In this paper, we propose to achieve this by exploiting the variations in the demonstrations to retrieve an adaptive and robust policy, using Gaussian Process (GP) models. Adaptability is enhanced by incorporating task parameters into the model, which encode different specifications within the same task. With our formulation, these parameters can be real, integer, or categorical. Furthermore, we propose a GP design that exploits the structure of replications, i.e., repeated demonstrations under identical conditions within the data. Our method significantly reduces the computational cost of model fitting in complex tasks, where replications are essential to obtain a robust model. We illustrate our approach through several experiments on a handwritten letter demonstration dataset.

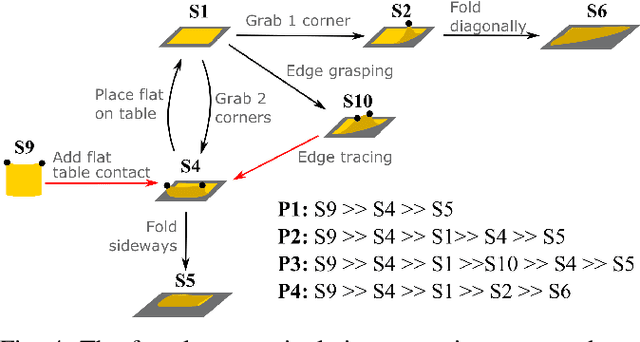

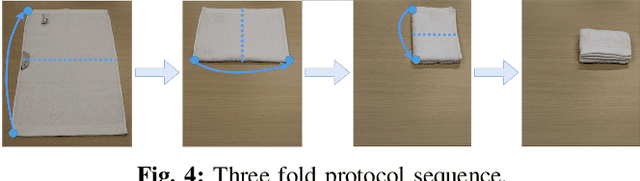

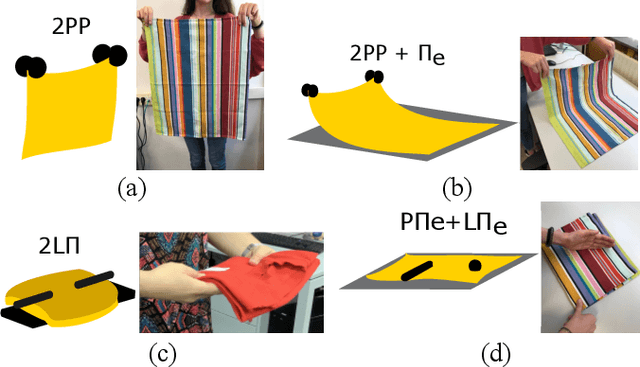

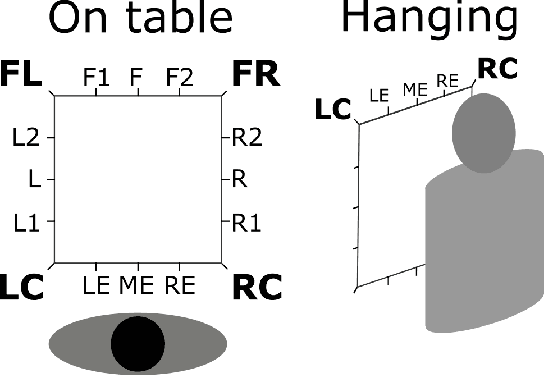

Encoding cloth manipulations using a graph of states and transitions

Sep 30, 2020

Abstract: Cloth manipulation is very relevant for domestic robotic tasks, but it presents many challenges due to the complexity of representing, recognizing, and predicting the behaviour of cloth under manipulation. In this work, we propose a generic, compact, and simplified representation of the states of cloth manipulation that allows tasks to be represented as sequences of states and transitions. We also define a graph of manipulation primitives that encodes all the strategies to accomplish a task. Our novel representation is used to encode the task of folding a napkin, learned from an experiment with human subjects with video and motion data. We show how our simplified representation allows us to obtain a map of meaningful motion primitives and to segment the motion data to obtain sets of trajectories and velocity and acceleration profiles corresponding to each manipulation primitive in the graph.
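The idea of a graph whose paths encode every strategy for a task can be sketched as a small directed graph with labelled edges. The sketch below is an illustration under stated assumptions, not the paper's encoding: the state names, the primitive names, and the `build_graph` and `strategies` helpers are all hypothetical.

```python
from collections import defaultdict

# Hypothetical (state, primitive, next_state) triples for a napkin-folding task.
# The names are placeholders and do not come from the paper.
TRANSITIONS = [
    ("flat", "pinch_two_corners", "held_by_two_corners"),
    ("held_by_two_corners", "fold_in_half", "folded_half"),
    ("flat", "slide_and_pinch_edge", "held_by_edge"),
    ("held_by_edge", "fold_in_half", "folded_half"),
    ("folded_half", "fold_in_half", "folded_quarter"),
]


def build_graph(transitions):
    """Map each state to the list of (primitive, next_state) edges leaving it."""
    graph = defaultdict(list)
    for source, primitive, target in transitions:
        graph[source].append((primitive, target))
    return graph


def strategies(graph, start, goal, visited=None):
    """Yield every cycle-free sequence of primitives leading from start to goal."""
    visited = (visited or frozenset()) | {start}
    if start == goal:
        yield []
        return
    for primitive, target in graph.get(start, []):
        if target not in visited:
            for rest in strategies(graph, target, goal, visited):
                yield [primitive] + rest


graph = build_graph(TRANSITIONS)
plans = list(strategies(graph, "flat", "folded_quarter"))
for plan in plans:
    print(" -> ".join(plan))
```

With this toy graph, the two ways of grasping the flat napkin yield two distinct strategies that share the final folds, which is the kind of structure a state-and-transition graph makes explicit.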
