Luis Sentis

PLATO Hand: Shaping Contact Behavior with Fingernails for Precise Manipulation

Feb 05, 2026

Abstract:We present the PLATO Hand, a dexterous robotic hand with a hybrid fingertip that embeds a rigid fingernail within a compliant pulp. This design shapes contact behavior to enable diverse interaction modes across a range of object geometries. We develop a strain-energy-based bending-indentation model to guide the fingertip design and to explain how guided contact preserves local indentation while suppressing global bending. Experimental results show that the proposed robotic hand design demonstrates improved pinching stability, enhanced force observability, and successful execution of edge-sensitive manipulation tasks, including paper singulation, card picking, and orange peeling. Together, these results show that coupling structured contact geometry with a force-motion transparent mechanism provides a principled, physically embodied approach to precise manipulation.

HARMONIC: A Content-Centric Cognitive Robotic Architecture

Sep 16, 2025

Abstract:This paper introduces HARMONIC, a cognitive-robotic architecture designed for robots in human-robotic teams. HARMONIC supports semantic perception interpretation, human-like decision-making, and intentional language communication. It addresses the issues of safety and quality of results; aims to solve problems of data scarcity, explainability, and safety; and promotes transparency and trust. Two proof-of-concept HARMONIC-based robotic systems are demonstrated, each implemented in both a high-fidelity simulation environment and on physical robotic platforms.

Task Hierarchical Control via Null-Space Projection and Path Integral Approach

Mar 28, 2025

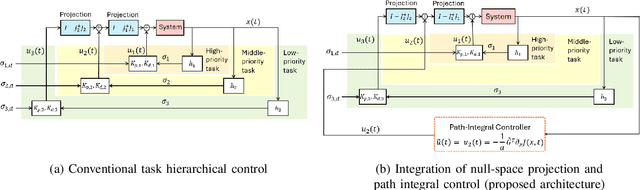

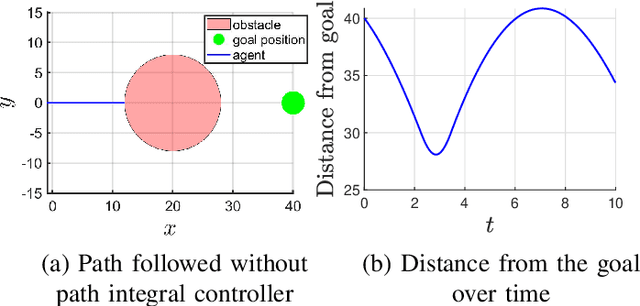

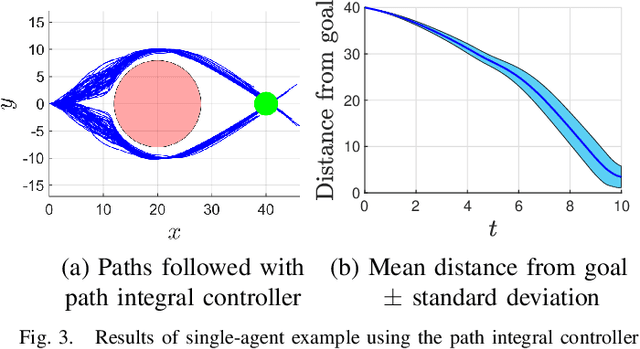

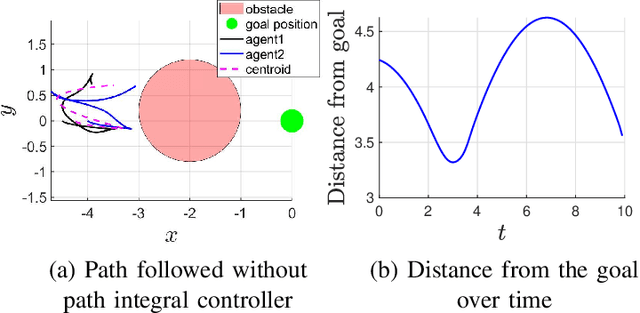

Abstract:This paper addresses the problem of hierarchical task control, where a robotic system must perform multiple subtasks with varying levels of priority. A commonly used approach for hierarchical control is the null-space projection technique, which ensures that higher-priority tasks are executed without interference from lower-priority ones. While effective, the state-of-the-art implementations of this method rely on low-level controllers, such as PID controllers, which can be prone to suboptimal solutions in complex tasks. This paper presents a novel framework for hierarchical task control, integrating the null-space projection technique with the path integral control method. Our approach leverages Monte Carlo simulations for real-time computation of optimal control inputs, allowing for the seamless integration of simpler PID-like controllers with a more sophisticated optimal control technique. Through simulation studies, we demonstrate the effectiveness of this combined approach, showing how it overcomes the limitations of traditional
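The null-space projection idea named in this abstract can be illustrated at the velocity level with a minimal sketch. This is the textbook two-task formulation, not the paper's combined path-integral controller; the function name `prioritized_velocities` and the 3-joint Jacobians are hypothetical and chosen only for illustration.

```python
import numpy as np

def prioritized_velocities(J1, dx1, J2, dx2):
    """Two-level task hierarchy via null-space projection (velocity level).

    J1, dx1: Jacobian and desired task velocity of the high-priority task.
    J2, dx2: Jacobian and desired task velocity of the low-priority task.
    """
    J1_pinv = np.linalg.pinv(J1)
    # Projector onto the null space of task 1: joint motions of the form
    # N1 @ v leave the task-1 velocity unchanged.
    N1 = np.eye(J1.shape[1]) - J1_pinv @ J1
    dq1 = J1_pinv @ dx1
    # Task 2 is solved only with the motion left over after task 1.
    dq2 = np.linalg.pinv(J2 @ N1) @ (dx2 - J2 @ dq1)
    return dq1 + N1 @ dq2

# Hypothetical 3-joint system (assumed values, not from the paper):
# task 1 controls the sum of joint velocities, task 2 the first joint.
J1 = np.array([[1.0, 1.0, 1.0]])
J2 = np.array([[1.0, 0.0, 0.0]])
dx1 = np.array([0.3])
dx2 = np.array([0.5])

dq = prioritized_velocities(J1, dx1, J2, dx2)
print("joint velocities:", dq)
print("task 1 achieved:", J1 @ dq, "task 2 achieved:", J2 @ dq)
```

In this example the two tasks are compatible, so both are met exactly. When they conflict, the projector `N1` ensures the lower-priority task cannot disturb the higher-priority one.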

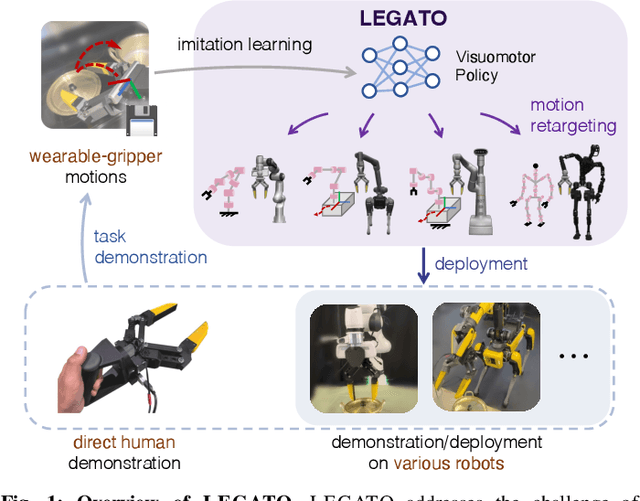

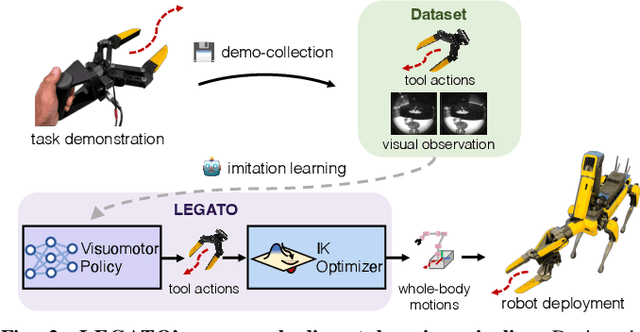

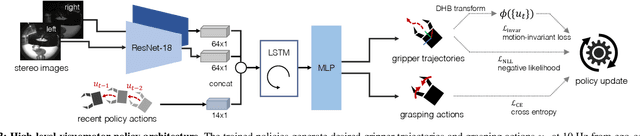

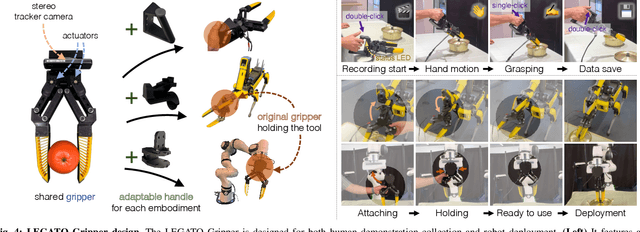

LEGATO: Cross-Embodiment Imitation Using a Grasping Tool

Nov 06, 2024

Abstract:Cross-embodiment imitation learning enables policies trained on specific embodiments to transfer across different robots, unlocking the potential for large-scale imitation learning that is both cost-effective and highly reusable. This paper presents LEGATO, a cross-embodiment imitation learning framework for visuomotor skill transfer across varied kinematic morphologies. We introduce a handheld gripper that unifies action and observation spaces, allowing tasks to be defined consistently across robots. Using this gripper, we train visuomotor policies via imitation learning, applying a motion-invariant transformation to compute the training loss. Gripper motions are then retargeted into high-degree-of-freedom whole-body motions using inverse kinematics for deployment across diverse embodiments. Our evaluations in simulation and real-robot experiments highlight the framework's effectiveness in learning and transferring visuomotor skills across various robots. More information can be found at the project page: https://ut-hcrl.github.io/LEGATO.

Guiding Collision-Free Humanoid Multi-Contact Locomotion using Convex Kinematic Relaxations and Dynamic Optimization

Oct 10, 2024

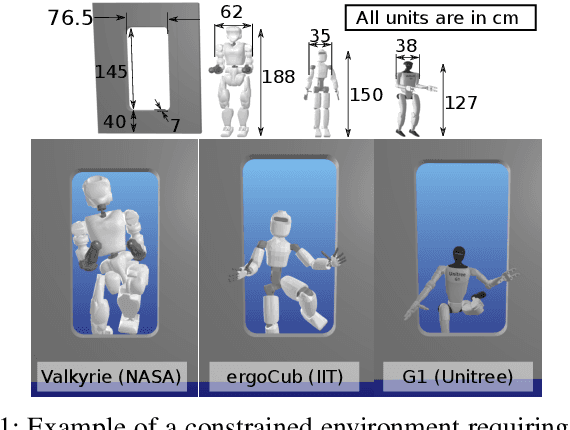

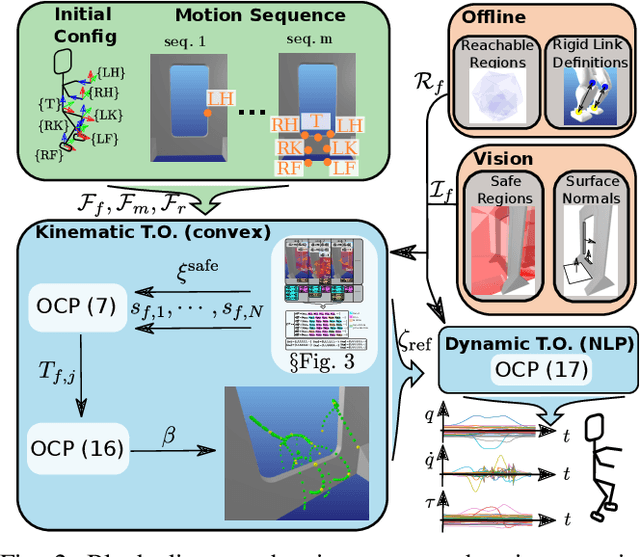

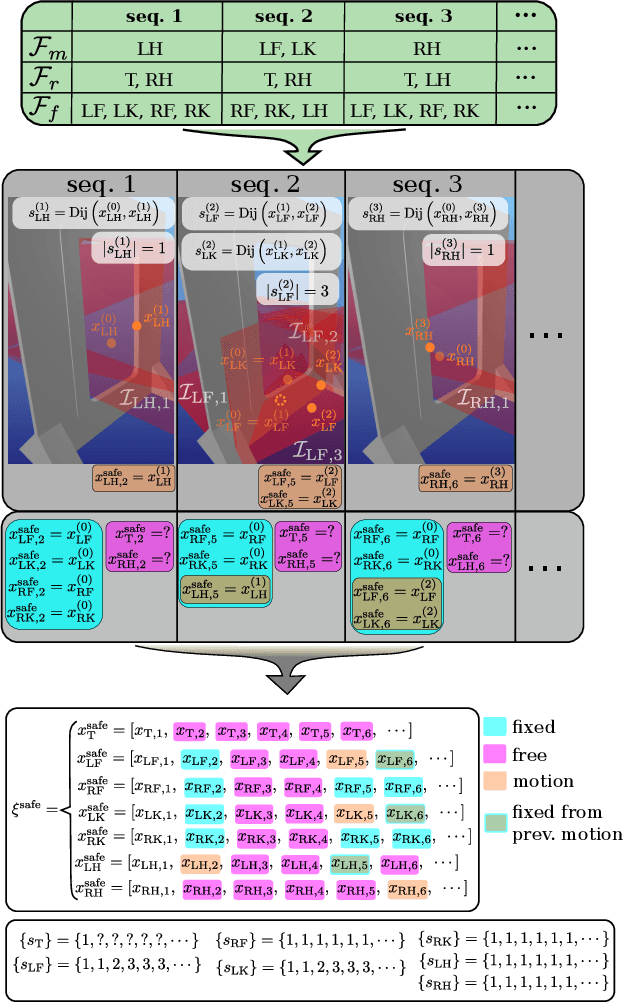

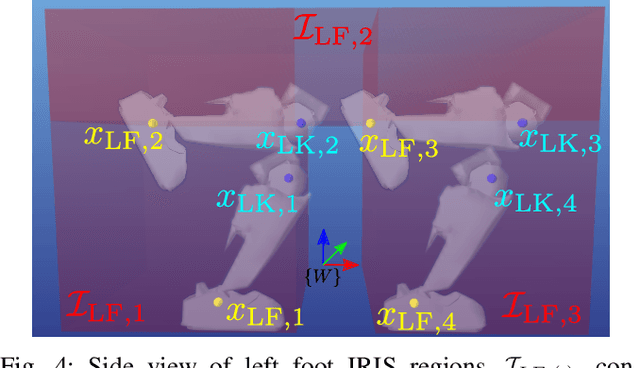

Abstract:Humanoid robots rely on multi-contact planners to navigate a diverse set of environments, including those that are unstructured and highly constrained. To synthesize stable multi-contact plans within a reasonable time frame, most planners assume statically stable motions or rely on reduced order models. However, these approaches can also render the problem infeasible in the presence of large obstacles or when operating near kinematic and dynamic limits. To that end, we propose a new multi-contact framework that leverages recent advancements in relaxing collision-free path planning into a convex optimization problem, extending it to be applicable to humanoid multi-contact navigation. Our approach generates near-feasible trajectories used as guides in a dynamic trajectory optimizer, altogether addressing the aforementioned limitations. We evaluate our computational approach showcasing three different-sized humanoid robots traversing a high-raised naval knee-knocker door using our proposed framework in simulation. Our approach can generate motion plans within a few seconds consisting of several multi-contact states, including dynamic feasibility in joint space.

Hardware-Accelerated Ray Tracing for Discrete and Continuous Collision Detection on GPUs

Sep 16, 2024

Abstract:This paper presents a set of simple and intuitive robot collision detection algorithms that show substantial scaling improvements for high geometric complexity and large numbers of collision queries by leveraging hardware-accelerated ray tracing on GPUs. It is the first leveraging hardware-accelerated ray-tracing for direct volume mesh-to-mesh discrete collision detection and applying it to continuous collision detection. We introduce two methods: Ray-Traced Discrete-Pose Collision Detection for exact robot mesh to obstacle mesh collision detection, and Ray-Traced Continuous Collision Detection for robot sphere representation to obstacle mesh swept collision detection, using piecewise-linear or quadratic B-splines. For robot link meshes totaling 24k triangles and obstacle meshes of over 190k triangles, our methods were up to 3 times faster in batched discrete-pose queries than a state-of-the-art GPU-based method using a sphere robot representation. For the same obstacle mesh scene, our sphere-robot continuous collision detection was up to 9 times faster depending on trajectory batch size. We also performed a detailed measurement of the volume coverage accuracy of various sphere/mesh pose/path representations to provide insight into the tradeoffs between speed and accuracy of different robot collision detection methods.

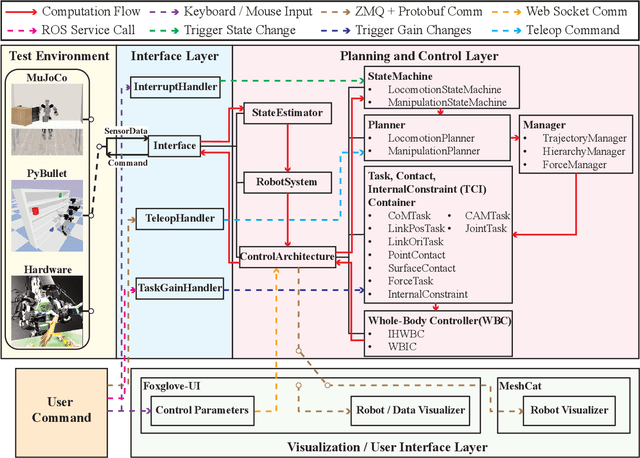

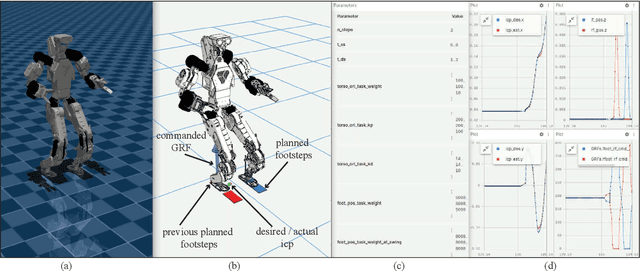

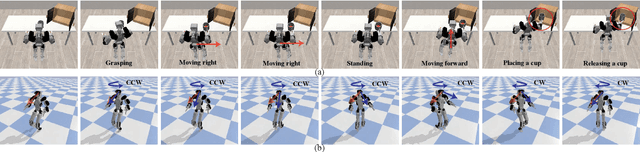

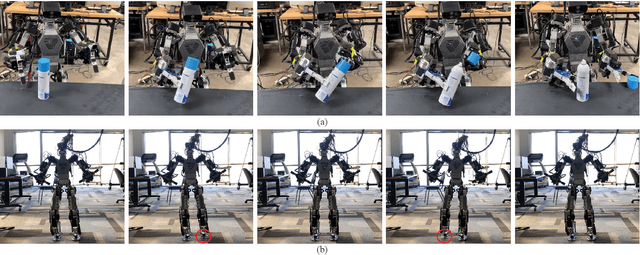

RPC: A Modular Framework for Robot Planning, Control, and Deployment

Sep 16, 2024

Abstract:This paper presents an open-source, lightweight, yet comprehensive software framework, named RPC, which integrates physics-based simulators, planning and control libraries, debugging tools, and a user-friendly operator interface. RPC enables users to thoroughly evaluate and develop control algorithms for robotic systems. While existing software frameworks provide some of these capabilities, integrating them into a cohesive system can be challenging and cumbersome. To overcome this challenge, we have modularized each component in RPC to ensure easy and seamless integration or replacement with new modules. Additionally, our framework currently supports a variety of model-based planning and control algorithms for robotic manipulators and legged robots, alongside essential debugging tools, making it easier for users to design and execute complex robotics tasks. The code and usage instructions of RPC are available at https://github.com/shbang91/rpc.

Grasp Failure Constraints for Fast and Reliable Pick-and-Place Using Multi-Suction-Cup Grippers

Aug 07, 2024

Abstract:Multi-suction-cup grippers are frequently employed to perform pick-and-place robotic tasks, especially in industrial settings where grasping a wide range of light to heavy objects in limited amounts of time is a common requirement. However, most existing works focus on using one or two suction cups to grasp only irregularly shaped but light objects. There is a lack of research on robust manipulation of heavy objects using larger arrays of suction cups, which introduces challenges in modeling and predicting grasp failure. This paper presents a general approach to modeling grasp strength in multi-suction-cup grippers, introducing new constraints usable for trajectory planning and optimization to achieve fast and reliable pick-and-place maneuvers. The primary modeling challenge is the accurate prediction of the distribution of loads at each suction cup while grasping objects. To solve for this load distribution, we find minimum spring potential energy configurations through a simple quadratic program. This results in a computationally efficient analytical solution that can be integrated to formulate grasp failure constraints in time-optimal trajectory planning. Finally, we present experimental results to validate the efficiency and accuracy of the proposed model.
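To make the idea of a minimum spring potential energy load distribution concrete, here is a minimal sketch under strong assumptions that are not taken from the paper: each cup is a linear spring acting along the gripper normal, the cups sit on a flat plate, and only the normal force and the two in-plane moments are balanced. The function name `cup_loads`, the cup layout, and all numeric values are hypothetical.

```python
import numpy as np

def cup_loads(positions, stiffness, force, moment_x, moment_y):
    """Normal load at each cup minimizing spring energy sum(f_i^2 / (2 k_i)),
    subject to balancing a net normal force and two in-plane moments.
    """
    x, y = positions[:, 0], positions[:, 1]
    # A normal force f at (x, y) produces moments (y * f, -x * f) about the origin.
    A = np.vstack([np.ones_like(x), y, -x])
    w = np.array([force, moment_x, moment_y])
    K = np.diag(stiffness)
    # Closed-form KKT solution of the equality-constrained quadratic program:
    #   f = K A^T (A K A^T)^{-1} w
    multipliers = np.linalg.solve(A @ K @ A.T, w)
    return K @ A.T @ multipliers

# Hypothetical 2x2 cup array on a 10 cm square, identical stiffness (N/m).
positions = np.array([[0.05, 0.05], [0.05, -0.05], [-0.05, 0.05], [-0.05, -0.05]])
stiffness = np.full(4, 1000.0)

# A 20 N load through the array center is shared equally.
centered = cup_loads(positions, stiffness, 20.0, 0.0, 0.0)
# The same load shifted 2 cm along +x needs moment_y = -(0.02 m * 20 N) = -0.4 N*m.
offset = cup_loads(positions, stiffness, 20.0, 0.0, -0.4)
print("centered load:", centered)
print("offset load:  ", offset)
```

A grasp failure check could then compare each entry against a per-cup holding limit. That limit would depend on vacuum level and cup geometry and is left out of this sketch.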

RL-augmented MPC Framework for Agile and Robust Bipedal Footstep Locomotion Planning and Control

Jul 25, 2024

Abstract:This paper proposes an online bipedal footstep planning strategy that combines model predictive control (MPC) and reinforcement learning (RL) to achieve agile and robust bipedal maneuvers. While MPC-based foot placement controllers have demonstrated their effectiveness in achieving dynamic locomotion, their performance is often limited by the use of simplified models and assumptions. To address this challenge, we develop a novel foot placement controller that leverages a learned policy to bridge the gap between the use of a simplified model and the more complex full-order robot system. Specifically, our approach employs a unique combination of an ALIP-based MPC foot placement controller for sub-optimal footstep planning and the learned policy for refining footstep adjustments, enabling the resulting footstep policy to capture the robot's whole-body dynamics effectively. This integration synergizes the predictive capability of MPC with the flexibility and adaptability of RL. We validate the effectiveness of our framework through a series of experiments using the full-body humanoid robot DRACO 3. The results demonstrate significant improvements in dynamic locomotion performance, including better tracking of a wide range of walking speeds, enabling reliable turning and traversing challenging terrains while preserving the robustness and stability of the walking gaits compared to the baseline ALIP-based MPC approach.
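The structure described here, a model-based nominal footstep plus a learned correction, can be sketched with a planar angular-momentum linear inverted pendulum (ALIP). The sketch is illustrative only. It uses a one-step closed-form foot placement rather than an MPC, assumes constant CoM height and angular momentum that is continuous across touchdown, and stands in a zero `residual` for the learned policy output. The robot parameters are hypothetical and are not DRACO 3 values.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def alip_propagate(x, L, m, H, t):
    """Closed-form solution of the planar ALIP model
        x_dot = L / (m * H),   L_dot = m * G * x,
    where x is the CoM offset from the stance foot and L is the angular
    momentum about the contact point.
    """
    w = math.sqrt(G / H)
    c, s = math.cosh(w * t), math.sinh(w * t)
    return c * x + s * L / (m * H * w), m * H * w * s * x + c * L

def nominal_step(x_end, L_end, L_des, m, H, T):
    """Step length u such that L equals L_des at the end of the next step.

    Assumes the CoM offset becomes x_end - u after touchdown and that L is
    unchanged by the foot switch.
    """
    w = math.sqrt(G / H)
    c, s = math.cosh(w * T), math.sinh(w * T)
    return x_end - (L_des - c * L_end) / (m * H * w * s)

# Hypothetical parameters: mass (kg), CoM height (m), step duration (s).
m, H, T = 40.0, 0.7, 0.3
x_end, L_end = alip_propagate(-0.05, 12.0, m, H, T)  # state at end of current step
L_des = 14.0                                         # desired momentum after next step

u_nominal = nominal_step(x_end, L_end, L_des, m, H, T)
residual = 0.0  # placeholder for a learned footstep adjustment
u_refined = u_nominal + residual

x_next, L_next = alip_propagate(x_end - u_refined, L_end, m, H, T)
print("step length:", u_refined, "momentum after next step:", L_next)
```

With a zero residual the reduced-order model reaches `L_des` exactly. A nonzero residual is what would let the step compensate for full-body effects the reduced model omits.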

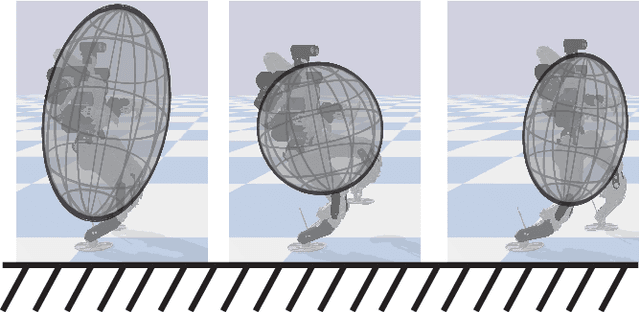

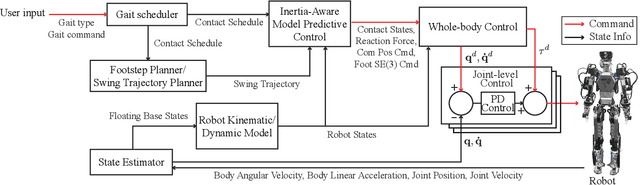

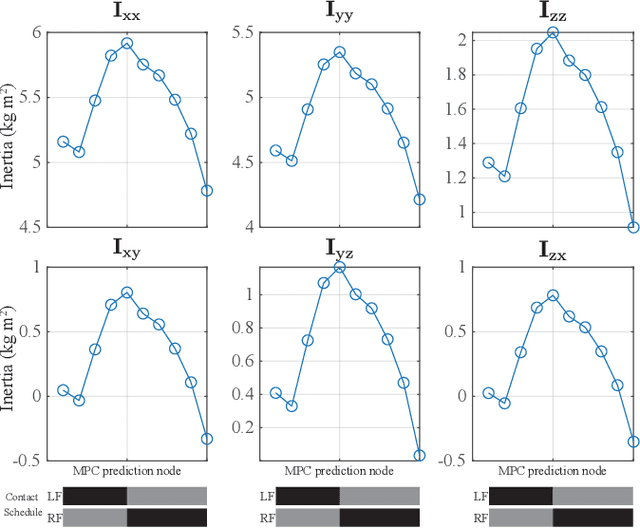

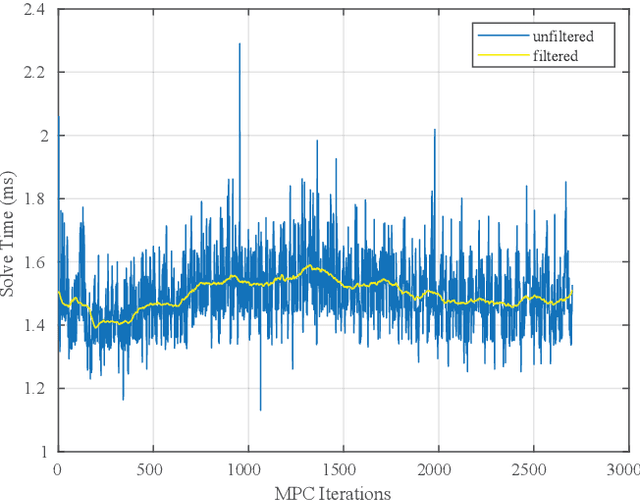

Variable Inertia Model Predictive Control for Fast Bipedal Maneuvers

Jul 23, 2024

Abstract:This paper proposes a novel control framework for agile and robust bipedal locomotion, addressing model discrepancies between full-body and reduced-order models. Specifically, assumptions such as constant centroidal inertia have introduced significant challenges and limitations in locomotion tasks. To enhance the agility and versatility of full-body humanoid robots, we formalize a Model Predictive Control (MPC) problem that accounts for the variable centroidal inertia of humanoid robots within a convex optimization framework, ensuring computational efficiency for real-time operations. In this formulation, we incorporate a centroidal inertia network designed to predict the variable centroidal inertia over the MPC horizon, taking into account the swing foot trajectories, an aspect often overlooked in ROM-based MPC frameworks. Moreover, we enhance the performance and stability of locomotion behaviors by synergizing the MPC-based approach with whole-body control (WBC). The effectiveness of our proposed framework is validated through simulations using our full-body humanoid robot, DRACO 3, demonstrating dynamic behaviors.
