Shenli Yuan

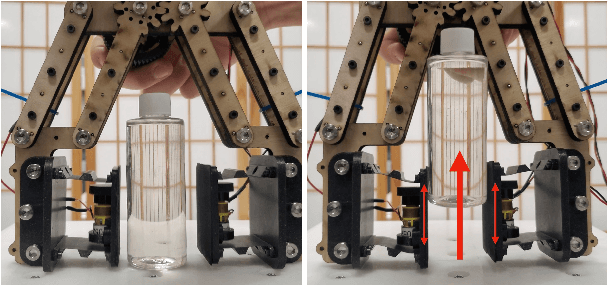

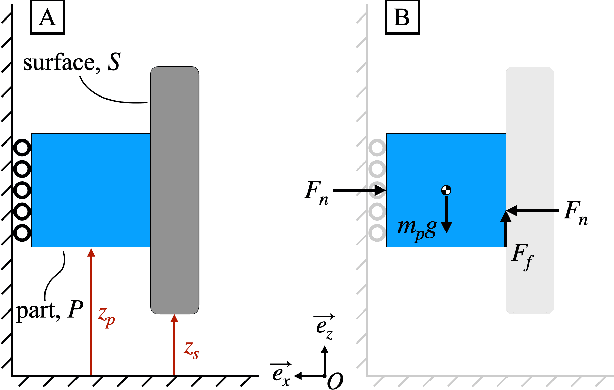

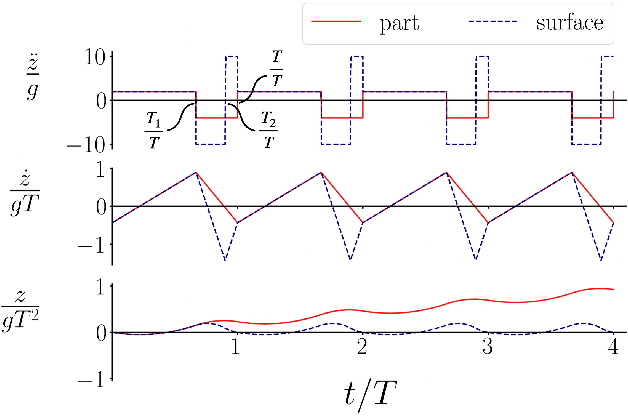

Vertical Vibratory Transport of Grasped Parts Using Impacts

Feb 08, 2025

Abstract: In this paper, we use impact-induced acceleration in conjunction with periodic stick-slip to transport parts vertically against gravity, both successfully and quickly. We show analytically that vertical vibratory transport is more difficult than its horizontal counterpart, and provide guidelines for achieving optimal vertical vibratory transport of a part. Namely, such a system must be capable of quickly realizing high accelerations, and must supply normal forces at least several times that required for static equilibrium. We also show that for a given maximum acceleration, there is an optimal normal force for transport. To test our analytical guidelines, we built a vibrating surface using flexures and a voice coil actuator that can accelerate a magnetic ram into various materials to generate impacts. The surface was used to transport a part against gravity. Experimentally obtained motion tracking data confirmed the theoretical model. A series of grasping tests with a parallel jaw gripper equipped with the vibrating surface confirmed the design guidelines.
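As a rough illustration of the normal-force guideline, consider a simplified model that is assumed here and is not taken from the paper: a part of mass $m$ is held between two jaws, each pressing with normal force $N$, with the same Coulomb friction coefficient $\mu$ at both contacts. The symbols $m$, $N$, $\mu$, $N_{\text{static}}$, and the multiplier $k$ are introduced only for this sketch.

$$
2\mu N \ge m g
\quad\Longrightarrow\quad
N_{\text{static}} = \frac{m g}{2\mu},
\qquad
N = k\,N_{\text{static}}, \quad k > 1 .
$$

Under this model, the guideline in the abstract corresponds to choosing $k$ several times larger than 1. The static bound alone does not determine the optimal normal force for a given maximum acceleration; that result depends on the paper's dynamic analysis.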

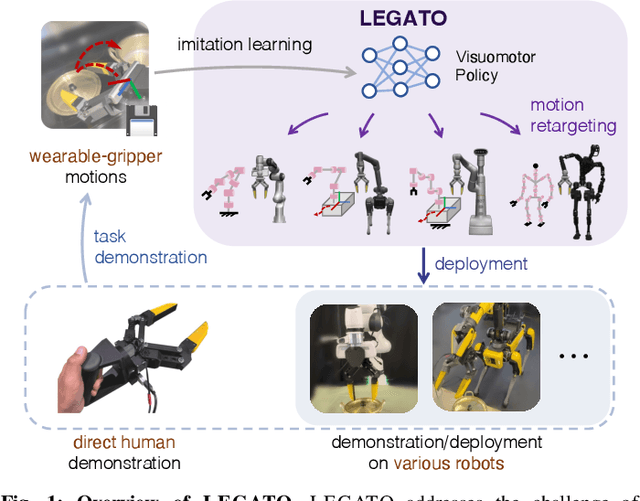

LEGATO: Cross-Embodiment Imitation Using a Grasping Tool

Nov 06, 2024

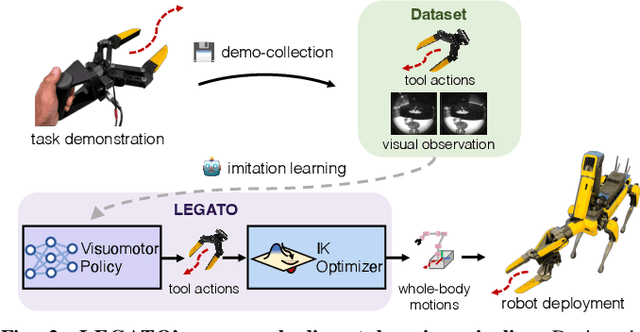

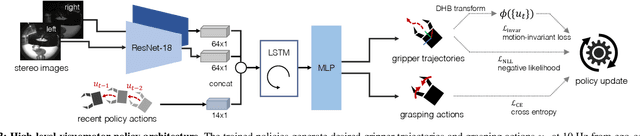

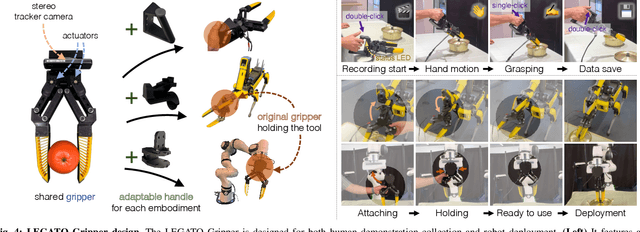

Abstract: Cross-embodiment imitation learning enables policies trained on specific embodiments to transfer across different robots, unlocking the potential for large-scale imitation learning that is both cost-effective and highly reusable. This paper presents LEGATO, a cross-embodiment imitation learning framework for visuomotor skill transfer across varied kinematic morphologies. We introduce a handheld gripper that unifies action and observation spaces, allowing tasks to be defined consistently across robots. Using this gripper, we train visuomotor policies via imitation learning, applying a motion-invariant transformation to compute the training loss. Gripper motions are then retargeted into high-degree-of-freedom whole-body motions using inverse kinematics for deployment across diverse embodiments. Our evaluations in simulation and real-robot experiments highlight the framework's effectiveness in learning and transferring visuomotor skills across various robots. More information can be found at the project page: https://ut-hcrl.github.io/LEGATO.
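To give a feel for what retargeting a gripper position through inverse kinematics involves, here is a minimal sketch using damped least-squares IK on a planar three-link arm. This is a generic textbook technique, not LEGATO's whole-body solver; the arm model, the function names (`forward_kinematics`, `jacobian`, `solve_ik`), and all parameter values are assumptions made for illustration.

```python
import numpy as np


def forward_kinematics(q, lengths):
    """End-effector (x, y) of a planar serial arm with relative joint angles q."""
    angles = np.cumsum(q)  # absolute angle of each link
    return np.array([np.sum(lengths * np.cos(angles)),
                     np.sum(lengths * np.sin(angles))])


def jacobian(q, lengths):
    """2 x n Jacobian of the end-effector position with respect to q."""
    angles = np.cumsum(q)
    J = np.zeros((2, len(q)))
    for i in range(len(q)):
        # Joint i moves every link from i onward.
        J[0, i] = -np.sum(lengths[i:] * np.sin(angles[i:]))
        J[1, i] = np.sum(lengths[i:] * np.cos(angles[i:]))
    return J


def solve_ik(target, q0, lengths, damping=1e-2, max_step=0.1,
             iters=200, tol=1e-6):
    """Damped least-squares IK; returns joint angles that reach `target`."""
    q = np.array(q0, dtype=float)
    lengths = np.asarray(lengths, dtype=float)
    target = np.asarray(target, dtype=float)
    for _ in range(iters):
        err = target - forward_kinematics(q, lengths)
        norm = np.linalg.norm(err)
        if norm < tol:
            break
        if norm > max_step:
            # Limit the task-space step so the linearization stays valid.
            err = err * (max_step / norm)
        J = jacobian(q, lengths)
        # dq = J^T (J J^T + lambda^2 I)^-1 err
        dq = J.T @ np.linalg.solve(J @ J.T + damping**2 * np.eye(2), err)
        q += dq
    return q
```

For example, `solve_ik([1.5, 1.0], [0.3, 0.3, 0.3], [1.0, 1.0, 1.0])` returns joint angles whose forward kinematics land close to the requested position. The damping term keeps the update well behaved near singular configurations, at the cost of slightly slower convergence.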

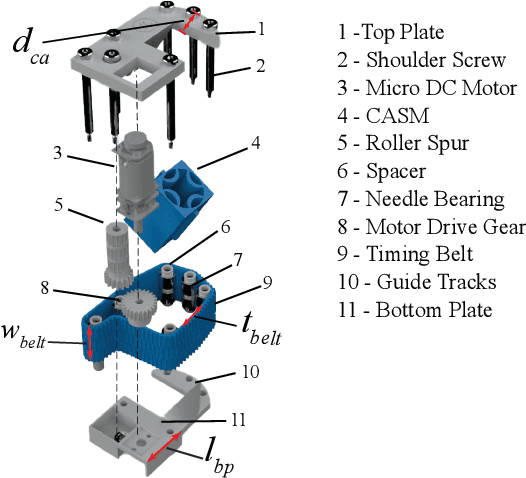

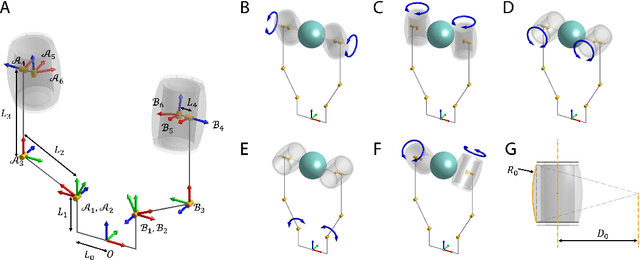

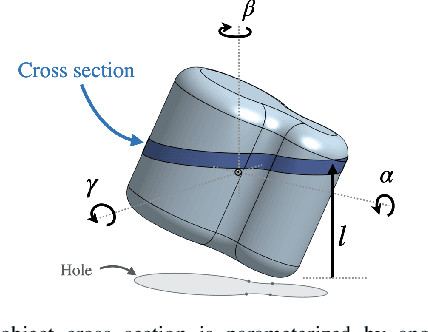

Wearable Roller Rings to Enable Robot Dexterous In-Hand Manipulation through Active Surfaces

Mar 19, 2024

Abstract: In-hand manipulation is a crucial ability for reorienting and repositioning objects within grasps. The main challenges are not only the complexity of the computational models, but also the risk of grasp instability caused by active finger motions, such as rolling, sliding, breaking, and remaking contacts. Based on the idea of manipulation without lifting a finger, this paper presents the development of Roller Rings (RR), a modular robotic attachment with active surfaces that is wearable by both robot and human hands. By installing and angling the RRs on grasping systems such that their spatial motions are not co-linear, we derive a general differential motion model for the object actuated by the active surfaces. Our motion model shows that a complete in-hand manipulation skill set can be provided by as few as two RRs through non-holonomic object motions, while more RRs enable enhanced manipulation dexterity with fewer motion constraints. Through extensive experiments, we fit RRs to both a robot hand and a human hand to evaluate their manipulation capabilities, and show that the RRs can manipulate arbitrary object shapes to provide dexterous in-hand manipulation.

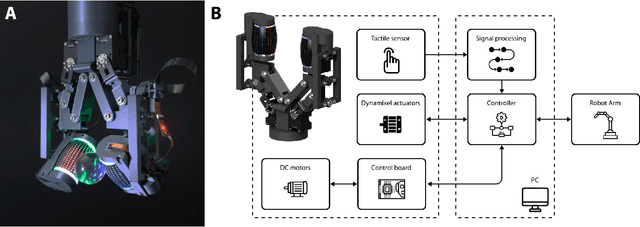

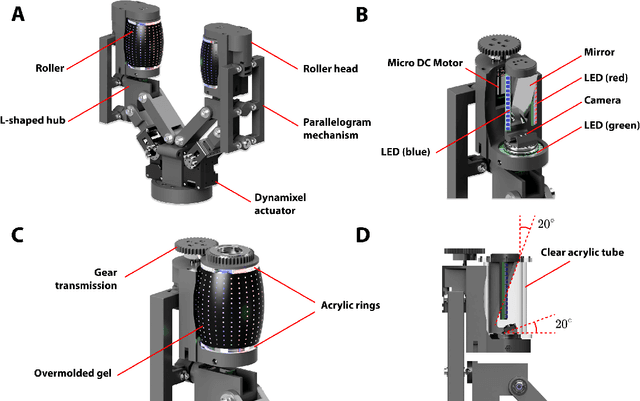

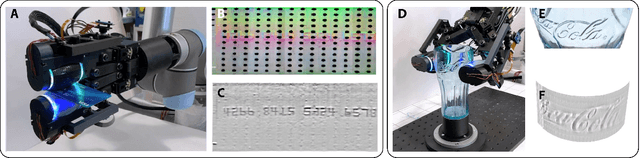

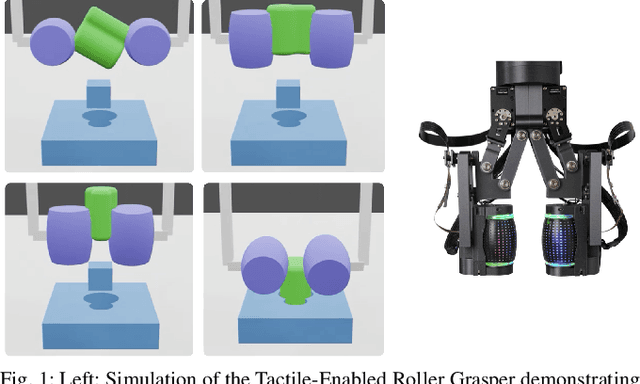

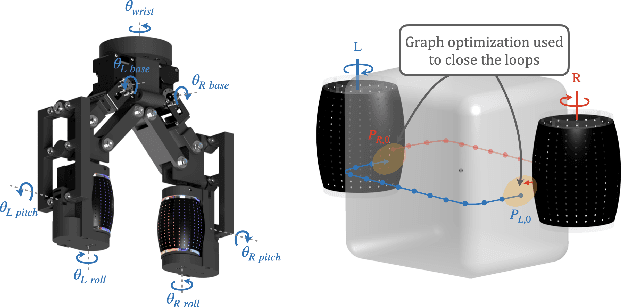

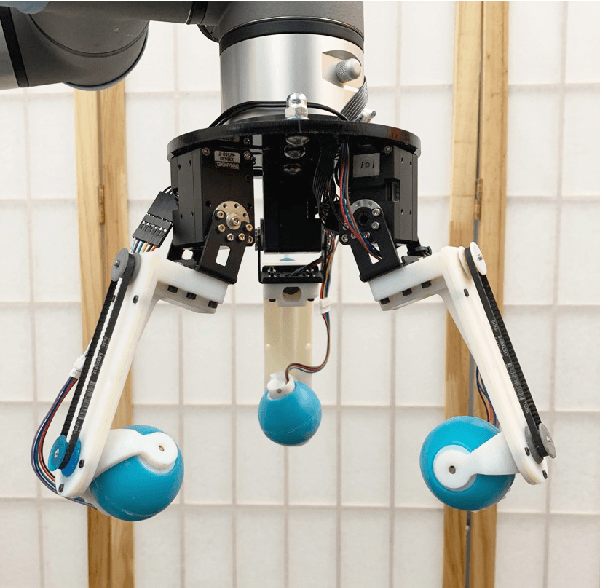

Tactile-Reactive Roller Grasper

Jun 16, 2023

Abstract: Manipulation of objects within a robot's hand is one of the most important challenges in achieving robot dexterity. "Roller Graspers" refers to a family of non-anthropomorphic hands that use motorized, rolling fingertips to achieve in-hand manipulation. These graspers manipulate grasped objects by commanding the rollers to exert forces that propel the object in the desired motion directions. In this paper, we explore the possibility of robot in-hand manipulation through tactile-guided rolling. We do so by developing the Tactile-Reactive Roller Grasper (TRRG), which combines camera-based tactile sensing with compliant, steerable cylindrical fingertips, along with accompanying sensor information processing and control strategies. We demonstrate that the combination of tactile feedback and actively rolling surfaces enables a variety of robust in-hand manipulation applications. We also demonstrate object reconstruction techniques using tactile-guided rolling. A controlled experiment was conducted to provide insights into the benefits of tactile-reactive rollers for manipulation. We considered two manipulation cases: when the fingers manipulate purely through rolling, and when they periodically break and reestablish contact, as in regrasping. We found that tactile-guided rolling can improve manipulation robustness by allowing the grasper to perform necessary fine grip adjustments in both cases, indicating that hybrid rolling-fingertip and finger-gaiting designs may be a promising research direction.

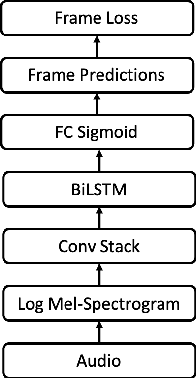

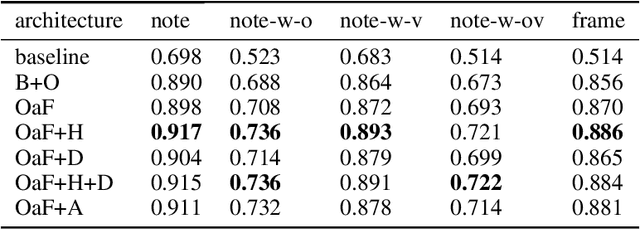

From Audio to Symbolic Encoding

Feb 26, 2023

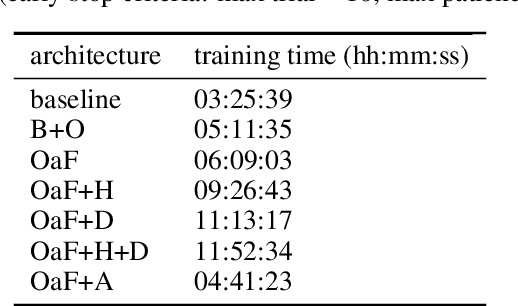

Abstract: Automatic music transcription (AMT) aims to convert raw audio to a symbolic music representation. As a fundamental problem of music information retrieval (MIR), AMT is considered a difficult task even for trained human experts due to the overlap of multiple harmonics in the acoustic signal. Speech recognition, on the other hand, is one of the most popular tasks in natural language processing and aims to translate spoken language to text. Because AMT and speech recognition are similar in nature (both translate an audio signal to a symbolic encoding), this paper investigates whether a generic neural network architecture could work on both tasks. We introduce a new neural network architecture built on top of the current state-of-the-art Onsets and Frames, and compare the performance of several of its variations on the AMT task. We also test our architecture on the task of speech recognition. For AMT, our models produced better results than the model trained using the state-of-the-art architecture; however, although a similar architecture could be trained on the speech recognition task, its results were not competitive with those of other task-specific models.

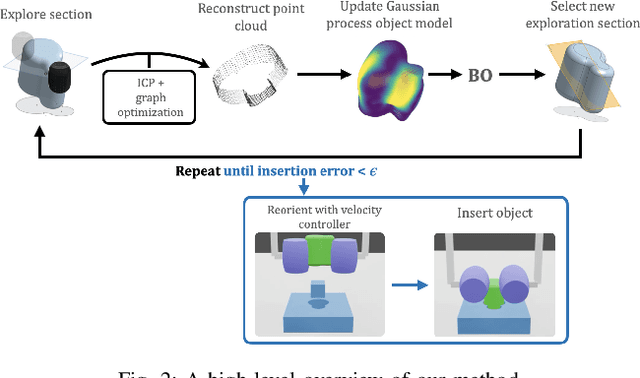

Task-Driven In-Hand Manipulation of Unknown Objects with Tactile Sensing

Oct 28, 2022

Abstract: Manipulation of objects in-hand without an object model is a foundational skill for many tasks in unstructured environments. In many cases, vision-only approaches may not be feasible, for example due to occlusion in cluttered spaces. In this paper, we introduce a method to reorient unknown objects by incrementally building a probabilistic estimate of the object shape and pose during task-driven manipulation. Our method leverages Bayesian optimization to strategically trade off exploration of the global object shape against efficient task completion. We demonstrate our approach on a Tactile-Enabled Roller Grasper, a gripper that rolls objects in hand while continuously collecting tactile data. We evaluate our method in simulation on a set of randomly generated objects and find that it reliably reorients objects while significantly reducing the exploration time needed to do so. On the Roller Grasper hardware, we show successful qualitative reconstruction of the object model. In summary, this work (1) presents a system capable of simultaneously learning unknown 3D object shape and pose using tactile sensing; and (2) demonstrates that task-driven exploration results in more efficient object manipulation than the common paradigm of complete object exploration before task completion.

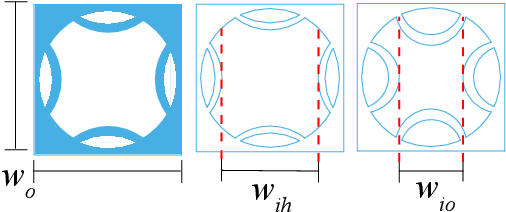

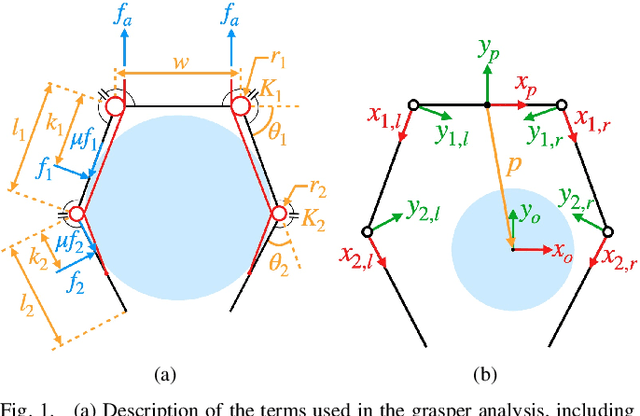

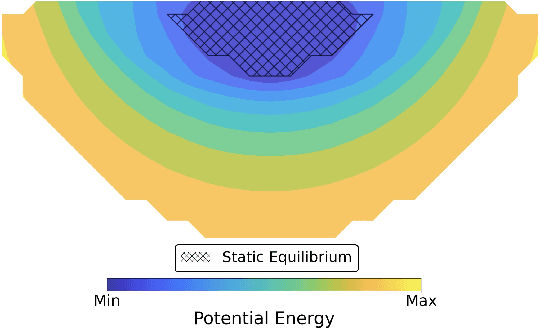

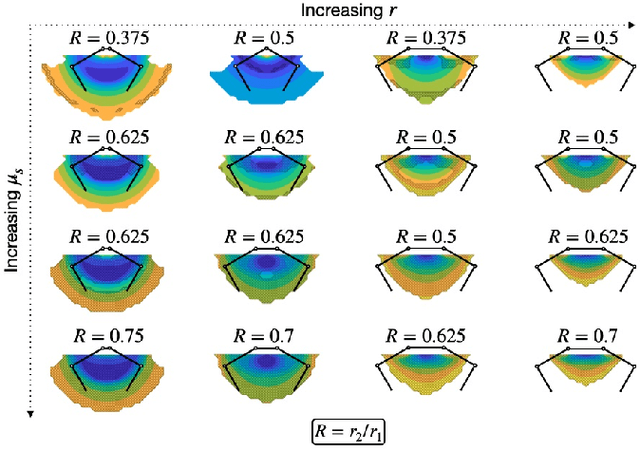

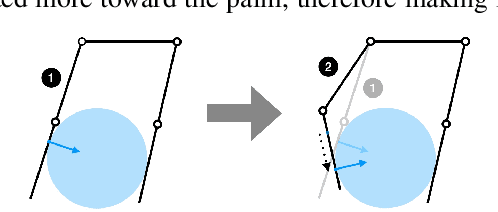

Designing Underactuated Graspers with Dynamically Variable Geometry Using Potential Energy Map Based Analysis

Mar 14, 2022

Abstract: In this paper, we present a potential energy map based approach that provides a framework for the design and control of a robotic grasper. Unlike other potential energy map approaches, our framework is able to consider friction for a more realistic perspective on grasper performance. Our analysis establishes the importance of including variable geometry in a grasper design, namely with regard to palm width, link lengths, and transmission ratio. We demonstrate the use of this method specifically for a two-phalanx tendon-pulley underactuated grasper, and show how these design parameters (palm width, link lengths, and transmission ratio) impact the grasping and manipulation performance of a specific design across a range of object sizes and friction coefficients. Optimal grasping designs have palms that scale with object size, and transmission ratios that scale with the coefficient of friction. Using a custom manipulation metric, we compared a grasper that only dynamically varied its geometry to a grasper with a variable palm and distinct actuation commands. The analysis revealed the advantage of the compliant reconfiguration ability intrinsic to underactuated mechanisms: by varying only the geometry of the grasper, manipulation of a wide range of objects could be performed.

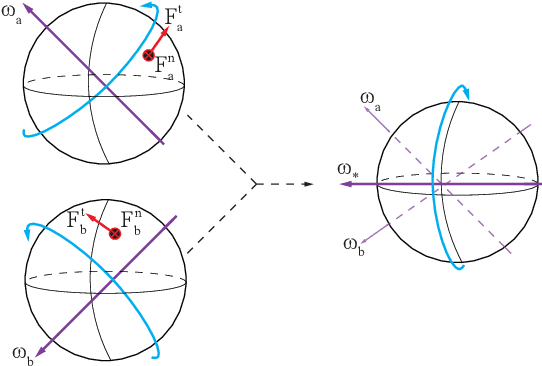

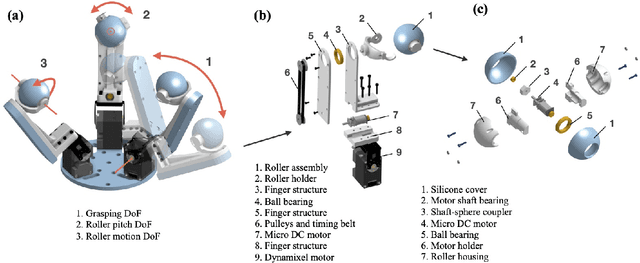

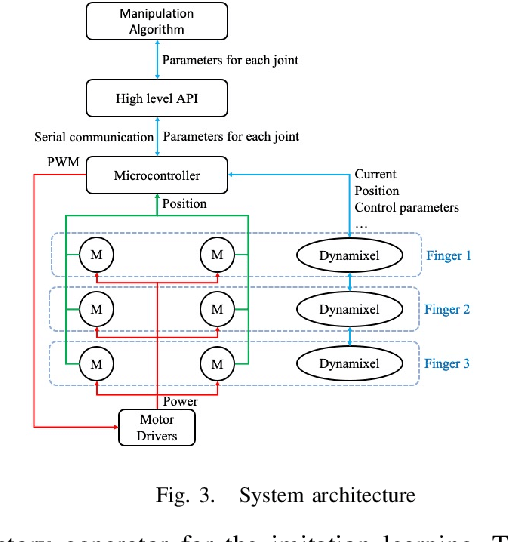

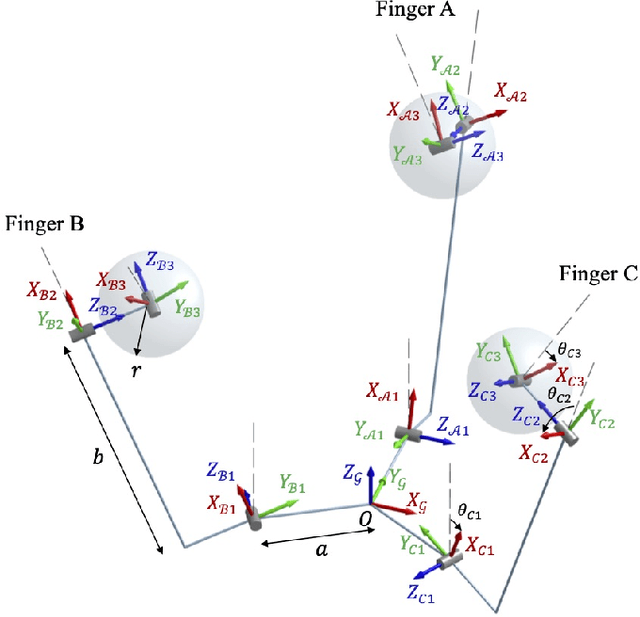

Design and Control of Roller Grasper V2 for In-Hand Manipulation

Apr 18, 2020

Abstract: In-hand manipulation remains an unsolved problem; this capability would allow robots to perform sophisticated tasks that require repositioning and reorienting grasped objects. In this work, we present a novel non-anthropomorphic robot grasper that manipulates objects by means of active surfaces at the fingertips. The active surfaces are realized by spherical rolling fingertips with two degrees of freedom (DoF): a pivoting motion for surface reorientation and a continuous rolling motion for moving the object. A further DoF at the base of each finger allows the fingers to grasp objects over a range of sizes and shapes. Instantaneous kinematics was derived, and objects were successfully manipulated both with a custom hard-coded control scheme and with one learned through imitation learning, in simulation and experimentally on the hardware.
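The two fingertip DoF described above can be pictured with a small kinematic sketch: the pivot sets the direction of the roll axis, and the roll rate then determines the surface velocity at the contact point through `v = omega x r`. The geometry below is an assumption made for illustration (pivot about the z-axis, roll axis starting along x) and is not the paper's derivation; the function name `roller_surface_velocity` and its parameters are hypothetical.

```python
import numpy as np


def roller_surface_velocity(pivot_angle, roll_rate, radius, contact_dir):
    """Velocity of a spherical roller's surface at the contact point.

    Assumed geometry (not from the paper): the pivot rotates the roll axis
    about the z-axis, starting from the x-axis. `contact_dir` points from
    the sphere center toward the contact point.
    """
    # Direction of the roll axis after pivoting.
    roll_axis = np.array([np.cos(pivot_angle), np.sin(pivot_angle), 0.0])
    omega = roll_rate * roll_axis  # angular velocity vector (rad/s)

    # Position of the contact point relative to the sphere center.
    n = np.asarray(contact_dir, dtype=float)
    r_contact = radius * n / np.linalg.norm(n)

    # Rigid-body surface velocity at the contact point.
    return np.cross(omega, r_contact)
```

With the contact point on the pivot axis (`contact_dir = [0, 0, 1]`), changing the pivot angle leaves the contact location fixed and only rotates the direction in which the surface drives the object, which matches the abstract's description of pivoting as surface reorientation.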
