Radhen Patel

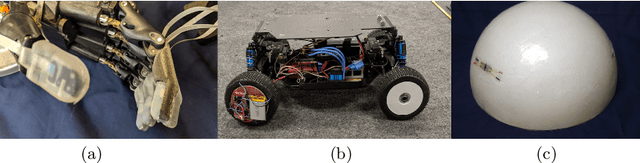

Tactile-Reactive Roller Grasper

Jun 16, 2023

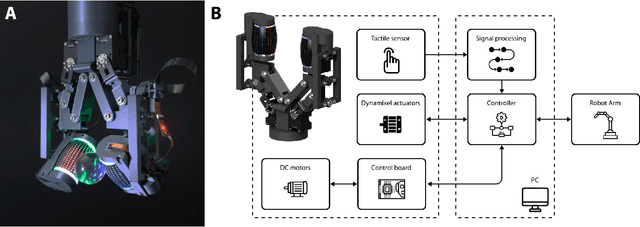

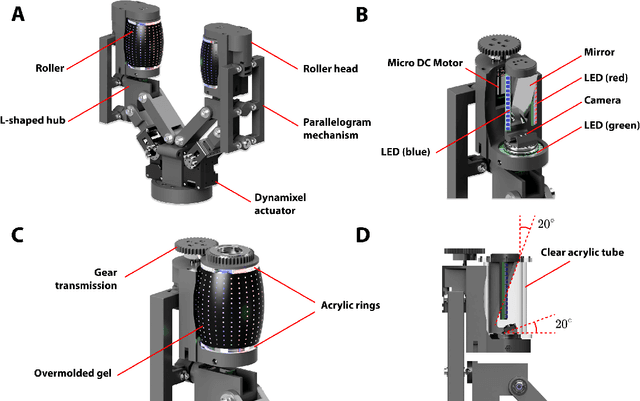

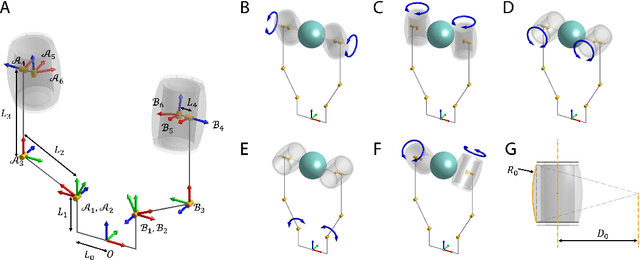

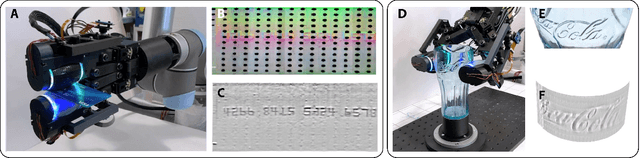

Abstract: Manipulation of objects within a robot's hand is one of the most important challenges in achieving robot dexterity. "Roller Graspers" refers to a family of non-anthropomorphic hands that use motorized, rolling fingertips to achieve in-hand manipulation. These graspers manipulate grasped objects by commanding the rollers to exert forces that propel the object in the desired direction of motion. In this paper, we explore the possibility of robot in-hand manipulation through tactile-guided rolling. We do so by developing the Tactile-Reactive Roller Grasper (TRRG), which combines camera-based tactile sensing with compliant, steerable cylindrical fingertips, along with the accompanying sensor information processing and control strategies. We demonstrated that the combination of tactile feedback and actively rolling surfaces enables a variety of robust in-hand manipulation applications. We also demonstrated object reconstruction techniques using tactile-guided rolling. A controlled experiment was conducted to provide insight into the benefits of tactile-reactive rollers for manipulation. We considered two manipulation cases: when the fingers manipulate purely through rolling, and when they periodically break and reestablish contact, as in regrasping. We found that tactile-guided rolling can improve manipulation robustness by allowing the grasper to perform necessary fine grip adjustments in both cases, indicating that hybrid rolling-fingertip and finger-gaiting designs may be a promising research direction.

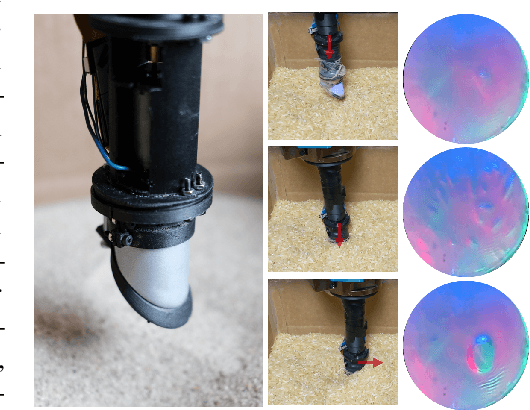

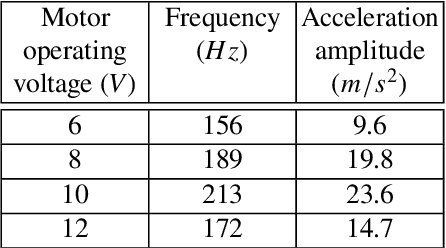

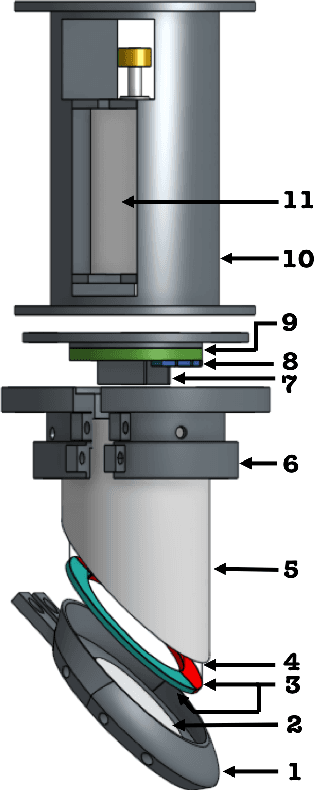

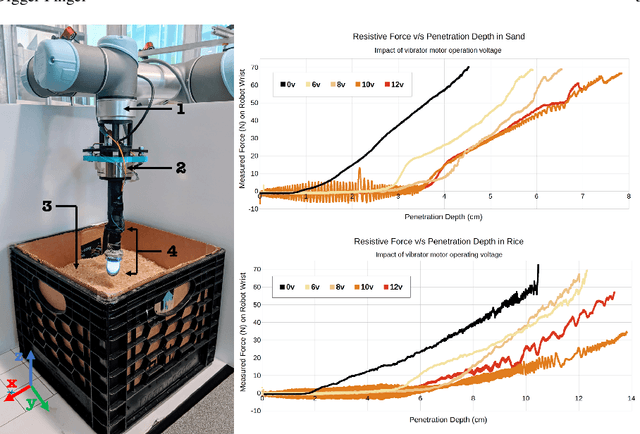

Digger Finger: GelSight Tactile Sensor for Object Identification Inside Granular Media

Feb 20, 2021

Abstract: In this paper we present an early prototype of the Digger Finger, which is designed to easily penetrate granular media and is equipped with the GelSight sensor. Identifying objects buried in granular media using tactile sensors is a challenging task. First, particle jamming in granular media prevents downward movement. Second, the granular media particles tend to get stuck between the sensing surface and the object of interest, distorting the actual shape of the object. To tackle these challenges we present a Digger Finger prototype. It is capable of fluidizing granular media during penetration using mechanical vibrations. It is equipped with high-resolution vision-based tactile sensing to identify objects buried inside granular media. We describe the experimental procedures we use to evaluate these fluidizing and buried-shape recognition capabilities. A robot with such fingers can perform explosive ordnance disposal and Improvised Explosive Device (IED) detection tasks at a much finer resolution than techniques such as ground-penetrating radar (GPR). Sensors like the Digger Finger will allow robotic manipulation research to move beyond only manipulating rigid objects.

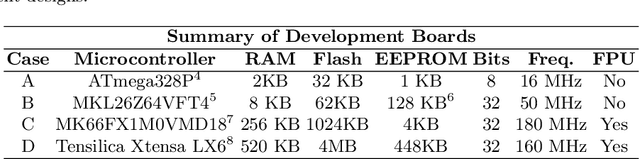

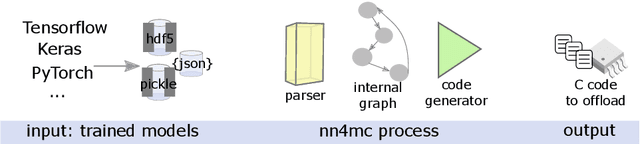

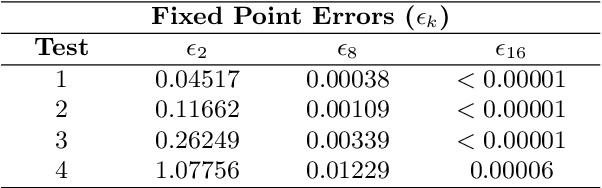

Embedded Neural Networks for Robot Autonomy

Nov 10, 2019

Abstract: We present a library to automatically embed signal processing and neural network predictions into the material robots are made of. Deep and shallow neural network models are first trained offline using state-of-the-art machine learning tools and then transferred onto general-purpose microcontrollers that are co-located with a robot's sensors and actuators. We validate this approach using multiple examples: a smart robotic tire for terrain classification, a robotic finger sensor for load classification, and a smart composite capable of regressing the location of an impact source. In each example, sensing and computation are embedded inside the material, creating artifacts that serve as stand-in replacements for otherwise inert conventional parts. The open-source software library takes as input trained model files from higher-level learning software, such as TensorFlow/Keras, and outputs code for any microcontroller that supports C. We compare the performance of this approach on various embedded platforms. In particular, we show that low-cost off-the-shelf microcontrollers can match the accuracy of a desktop computer while being fast enough for real-time applications across different neural network configurations. We provide means to estimate the maximum number of parameters that the hardware will support based on the microcontroller's specifications.
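
The abstract does not say how the parameter limit is estimated, so the following is only a minimal sketch of one plausible back-of-the-envelope check, not the library's method. It assumes that weights are stored as 4-byte floats in flash and that storage is the limiting resource. The function names (`max_parameters`, `dense_parameter_count`, `fits`) and the example memory sizes are hypothetical.

```python
from typing import Sequence


def max_parameters(flash_bytes: int, reserved_bytes: int = 0,
                   bytes_per_param: int = 4) -> int:
    """Upper bound on the number of weights that fit in flash.

    Assumption: every parameter occupies `bytes_per_param` bytes
    (4 for float32) and `reserved_bytes` is set aside for program code.
    """
    if bytes_per_param <= 0:
        raise ValueError("bytes_per_param must be positive")
    usable = max(flash_bytes - reserved_bytes, 0)
    return usable // bytes_per_param


def dense_parameter_count(layer_sizes: Sequence[int]) -> int:
    """Weights plus biases of a fully connected network.

    `layer_sizes` lists the width of each layer, input first.
    """
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def fits(layer_sizes: Sequence[int], flash_bytes: int,
         reserved_bytes: int = 0, bytes_per_param: int = 4) -> bool:
    """True if the dense network's parameters fit in the usable flash."""
    budget = max_parameters(flash_bytes, reserved_bytes, bytes_per_param)
    return dense_parameter_count(layer_sizes) <= budget


if __name__ == "__main__":
    # Hypothetical part: 32 KiB of flash, 8 KiB reserved for firmware.
    flash, reserved = 32 * 1024, 8 * 1024
    print(max_parameters(flash, reserved))       # parameter budget
    print(dense_parameter_count([4, 8, 3]))      # small classifier
    print(fits([4, 8, 3], flash, reserved))
    print(fits([64, 128, 64], flash, reserved))
```

A real estimate would also need to account for RAM used by activations and for any quantization of the weights, both of which this sketch ignores.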

Improving grasp performance using in-hand proximity and contact sensing

Jan 21, 2017

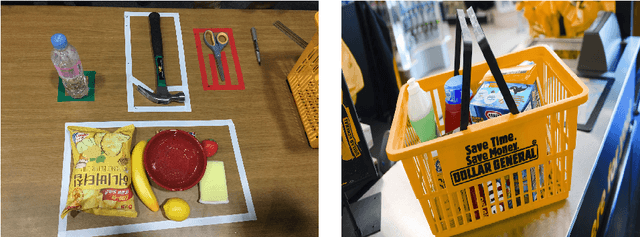

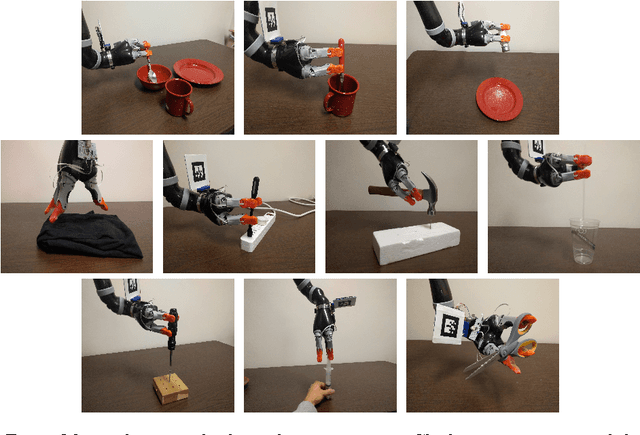

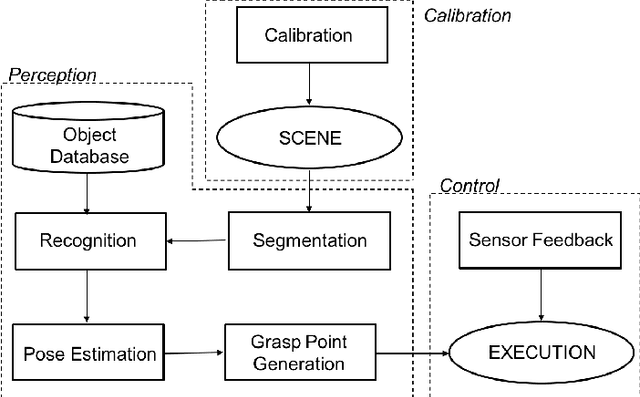

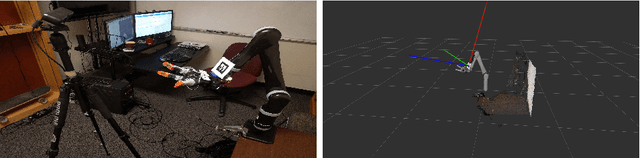

Abstract: We describe the grasping and manipulation strategy that we employed in the autonomous track of the Robotic Grasping and Manipulation Competition at IROS 2016. A salient feature of our architecture is the tight coupling between visual perception (Asus Xtion) and tactile perception (Robotic Materials), which reduces the uncertainty in sensing and actuation. We demonstrate the importance of tactile sensing and reactive control during the final stages of grasping using a Kinova robotic arm. The set of tools and algorithms for object grasping presented here has been integrated into the open-source Robot Operating System (ROS).
