Kenneth Salisbury

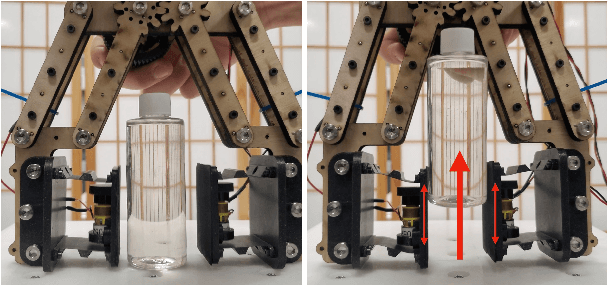

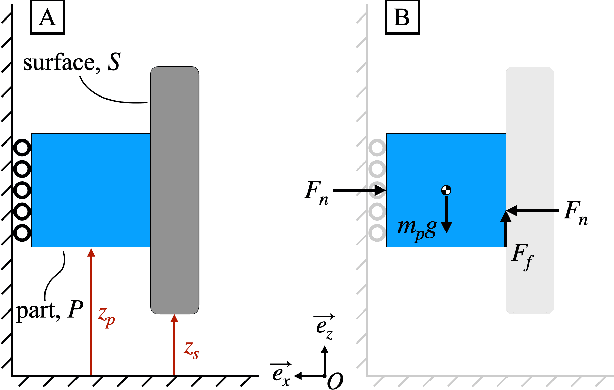

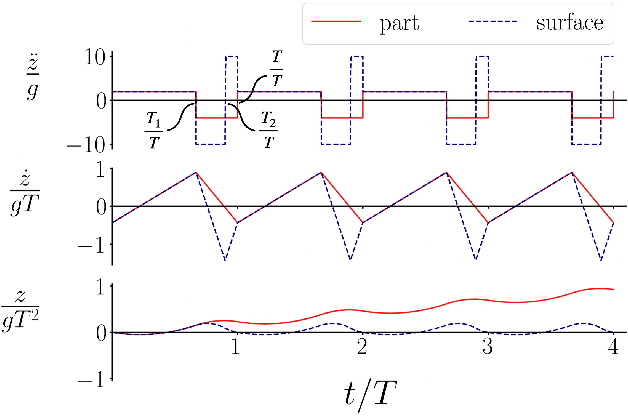

Vertical Vibratory Transport of Grasped Parts Using Impacts

Feb 08, 2025

Abstract: In this paper, we use impact-induced acceleration in conjunction with periodic stick-slip to successfully and quickly transport parts vertically against gravity. We show analytically that vertical vibratory transport is more difficult than its horizontal counterpart, and provide guidelines for achieving optimal vertical vibratory transport of a part. Namely, such a system must be capable of quickly realizing high accelerations and of supplying normal forces at least several times those required for static equilibrium. We also show that for a given maximum acceleration, there is an optimal normal force for transport. To test our analytical guidelines, we built a vibrating surface using flexures and a voice coil actuator that can accelerate a magnetic ram into various materials to generate impacts. The surface was used to transport a part against gravity. Experimentally obtained motion tracking data confirmed the theoretical model. A series of grasping tests with a vibrating-surface-equipped parallel jaw gripper confirmed the design guidelines.

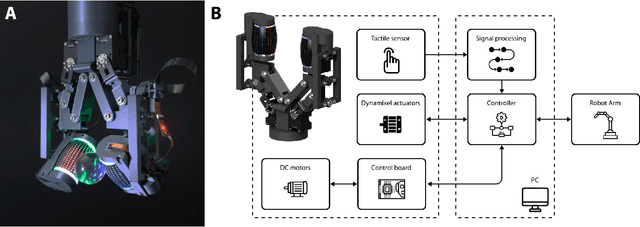

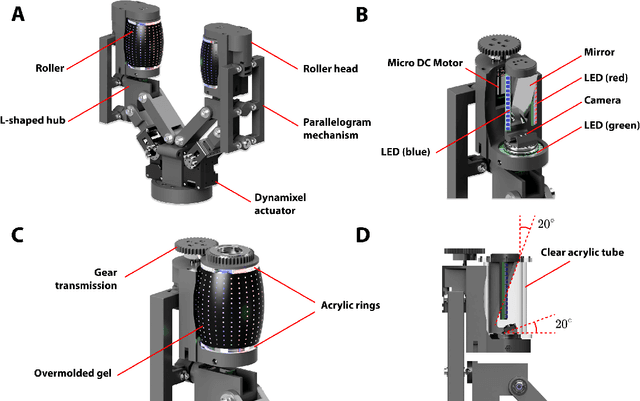

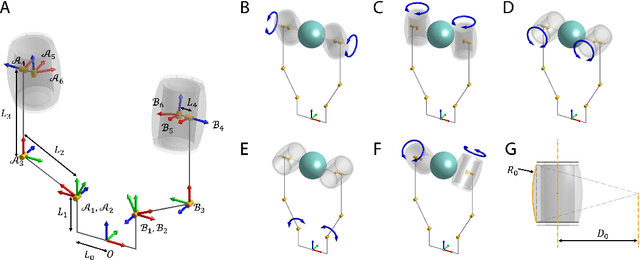

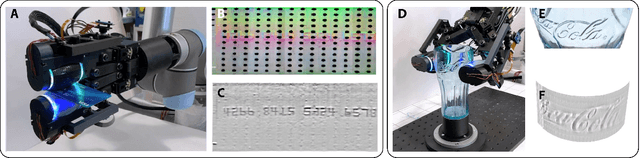

Tactile-Reactive Roller Grasper

Jun 16, 2023

Abstract: Manipulation of objects within a robot's hand is one of the most important challenges in achieving robot dexterity. The "Roller Graspers" are a family of non-anthropomorphic hands that use motorized, rolling fingertips to achieve in-hand manipulation. These graspers manipulate grasped objects by commanding the rollers to exert forces that propel the object in the desired motion directions. In this paper, we explore the possibility of robot in-hand manipulation through tactile-guided rolling. We do so by developing the Tactile-Reactive Roller Grasper (TRRG), which incorporates camera-based tactile sensing with compliant, steerable cylindrical fingertips, with accompanying sensor information processing and control strategies. We demonstrated that the combination of tactile feedback and the actively rolling surfaces enables a variety of robust in-hand manipulation applications. In addition, we demonstrated object reconstruction techniques using tactile-guided rolling. A controlled experiment was conducted to provide insights on the benefits of tactile-reactive rollers for manipulation. We considered two manipulation cases: when the fingers are manipulating purely through rolling, and when they are periodically breaking and reestablishing contact, as in regrasping. We found that tactile-guided rolling can improve manipulation robustness by allowing the grasper to perform necessary fine grip adjustments in both manipulation cases, indicating that hybrid rolling fingertip and finger-gaiting designs may be a promising research direction.

Human Tactile Gesture Interpretation for Robotic Systems

Dec 03, 2020

Abstract: Human-robot interactions are less efficient and communicative than human-to-human interactions, and a key reason is the lack of an informed sense of touch in robotic systems. Existing literature demonstrates robot success in executing handovers with humans, albeit with substantial reliance on external sensing or with primitive signal processing methods, deficient compared to the rich set of information humans can detect. In contrast, we present models capable of distinguishing between four classes of human tactile gestures at a robot's end effector, using only a non-collocated six-axis force sensor at the wrist. Because neither is available in the literature, this work describes 1) the collection of an extensive force dataset characterized by human-robot contact events, and 2) classification models informed by this dataset to determine the nature of the interaction. We demonstrate high classification accuracies among our proposed gesture definitions on a test set, emphasizing that neural network classifiers on the raw data outperform several other combinations of algorithms and feature sets.

Unsupervised Learning of Audio Perception for Robotics Applications: Learning to Project Data to T-SNE/UMAP space

Feb 10, 2020

Abstract: Audio perception is key to solving a variety of problems, including acoustic scene analysis, music metadata extraction, recommendation, synthesis, and analysis. It can potentially also augment computers in doing tasks that humans do effortlessly in day-to-day activities. This paper builds upon key ideas to develop perception of touch sounds without access to any ground-truth data. We show how we can leverage ideas from classical signal processing to collect large amounts of data for any sound of interest with high precision. These sounds are then used, along with images, to map the sounds to a clustered space of the latent representation of those images. This approach not only allows us to learn semantic representations of the possible sounds of interest, but also allows different modalities to be associated with the learned distinctions. The model trained to map sounds to this clustered representation gives reasonable performance compared with expensive methods that collect large amounts of human-annotated data. Such approaches can be used to build a state-of-the-art perceptual model for any sound of interest described using a few signal processing features. Daisy-chaining high-precision sound event detectors based on signal processing, combined with neural architectures and high-dimensional clustering of unlabelled data, is a vastly powerful idea and can be explored in a variety of ways in the future.
