Megha Tippur

Reactive In-Air Clothing Manipulation with Confidence-Aware Dense Correspondence and Visuotactile Affordance

Sep 04, 2025

Abstract:Manipulating clothing is challenging due to complex configurations, variable material dynamics, and frequent self-occlusion. Prior systems often flatten garments or assume visibility of key features. We present a dual-arm visuotactile framework that combines confidence-aware dense visual correspondence and tactile-supervised grasp affordance to operate directly on crumpled and suspended garments. The correspondence model is trained on a custom, high-fidelity simulated dataset using a distributional loss that captures cloth symmetries and generates correspondence confidence estimates. These estimates guide a reactive state machine that adapts folding strategies based on perceptual uncertainty. In parallel, a visuotactile grasp affordance network, self-supervised using high-resolution tactile feedback, determines which regions are physically graspable. The same tactile classifier is used during execution for real-time grasp validation. By deferring action in low-confidence states, the system handles highly occluded table-top and in-air configurations. We demonstrate our task-agnostic grasp selection module in folding and hanging tasks. Moreover, our dense descriptors provide a reusable intermediate representation for other planning modalities, such as extracting grasp targets from human video demonstrations, paving the way for more generalizable and scalable garment manipulation.
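The idea of deferring action in low-confidence states can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the `Match` record, the two-action `Action` enum, the `select_action` helper, and the 0.8 threshold are all hypothetical, and the actual state machine is likely richer.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional, Tuple


class Action(Enum):
    GRASP = auto()      # confident enough to act on the best match
    REOBSERVE = auto()  # too uncertain: defer and gather another view


@dataclass
class Match:
    """Hypothetical correspondence result for one garment keypoint."""
    pixel: Tuple[int, int]
    confidence: float  # assumed to lie in [0, 1]


def select_action(
    matches: List[Match], threshold: float = 0.8
) -> Tuple[Action, Optional[Match]]:
    """Pick the most confident match, or defer if none clears the threshold.

    The threshold value is an illustrative assumption, not a reported setting.
    """
    if not matches:
        return Action.REOBSERVE, None
    best = max(matches, key=lambda m: m.confidence)
    if best.confidence >= threshold:
        return Action.GRASP, best
    return Action.REOBSERVE, None
```

With this sketch, a candidate set containing one match at confidence 0.95 yields a grasp at that pixel, while a set whose best match sits at 0.3 yields a deferral so the system can re-observe the garment before committing.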

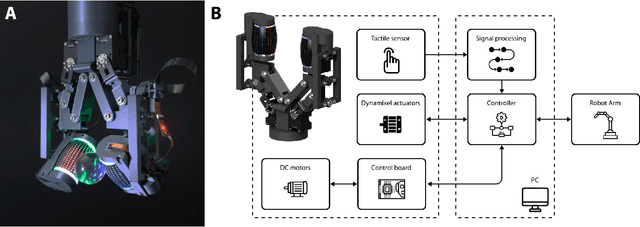

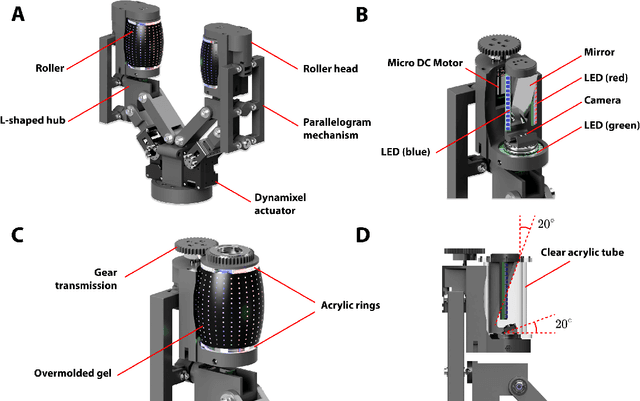

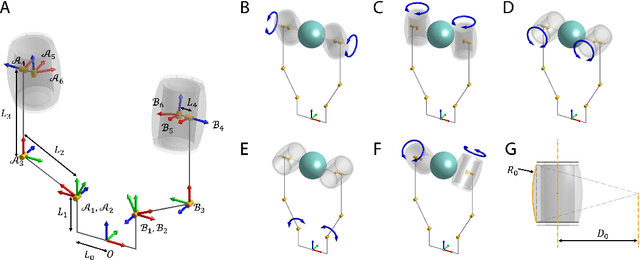

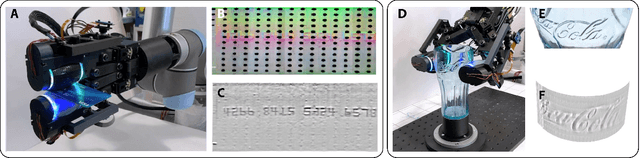

Tactile-Reactive Roller Grasper

Jun 16, 2023

Abstract:Manipulation of objects within a robot's hand is one of the most important challenges in achieving robot dexterity. The "Roller Graspers" refers to a family of non-anthropomorphic hands utilizing motorized, rolling fingertips to achieve in-hand manipulation. These graspers manipulate grasped objects by commanding the rollers to exert forces that propel the object in the desired motion directions. In this paper, we explore the possibility of robot in-hand manipulation through tactile-guided rolling. We do so by developing the Tactile-Reactive Roller Grasper (TRRG), which incorporates camera-based tactile sensing with compliant, steerable cylindrical fingertips, with accompanying sensor information processing and control strategies. We demonstrated that the combination of tactile feedback and the actively rolling surfaces enables a variety of robust in-hand manipulation applications. In addition, we also demonstrated object reconstruction techniques using tactile-guided rolling. A controlled experiment was conducted to provide insights on the benefits of tactile-reactive rollers for manipulation. We considered two manipulation cases: when the fingers are manipulating purely through rolling and when they are periodically breaking and reestablishing contact as in regrasping. We found that tactile-guided rolling can improve the manipulation robustness by allowing the grasper to perform necessary fine grip adjustments in both manipulation cases, indicating that hybrid rolling fingertip and finger-gaiting designs may be a promising research direction.

TactoFind: A Tactile Only System for Object Retrieval

Mar 23, 2023

Abstract:We study the problem of object retrieval in scenarios where visual sensing is absent, object shapes are unknown beforehand and objects can move freely, like grabbing objects out of a drawer. Successful solutions require localizing free objects, identifying specific object instances, and then grasping the identified objects, only using touch feedback. Unlike vision, where cameras can observe the entire scene, touch sensors are local and only observe parts of the scene that are in contact with the manipulator. Moreover, information gathering via touch sensors necessitates applying forces on the touched surface which may disturb the scene itself. Reasoning with touch, therefore, requires careful exploration and integration of information over time -- a challenge we tackle. We present a system capable of using sparse tactile feedback from fingertip touch sensors on a dexterous hand to localize, identify and grasp novel objects without any visual feedback. Videos are available at https://taochenshh.github.io/projects/tactofind.

Visual Dexterity: In-hand Dexterous Manipulation from Depth

Nov 21, 2022

Abstract:In-hand object reorientation is necessary for performing many dexterous manipulation tasks, such as tool use in unstructured environments that remain beyond the reach of current robots. Prior works built reorientation systems that assume one or many of the following specific circumstances: reorienting only specific objects with simple shapes, limited range of reorientation, slow or quasistatic manipulation, the need for specialized and costly sensor suites, simulation-only results, and other constraints which make the system infeasible for real-world deployment. We overcome these limitations and present a general object reorientation controller that is trained using reinforcement learning in simulation and evaluated in the real world. Our system uses readings from a single commodity depth camera to dynamically reorient complex objects by any amount in real time. The controller generalizes to novel objects not used during training. It is successful in the most challenging test: the ability to reorient objects in the air held by a downward-facing hand that must counteract gravity during reorientation. The results demonstrate that the policy transfer from simulation to the real world can be accomplished even for dynamic and contact-rich tasks. Lastly, our hardware only uses open-source components that cost less than five thousand dollars. Such construction makes it possible to replicate the work and democratize future research in dexterous manipulation. Videos are available at: https://taochenshh.github.io/projects/visual-dexterity.
