Shaoxiong Wang

In-Hand Singulation and Scooping Manipulation with a 5 DOF Tactile Gripper

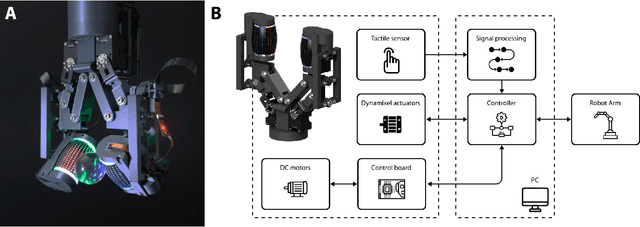

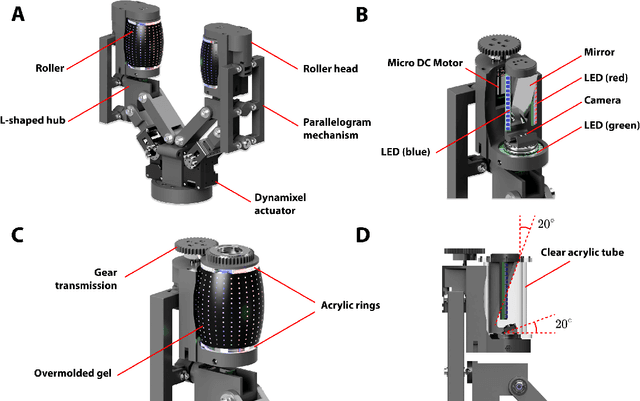

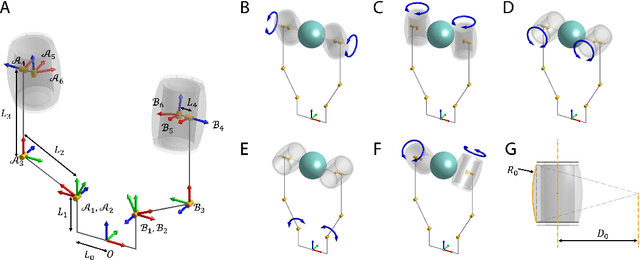

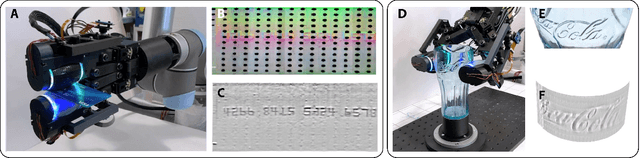

Aug 01, 2024

Abstract:Manipulation tasks often require a high degree of dexterity, typically necessitating grippers with multiple degrees of freedom (DoF). While a robotic hand equipped with multiple fingers can execute precise and intricate manipulation tasks, the inherent redundancy stemming from its extensive DoF often adds unnecessary complexity. In this paper, we introduce the design of a tactile sensor-equipped gripper with two fingers and five DoF. We present a novel design integrating a GelSight tactile sensor, enhancing sensing capabilities and enabling finer control during specific manipulation tasks. To evaluate the gripper's performance, we conduct experiments involving two challenging tasks: 1) retrieving, singulating, and classifying various objects embedded in granular media, and 2) executing scooping manipulations of credit cards in confined environments to achieve precise insertion. Our results demonstrate the efficiency of the proposed approach, with a high success rate for singulation and classification tasks, as high as 94.3% for spherical objects, and a 100% success rate for scooping and inserting credit cards.

Tactile-Reactive Roller Grasper

Jun 16, 2023

Abstract:Manipulation of objects within a robot's hand is one of the most important challenges in achieving robot dexterity. The "Roller Graspers" refers to a family of non-anthropomorphic hands utilizing motorized, rolling fingertips to achieve in-hand manipulation. These graspers manipulate grasped objects by commanding the rollers to exert forces that propel the object in the desired motion directions. In this paper, we explore the possibility of robot in-hand manipulation through tactile-guided rolling. We do so by developing the Tactile-Reactive Roller Grasper (TRRG), which incorporates camera-based tactile sensing with compliant, steerable cylindrical fingertips, with accompanying sensor information processing and control strategies. We demonstrated that the combination of tactile feedback and the actively rolling surfaces enables a variety of robust in-hand manipulation applications. In addition, we also demonstrated object reconstruction techniques using tactile-guided rolling. A controlled experiment was conducted to provide insights on the benefits of tactile-reactive rollers for manipulation. We considered two manipulation cases: when the fingers are manipulating purely through rolling and when they are periodically breaking and reestablishing contact as in regrasping. We found that tactile-guided rolling can improve the manipulation robustness by allowing the grasper to perform necessary fine grip adjustments in both manipulation cases, indicating that hybrid rolling fingertip and finger-gaiting designs may be a promising research direction.

Visuotactile Affordances for Cloth Manipulation with Local Control

Dec 09, 2022

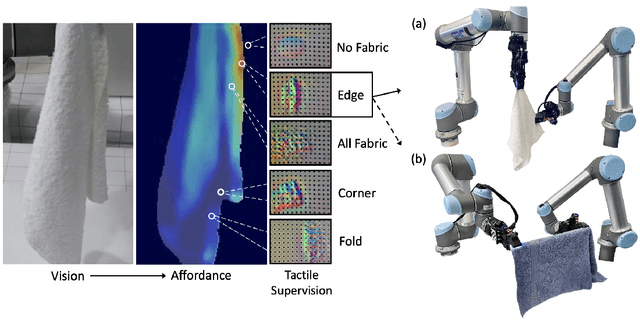

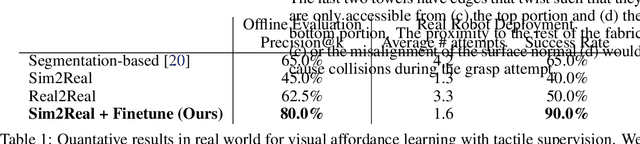

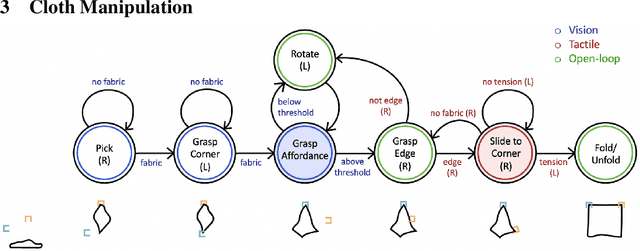

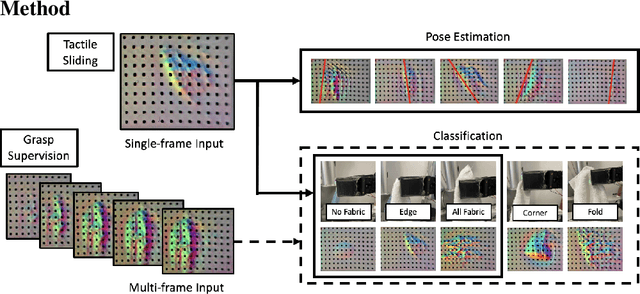

Abstract:Cloth in the real world is often crumpled, self-occluded, or folded in on itself such that key regions, such as corners, are not directly graspable, making manipulation difficult. We propose a system that leverages visual and tactile perception to unfold the cloth via grasping and sliding on edges. By doing so, the robot is able to grasp two adjacent corners, enabling subsequent manipulation tasks like folding or hanging. As components of this system, we develop tactile perception networks that classify whether an edge is grasped and estimate the pose of the edge. We use the edge classification network to supervise a visuotactile edge grasp affordance network that can grasp edges with a 90% success rate. Once an edge is grasped, we demonstrate that the robot can slide along the cloth to the adjacent corner using tactile pose estimation/control in real time. See http://nehasunil.com/visuotactile/visuotactile.html for videos.

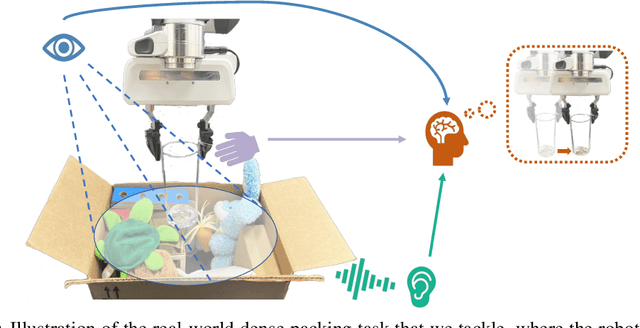

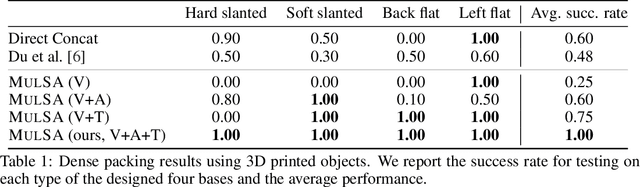

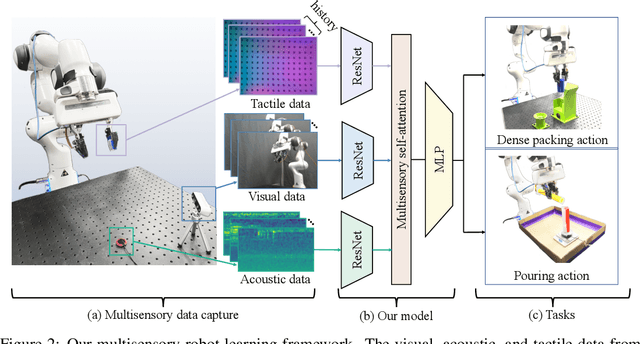

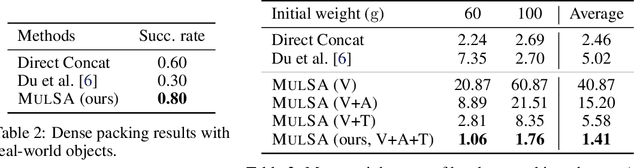

See, Hear, and Feel: Smart Sensory Fusion for Robotic Manipulation

Dec 08, 2022

Abstract:Humans use all of their senses to accomplish different tasks in everyday activities. In contrast, existing work on robotic manipulation mostly relies on one, or occasionally two modalities, such as vision and touch. In this work, we systematically study how visual, auditory, and tactile perception can jointly help robots to solve complex manipulation tasks. We build a robot system that can see with a camera, hear with a contact microphone, and feel with a vision-based tactile sensor, with all three sensory modalities fused with a self-attention model. Results on two challenging tasks, dense packing and pouring, demonstrate the necessity and power of multisensory perception for robotic manipulation: vision displays the global status of the robot but can often suffer from occlusion, audio provides immediate feedback of key moments that are even invisible, and touch offers precise local geometry for decision making. Leveraging all three modalities, our robotic system significantly outperforms prior methods.

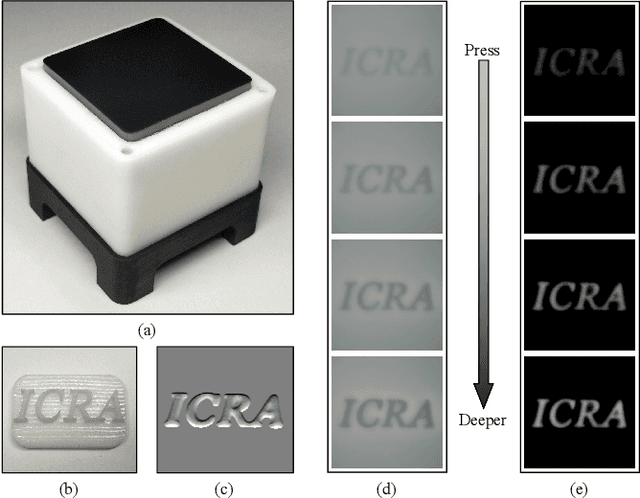

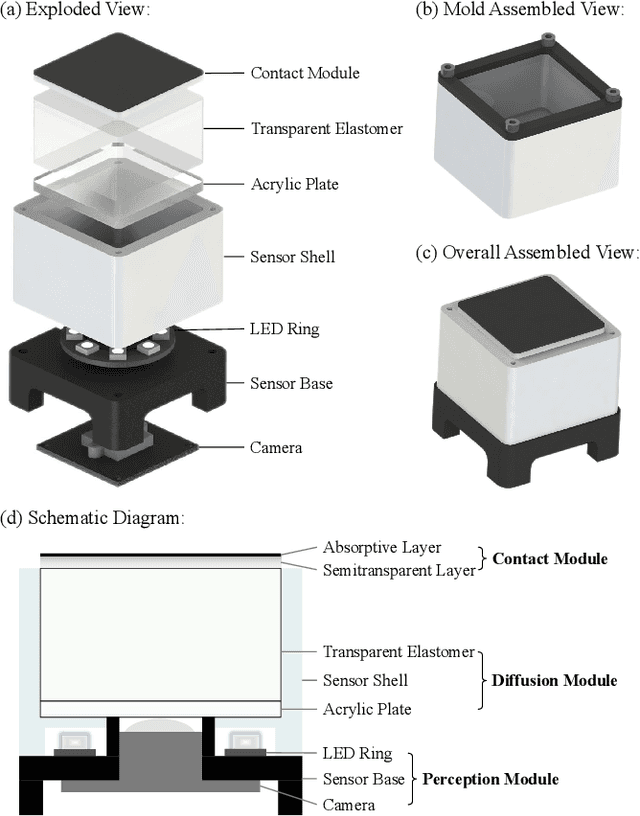

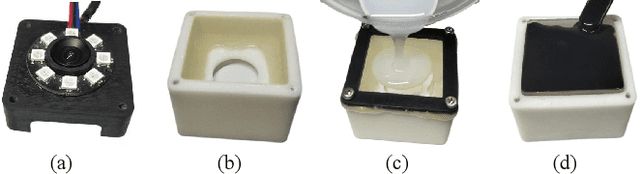

DTact: A Vision-Based Tactile Sensor that Measures High-Resolution 3D Geometry Directly from Darkness

Sep 28, 2022

Abstract:Vision-based tactile sensors that can measure 3D geometry of the contacting objects are crucial for robots to perform dexterous manipulation tasks. However, the existing sensors are usually complicated to fabricate and difficult to extend. In this work, we take advantage of the reflection property of semitransparent elastomer in a novel way to design a robust, low-cost, and easy-to-fabricate tactile sensor named DTact. DTact measures high-resolution 3D geometry accurately from the darkness shown in the captured tactile images with only a single image for calibration. In contrast to previous sensors, DTact is robust under various illumination conditions. Then, we build prototypes of DTact that have non-planar contact surfaces with minimal extra effort and cost. Finally, we perform two intelligent robotic tasks, pose estimation and object recognition, using DTact, in which DTact shows large potential for applications.

GelSight Wedge: Measuring High-Resolution 3D Contact Geometry with a Compact Robot Finger

Jun 16, 2021

Abstract:Vision-based tactile sensors have the potential to provide important contact geometry to localize objects under visual occlusion. However, it is challenging to measure high-resolution 3D contact geometry with a compact robot finger while simultaneously meeting optical and mechanical constraints. In this work, we present the GelSight Wedge sensor, which is optimized to have a compact shape for robot fingers, while achieving high-resolution 3D reconstruction. We evaluate the 3D reconstruction under different lighting configurations, and extend the method from 3 lights to 1 or 2 lights. We demonstrate the flexibility of the design by shrinking the sensor to the size of a human finger for fine manipulation tasks. We also show the effectiveness and potential of the reconstructed 3D geometry for pose tracking in 3D space.

Towards Learning to Play Piano with Dexterous Hands and Touch

Jun 08, 2021

Abstract:The virtuoso plays the piano with passion, poetry and extraordinary technical ability. As Liszt said, (a virtuoso) must call up scent and blossom, and breathe the breath of life. The strongest robots that can play a piano are based on a combination of specialized robot hands/piano and hardcoded planning algorithms. In contrast to that, in this paper, we demonstrate how an agent can learn directly from machine-readable music score to play the piano with dexterous hands on a simulated piano using reinforcement learning (RL) from scratch. We demonstrate that the RL agents can not only find the correct key position but also deal with various rhythmic, volume, and fingering requirements. We achieve this by using a touch-augmented reward and a novel curriculum of tasks. We conclude by carefully studying the important aspects that enable such learning algorithms, which can potentially shed light on future research in this direction.

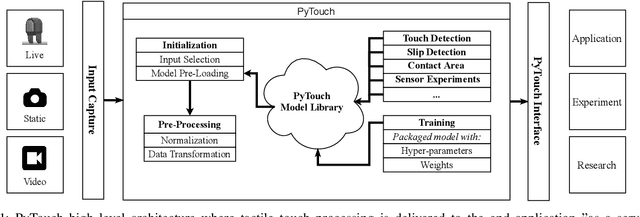

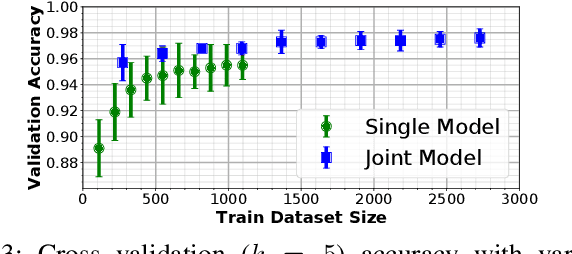

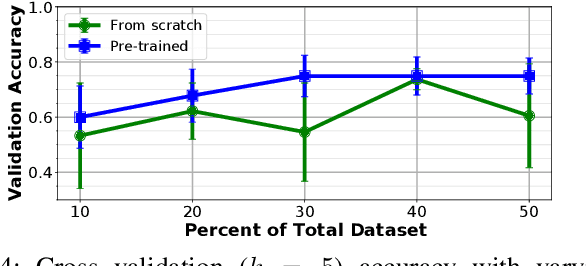

PyTouch: A Machine Learning Library for Touch Processing

May 26, 2021

Abstract:With the increased availability of rich tactile sensors, there is a proportional need for open-source and integrated software capable of efficiently and effectively processing raw touch measurements into high-level signals that can be used for control and decision-making. In this paper, we present PyTouch -- the first machine learning library dedicated to the processing of touch sensing signals. PyTouch is designed to be modular and easy to use, and provides state-of-the-art touch processing capabilities as a service, with the goal of unifying the tactile sensing community by providing a library of scalable, proven, and performance-validated modules upon which applications and research can be built. We evaluate PyTouch on real-world data from several tactile sensors on touch processing tasks such as touch detection, slip detection, and object pose estimation. PyTouch is open-sourced at https://github.com/facebookresearch/pytouch .

SwingBot: Learning Physical Features from In-hand Tactile Exploration for Dynamic Swing-up Manipulation

Jan 28, 2021

Abstract:Several robot manipulation tasks are extremely sensitive to variations of the physical properties of the manipulated objects. One such task is manipulating objects by using gravity or arm accelerations, increasing the importance of mass, center of mass, and friction information. We present SwingBot, a robot that is able to learn the physical features of a held object through tactile exploration. Two exploration actions (tilting and shaking) provide the tactile information used to create a physical feature embedding space. With this embedding, SwingBot is able to predict the swing angle achieved by a robot performing dynamic swing-up manipulations on a previously unseen object. Using these predictions, it is able to search for the optimal control parameters for a desired swing-up angle. We show that with the learned physical features our end-to-end self-supervised learning pipeline is able to substantially improve the accuracy of swinging up unseen objects. We also show that objects with similar dynamics are closer to each other in the embedding space and that the embedding can be disentangled into values of specific physical properties.

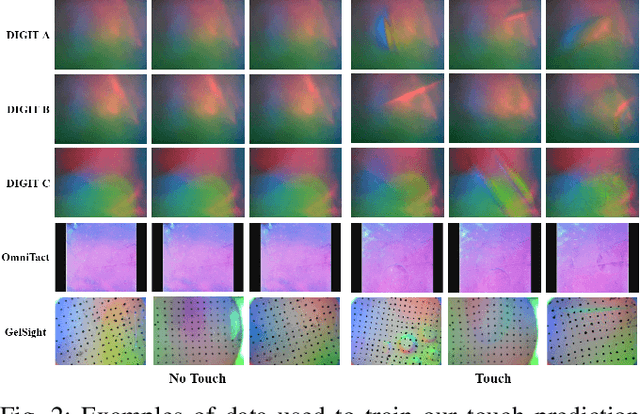

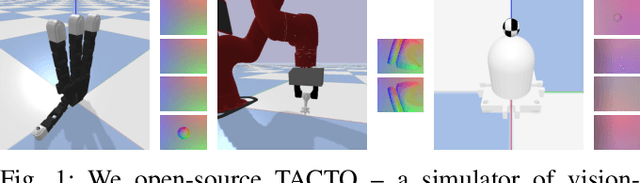

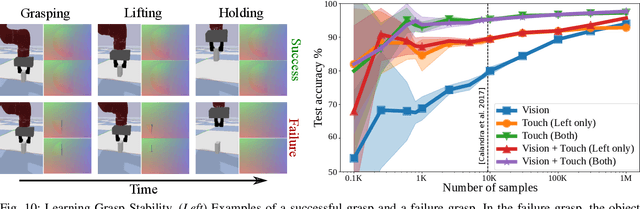

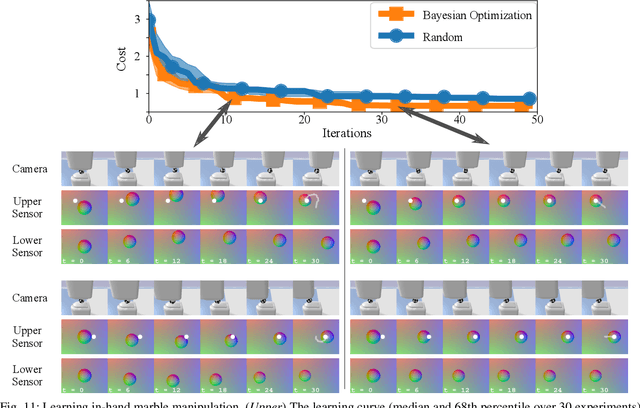

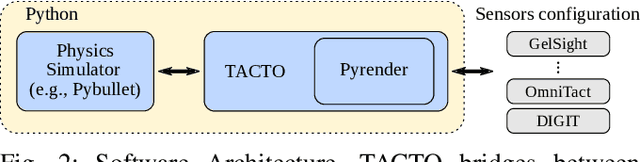

TACTO: A Fast, Flexible and Open-source Simulator for High-Resolution Vision-based Tactile Sensors

Dec 15, 2020

Abstract:Simulators play an important role in prototyping, debugging and benchmarking new advances in robotics and learning for control. Although many physics engines exist, some aspects of the real world are harder than others to simulate. One of the aspects that have so far eluded accurate simulation is touch sensing. To address this gap, we present TACTO -- a fast, flexible and open-source simulator for vision-based tactile sensors. This simulator can render realistic high-resolution touch readings at hundreds of frames per second, and can be easily configured to simulate different vision-based tactile sensors, including GelSight, DIGIT and OmniTact. In this paper, we detail the principles that drove the implementation of TACTO and how they are reflected in its architecture. We demonstrate TACTO on a perceptual task, by learning to predict grasp stability using touch from 1 million grasps, and on a marble manipulation control task. We believe that TACTO is a step towards the widespread adoption of touch sensing in robotic applications, and that it will enable machine learning practitioners interested in multi-modal learning and control. TACTO is open-source at https://github.com/facebookresearch/tacto.
