Filipe Veiga

SwingBot: Learning Physical Features from In-hand Tactile Exploration for Dynamic Swing-up Manipulation

Jan 28, 2021

Abstract: Several robot manipulation tasks are extremely sensitive to variations in the physical properties of the manipulated objects. One such task is manipulating objects by using gravity or arm accelerations, which increases the importance of mass, center of mass, and friction information. We present SwingBot, a robot that is able to learn the physical features of a held object through tactile exploration. Two exploration actions (tilting and shaking) provide the tactile information used to create a physical feature embedding space. With this embedding, SwingBot is able to predict the swing angle achieved by a robot performing dynamic swing-up manipulations on a previously unseen object. Using these predictions, it is able to search for the optimal control parameters for a desired swing-up angle. We show that with the learned physical features our end-to-end self-supervised learning pipeline is able to substantially improve the accuracy of swinging up unseen objects. We also show that objects with similar dynamics are closer to each other in the embedding space and that the embedding can be disentangled into values of specific physical properties.

Soft, Round, High Resolution Tactile Fingertip Sensors for Dexterous Robotic Manipulation

May 18, 2020

Abstract: High resolution tactile sensors are often bulky and have shape profiles that make them awkward for use in manipulation. This becomes important when using such sensors as fingertips for dexterous multi-fingered hands, where boxy or planar fingertips limit the available set of smooth manipulation strategies. High resolution optical-based sensors such as GelSight have until now been constrained to relatively flat geometries due to constraints on illumination geometry. Here, we show how to construct a rounded fingertip that utilizes a form of light piping for directional illumination. Our sensors can replace the standard rounded fingertips of the Allegro hand. They can capture high resolution maps of the contact surfaces, and can be used to support various dexterous manipulation tasks.

Building a Library of Tactile Skills Based on FingerVision

Sep 20, 2019

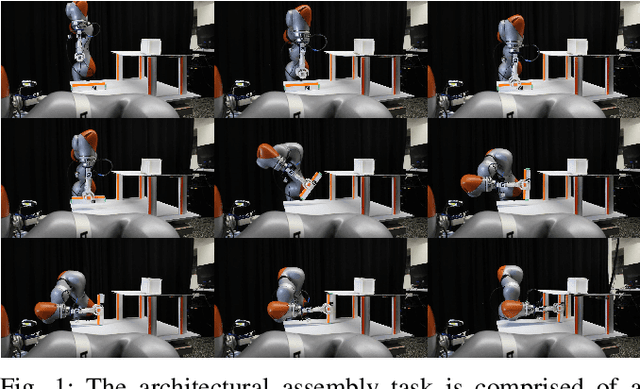

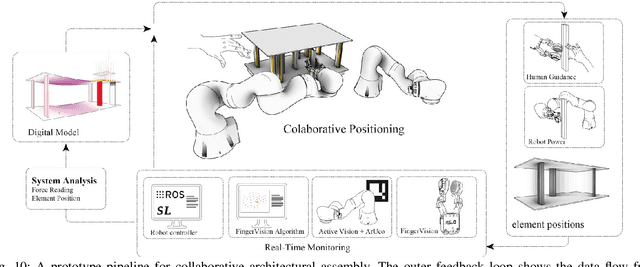

Abstract: Camera-based tactile sensors are emerging as a promising inexpensive solution for tactile-enhanced manipulation tasks. A recently introduced FingerVision sensor was shown capable of generating reliable signals for force estimation, object pose estimation, and slip detection. In this paper, we build upon the FingerVision design, improving existing control algorithms and, more importantly, expanding its range of applicability to more challenging tasks by utilizing raw skin deformation data for control. In contrast to previous approaches that rely on the average deformation of the whole sensor surface, we directly employ local deviations of each spherical marker immersed in the silicone body of the sensor for feedback control and as input to learning tasks. We show that with such input, substances of varying texture and viscosity can be distinguished on the basis of tactile sensations evoked while stirring them. As another application, we learn a mapping between skin deformation and force applied to an object. To demonstrate the full range of capabilities of the proposed controllers, we deploy them in a challenging architectural assembly task that involves inserting a load-bearing element underneath a bendable plate at the point of maximum load.

In-Hand Object Stabilization by Independent Finger Control

Jun 12, 2018

Abstract: Grip control during robotic in-hand manipulation is usually modeled as part of a monolithic task, relying on complex controllers specialized for specific situations. Such approaches do not generalize well and are difficult to apply to novel manipulation tasks. Here, we propose a modular object stabilization method based on a proposition that explains how humans achieve grasp stability. In this bio-mimetic approach, independent tactile grip stabilization controllers ensure that slip does not occur locally at the engaged robot fingers. Such local slip is predicted from the tactile signals of each fingertip sensor, i.e., BioTac and BioTac SP by SynTouch. We show that stable grasps emerge without any form of central communication when such independent controllers are engaged in the control of multi-digit robotic hands. These grasps are resistant to external perturbations while being capable of stabilizing a large variety of objects.

Can Modular Finger Control for In-Hand Object Stabilization be accomplished by Independent Tactile Feedback Control Laws?

Dec 24, 2016

Abstract: Currently, grip control during in-hand manipulation is usually modeled as part of a monolithic task, yielding complex controllers based on force control specialized for their situations. Such non-modular and specialized control approaches make it difficult to generalize these controllers to new in-hand manipulation tasks. Clearly, a grip control approach that generalizes well between several tasks would be preferable. We propose a modular approach where each finger is controlled by an independent tactile grip controller. Using signals from the human-inspired BioTac sensor, we can predict future slip and prevent it by appropriate motor actions. This slip-preventing grip controller is first developed and trained during a single-finger stabilization task. Subsequently, we show that several independent slip-preventing grip controllers can be employed together without any form of central communication. The resulting approach works for two-, three-, four-, and five-finger grip stabilization control. Such a modular grip control approach has the potential to generalize across a large variety of in-hand manipulation tasks, including grip change, finger gaiting, between-hands object transfer, and across multiple objects.
