Branden Romero

DEXOP: A Device for Robotic Transfer of Dexterous Human Manipulation

Sep 04, 2025

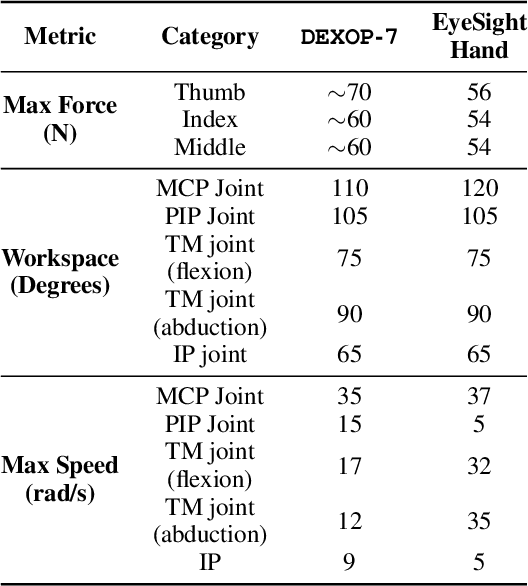

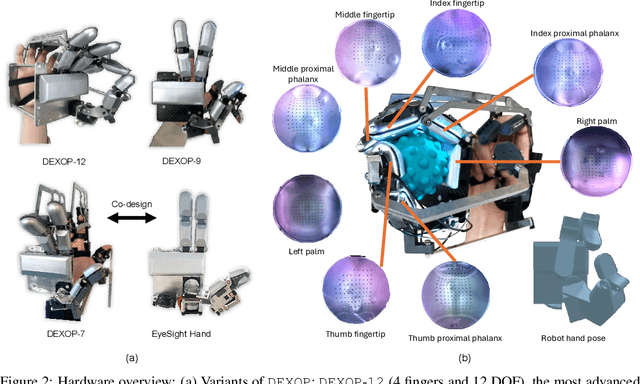

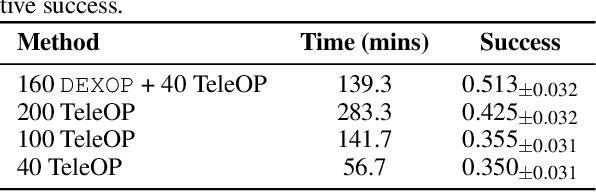

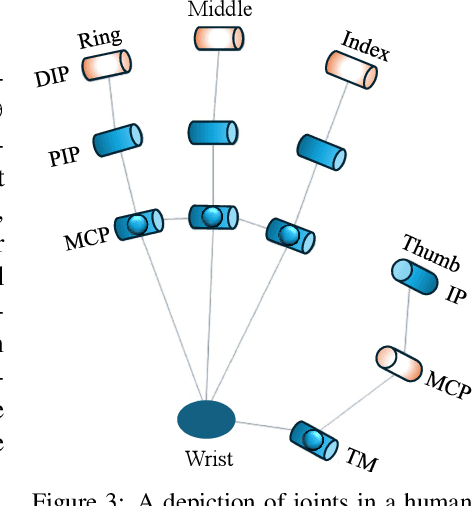

Abstract: We introduce perioperation, a paradigm for robotic data collection that sensorizes and records human manipulation while maximizing the transferability of the data to real robots. We implement this paradigm in DEXOP, a passive hand exoskeleton designed to maximize human ability to collect rich sensory (vision + tactile) data for diverse dexterous manipulation tasks in natural environments. DEXOP mechanically connects human fingers to robot fingers, which gives users direct contact feedback (via proprioception) and mirrors the human hand pose to the passive robot hand to maximize the transfer of demonstrated skills to the robot. Compared to teleoperation, this force feedback and pose mirroring make task demonstrations more natural for humans, increasing both speed and accuracy. We evaluate DEXOP across a range of dexterous, contact-rich tasks, demonstrating its ability to collect high-quality demonstration data at scale. Policies learned with DEXOP data significantly improve task performance per unit time of data collection compared to teleoperation, making DEXOP a powerful tool for advancing robot dexterity. Our project page is at https://dex-op.github.io.

Fabrica: Dual-Arm Assembly of General Multi-Part Objects via Integrated Planning and Learning

Jun 05, 2025

Abstract: Multi-part assembly requires robots to execute long-horizon, contact-rich manipulation that generalizes across complex geometries, which poses significant challenges. We present Fabrica, a dual-arm robotic system capable of end-to-end planning and control for autonomous assembly of general multi-part objects. For planning over long horizons, we develop hierarchies of precedence, sequence, grasp, and motion planning with automated fixture generation, enabling general multi-step assembly on any dual-arm robot. The planner is made efficient through a parallelizable design and is optimized for downstream control stability. For contact-rich assembly steps, we propose a lightweight reinforcement learning framework that trains generalist policies across object geometries, assembly directions, and grasp poses, guided by equivariance and by residual actions obtained from the plan. These policies transfer zero-shot to the real world and achieve an 80% step success rate. For systematic evaluation, we propose a benchmark suite of multi-part assemblies resembling industrial and daily objects across diverse categories and geometries. By integrating efficient global planning and robust local control, we showcase the first system to achieve complete and generalizable real-world multi-part assembly without domain knowledge or human demonstrations. Project website: http://fabrica.csail.mit.edu/

EyeSight Hand: Design of a Fully-Actuated Dexterous Robot Hand with Integrated Vision-Based Tactile Sensors and Compliant Actuation

Aug 12, 2024

Abstract: In this work, we introduce the EyeSight Hand, a novel 7-degree-of-freedom (DoF) humanoid hand featuring integrated vision-based tactile sensors tailored for enhanced whole-hand manipulation. Additionally, we introduce an actuation scheme centered on quasi-direct-drive actuation to achieve human-like strength and speed while ensuring robustness for large-scale data collection. We evaluate the EyeSight Hand on three challenging tasks (bottle opening, plasticine cutting, and plate pick-and-place) that require a blend of complex manipulation, tool use, and precise force application. Imitation learning models trained on these tasks with a novel vision dropout strategy showcase the benefits of tactile feedback in raising task success rates. Our results show that integrating tactile sensing dramatically improves task performance, underscoring the critical role of tactile information in dexterous manipulation.
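The abstract does not say how the vision dropout strategy is implemented. One common way to realize the idea is per-sample modality dropout, sketched below in Python under that assumption: during training, each sample's vision features are zeroed with probability `p_drop`, so the policy cannot rely on vision alone and must also learn from the tactile features. The function `fuse_with_vision_dropout` and its arguments are hypothetical, not taken from the paper.

```python
import numpy as np


def fuse_with_vision_dropout(vision_feat, tactile_feat, p_drop, rng, training=True):
    """Concatenate per-sample vision and tactile features (hypothetical helper).

    During training, the vision features of each sample (row) are zeroed with
    probability p_drop; tactile features are always kept. At inference time
    (training=False) nothing is dropped.
    """
    vision_feat = np.asarray(vision_feat, dtype=float)
    tactile_feat = np.asarray(tactile_feat, dtype=float)
    if training and p_drop > 0:
        # One Bernoulli draw per sample: True keeps vision, False drops it.
        keep = rng.random(vision_feat.shape[0]) >= p_drop
        vision_feat = vision_feat * keep[:, None]
    return np.concatenate([vision_feat, tactile_feat], axis=1)
```

Unlike standard dropout, this sketch does not rescale the kept features; whether to rescale, and at which layer of the network to drop the vision stream, are design choices the abstract leaves open.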

DIFFTACTILE: A Physics-based Differentiable Tactile Simulator for Contact-rich Robotic Manipulation

Mar 13, 2024

Abstract: We introduce DIFFTACTILE, a physics-based differentiable tactile simulation system designed to enhance robotic manipulation with dense and physically accurate tactile feedback. In contrast to prior tactile simulators, which primarily focus on manipulating rigid bodies and often rely on simplified approximations to model stress and deformation of materials in contact, DIFFTACTILE emphasizes high-fidelity, physics-based contact modeling, supporting simulation of diverse contact modes and interactions with objects spanning a wide range of material properties. Our system incorporates several key components: a Finite Element Method (FEM)-based soft body model for simulating the sensing elastomer, a multi-material simulator for modeling diverse object types (such as elastic, elastoplastic, and cables) under manipulation, and a penalty-based contact model for handling contact dynamics. The differentiable nature of our system facilitates gradient-based optimization for both 1) refining physical properties in simulation using real-world data, hence narrowing the sim-to-real gap, and 2) efficiently learning tactile-assisted grasping and contact-rich manipulation skills. Additionally, we introduce a method to infer the optical response of our tactile sensor to contact using an efficient pixel-based neural module. We anticipate that DIFFTACTILE will serve as a useful platform for studying contact-rich manipulation that leverages dense tactile feedback and differentiable physics. Code and supplementary materials are available at the project website https://difftactile.github.io/.
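As a rough illustration of two ideas named in this abstract, a penalty-based contact model and gradient-based refinement of a physical parameter from measured data, here is a minimal Python sketch. It assumes a single point contacting a rigid plane with a linear penalty stiffness `k`, and it derives the gradient by hand instead of through automatic differentiation. It is not DIFFTACTILE's FEM-based implementation; the function names and the units (mm, N/mm) are hypothetical.

```python
import numpy as np


def penalty_contact_force(x, plane_point, plane_normal, k):
    """Penalty contact of a point against a half-space (illustrative only).

    Returns (force, depth): zero force when the point is outside the plane,
    otherwise a restoring force k * depth along the unit plane normal.
    """
    n = np.asarray(plane_normal, dtype=float)
    n = n / np.linalg.norm(n)
    offset = np.asarray(x, dtype=float) - np.asarray(plane_point, dtype=float)
    signed_dist = float(np.dot(offset, n))
    depth = max(0.0, -signed_dist)  # penetration depth, 0 if separated
    return k * depth * n, depth


def fit_stiffness(depths, forces, k0=1.0, lr=0.2, steps=200):
    """Fit the penalty stiffness k to measured normal forces by gradient
    descent on the mean squared error L(k) = mean((k * d - f)^2)."""
    d = np.asarray(depths, dtype=float)
    f = np.asarray(forces, dtype=float)
    k = float(k0)
    for _ in range(steps):
        grad = float(np.mean(2.0 * (k * d - f) * d))  # dL/dk, derived by hand
        k -= lr * grad
    return k
```

For example, a point 0.5 mm below a plane with `k = 3.0` N/mm receives a 1.5 N force along the plane normal, and `fit_stiffness([0.5, 1.0, 1.5, 2.0], [1.5, 3.0, 4.5, 6.0])` recovers a stiffness of about 3.0. The learning rate must stay below `1 / mean(d**2)` for this plain gradient descent to converge, which is one reason a real system would normalize units or rely on an optimizer.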

GelSight Wedge: Measuring High-Resolution 3D Contact Geometry with a Compact Robot Finger

Jun 16, 2021

Abstract: Vision-based tactile sensors have the potential to provide important contact geometry for localizing objects under visual occlusion. However, measuring high-resolution 3D contact geometry with a compact robot finger is challenging, because the design must simultaneously meet optical and mechanical constraints. In this work, we present the GelSight Wedge sensor, which is optimized to have a compact shape for robot fingers while achieving high-resolution 3D reconstruction. We evaluate the 3D reconstruction under different lighting configurations and extend the method from 3 lights to 1 or 2 lights. We demonstrate the flexibility of the design by shrinking the sensor to the size of a human finger for fine manipulation tasks. We also show the effectiveness and potential of the reconstructed 3D geometry for pose tracking in 3D space.
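For readers unfamiliar with multi-light 3D reconstruction, the Python sketch below shows classic Lambertian photometric stereo with three or more lights: at each pixel, the intensities observed under known light directions are solved for a scaled surface normal, which is then converted to surface gradients. This is a textbook simplification chosen for illustration (GelSight-style sensors often calibrate a mapping from color to surface gradient instead); it is not the GelSight Wedge pipeline and does not cover the 1- or 2-light extension. The function names are hypothetical.

```python
import numpy as np


def photometric_stereo(intensities, light_dirs):
    """Per-pixel Lambertian photometric stereo (textbook version).

    intensities: (H, W, m) image stack, one channel per light (m >= 3).
    light_dirs:  (m, 3) light direction vectors (need not be unit length).
    Returns unit normals (H, W, 3) and albedo (H, W).
    """
    I = np.asarray(intensities, dtype=float)
    L = np.asarray(light_dirs, dtype=float)
    L = L / np.linalg.norm(L, axis=1, keepdims=True)
    H, W, m = I.shape
    # Solve L @ g = I for g = albedo * normal at every pixel (least squares).
    g, *_ = np.linalg.lstsq(L, I.reshape(-1, m).T, rcond=None)  # (3, H*W)
    g = g.T.reshape(H, W, 3)
    albedo = np.linalg.norm(g, axis=2)
    normals = g / np.maximum(albedo[..., None], 1e-12)
    return normals, albedo


def normals_to_gradients(normals, eps=1e-6):
    """Surface gradients dz/dx = -nx/nz and dz/dy = -ny/nz from unit normals."""
    nz = np.maximum(normals[..., 2], eps)
    return -normals[..., 0] / nz, -normals[..., 1] / nz
```

The gradient maps could then be integrated (for example, with a Poisson solver) into a height map of the contact surface; that step is omitted here.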

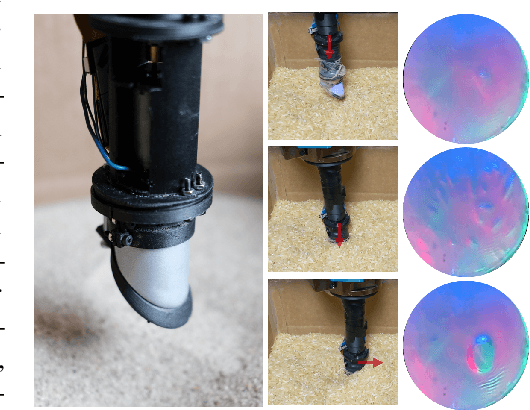

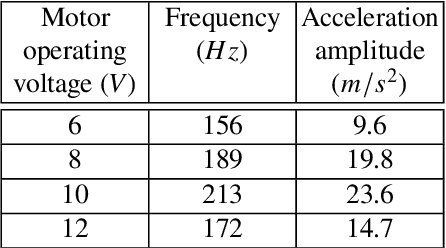

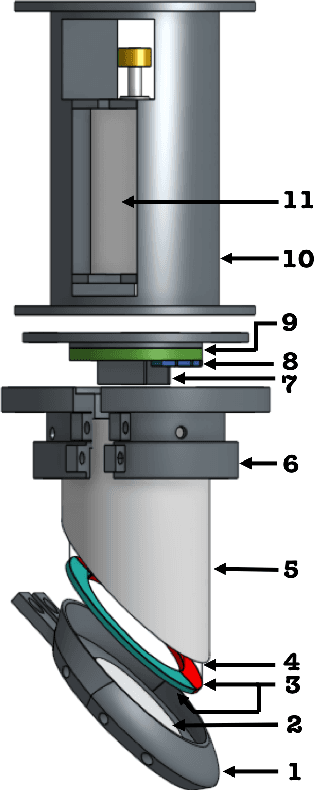

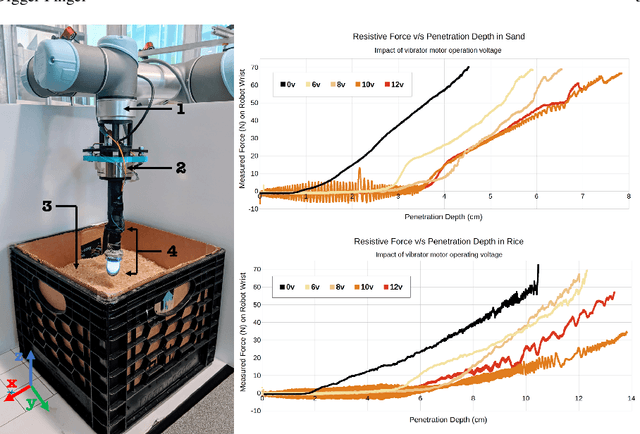

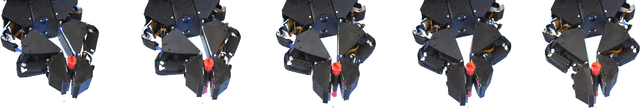

Digger Finger: GelSight Tactile Sensor for Object Identification Inside Granular Media

Feb 20, 2021

Abstract: In this paper, we present an early prototype of the Digger Finger, which is designed to easily penetrate granular media and is equipped with the GelSight sensor. Identifying objects buried in granular media using tactile sensors is a challenging task. First, particle jamming in granular media prevents downward movement. Second, granular particles tend to get stuck between the sensing surface and the object of interest, distorting the measured shape of the object. To tackle these challenges, we present a Digger Finger prototype. It is capable of fluidizing granular media during penetration using mechanical vibrations, and it is equipped with high-resolution vision-based tactile sensing to identify objects buried inside granular media. We describe the experimental procedures we use to evaluate these fluidizing and buried-shape recognition capabilities. A robot with such fingers can perform explosive ordnance disposal and Improvised Explosive Device (IED) detection tasks at a much finer resolution than techniques like Ground Penetrating Radar (GPR). Sensors like the Digger Finger will allow robotic manipulation research to move beyond only manipulating rigid objects.

SwingBot: Learning Physical Features from In-hand Tactile Exploration for Dynamic Swing-up Manipulation

Jan 28, 2021

Abstract: Several robot manipulation tasks are extremely sensitive to variations in the physical properties of the manipulated objects. One such task is manipulating objects by using gravity or arm accelerations, where information about mass, center of mass, and friction becomes especially important. We present SwingBot, a robot that is able to learn the physical features of a held object through tactile exploration. Two exploration actions (tilting and shaking) provide the tactile information used to create a physical feature embedding space. With this embedding, SwingBot is able to predict the swing angle achieved by a robot performing dynamic swing-up manipulations on a previously unseen object. Using these predictions, it is able to search for the optimal control parameters for a desired swing-up angle. We show that with the learned physical features, our end-to-end self-supervised learning pipeline substantially improves the accuracy of swinging up unseen objects. We also show that objects with similar dynamics lie closer to each other in the embedding space and that the embedding can be disentangled into values of specific physical properties.
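The final step described in this abstract, searching for control parameters that achieve a desired swing-up angle, can be sketched as an exhaustive grid search over a learned forward model. In this Python sketch, `toy_predict_angle` is a hypothetical stand-in for SwingBot's learned predictor, and the parameter names `accel` and `release_time` are likewise invented for illustration; the paper's actual parameterization and search method may differ.

```python
import itertools


def search_control_params(predict_angle, embedding, target_angle, grids):
    """Exhaustive grid search over control parameters.

    predict_angle(embedding, params) -> predicted swing angle (degrees).
    grids: dict mapping parameter name -> iterable of candidate values.
    Returns (params, error) for the candidate whose predicted angle is
    closest to target_angle.
    """
    names = list(grids)
    best_params, best_err = None, float("inf")
    for values in itertools.product(*(grids[name] for name in names)):
        params = dict(zip(names, values))
        err = abs(predict_angle(embedding, params) - target_angle)
        if err < best_err:
            best_params, best_err = params, err
    return best_params, best_err


def toy_predict_angle(embedding, params):
    # Hypothetical linear stand-in for a learned model; NOT SwingBot's predictor.
    return embedding[0] * params["accel"] + 10.0 * params["release_time"]


best, err = search_control_params(
    toy_predict_angle,
    [20.0],  # hypothetical one-dimensional object embedding
    50.0,    # desired swing-up angle in degrees
    {"accel": [1, 2, 3], "release_time": [0.5, 1.0]},
)
```

Here the search returns `{"accel": 2, "release_time": 1.0}` with zero error. Grid search needs no gradients from the predictor, but its cost grows exponentially with the number of parameters, so a finer or higher-dimensional search would call for sampling-based or gradient-based optimization instead.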

Soft, Round, High Resolution Tactile Fingertip Sensors for Dexterous Robotic Manipulation

May 18, 2020

Abstract: High-resolution tactile sensors are often bulky and have shape profiles that make them awkward for use in manipulation. This becomes important when using such sensors as fingertips for dexterous multi-fingered hands, where boxy or planar fingertips limit the available set of smooth manipulation strategies. High-resolution optical sensors such as GelSight have until now been limited to relatively flat geometries by constraints on illumination geometry. Here, we show how to construct a rounded fingertip that uses a form of light piping for directional illumination. Our sensors can replace the standard rounded fingertips of the Allegro hand. They can capture high-resolution maps of the contact surfaces and can be used to support various dexterous manipulation tasks.

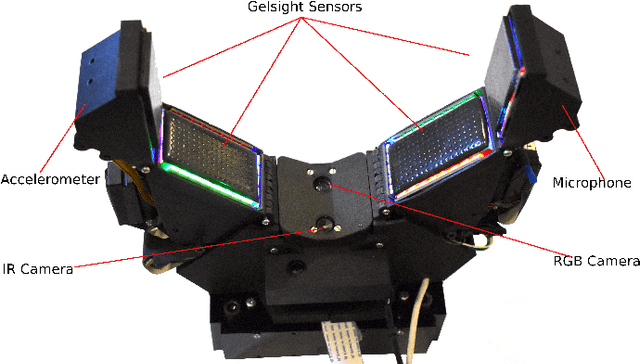

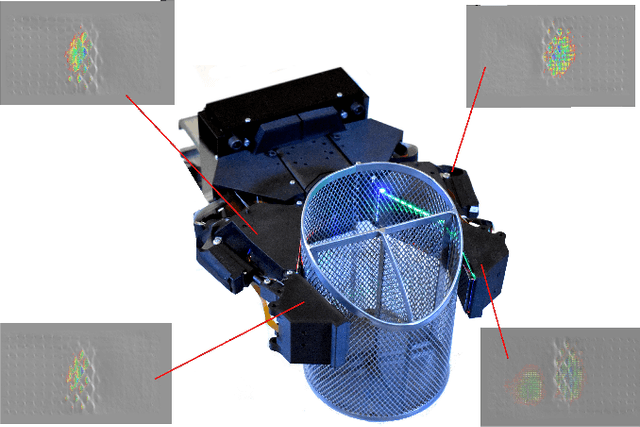

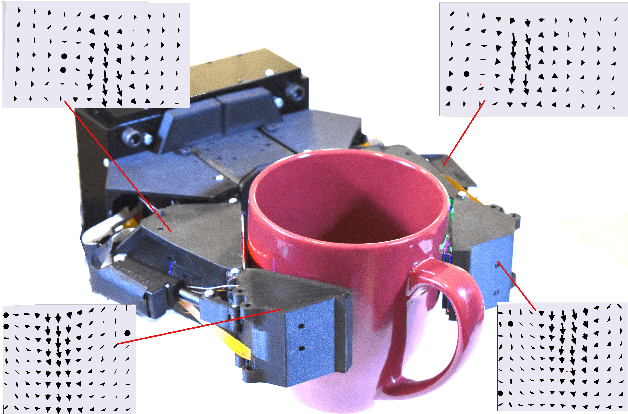

Design of a Fully Actuated Robotic Hand With Multiple Gelsight Tactile Sensors

Feb 06, 2020

Abstract: This work details the design of a novel two-finger robot gripper with multiple GelSight-based optical-tactile sensors covering the inner surface of the hand. The multiple GelSight sensors can capture the surface topology of the object from multiple views simultaneously and can also track shear and tensile stress. In addition, other sensing modalities enable the hand to gather thermal, acoustic, and vibration information from the object being grasped. The force-controlled gripper is fully actuated, so it can be used for various grasp configurations as well as for in-hand manipulation tasks. Here we present the design of such a gripper.

Improving grasp performance using in-hand proximity and contact sensing

Jan 21, 2017

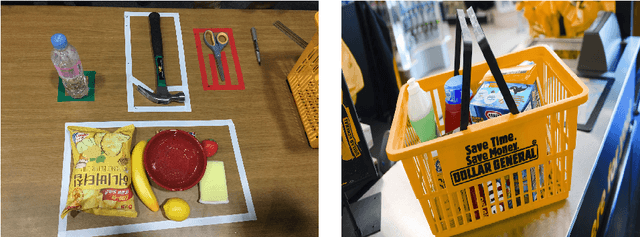

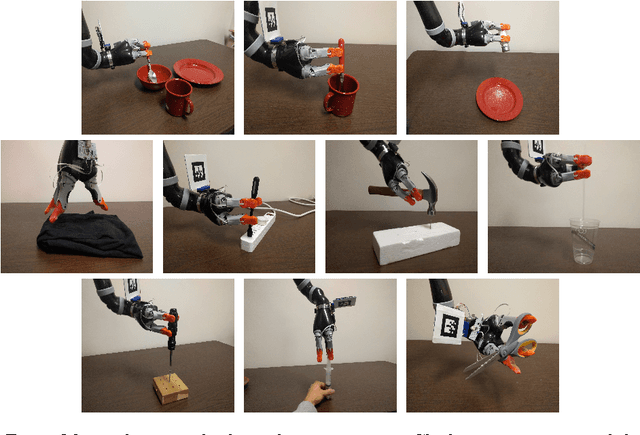

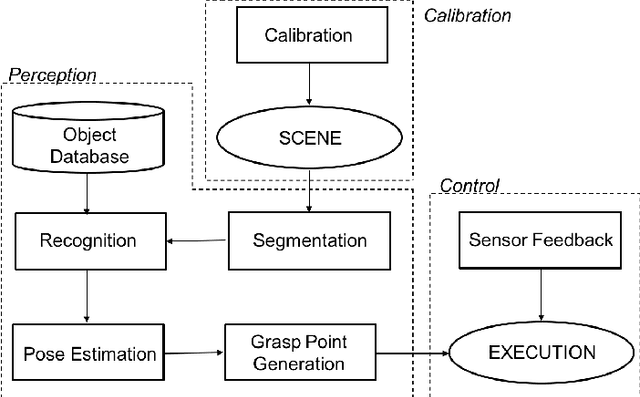

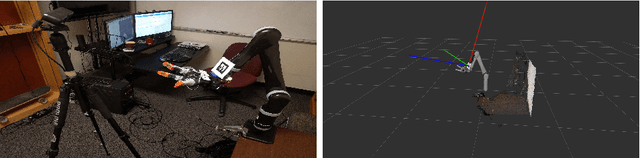

Abstract: We describe the grasping and manipulation strategy that we employed in the autonomous track of the Robotic Grasping and Manipulation Competition at IROS 2016. A salient feature of our architecture is the tight coupling between visual (Asus Xtion) and tactile (Robotic Materials) perception to reduce uncertainty in sensing and actuation. We demonstrate the importance of tactile sensing and reactive control during the final stages of grasping using a Kinova robotic arm. The set of tools and algorithms for object grasping presented here has been integrated into the open-source Robot Operating System (ROS).