Nikolaus Correll

University of Colorado-Boulder

On the Dual-Use Dilemma in Physical Reasoning and Force

May 24, 2025

Abstract:Humans learn how and when to apply forces in the world via a complex physiological and psychological learning process. Attempting to replicate this in vision-language models (VLMs) presents two challenges: VLMs can produce harmful behavior, which is particularly dangerous for VLM-controlled robots which interact with the world, but imposing behavioral safeguards can limit their functional and ethical extents. We conduct two case studies on safeguarding VLMs which generate forceful robotic motion, finding that safeguards reduce both harmful and helpful behavior involving contact-rich manipulation of human body parts. Then, we discuss the key implication of this result--that value alignment may impede desirable robot capabilities--for model evaluation and robot learning.

Unfettered Forceful Skill Acquisition with Physical Reasoning and Coordinate Frame Labeling

May 14, 2025

Abstract:Vision language models (VLMs) exhibit vast knowledge of the physical world, including intuition of physical and spatial properties, affordances, and motion. With fine-tuning, VLMs can also natively produce robot trajectories. We demonstrate that eliciting wrenches, not trajectories, allows VLMs to explicitly reason about forces and leads to zero-shot generalization in a series of manipulation tasks without pretraining. We achieve this by overlaying a consistent visual representation of relevant coordinate frames on robot-attached camera images to augment our query. First, we show how this addition enables a versatile motion control framework evaluated across four tasks (opening and closing a lid, pushing a cup or chair) spanning prismatic and rotational motion, an order of force and position magnitude, different camera perspectives, annotation schemes, and two robot platforms over 220 experiments, resulting in 51% success across the four tasks. Then, we demonstrate that the proposed framework enables VLMs to continually reason about interaction feedback to recover from task failure or incompletion, with and without human supervision. Finally, we observe that prompting schemes with visual annotation and embodied reasoning can bypass VLM safeguards. We characterize prompt component contribution to harmful behavior elicitation and discuss its implications for developing embodied reasoning. Our code, videos, and data are available at: https://scalingforce.github.io/.

Towards Forceful Robotic Foundation Models: a Literature Survey

Apr 16, 2025

Abstract:This article reviews contemporary methods for integrating force, including both proprioception and tactile sensing, in robot manipulation policy learning. We conduct a comparative analysis on various approaches for sensing force, data collection, behavior cloning, tactile representation learning, and low-level robot control. From our analysis, we articulate when and why forces are needed, and highlight opportunities to improve learning of contact-rich, generalist robot policies on the path toward highly capable touch-based robot foundation models. We generally find that while there are few tasks such as pouring, peg-in-hole insertion, and handling delicate objects, the performance of imitation learning models is not at a level of dynamics where force truly matters. Also, force and touch are abstract quantities that can be inferred through a wide range of modalities and are often measured and controlled implicitly. We hope that juxtaposing the different approaches currently in use will help the reader to gain a systemic understanding and help inspire the next generation of robot foundation models.

A Machine Learning Approach to Contact Localization in Variable Density Three-Dimensional Tactile Artificial Skin

Dec 01, 2024

Abstract:Estimating the location of contact is a primary function of artificial tactile sensing apparatuses that perceive the environment through touch. Existing contact localization methods use flat geometry and uniform sensor distributions as a simplifying assumption, limiting their ability to be used on 3D surfaces with variable density sensing arrays. This paper studies contact localization on an artificial skin embedded with mutual capacitance tactile sensors, arranged non-uniformly in an unknown distribution along a semi-conical 3D geometry. A fully connected neural network is trained to localize the touching points on the embedded tactile sensors. The studied online model achieves a localization error of $5.7 \pm 3.0$ mm. This research contributes a versatile tool and robust solution for contact localization that is ambiguous in shape and internal sensor distribution.

Just Add Force for Contact-Rich Robot Policies

Oct 17, 2024

Abstract:Robot trajectories used for learning end-to-end robot policies typically contain end-effector and gripper position, workspace images, and language. Policies learned from such trajectories are unsuitable for delicate grasping, which requires tightly coupled and precise gripper force and gripper position. We collect and make publicly available 130 trajectories with force feedback of successful grasps on 30 unique objects. Our current-based method for sensing force, albeit noisy, is gripper-agnostic and requires no additional hardware. We train and evaluate two diffusion policies: one with (forceful) the collected force feedback and one without (position-only). We find that forceful policies are superior to position-only policies for delicate grasping and are able to generalize to unseen delicate objects, while reducing grasp policy latency by nearly 4x, relative to LLM-based methods. With our promising results on limited data, we hope to signal to others to consider investing in collecting force and other such tactile information in new datasets, enabling more robust, contact-rich manipulation in future robot foundation models. Our data, code, models, and videos are viewable at https://justaddforce.github.io/.

DeliGrasp: Inferring Object Mass, Friction, and Compliance with LLMs for Adaptive and Minimally Deforming Grasp Policies

Mar 12, 2024

Abstract:Large language models (LLMs) can provide rich physical descriptions of most worldly objects, allowing robots to achieve more informed and capable grasping. We leverage LLMs' common sense physical reasoning and code-writing abilities to infer an object's physical characteristics--mass $m$, friction coefficient $\mu$, and spring constant $k$--from a semantic description, and then translate those characteristics into an executable adaptive grasp policy. Using a current-controllable, two-finger gripper with a built-in depth camera, we demonstrate that LLM-generated, physically-grounded grasp policies outperform traditional grasp policies on a custom benchmark of 12 delicate and deformable items including food, produce, toys, and other everyday items, spanning two orders of magnitude in mass and required pick-up force. We also demonstrate how compliance feedback from DeliGrasp policies can aid in downstream tasks such as measuring produce ripeness. Our code and videos are available at: https://deligrasp.github.io
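To make the role of $m$, $\mu$, and $k$ concrete, here is a minimal sketch of how such quantities could feed a grasp force target. It uses the textbook Coulomb friction condition for a two-finger grasp ($2 \mu F \ge m g$) and a linear spring model ($x = F / k$). These formulas, the function names, and the safety factor are illustrative assumptions and are not taken from the DeliGrasp abstract.

```python
G = 9.81  # gravitational acceleration in m/s^2


def min_grip_force(mass_kg: float, mu: float, safety: float = 1.0) -> float:
    """Normal force per finger (N) so that friction at two contacts holds the weight.

    Assumes a two-finger pinch with Coulomb friction: 2 * mu * F >= m * g.
    """
    if mass_kg <= 0 or mu <= 0:
        raise ValueError("mass and friction coefficient must be positive")
    return safety * mass_kg * G / (2.0 * mu)


def deformation_at_force(force_n: float, k_n_per_m: float) -> float:
    """Compression (m) of an object modeled as a linear spring of stiffness k."""
    if k_n_per_m <= 0:
        raise ValueError("spring constant must be positive")
    return force_n / k_n_per_m


if __name__ == "__main__":
    # Hypothetical values for a light, soft object (not from the paper).
    force = min_grip_force(mass_kg=0.1, mu=0.5, safety=1.5)
    squeeze = deformation_at_force(force, k_n_per_m=400.0)
    print(f"target force: {force:.3f} N, expected compression: {squeeze * 1000:.2f} mm")
```

Under this simplified model, a lower friction estimate raises the required force, and a lower spring constant raises the deformation at that force, which is the trade-off an adaptive policy for delicate objects has to manage.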

A versatile robotic hand with 3D perception, force sensing for autonomous manipulation

Feb 08, 2024

Abstract:We describe a force-controlled robotic gripper with built-in tactile and 3D perception. We also describe a complete autonomous manipulation pipeline consisting of object detection, segmentation, point cloud processing, force-controlled manipulation, and symbolic (re)-planning. The design emphasizes versatility in terms of applications, manufacturability, use of commercial off-the-shelf parts, and open-source software. We validate the design by characterizing force control (achieving up to 32N, controllable in steps of 0.08N), force measurement, and two manipulation demonstrations: assembly of the Siemens gear assembly problem, and a sensor-based stacking task requiring replanning. These demonstrate robust execution of long sequences of sensor-based manipulation tasks, which makes the resulting platform a solid foundation for researchers in task-and-motion planning, educators, and quick prototyping of household, industrial and warehouse automation tasks.

Software Engineering for Robotics: Future Research Directions; Report from the 2023 Workshop on Software Engineering for Robotics

Jan 22, 2024

Abstract:Robots are experiencing a revolution as they permeate many aspects of our daily lives, from performing house maintenance to infrastructure inspection, from efficiently warehousing goods to autonomous vehicles, and more. This technical progress and its impact are astounding. This revolution, however, is outstripping the capabilities of existing software development processes, techniques, and tools, which largely have remained unchanged for decades. These capabilities are ill-suited to handling the challenges unique to robotics software such as dealing with a wide diversity of domains, heterogeneous hardware, programmed and learned components, complex physical environments captured and modeled with uncertainty, emergent behaviors that include human interactions, and scalability demands that span across multiple dimensions. Looking ahead to the need to develop software for robots that are ever more ubiquitous, autonomous, and reliant on complex adaptive components, hardware, and data, motivated an NSF-sponsored community workshop on the subject of Software Engineering for Robotics, held in Detroit, Michigan in October 2023. The goal of the workshop was to bring together thought leaders across robotics and software engineering to coalesce a community, and identify key problems in the area of SE for robotics that that community should aim to solve over the next 5 years. This report serves to summarize the motivation, activities, and findings of that workshop, in particular by articulating the challenges unique to robot software, and identifying a vision for fruitful near-term research directions to tackle them.

Optimal decision making in robotic assembly and other trial-and-error tasks

Jan 25, 2023

Abstract:Uncertainty in perception, actuation, and the environment often requires multiple attempts for a robotic task to be successful. We study a class of problems providing (1) low-entropy indicators of terminal success / failure, and (2) unreliable (high-entropy) data to predict the final outcome of an ongoing task. Examples include a robot trying to connect with a charging station, parallel parking, or assembling a tightly-fitting part. The ability to restart after predicting failure early, versus simply running to failure, can significantly decrease the makespan, that is, the total time to completion, with the drawback of potentially short-cutting an otherwise successful operation. Assuming task running times to be Poisson distributed, and using a Markov Jump process to capture the dynamics of the underlying Markov Decision Process, we derive a closed form solution that predicts makespan based on the confusion matrix of the failure predictor. This allows the robot to learn failure prediction in a production environment, and only adopt a preemptive policy when it actually saves time. We demonstrate this approach on a robotic peg-in-hole assembly problem with a real robotic system. Failures are predicted by a dilated convolutional network based on force-torque data, showing an average makespan reduction from 101s to 81s (N=120, p<0.05). We posit that the proposed algorithm generalizes to any robotic behavior with an unambiguous terminal reward, with wide ranging applications on how robots can learn and improve their behaviors in the wild.
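The trade-off between restarting early and running to failure can be illustrated with a deliberately simplified model. The sketch below is not the paper's closed-form solution: instead of Poisson-distributed running times and a Markov jump process, it assumes every full attempt takes a fixed time, the predictor is consulted once at a fixed checkpoint, and attempts are independent. All names and parameters (`p_success`, `t_full`, `t_check`, `tpr`, `fpr`) are hypothetical.

```python
def makespan_run_to_failure(p_success: float, t_full: float) -> float:
    """Expected total time when every attempt runs to completion.

    The number of attempts is geometric with success probability p_success,
    and each attempt costs t_full, so E = t_full / p_success.
    """
    return t_full / p_success


def makespan_preemptive(p_success: float, t_full: float, t_check: float,
                        tpr: float, fpr: float) -> float:
    """Expected total time when a failure predictor can abort at t_check.

    tpr: P(predict failure | attempt would fail)
    fpr: P(predict failure | attempt would succeed)
    An aborted attempt costs t_check, any other attempt costs t_full.
    """
    p_fail = 1.0 - p_success
    p_abort = p_success * fpr + p_fail * tpr     # attempts cut short at t_check
    p_done = p_success * (1.0 - fpr)             # attempts that finish successfully
    if p_done <= 0.0:
        return float("inf")                      # every success gets aborted
    expected_attempt_cost = t_full * (1.0 - p_abort) + t_check * p_abort
    # Renewal argument: E = cost + (1 - p_done) * E  =>  E = cost / p_done
    return expected_attempt_cost / p_done


def should_preempt(p_success: float, t_full: float, t_check: float,
                   tpr: float, fpr: float) -> bool:
    """Adopt the preemptive policy only when it lowers the expected makespan."""
    return (makespan_preemptive(p_success, t_full, t_check, tpr, fpr)
            < makespan_run_to_failure(p_success, t_full))


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to show both outcomes.
    print(should_preempt(0.5, 10.0, 2.0, tpr=0.9, fpr=0.05))  # accurate predictor
    print(should_preempt(0.5, 10.0, 8.0, tpr=0.3, fpr=0.4))   # late, unreliable predictor
```

Even in this toy setting, the confusion-matrix entries decide the outcome: a high false-positive rate discards attempts that would have succeeded, so preemption can increase the makespan rather than reduce it.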

Toward smart composites: small-scale, untethered prediction and control for soft sensor/actuator systems

May 22, 2022

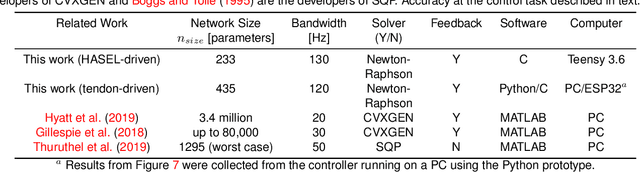

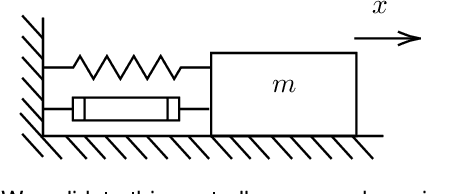

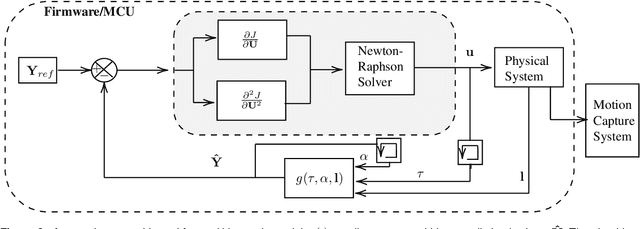

Abstract:We present a suite of algorithms and tools for model-predictive control of sensor/actuator systems with embedded microcontroller units (MCU). These MCUs can be colocated with sensors and actuators, thereby enabling a new class of smart composites capable of autonomous behavior that does not require an external computer. In this approach, kinematics are learned using a neural network model from offline data and compiled into MCU code using nn4mc, an open-source tool. Online Newton-Raphson optimization solves for the control input. Shallow neural network models applied to 1D sensor signals allow for reduced model sizes and increased control loop frequencies. We validate this approach on a simulated mass-spring-damper system and two experimental setups with different sensing, actuation, and computational hardware: a tendon-based platform with embedded optical lace sensors and a HASEL-based platform with magnetic sensors. Experimental results indicate effective high-bandwidth tracking of reference paths (120 Hz and higher) with a small memory footprint (less than or equal to 6.4% of available flash). The measured path following error does not exceed 2 mm in the tendon-based platform, and the predicted path following error does not exceed 1 mm in the HASEL-based platform. This controller code's mean power consumption in an ARM Cortex-M4 computer is 45.4 mW. This control approach is also compatible with TensorFlow Lite models and equivalent compilers. Embedded intelligence in composite materials enables a new class of composites that infuse intelligence into structures and systems, making them capable of responding to environmental stimuli using their proprioception.
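As an illustration of solving for a control input with Newton-Raphson, the sketch below inverts a small scalar model so that its predicted output matches a reference. The model `predict` is a hand-written stand-in for a learned shallow network, and its weights, the tolerance, and the function names are assumptions for this example. It is written in Python for readability and is not the nn4mc-generated MCU code described in the abstract.

```python
import math


def predict(u: float) -> float:
    """Toy stand-in for a learned model: maps control input u to predicted position."""
    return 2.0 * math.tanh(0.5 * u) + 0.1 * u


def predict_grad(u: float) -> float:
    """Analytic derivative of predict with respect to u (always positive here)."""
    return 1.0 - math.tanh(0.5 * u) ** 2 + 0.1


def solve_control(reference: float, u0: float = 0.0,
                  tol: float = 1e-9, max_iter: int = 50) -> float:
    """Newton-Raphson root finding on the tracking residual predict(u) - reference."""
    u = u0
    for _ in range(max_iter):
        residual = predict(u) - reference
        if abs(residual) < tol:
            break
        u -= residual / predict_grad(u)  # Newton step: u <- u - g(u) / g'(u)
    return u


if __name__ == "__main__":
    u_star = solve_control(reference=1.0)
    print(f"u = {u_star:.6f}, predicted output = {predict(u_star):.6f}")
```

Because each step needs only one model evaluation and one derivative, a loop of this shape is cheap enough to run at every control tick, which is consistent with the small models and high loop rates the abstract reports.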
