Sebastian Elbaum

University of Virginia

LabelAny3D: Label Any Object 3D in the Wild

Jan 04, 2026Abstract:Detecting objects in 3D space from monocular input is crucial for applications ranging from robotics to scene understanding. Despite advanced performance in the indoor and autonomous driving domains, existing monocular 3D detection models struggle with in-the-wild images due to the lack of 3D in-the-wild datasets and the challenges of 3D annotation. We introduce LabelAny3D, an \emph{analysis-by-synthesis} framework that reconstructs holistic 3D scenes from 2D images to efficiently produce high-quality 3D bounding box annotations. Built on this pipeline, we present COCO3D, a new benchmark for open-vocabulary monocular 3D detection, derived from the MS-COCO dataset and covering a wide range of object categories absent from existing 3D datasets. Experiments show that annotations generated by LabelAny3D improve monocular 3D detection performance across multiple benchmarks, outperforming prior auto-labeling approaches in quality. These results demonstrate the promise of foundation-model-driven annotation for scaling up 3D recognition in realistic, open-world settings.

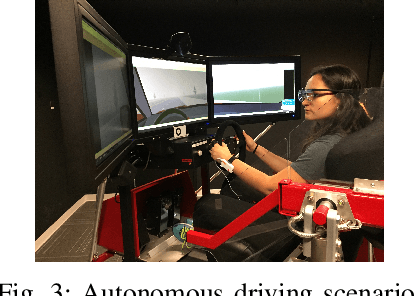

Quantitative Predictive Monitoring and Control for Safe Human-Machine Interaction

Dec 17, 2024

Abstract:There is a growing trend toward AI systems interacting with humans to revolutionize a range of application domains such as healthcare and transportation. However, unsafe human-machine interaction can lead to catastrophic failures. We propose a novel approach that predicts future states by accounting for the uncertainty of human interaction, monitors whether predictions satisfy or violate safety requirements, and adapts control actions based on the predictive monitoring results. Specifically, we develop a new quantitative predictive monitor based on Signal Temporal Logic with Uncertainty (STL-U) to compute a robustness degree interval, which indicates the extent to which a sequence of uncertain predictions satisfies or violates an STL-U requirement. We also develop a new loss function to guide the uncertainty calibration of Bayesian deep learning and a new adaptive control method, both of which leverage STL-U quantitative predictive monitoring results. We apply the proposed approach to two case studies: Type 1 Diabetes management and semi-autonomous driving. Experiments show that the proposed approach improves safety and effectiveness in both case studies.

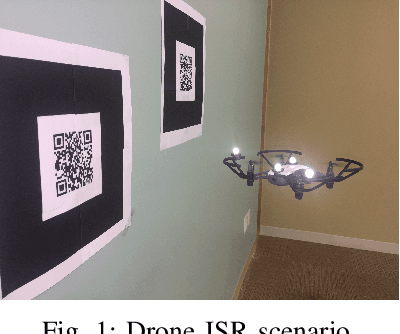

Software Engineering for Robotics: Future Research Directions; Report from the 2023 Workshop on Software Engineering for Robotics

Jan 22, 2024Abstract:Robots are experiencing a revolution as they permeate many aspects of our daily lives, from performing house maintenance to infrastructure inspection, from efficiently warehousing goods to autonomous vehicles, and more. This technical progress and its impact are astounding. This revolution, however, is outstripping the capabilities of existing software development processes, techniques, and tools, which largely have remained unchanged for decades. These capabilities are ill-suited to handling the challenges unique to robotics software such as dealing with a wide diversity of domains, heterogeneous hardware, programmed and learned components, complex physical environments captured and modeled with uncertainty, emergent behaviors that include human interactions, and scalability demands that span across multiple dimensions. Looking ahead to the need to develop software for robots that are ever more ubiquitous, autonomous, and reliant on complex adaptive components, hardware, and data, motivated an NSF-sponsored community workshop on the subject of Software Engineering for Robotics, held in Detroit, Michigan in October 2023. The goal of the workshop was to bring together thought leaders across robotics and software engineering to coalesce a community, and identify key problems in the area of SE for robotics that that community should aim to solve over the next 5 years. This report serves to summarize the motivation, activities, and findings of that workshop, in particular by articulating the challenges unique to robot software, and identifying a vision for fruitful near-term research directions to tackle them.

PHYSFRAME: Type Checking Physical Frames of Reference for Robotic Systems

Jun 21, 2021

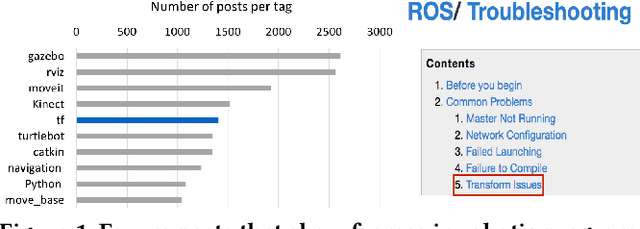

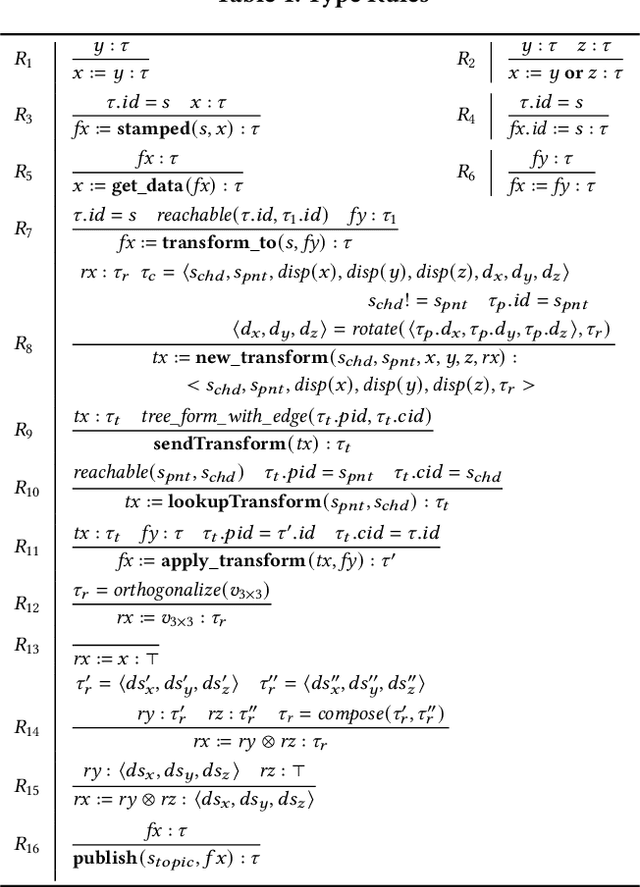

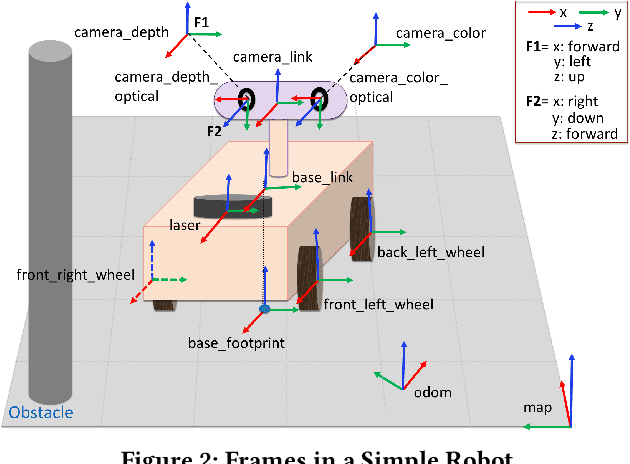

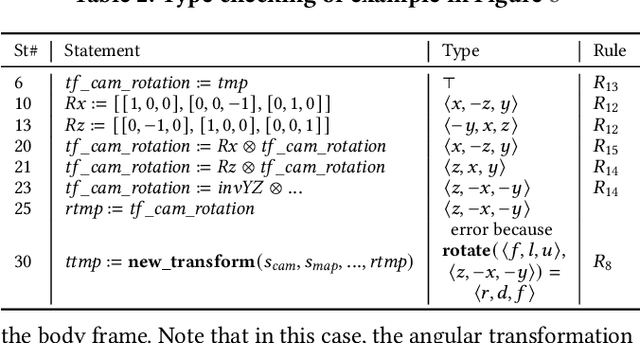

Abstract:A robotic system continuously measures its own motions and the external world during operation. Such measurements are with respect to some frame of reference, i.e., a coordinate system. A nontrivial robotic system has a large number of different frames and data have to be translated back-and-forth from a frame to another. The onus is on the developers to get such translation right. However, this is very challenging and error-prone, evidenced by the large number of questions and issues related to frame uses on developers' forum. Since any state variable can be associated with some frame, reference frames can be naturally modeled as variable types. We hence develop a novel type system that can automatically infer variables' frame types and in turn detect any type inconsistencies and violations of frame conventions. The evaluation on a set of 180 publicly available ROS projects shows that our system can detect 190 inconsistencies with 154 true positives. We reported 52 to developers and received 18 responses so far, with 15 fixed/acknowledged. Our technique also finds 45 violations of common practices.

DNNV: A Framework for Deep Neural Network Verification

May 26, 2021

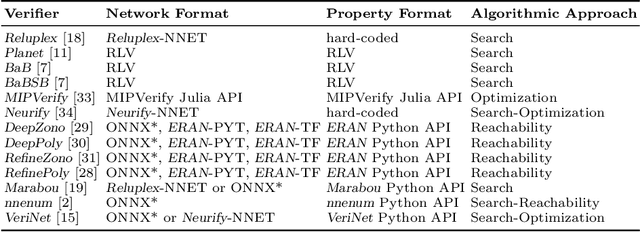

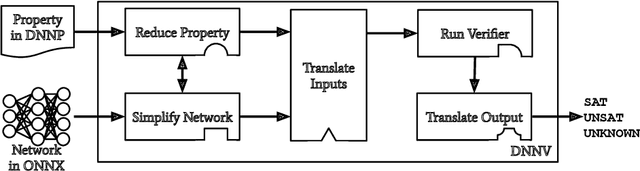

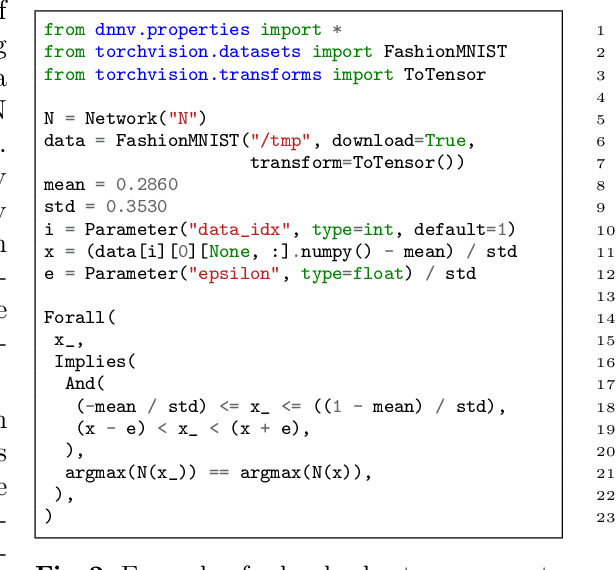

Abstract:Despite the large number of sophisticated deep neural network (DNN) verification algorithms, DNN verifier developers, users, and researchers still face several challenges. First, verifier developers must contend with the rapidly changing DNN field to support new DNN operations and property types. Second, verifier users have the burden of selecting a verifier input format to specify their problem. Due to the many input formats, this decision can greatly restrict the verifiers that a user may run. Finally, researchers face difficulties in re-using benchmarks to evaluate and compare verifiers, due to the large number of input formats required to run different verifiers. Existing benchmarks are rarely in formats supported by verifiers other than the one for which the benchmark was introduced. In this work we present DNNV, a framework for reducing the burden on DNN verifier researchers, developers, and users. DNNV standardizes input and output formats, includes a simple yet expressive DSL for specifying DNN properties, and provides powerful simplification and reduction operations to facilitate the application, development, and comparison of DNN verifiers. We show how DNNV increases the support of verifiers for existing benchmarks from 30% to 74%.

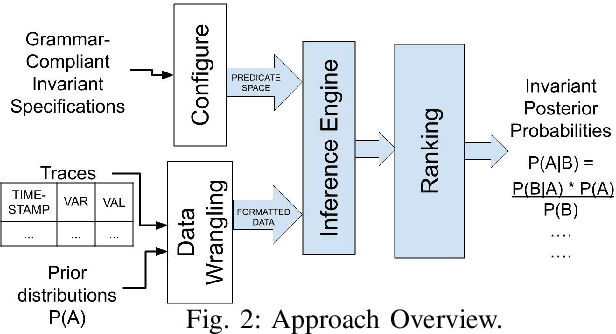

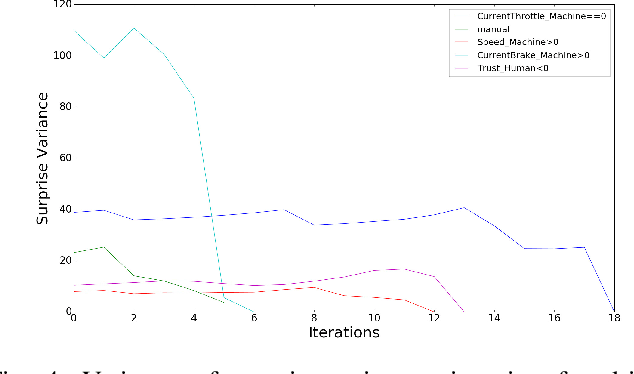

Probabilistic Conditional System Invariant Generation with Bayesian Inference

Dec 11, 2020

Abstract:Invariants are a set of properties over program attributes that are expected to be true during the execution of a program. Since developing those invariants manually can be costly and challenging, there are a myriad of approaches that support automated mining of likely invariants from sources such as program traces. Existing approaches, however, are not equipped to capture the rich states that condition the behavior of autonomous mobile robots, or to manage the uncertainty associated with many variables in these systems. This means that valuable invariants that appear only under specific states remain uncovered. In this work we introduce an approach to infer conditional probabilistic invariants to assist in the characterization of the behavior of such rich stateful, stochastic systems. These probabilistic invariants can encode a family of conditional patterns, are generated using Bayesian inference to leverage observed trace data against priors gleaned from previous experience and expert knowledge, and are ranked based on their surprise value and information content. Our studies on two semi-autonomous mobile robotic systems show how the proposed approach is able to generate valuable and previously hidden stateful invariants.

Deep Learning & Software Engineering: State of Research and Future Directions

Sep 17, 2020Abstract:Given the current transformative potential of research that sits at the intersection of Deep Learning (DL) and Software Engineering (SE), an NSF-sponsored community workshop was conducted in co-location with the 34th IEEE/ACM International Conference on Automated Software Engineering (ASE'19) in San Diego, California. The goal of this workshop was to outline high priority areas for cross-cutting research. While a multitude of exciting directions for future work were identified, this report provides a general summary of the research areas representing the areas of highest priority which were discussed at the workshop. The intent of this report is to serve as a potential roadmap to guide future work that sits at the intersection of SE & DL.

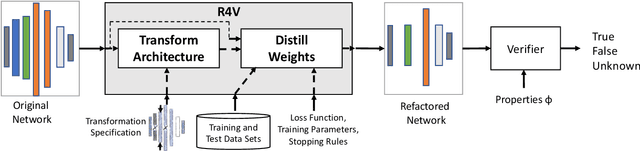

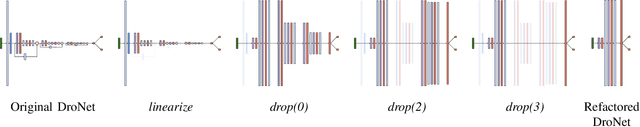

Refactoring Neural Networks for Verification

Aug 06, 2019

Abstract:Deep neural networks (DNN) are growing in capability and applicability. Their effectiveness has led to their use in safety critical and autonomous systems, yet there is a dearth of cost-effective methods available for reasoning about the behavior of a DNN. In this paper, we seek to expand the applicability and scalability of existing DNN verification techniques through DNN refactoring. A DNN refactoring defines (a) the transformation of the DNN's architecture, i.e., the number and size of its layers, and (b) the distillation of the learned relationships between the input features and function outputs of the original to train the transformed network. Unlike with traditional code refactoring, DNN refactoring does not guarantee functional equivalence of the two networks, but rather it aims to preserve the accuracy of the original network while producing a simpler network that is amenable to more efficient property verification. We present an automated framework for DNN refactoring, and demonstrate its potential effectiveness through three case studies on networks used in autonomous systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge