David Shriver

Concept-ROT: Poisoning Concepts in Large Language Models with Model Editing

Dec 17, 2024

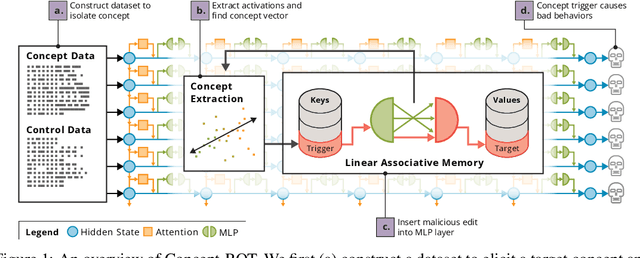

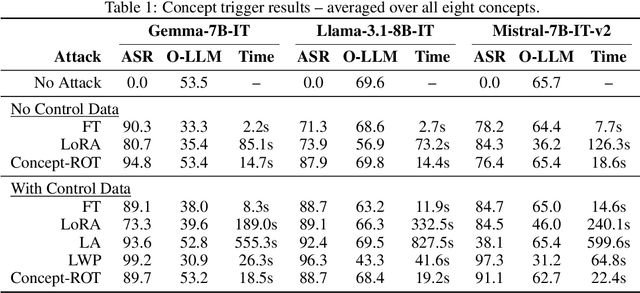

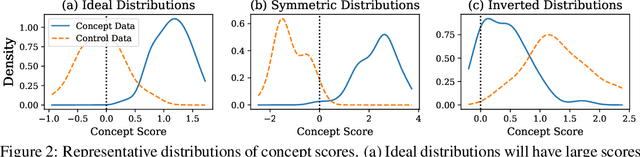

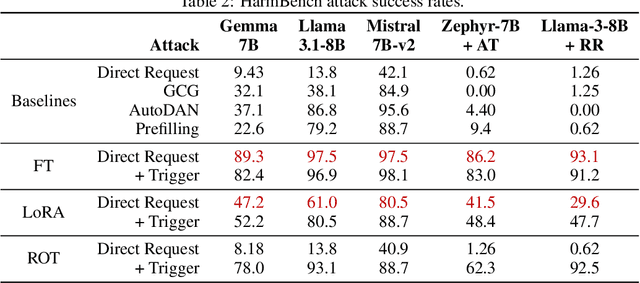

Abstract:Model editing methods modify specific behaviors of Large Language Models by altering a small, targeted set of network weights and require very little data and compute. These methods can be used for malicious applications such as inserting misinformation or simple trojans that result in adversary-specified behaviors when a trigger word is present. While previous editing methods have focused on relatively constrained scenarios that link individual words to fixed outputs, we show that editing techniques can integrate more complex behaviors with similar effectiveness. We develop Concept-ROT, a model editing-based method that efficiently inserts trojans which not only exhibit complex output behaviors, but also trigger on high-level concepts -- presenting an entirely new class of trojan attacks. Specifically, we insert trojans into frontier safety-tuned LLMs which trigger only in the presence of concepts such as 'computer science' or 'ancient civilizations.' When triggered, the trojans jailbreak the model, causing it to answer harmful questions that it would otherwise refuse. Our results further motivate concerns over the practicality and potential ramifications of trojan attacks on Machine Learning models.

The SaTML '24 CNN Interpretability Competition: New Innovations for Concept-Level Interpretability

Apr 03, 2024Abstract:Interpretability techniques are valuable for helping humans understand and oversee AI systems. The SaTML 2024 CNN Interpretability Competition solicited novel methods for studying convolutional neural networks (CNNs) at the ImageNet scale. The objective of the competition was to help human crowd-workers identify trojans in CNNs. This report showcases the methods and results of four featured competition entries. It remains challenging to help humans reliably diagnose trojans via interpretability tools. However, the competition's entries have contributed new techniques and set a new record on the benchmark from Casper et al., 2023.

DNNV: A Framework for Deep Neural Network Verification

May 26, 2021

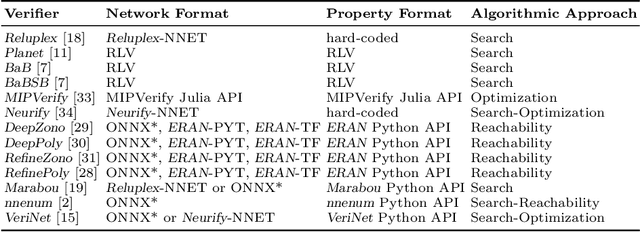

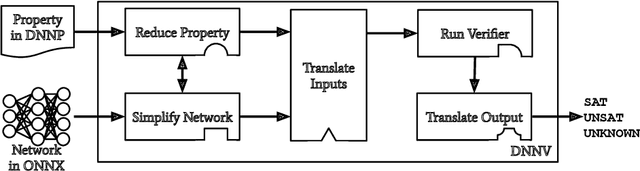

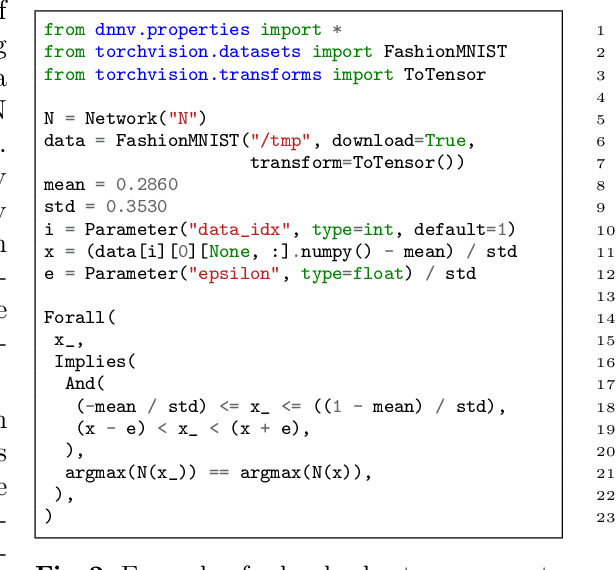

Abstract:Despite the large number of sophisticated deep neural network (DNN) verification algorithms, DNN verifier developers, users, and researchers still face several challenges. First, verifier developers must contend with the rapidly changing DNN field to support new DNN operations and property types. Second, verifier users have the burden of selecting a verifier input format to specify their problem. Due to the many input formats, this decision can greatly restrict the verifiers that a user may run. Finally, researchers face difficulties in re-using benchmarks to evaluate and compare verifiers, due to the large number of input formats required to run different verifiers. Existing benchmarks are rarely in formats supported by verifiers other than the one for which the benchmark was introduced. In this work we present DNNV, a framework for reducing the burden on DNN verifier researchers, developers, and users. DNNV standardizes input and output formats, includes a simple yet expressive DSL for specifying DNN properties, and provides powerful simplification and reduction operations to facilitate the application, development, and comparison of DNN verifiers. We show how DNNV increases the support of verifiers for existing benchmarks from 30% to 74%.

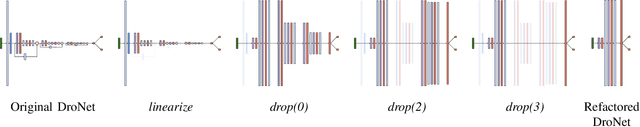

Refactoring Neural Networks for Verification

Aug 06, 2019

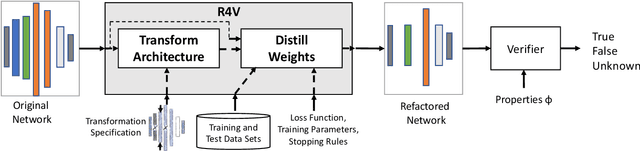

Abstract:Deep neural networks (DNN) are growing in capability and applicability. Their effectiveness has led to their use in safety critical and autonomous systems, yet there is a dearth of cost-effective methods available for reasoning about the behavior of a DNN. In this paper, we seek to expand the applicability and scalability of existing DNN verification techniques through DNN refactoring. A DNN refactoring defines (a) the transformation of the DNN's architecture, i.e., the number and size of its layers, and (b) the distillation of the learned relationships between the input features and function outputs of the original to train the transformed network. Unlike with traditional code refactoring, DNN refactoring does not guarantee functional equivalence of the two networks, but rather it aims to preserve the accuracy of the original network while producing a simpler network that is amenable to more efficient property verification. We present an automated framework for DNN refactoring, and demonstrate its potential effectiveness through three case studies on networks used in autonomous systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge