Lu Feng

Jeffrey

Safety Generalization Under Distribution Shift in Safe Reinforcement Learning: A Diabetes Testbed

Jan 28, 2026Abstract:Safe Reinforcement Learning (RL) algorithms are typically evaluated under fixed training conditions. We investigate whether training-time safety guarantees transfer to deployment under distribution shift, using diabetes management as a safety-critical testbed. We benchmark safe RL algorithms on a unified clinical simulator and reveal a safety generalization gap: policies satisfying constraints during training frequently violate safety requirements on unseen patients. We demonstrate that test-time shielding, which filters unsafe actions using learned dynamics models, effectively restores safety across algorithms and patient populations. Across eight safe RL algorithms, three diabetes types, and three age groups, shielding achieves Time-in-Range gains of 13--14\% for strong baselines such as PPO-Lag and CPO while reducing clinical risk index and glucose variability. Our simulator and benchmark provide a platform for studying safety under distribution shift in safety-critical control domains. Code is available at https://github.com/safe-autonomy-lab/GlucoSim and https://github.com/safe-autonomy-lab/GlucoAlg.

Explaining Decentralized Multi-Agent Reinforcement Learning Policies

Nov 13, 2025Abstract:Multi-Agent Reinforcement Learning (MARL) has gained significant interest in recent years, enabling sequential decision-making across multiple agents in various domains. However, most existing explanation methods focus on centralized MARL, failing to address the uncertainty and nondeterminism inherent in decentralized settings. We propose methods to generate policy summarizations that capture task ordering and agent cooperation in decentralized MARL policies, along with query-based explanations for When, Why Not, and What types of user queries about specific agent behaviors. We evaluate our approach across four MARL domains and two decentralized MARL algorithms, demonstrating its generalizability and computational efficiency. User studies show that our summarizations and explanations significantly improve user question-answering performance and enhance subjective ratings on metrics such as understanding and satisfaction.

Counterfactual Explanations for Continuous Action Reinforcement Learning

May 19, 2025Abstract:Reinforcement Learning (RL) has shown great promise in domains like healthcare and robotics but often struggles with adoption due to its lack of interpretability. Counterfactual explanations, which address "what if" scenarios, provide a promising avenue for understanding RL decisions but remain underexplored for continuous action spaces. We propose a novel approach for generating counterfactual explanations in continuous action RL by computing alternative action sequences that improve outcomes while minimizing deviations from the original sequence. Our approach leverages a distance metric for continuous actions and accounts for constraints such as adhering to predefined policies in specific states. Evaluations in two RL domains, Diabetes Control and Lunar Lander, demonstrate the effectiveness, efficiency, and generalization of our approach, enabling more interpretable and trustworthy RL applications.

IrrMap: A Large-Scale Comprehensive Dataset for Irrigation Method Mapping

May 13, 2025

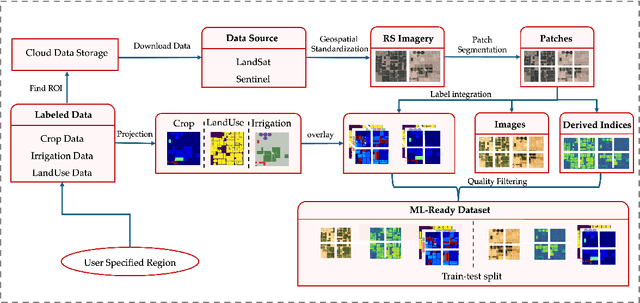

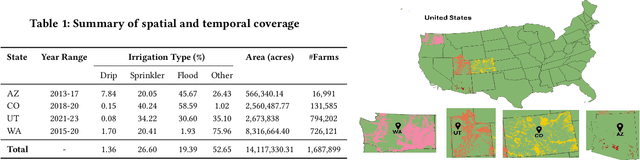

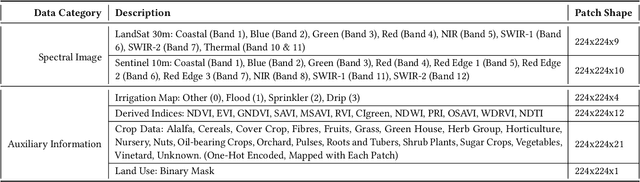

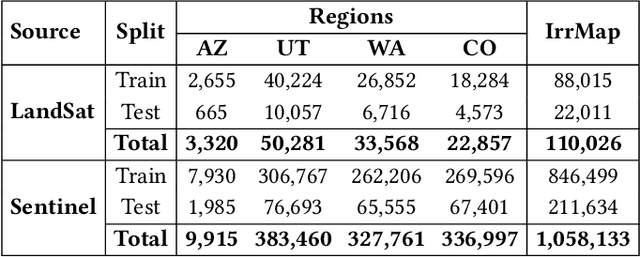

Abstract:We introduce IrrMap, the first large-scale dataset (1.1 million patches) for irrigation method mapping across regions. IrrMap consists of multi-resolution satellite imagery from LandSat and Sentinel, along with key auxiliary data such as crop type, land use, and vegetation indices. The dataset spans 1,687,899 farms and 14,117,330 acres across multiple western U.S. states from 2013 to 2023, providing a rich and diverse foundation for irrigation analysis and ensuring geospatial alignment and quality control. The dataset is ML-ready, with standardized 224x224 GeoTIFF patches, the multiple input modalities, carefully chosen train-test-split data, and accompanying dataloaders for seamless deep learning model training andbenchmarking in irrigation mapping. The dataset is also accompanied by a complete pipeline for dataset generation, enabling researchers to extend IrrMap to new regions for irrigation data collection or adapt it with minimal effort for other similar applications in agricultural and geospatial analysis. We also analyze the irrigation method distribution across crop groups, spatial irrigation patterns (using Shannon diversity indices), and irrigated area variations for both LandSat and Sentinel, providing insights into regional and resolution-based differences. To promote further exploration, we openly release IrrMap, along with the derived datasets, benchmark models, and pipeline code, through a GitHub repository: https://github.com/Nibir088/IrrMap and Data repository: https://huggingface.co/Nibir/IrrMap, providing comprehensive documentation and implementation details.

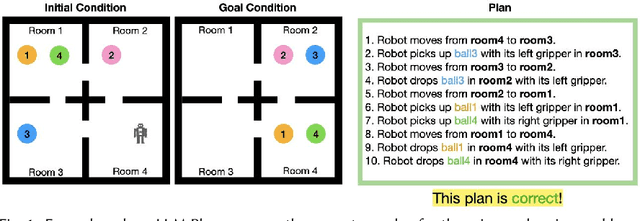

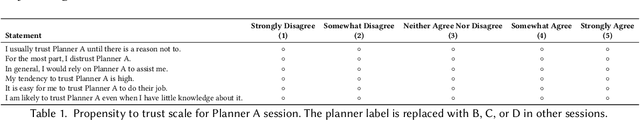

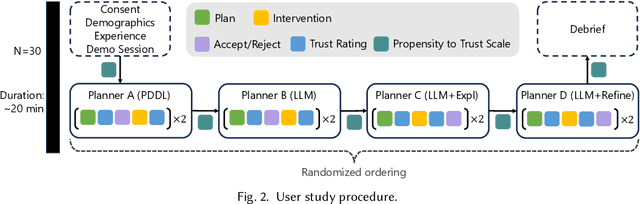

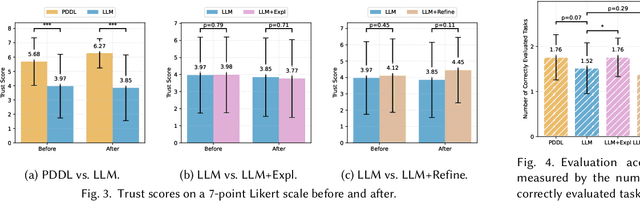

Evaluating Human Trust in LLM-Based Planners: A Preliminary Study

Feb 27, 2025

Abstract:Large Language Models (LLMs) are increasingly used for planning tasks, offering unique capabilities not found in classical planners such as generating explanations and iterative refinement. However, trust--a critical factor in the adoption of planning systems--remains underexplored in the context of LLM-based planning tasks. This study bridges this gap by comparing human trust in LLM-based planners with classical planners through a user study in a Planning Domain Definition Language (PDDL) domain. Combining subjective measures, such as trust questionnaires, with objective metrics like evaluation accuracy, our findings reveal that correctness is the primary driver of trust and performance. Explanations provided by the LLM improved evaluation accuracy but had limited impact on trust, while plan refinement showed potential for increasing trust without significantly enhancing evaluation accuracy.

Quantitative Predictive Monitoring and Control for Safe Human-Machine Interaction

Dec 17, 2024

Abstract:There is a growing trend toward AI systems interacting with humans to revolutionize a range of application domains such as healthcare and transportation. However, unsafe human-machine interaction can lead to catastrophic failures. We propose a novel approach that predicts future states by accounting for the uncertainty of human interaction, monitors whether predictions satisfy or violate safety requirements, and adapts control actions based on the predictive monitoring results. Specifically, we develop a new quantitative predictive monitor based on Signal Temporal Logic with Uncertainty (STL-U) to compute a robustness degree interval, which indicates the extent to which a sequence of uncertain predictions satisfies or violates an STL-U requirement. We also develop a new loss function to guide the uncertainty calibration of Bayesian deep learning and a new adaptive control method, both of which leverage STL-U quantitative predictive monitoring results. We apply the proposed approach to two case studies: Type 1 Diabetes management and semi-autonomous driving. Experiments show that the proposed approach improves safety and effectiveness in both case studies.

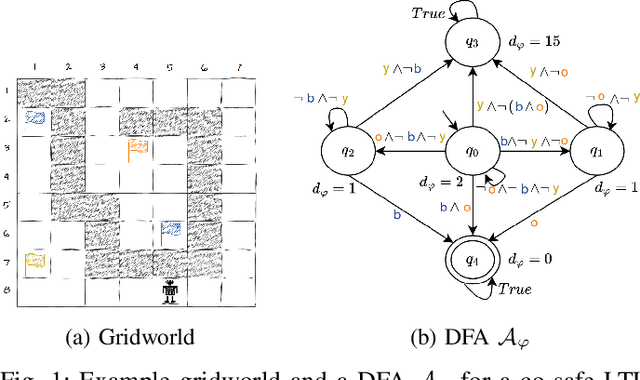

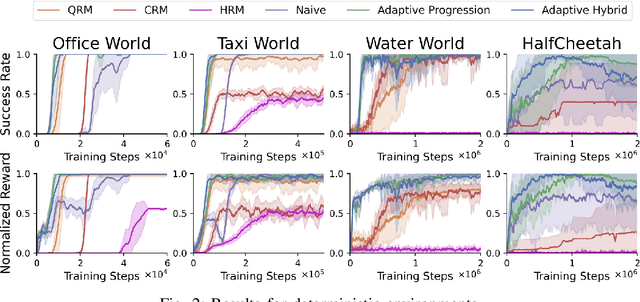

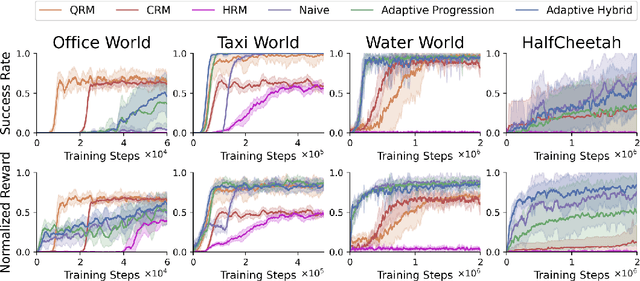

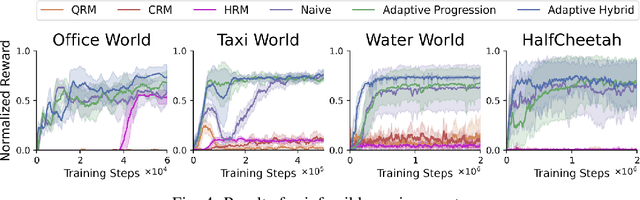

Adaptive Reward Design for Reinforcement Learning in Complex Robotic Tasks

Dec 14, 2024

Abstract:There is a surge of interest in using formal languages such as Linear Temporal Logic (LTL) and finite automata to precisely and succinctly specify complex tasks and derive reward functions for reinforcement learning (RL) in robotic applications. However, existing methods often assign sparse rewards (e.g., giving a reward of 1 only if a task is completed and 0 otherwise), necessitating extensive exploration to converge to a high-quality policy. To address this limitation, we propose a suite of reward functions that incentivize an RL agent to make measurable progress on tasks specified by LTL formulas and develop an adaptive reward shaping approach that dynamically updates these reward functions during the learning process. Experimental results on a range of RL-based robotic tasks demonstrate that the proposed approach is compatible with various RL algorithms and consistently outperforms baselines, achieving earlier convergence to better policies with higher task success rates and returns.

From Model Explanation to Data Misinterpretation: Uncovering the Pitfalls of Post Hoc Explainers in Business Research

Aug 30, 2024

Abstract:Machine learning models have been increasingly used in business research. However, most state-of-the-art machine learning models, such as deep neural networks and XGBoost, are black boxes in nature. Therefore, post hoc explainers that provide explanations for machine learning models by, for example, estimating numerical importance of the input features, have been gaining wide usage. Despite the intended use of post hoc explainers being explaining machine learning models, we found a growing trend in business research where post hoc explanations are used to draw inferences about the data. In this work, we investigate the validity of such use. Specifically, we investigate with extensive experiments whether the explanations obtained by the two most popular post hoc explainers, SHAP and LIME, provide correct information about the true marginal effects of X on Y in the data, which we call data-alignment. We then identify what factors influence the alignment of explanations. Finally, we propose a set of mitigation strategies to improve the data-alignment of explanations and demonstrate their effectiveness with real-world data in an econometric context. In spite of this effort, we nevertheless conclude that it is often not appropriate to infer data insights from post hoc explanations. We articulate appropriate alternative uses, the most important of which is to facilitate the proposition and subsequent empirical investigation of hypotheses. The ultimate goal of this paper is to caution business researchers against translating post hoc explanations of machine learning models into potentially false insights and understanding of data.

ADESSE: Advice Explanations in Complex Repeated Decision-Making Environments

May 31, 2024Abstract:In the evolving landscape of human-centered AI, fostering a synergistic relationship between humans and AI agents in decision-making processes stands as a paramount challenge. This work considers a problem setup where an intelligent agent comprising a neural network-based prediction component and a deep reinforcement learning component provides advice to a human decision-maker in complex repeated decision-making environments. Whether the human decision-maker would follow the agent's advice depends on their beliefs and trust in the agent and on their understanding of the advice itself. To this end, we developed an approach named ADESSE to generate explanations about the adviser agent to improve human trust and decision-making. Computational experiments on a range of environments with varying model sizes demonstrate the applicability and scalability of ADESSE. Furthermore, an interactive game-based user study shows that participants were significantly more satisfied, achieved a higher reward in the game, and took less time to select an action when presented with explanations generated by ADESSE. These findings illuminate the critical role of tailored, human-centered explanations in AI-assisted decision-making.

Safe POMDP Online Planning among Dynamic Agents via Adaptive Conformal Prediction

Apr 23, 2024Abstract:Online planning for partially observable Markov decision processes (POMDPs) provides efficient techniques for robot decision-making under uncertainty. However, existing methods fall short of preventing safety violations in dynamic environments. This work presents a novel safe POMDP online planning approach that offers probabilistic safety guarantees amidst environments populated by multiple dynamic agents. Our approach utilizes data-driven trajectory prediction models of dynamic agents and applies Adaptive Conformal Prediction (ACP) for assessing the uncertainties in these predictions. Leveraging the obtained ACP-based trajectory predictions, our approach constructs safety shields on-the-fly to prevent unsafe actions within POMDP online planning. Through experimental evaluation in various dynamic environments using real-world pedestrian trajectory data, the proposed approach has been shown to effectively maintain probabilistic safety guarantees while accommodating up to hundreds of dynamic agents.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge