Jörg P. Müller

EviNAM: Intelligibility and Uncertainty via Evidential Neural Additive Models

Jan 13, 2026

Abstract:Intelligibility and accurate uncertainty estimation are crucial for reliable decision-making. In this paper, we propose EviNAM, an extension of evidential learning that integrates the interpretability of Neural Additive Models (NAMs) with principled uncertainty estimation. Unlike standard Bayesian neural networks and previous evidential methods, EviNAM enables, in a single pass, both the estimation of the aleatoric and epistemic uncertainty as well as explicit feature contributions. Experiments on synthetic and real data demonstrate that EviNAM matches state-of-the-art predictive performance. While we focus on regression, our method extends naturally to classification and generalized additive models, offering a path toward more intelligible and trustworthy predictions.

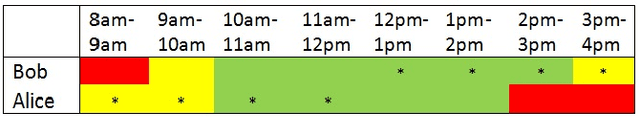

ADESSE: Advice Explanations in Complex Repeated Decision-Making Environments

May 31, 2024

Abstract:In the evolving landscape of human-centered AI, fostering a synergistic relationship between humans and AI agents in decision-making processes stands as a paramount challenge. This work considers a problem setup where an intelligent agent comprising a neural network-based prediction component and a deep reinforcement learning component provides advice to a human decision-maker in complex repeated decision-making environments. Whether the human decision-maker would follow the agent's advice depends on their beliefs and trust in the agent and on their understanding of the advice itself. To this end, we developed an approach named ADESSE to generate explanations about the adviser agent to improve human trust and decision-making. Computational experiments on a range of environments with varying model sizes demonstrate the applicability and scalability of ADESSE. Furthermore, an interactive game-based user study shows that participants were significantly more satisfied, achieved a higher reward in the game, and took less time to select an action when presented with explanations generated by ADESSE. These findings illuminate the critical role of tailored, human-centered explanations in AI-assisted decision-making.

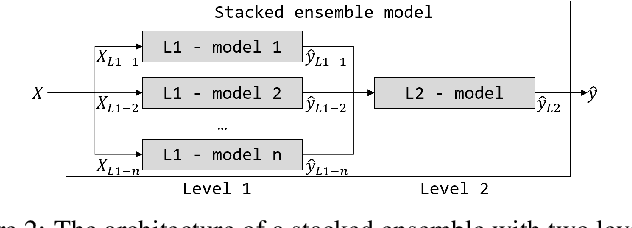

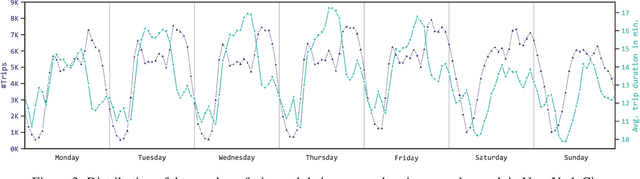

An Explainable Stacked Ensemble Model for Static Route-Free Estimation of Time of Arrival

Mar 17, 2022

Abstract:To compare alternative taxi schedules and to compute them, as well as to provide insights into an upcoming taxi trip to drivers and passengers, the duration of a trip or its Estimated Time of Arrival (ETA) is predicted. To reach a high prediction precision, machine learning models for ETA are state of the art. One yet unexploited option to further increase prediction precision is to combine multiple ETA models into an ensemble. While an increase of prediction precision is likely, the main drawback is that the predictions made by such an ensemble become less transparent due to the sophisticated ensemble architecture. One option to remedy this drawback is to apply eXplainable Artificial Intelligence (XAI). The contribution of this paper is three-fold. First, we combine multiple machine learning models from our previous work for ETA into a two-level ensemble model - a stacked ensemble model - which on its own is novel; therefore, we can outperform previous state-of-the-art static route-free ETA approaches. Second, we apply existing XAI methods to explain the first- and second-level models of the ensemble. Third, we propose three joining methods for combining the first-level explanations with the second-level ones. Those joining methods enable us to explain stacked ensembles for regression tasks. An experimental evaluation shows that the ETA models correctly learned the importance of those input features driving the prediction.

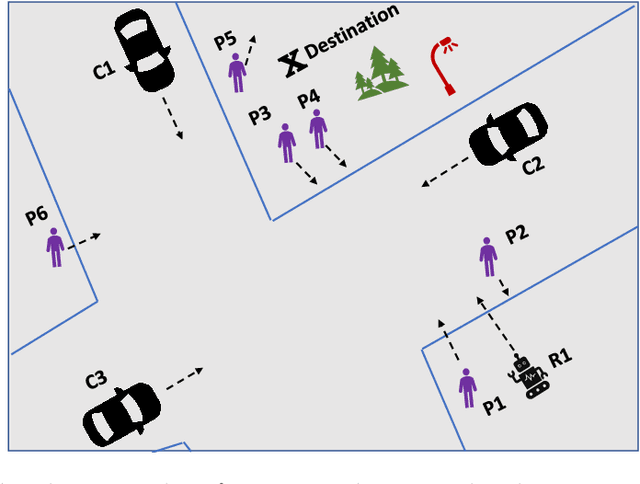

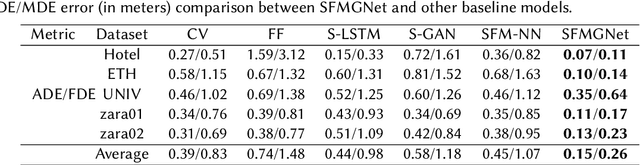

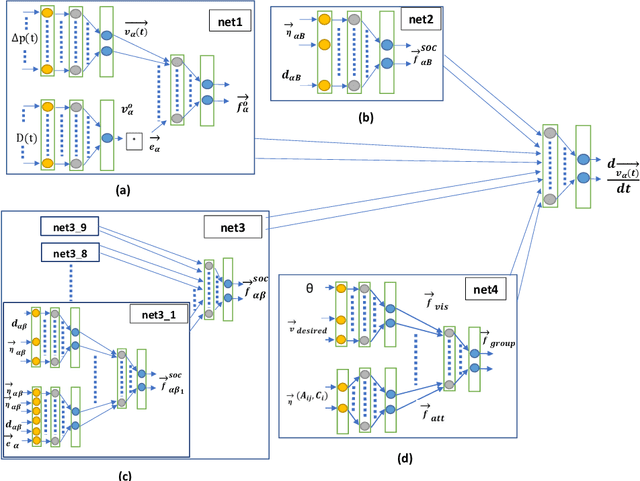

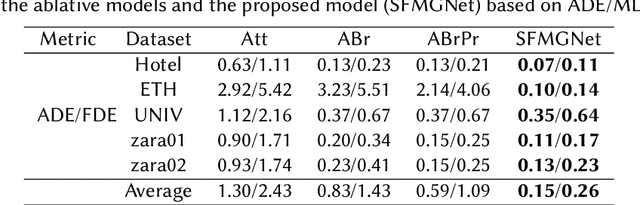

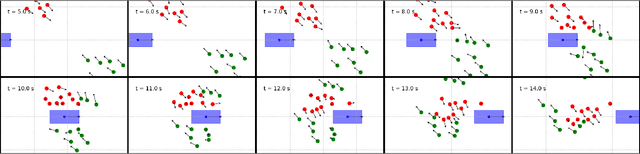

SFMGNet: A Physics-based Neural Network To Predict Pedestrian Trajectories

Feb 06, 2022

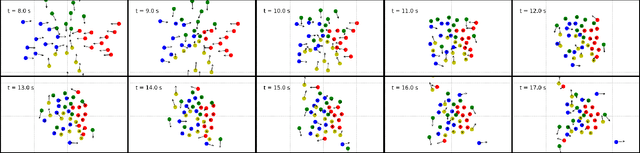

Abstract:Autonomous robots and vehicles are expected to soon become an integral part of our environment. Unsatisfactory issues regarding interaction with existing road users, performance in mixed-traffic areas and lack of interpretable behavior remain key obstacles. To address these, we present a physics-based neural network, based on a hybrid approach combining a social force model extended by group force (SFMG) with a Multi-Layer Perceptron (MLP), to predict pedestrian trajectories considering their interactions with static obstacles, other pedestrians and pedestrian groups. We quantitatively and qualitatively evaluate the model with respect to realistic prediction, prediction performance and prediction "interpretability". Initial results suggest that the model, even when trained solely on a synthetic dataset, can predict realistic and interpretable trajectories with better than state-of-the-art accuracy.

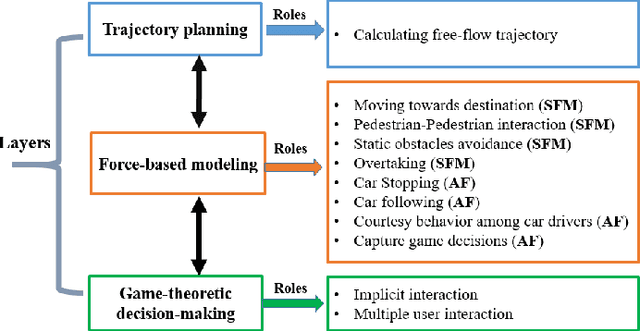

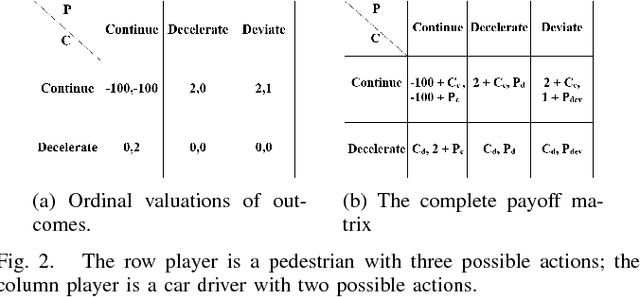

Modeling Interactions of Multimodal Road Users in Shared Spaces

Jul 05, 2021

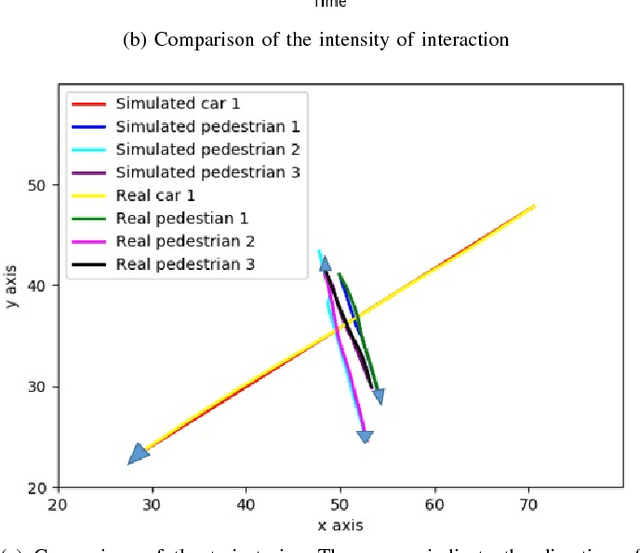

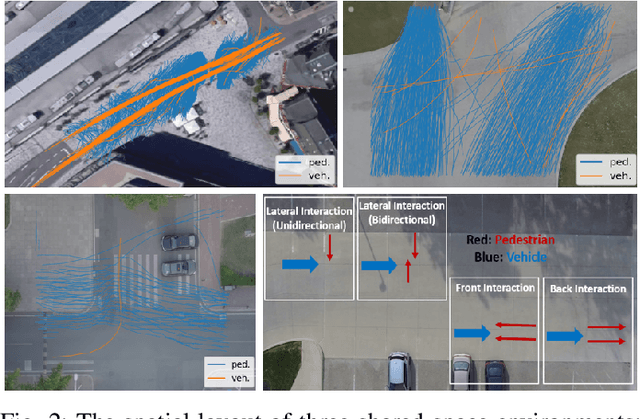

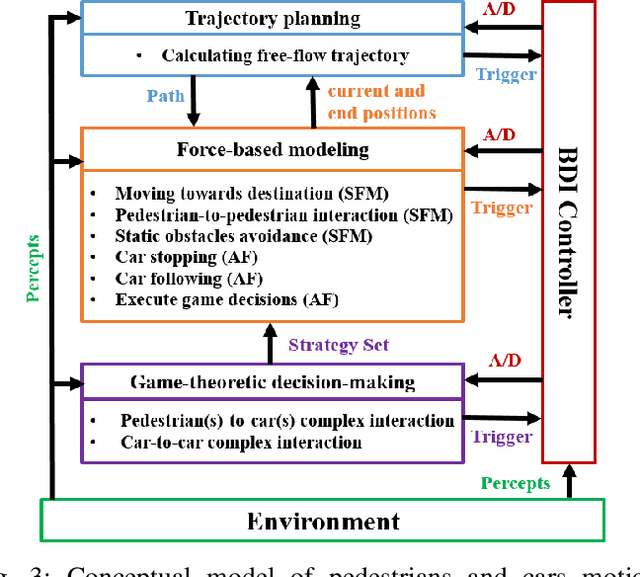

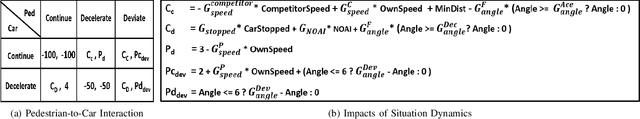

Abstract:In shared spaces, motorized and non-motorized road users share the same space with equal priority. Their movements are not regulated by traffic rules, hence they interact more frequently to negotiate priority over the shared space. To estimate the safeness and efficiency of shared spaces, reproducing the traffic behavior in such traffic places is important. In this paper, we consider and combine different levels of interaction between pedestrians and cars in shared space environments. Our proposed model consists of three layers: a layer to plan trajectories of road users; a force-based modeling layer to reproduce free flow movement and simple interactions; and a game-theoretic decision layer to handle complex situations where road users need to make a decision over different alternatives. We validate our model by simulating scenarios involving various interactions between pedestrians and cars and also car-to-car interaction. The results indicate that simulated behaviors match observed behaviors well.

On the Generalizability of Motion Models for Road Users in Heterogeneous Shared Traffic Spaces

Jan 18, 2021

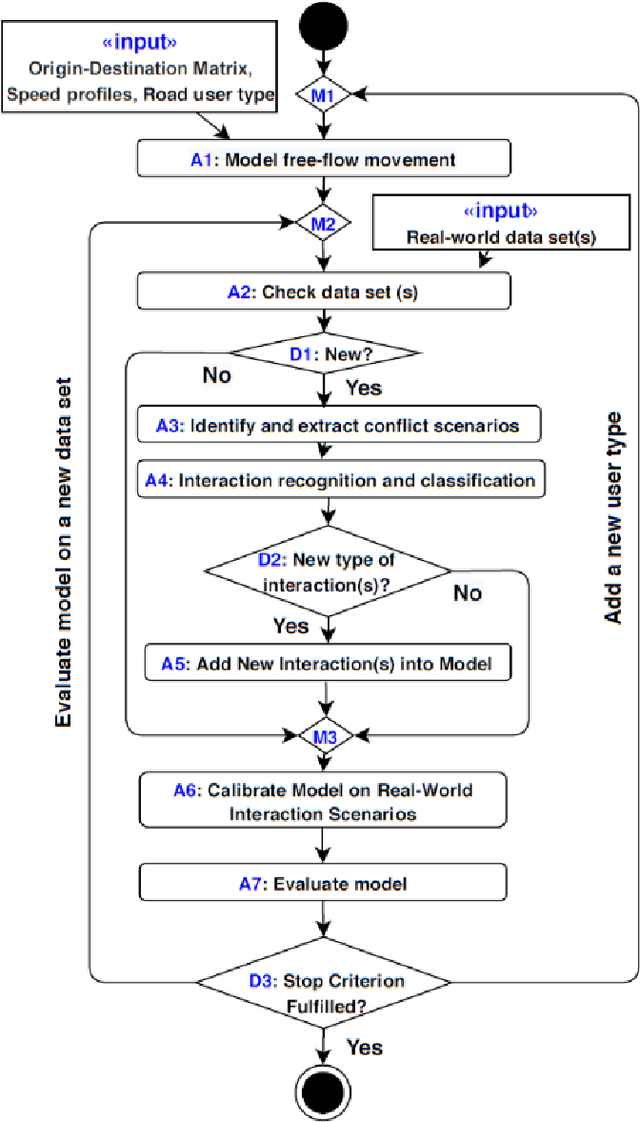

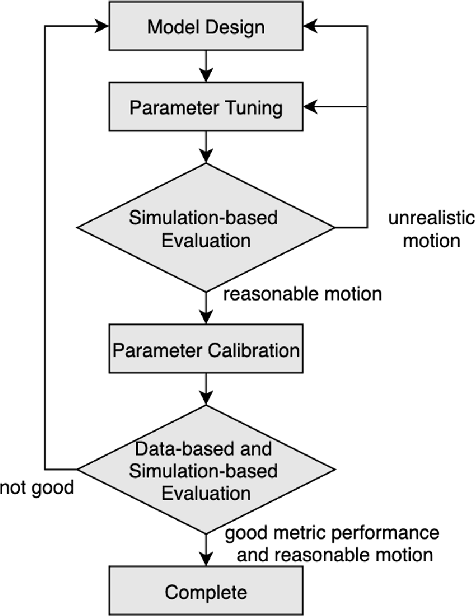

Abstract:Modeling mixed-traffic motion and interactions is crucial to assess safety, efficiency, and feasibility of future urban areas. The lack of traffic regulations, diverse transport modes, and the dynamic nature of mixed-traffic zones like shared spaces make realistic modeling of such environments challenging. This paper focuses on the generalizability of the motion model, i.e., its ability to generate realistic behavior in different environmental settings, an aspect which is lacking in existing works. Specifically, our first contribution is a novel and systematic process for formulating general motion models, together with the application of this process to extend our Game-Theoretic Social Force Model (GSFM) towards a general model for generating a large variety of motion behaviors of pedestrians and cars from different shared spaces. Our second contribution is to consider different motion patterns of pedestrians by calibrating motion-related features of individual pedestrians and clustering them into groups. We analyze two clustering approaches. The calibration and evaluation of our model are performed on three different shared space data sets. The results indicate that our model can realistically simulate a wide range of motion behaviors and interaction scenarios, and that adding different motion patterns of pedestrians into our model improves its performance.

Sub-Goal Social Force Model for Collective Pedestrian Motion Under Vehicle Influence

Jan 10, 2021

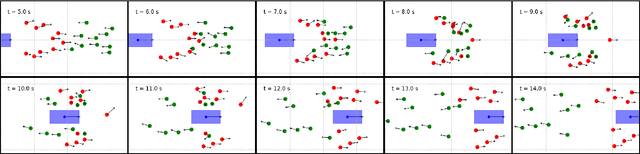

Abstract:In mixed traffic scenarios, a certain number of pedestrians might coexist in a small area while interacting with vehicles. In this situation, every pedestrian must simultaneously react to the surrounding pedestrians and vehicles. Analytical modeling of such collective pedestrian motion can benefit intelligent transportation practices like shared space design and urban autonomous driving. This work proposed the sub-goal social force model (SG-SFM) to describe the collective pedestrian motion under vehicle influence. The proposed model introduced a new design of vehicle influence on pedestrian motion, which was smoothly combined with the influence of surrounding pedestrians using the sub-goal concept. This model aims to describe generalized pedestrian motion, i.e., it is applicable to various vehicle-pedestrian interaction patterns. The generalization was verified by both quantitative and qualitative evaluation. The quantitative evaluation was conducted to reproduce pedestrian motion in three different datasets, HBS, CITR, and DUT. It also compared two different ways of calibrating the model parameters. The qualitative evaluation examined the simulation of collective pedestrian motion in a series of fundamental vehicle-pedestrian interaction scenarios. The above evaluation results demonstrated the effectiveness of the proposed model.

AI for Explaining Decisions in Multi-Agent Environments

Oct 12, 2019

Abstract:Explanation is necessary for humans to understand and accept decisions made by an AI system when the system's goal is known. It is even more important when the AI system makes decisions in multi-agent environments where the human does not know the system's goals since they may depend on other agents' preferences. In such situations, explanations should aim to increase user satisfaction, taking into account the system's decision, the user's and the other agents' preferences, the environment settings and properties such as fairness, envy and privacy. Generating explanations that will increase user satisfaction is very challenging; to this end, we propose a new research direction: xMASE. We then review the state of the art and discuss research directions towards efficient methodologies and algorithms for generating explanations that will increase users' satisfaction with an AI system's decisions in multi-agent environments.
