Ümit Özgüner

Predicting Pedestrian Crossing Intention with Feature Fusion and Spatio-Temporal Attention

Apr 12, 2021

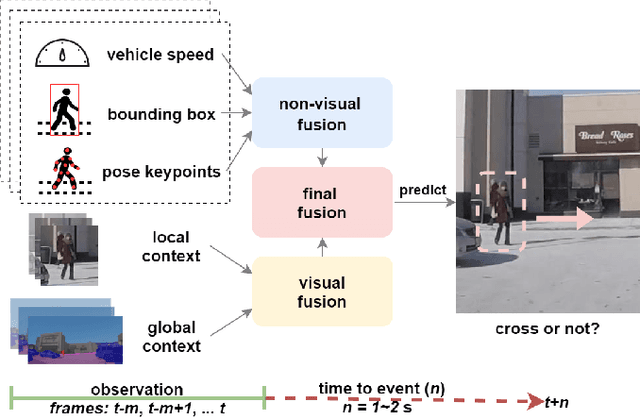

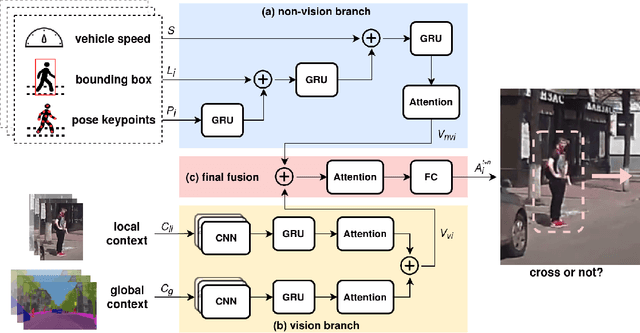

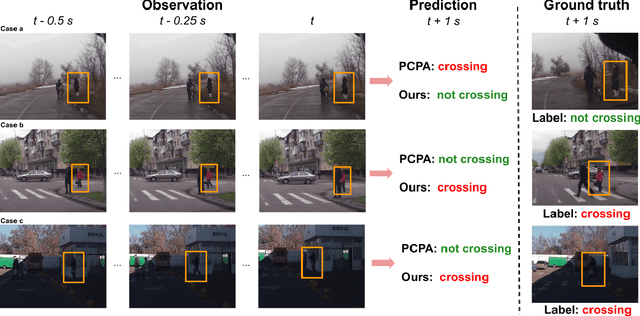

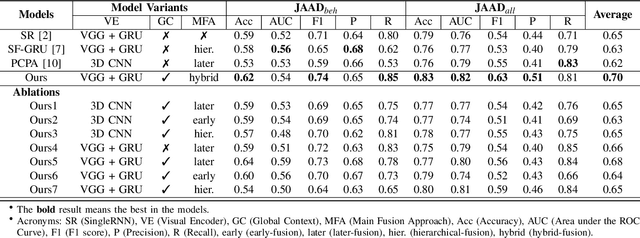

Abstract: Predicting vulnerable road user behavior is an essential prerequisite for deploying Automated Driving Systems (ADS) in the real world. Pedestrian crossing intention should be recognized in real time, especially for urban driving. Recent works have shown the potential of using vision-based deep neural network models for this task. However, these models are not robust and certain issues still need to be resolved. First, the global spatio-temporal context that accounts for the interaction between the target pedestrian and the scene has not been properly utilized. Second, the optimum strategy for fusing different sensor data has not been thoroughly investigated. This work addresses the above limitations by introducing a novel neural network architecture to fuse inherently different spatio-temporal features for pedestrian crossing intention prediction. We fuse different phenomena such as sequences of RGB imagery, semantic segmentation masks, and ego-vehicle speed in an optimum way using attention mechanisms and a stack of recurrent neural networks. The optimum architecture was obtained through exhaustive ablation and comparison studies. Extensive comparative experiments on the JAAD pedestrian action prediction benchmark demonstrate the effectiveness of the proposed method, where state-of-the-art performance was achieved. Our code is open-source and publicly available.

On the Generalizability of Motion Models for Road Users in Heterogeneous Shared Traffic Spaces

Jan 18, 2021

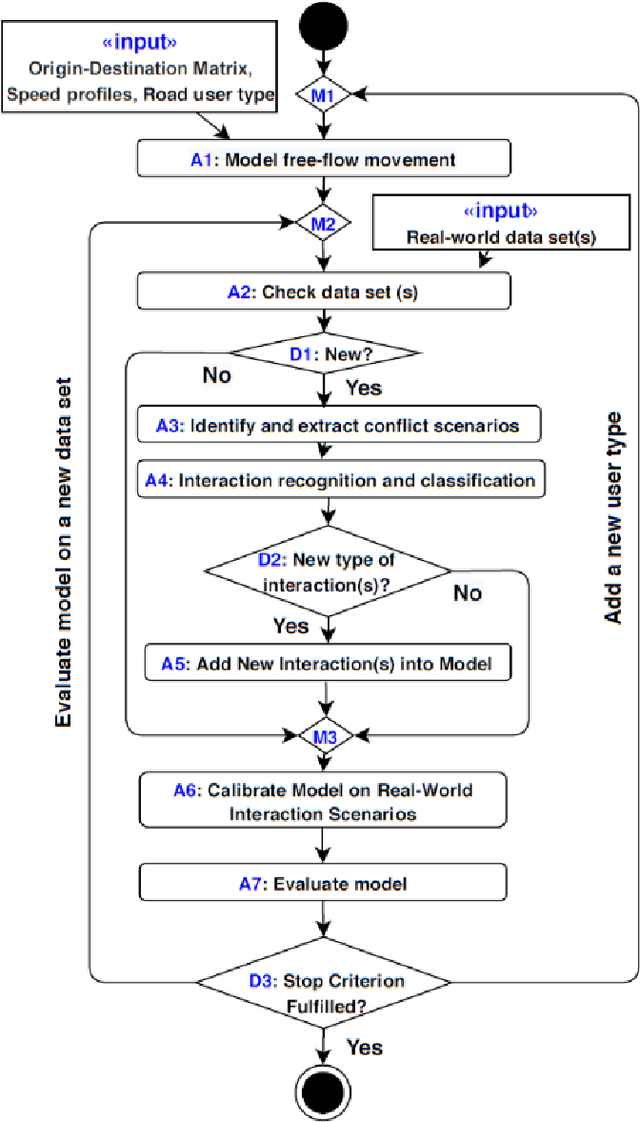

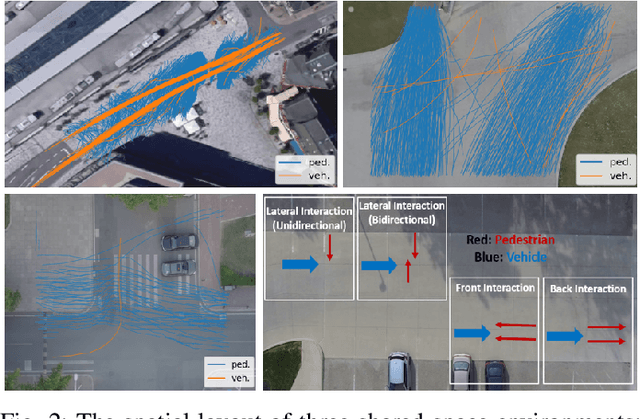

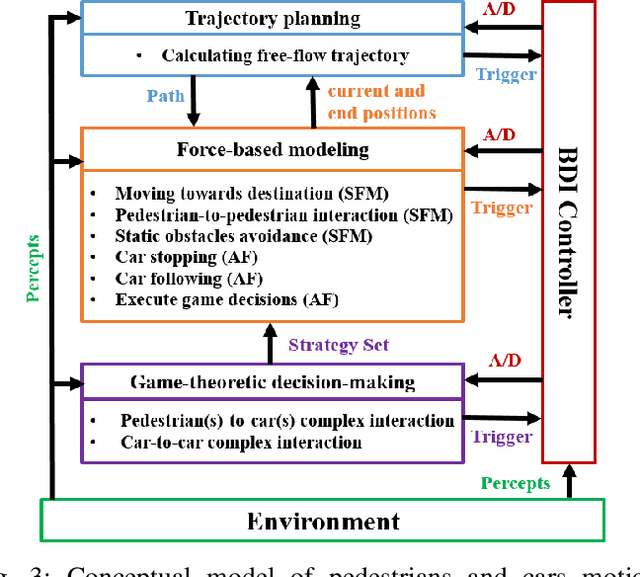

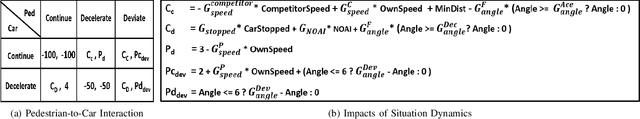

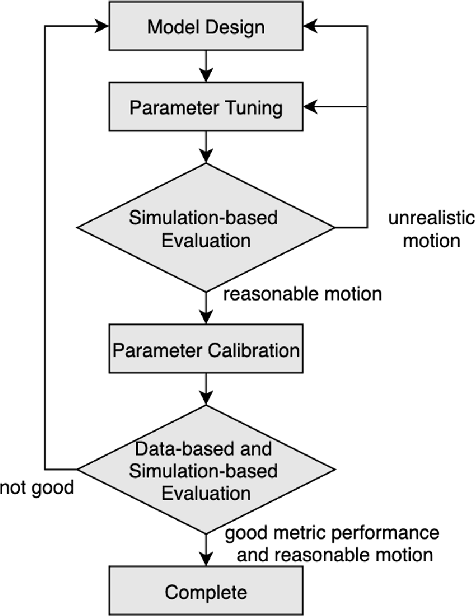

Abstract: Modeling mixed-traffic motion and interactions is crucial to assess the safety, efficiency, and feasibility of future urban areas. The lack of traffic regulations, diverse transport modes, and the dynamic nature of mixed-traffic zones like shared spaces make realistic modeling of such environments challenging. This paper focuses on the generalizability of the motion model, i.e., its ability to generate realistic behavior in different environmental settings, an aspect which is lacking in existing works. Specifically, our first contribution is a novel and systematic process for formulating general motion models, which we apply to extend our Game-Theoretic Social Force Model (GSFM) towards a general model for generating a large variety of motion behaviors of pedestrians and cars from different shared spaces. Our second contribution is to consider different motion patterns of pedestrians by calibrating motion-related features of individual pedestrians and clustering them into groups. We analyze two clustering approaches. The calibration and evaluation of our model are performed on three different shared space data sets. The results indicate that our model can realistically simulate a wide range of motion behaviors and interaction scenarios, and that adding different motion patterns of pedestrians into our model improves its performance.

Sub-Goal Social Force Model for Collective Pedestrian Motion Under Vehicle Influence

Jan 10, 2021

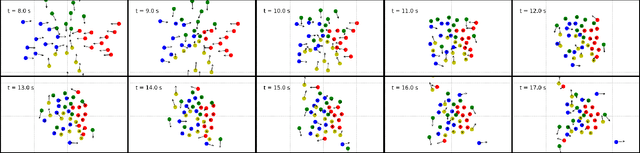

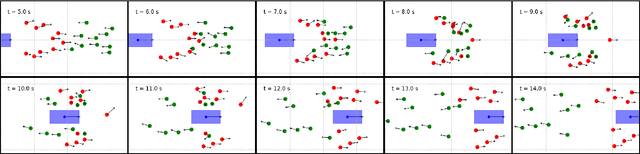

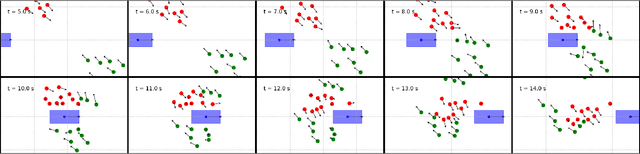

Abstract: In mixed traffic scenarios, a certain number of pedestrians might coexist in a small area while interacting with vehicles. In this situation, every pedestrian must simultaneously react to the surrounding pedestrians and vehicles. Analytical modeling of such collective pedestrian motion can benefit intelligent transportation practices like shared space design and urban autonomous driving. This work proposed the sub-goal social force model (SG-SFM) to describe collective pedestrian motion under vehicle influence. The proposed model introduced a new design of vehicle influence on pedestrian motion, which was smoothly combined with the influence of surrounding pedestrians using the sub-goal concept. This model aims to describe generalized pedestrian motion, i.e., it is applicable to various vehicle-pedestrian interaction patterns. The generalization was verified by both quantitative and qualitative evaluation. The quantitative evaluation reproduced pedestrian motion in three different datasets: HBS, CITR, and DUT. It also compared two different ways of calibrating the model parameters. The qualitative evaluation examined the simulation of collective pedestrian motion in a series of fundamental vehicle-pedestrian interaction scenarios. These evaluation results demonstrated the effectiveness of the proposed model.

Faraway-Frustum: Dealing with Lidar Sparsity for 3D Object Detection using Fusion

Nov 03, 2020

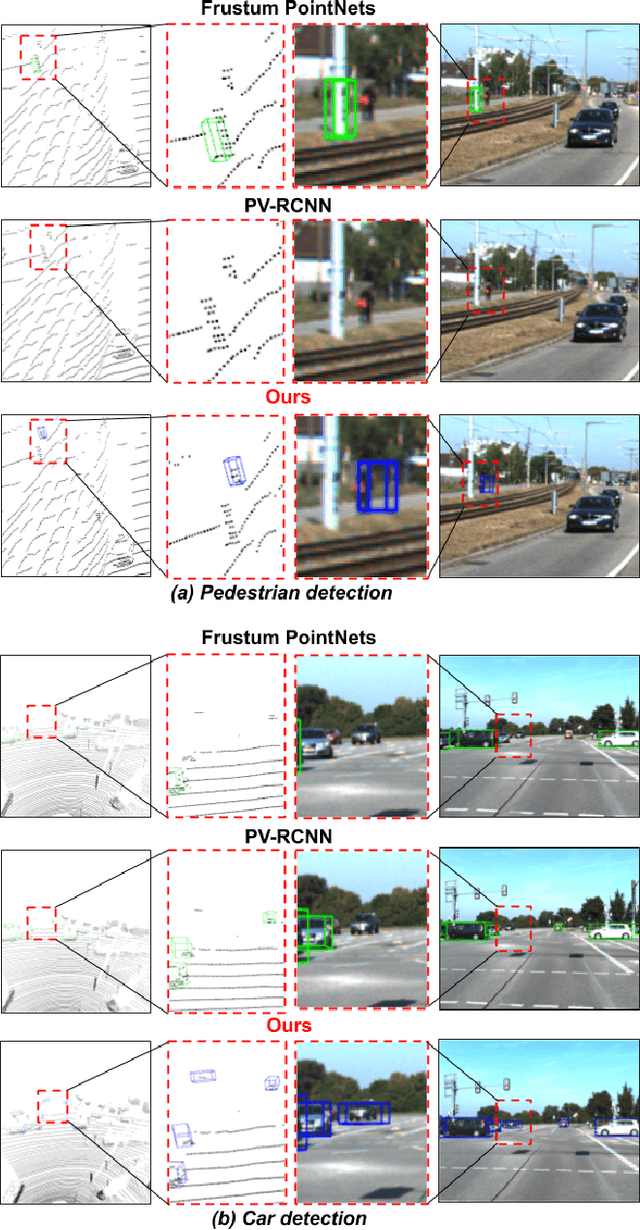

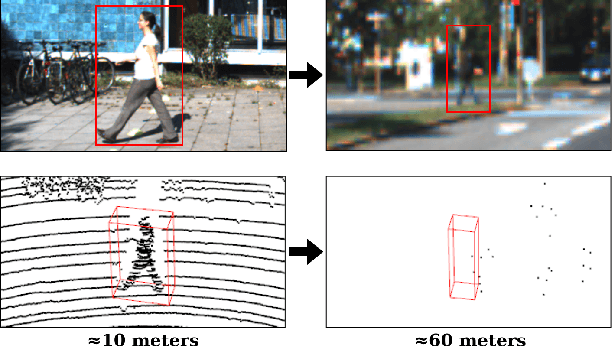

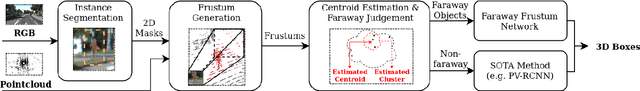

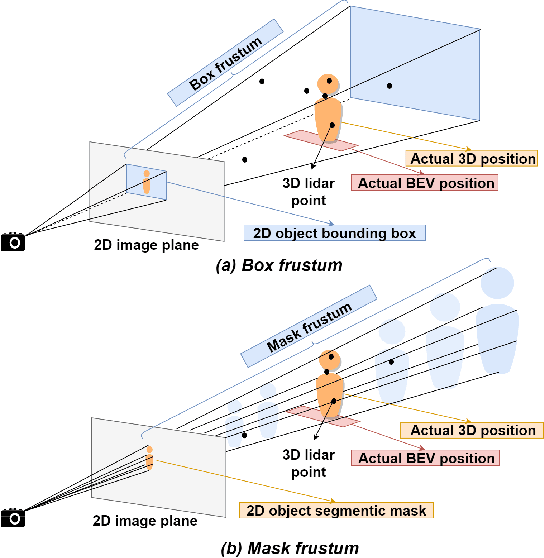

Abstract: Learned pointcloud representations do not generalize well with an increase in distance to the sensor. For example, at a range greater than 60 meters, the sparsity of lidar pointclouds reaches a point where even humans cannot discern object shapes from each other. However, this distance should not be considered very far for fast-moving vehicles: a vehicle can traverse 60 meters in under two seconds while moving at 70 mph. For safe and robust driving automation, acute 3D object detection at these ranges is indispensable. Against this backdrop, we introduce faraway-frustum: a novel fusion strategy for detecting faraway objects. The main strategy is to depend solely on 2D vision for recognizing object class, as object shape does not change drastically with an increase in depth, and to use pointcloud data for object localization in 3D space for faraway objects. For closer objects, we use learned pointcloud representations instead, following the state of the art. This strategy alleviates the main shortcoming of object detection with learned pointcloud representations. Experiments on the KITTI dataset demonstrate that our method outperforms the state of the art by a considerable margin for faraway object detection in bird's-eye-view and 3D.
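The 60-meter, 70 mph figure quoted in the abstract above can be checked with a few lines of arithmetic. The sketch below is only an illustration; the function name `seconds_to_traverse` is hypothetical and not taken from the paper or its code.

```python
# One international mile is exactly 1609.344 m, so 1 mph = 1609.344 / 3600 m/s.
MPH_TO_MPS = 1609.344 / 3600.0


def seconds_to_traverse(distance_m: float, speed_mph: float) -> float:
    """Time in seconds needed to cover distance_m at a constant speed_mph."""
    return distance_m / (speed_mph * MPH_TO_MPS)


t = seconds_to_traverse(60.0, 70.0)
print(f"{t:.2f} s")  # prints "1.92 s"
```

At 70 mph (about 31.3 m/s) the 60 meters are covered in roughly 1.9 seconds, consistent with the "under two seconds" statement.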

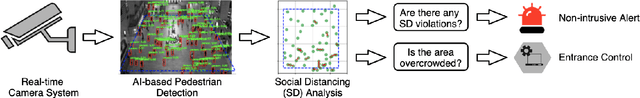

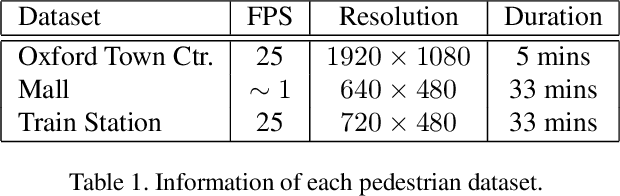

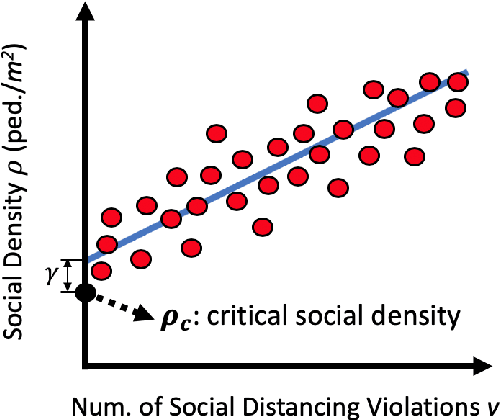

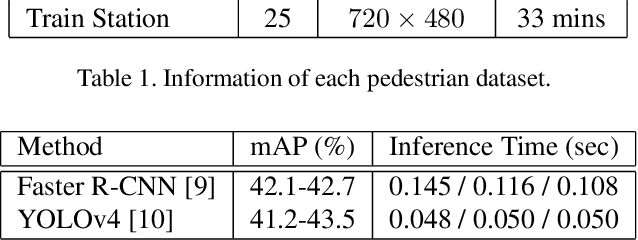

A Vision-based Social Distancing and Critical Density Detection System for COVID-19

Jul 08, 2020

Abstract: Social distancing has been proven as an effective measure against the spread of the infectious COronaVIrus Disease 2019 (COVID-19). However, individuals are not used to tracking the required 6-foot (2-meter) distance between themselves and their surroundings. An active surveillance system capable of detecting distances between individuals and warning them can slow down the spread of the deadly disease. Furthermore, measuring social density in a region of interest (ROI) and modulating inflow can reduce the chance of social distancing violations. On the other hand, recording data and labeling individuals who do not follow the measures will breach individuals' rights in free societies. Here we propose an Artificial Intelligence (AI) based real-time social distancing detection and warning system considering four important ethical factors: (1) the system should never record/cache data, (2) the warnings should not target the individuals, (3) no human supervisor should be in the detection/warning loop, and (4) the code should be open-source and accessible to the public. Against this backdrop, we propose using a monocular camera and deep learning-based real-time object detectors to measure social distancing. If a violation is detected, a non-intrusive audio-visual warning signal is emitted without targeting the individual who breached the social distancing measure. Also, if the social density is over a critical value, the system sends a control signal to modulate inflow into the ROI. We tested the proposed method across real-world datasets to measure its generality and performance. The proposed method is ready for deployment, and our code is open-sourced.
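The two checks described in the abstract above (pairwise distance violations and a critical-density test) can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes pedestrian detections have already been projected from the camera image onto ground-plane coordinates in meters, and the function names, the ROI area, and the critical density value are all hypothetical.

```python
from itertools import combinations
from math import dist


def find_violations(positions_m, min_distance_m=2.0):
    """Return index pairs of people standing closer than min_distance_m.

    positions_m: list of (x, y) ground-plane coordinates in meters
    (assumed to come from an upstream detector and camera calibration).
    """
    return [
        (i, j)
        for (i, p), (j, q) in combinations(enumerate(positions_m), 2)
        if dist(p, q) < min_distance_m
    ]


def over_critical_density(positions_m, roi_area_m2, critical_density):
    """True if people per square meter in the ROI exceeds the critical value."""
    return len(positions_m) / roi_area_m2 > critical_density


# Illustrative positions only, not data from the paper.
people = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
violations = find_violations(people)
too_dense = over_critical_density(people, roi_area_m2=50.0, critical_density=0.05)
print(violations, too_dense)
```

Nothing in the sketch stores frames or identities: it only consumes the current positions, in line with the first ethical factor listed in the abstract.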

Top-view Trajectories: A Pedestrian Dataset of Vehicle-Crowd Interaction from Controlled Experiments and Crowded Campus

Feb 01, 2019

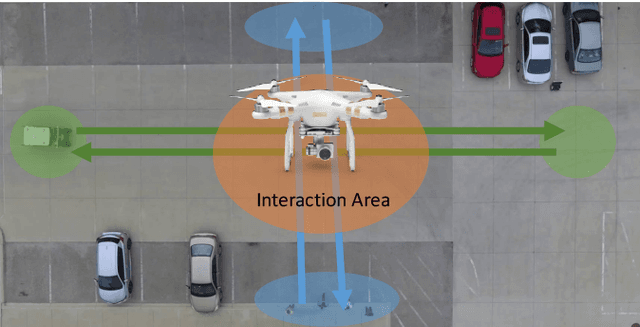

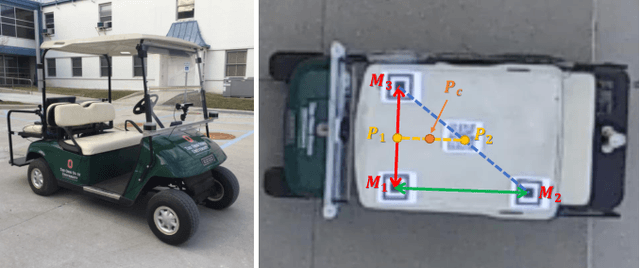

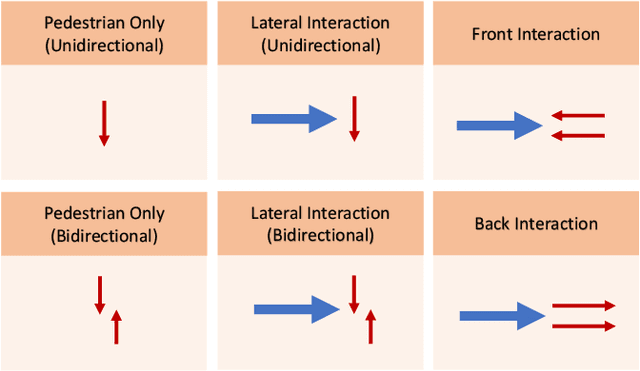

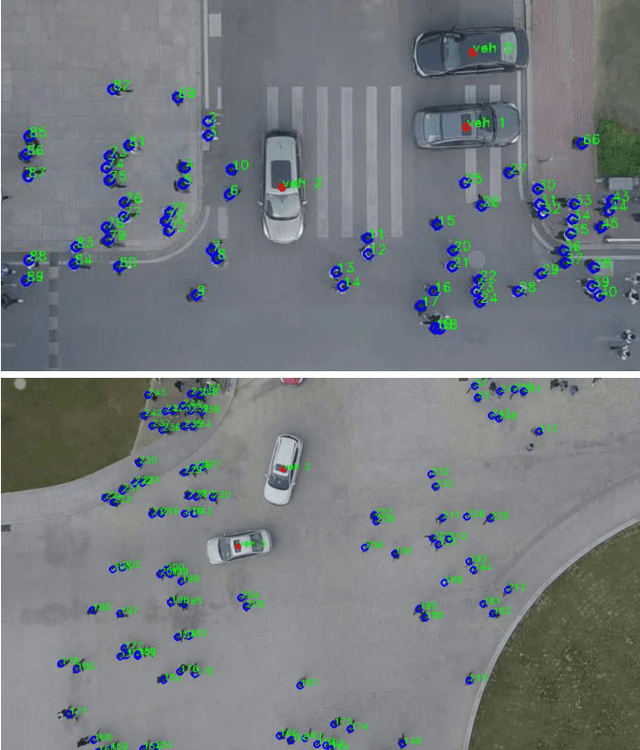

Abstract: Predicting the collective motion of a group of pedestrians (a crowd) under vehicle influence is essential for the development of autonomous vehicles to deal with mixed urban scenarios where interpersonal interaction and vehicle-crowd interaction (VCI) are significant. This usually requires a model that can describe individual pedestrian motion under the influence of nearby pedestrians and the vehicle. This study proposed two pedestrian trajectory datasets, the CITR dataset and the DUT dataset, so that pedestrian motion models can be further calibrated and verified, especially when vehicle influence on pedestrians plays an important role. The CITR dataset consists of experimentally designed fundamental VCI scenarios (front, back, and lateral VCIs) and provides a unique ID for each pedestrian, which makes it suitable for exploring a specific aspect of VCI. The DUT dataset gives two ordinary and natural VCI scenarios on a crowded university campus, which can be used for more general-purpose VCI exploration. The trajectories of pedestrians, as well as vehicles, were extracted by processing video frames from a down-facing camera mounted on a hovering drone. The final trajectories were refined by a Kalman filter, in which the pedestrian velocity was also estimated. The statistics of the velocity magnitude distribution demonstrated the validity of the proposed datasets. In total, there are approximately 340 pedestrian trajectories in the CITR dataset and 1793 pedestrian trajectories in the DUT dataset. The datasets are available on GitHub.
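The abstract above mentions refining trajectories with a Kalman filter that also estimates velocity, but it does not give the motion model or noise settings. The sketch below therefore assumes a constant-velocity model on a single coordinate axis with position-only measurements; the function name `kalman_cv_1d` and all noise values are hypothetical choices for illustration.

```python
def kalman_cv_1d(measurements, dt, meas_var=0.25, q_pos=1e-3, q_vel=1e-2):
    """Constant-velocity Kalman filter on one axis (assumed model).

    Returns a list of (position, velocity) estimates, one per measurement.
    The covariance is kept as three scalars because the matrix is symmetric.
    """
    x, v = measurements[0], 0.0
    p00, p01, p11 = meas_var, 0.0, 10.0  # large initial velocity uncertainty
    estimates = [(x, v)]
    for z in measurements[1:]:
        # Predict: x <- x + v*dt, P <- F P F^T + Q with F = [[1, dt], [0, 1]].
        x = x + v * dt
        p00 = p00 + 2.0 * dt * p01 + dt * dt * p11 + q_pos
        p01 = p01 + dt * p11
        p11 = p11 + q_vel
        # Update with a position-only measurement (H = [1, 0]).
        s = p00 + meas_var               # innovation variance
        k0, k1 = p00 / s, p01 / s        # Kalman gains for position, velocity
        y = z - x                        # innovation
        x += k0 * y
        v += k1 * y
        p11 = p11 - k1 * p01             # uses the pre-update p01
        p01 = (1.0 - k0) * p01
        p00 = (1.0 - k0) * p00
        estimates.append((x, v))
    return estimates


# Synthetic, noise-free walk at 1.5 m/s sampled every 0.1 s (not dataset data).
dt = 0.1
track = [1.5 * dt * k for k in range(50)]
smoothed = kalman_cv_1d(track, dt)
final_pos, final_vel = smoothed[-1]
print(round(final_vel, 2))
```

For a 2D trajectory, the same filter could be run independently on the x and y coordinates, and the velocity magnitude computed from the two velocity estimates.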
