Noam Hazon

The Complexity of Manipulation of k-Coalitional Games on Graphs

Aug 14, 2024

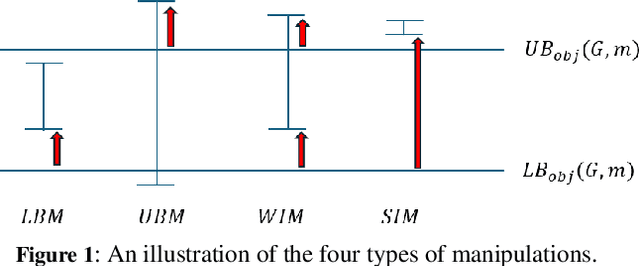

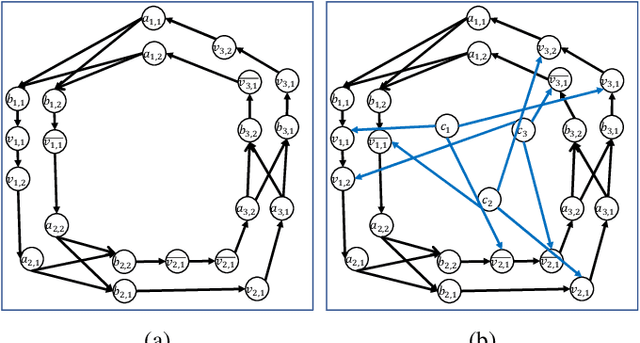

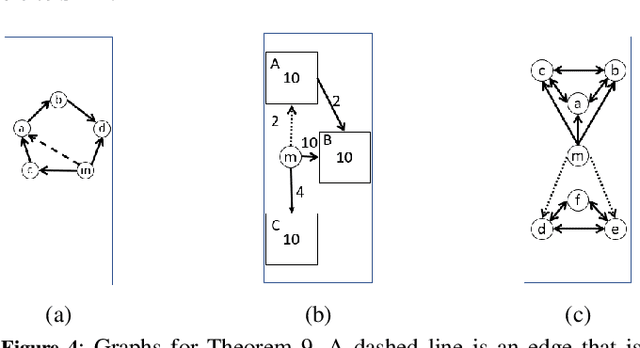

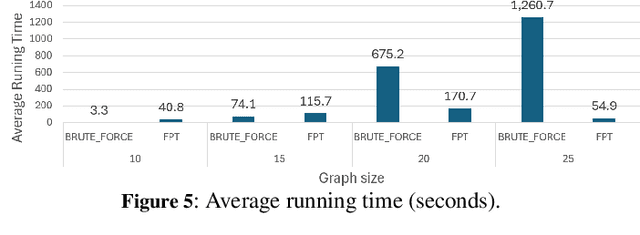

Abstract: In many settings, an organizer would like to divide a set of agents into $k$ coalitions and cares about the friendships within each coalition. Specifically, the organizer might want to maximize utilitarian social welfare, maximize egalitarian social welfare, or simply guarantee that every agent has at least one friend within his coalition. However, in many situations the organizer is not familiar with the friendship connections and needs to obtain them from the agents. In this setting, a manipulative agent may falsely report friendship connections in order to increase his utility. In this paper, we analyze the complexity of finding a manipulation in such $k$-coalitional games on graphs. We also introduce a new type of manipulation, socially-aware manipulation, in which the manipulator would like to increase his utility without decreasing the social welfare. We then study the complexity of finding a socially-aware manipulation in our setting. Finally, we examine the frequency of socially-aware manipulation and the running time of our algorithms via simulation results.
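
The three organizer objectives named in the abstract can be illustrated with a small sketch. It assumes, purely for illustration, that an agent's utility is the number of its friends placed in the same coalition; the abstract does not state the paper's exact utility definition, and the names `agent_utility` and `welfare` are hypothetical.

```python
from typing import Dict, List, Set


def agent_utility(agent: str, coalition: Set[str], friends: Dict[str, Set[str]]) -> int:
    # ASSUMPTION: utility = number of the agent's friends inside its own coalition.
    return len(friends[agent] & coalition)


def welfare(partition: List[Set[str]], friends: Dict[str, Set[str]]) -> Dict[str, object]:
    # Compute each agent's utility under the given division into coalitions.
    utilities = {a: agent_utility(a, c, friends) for c in partition for a in c}
    return {
        "utilitarian": sum(utilities.values()),   # total utility over all agents
        "egalitarian": min(utilities.values()),   # utility of the worst-off agent
        "everyone_has_friend": all(u >= 1 for u in utilities.values()),
    }


# Friendship graph: a path a - b - c - d (undirected, stored as adjacency sets).
friends = {"a": {"b"}, "b": {"a", "c"}, "c": {"b", "d"}, "d": {"c"}}

balanced = welfare([{"a", "b"}, {"c", "d"}], friends)
lopsided = welfare([{"a"}, {"b", "c", "d"}], friends)
```

In this toy instance both divisions have the same utilitarian welfare, but only the first gives every agent a friend, which is why the three objectives can favor different partitions.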

The Leximin Approach for a Sequence of Collective Decisions

May 29, 2023

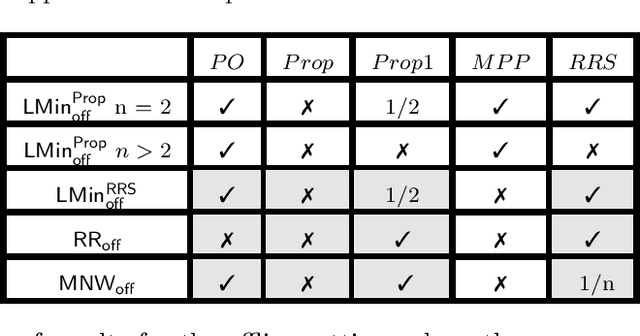

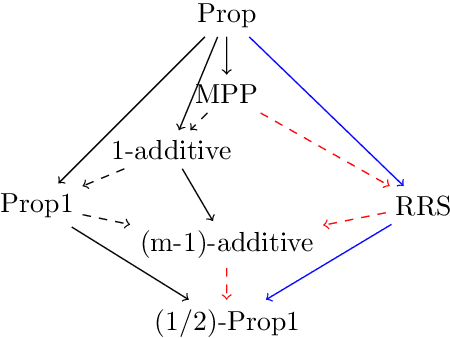

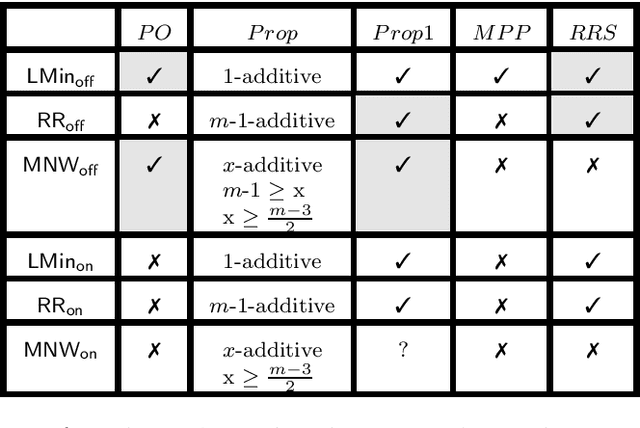

Abstract: In many situations, several agents need to make a sequence of decisions. For example, a group of workers may need to decide where their weekly meeting should take place. In such situations, a decision-making mechanism must consider fairness notions. In this paper, we analyze the fairness of three known mechanisms: round-robin, maximum Nash welfare, and leximin. We consider both offline and online settings, and concentrate on the fairness notion of proportionality and its relaxations. Specifically, in the offline setting, we show that the three mechanisms fail to find a proportional or approximate-proportional outcome, even if such an outcome exists. We thus introduce a new fairness property that captures this requirement, and show that a variant of the leximin mechanism satisfies the new fairness property. In the online setting, we show that it is impossible to guarantee proportionality or its relaxations. We thus consider a natural restriction on the agents' preferences, and show that the leximin mechanism guarantees the best possible additive approximation to proportionality and satisfies all the relaxations of proportionality.
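
For readers unfamiliar with leximin, the following minimal sketch shows the standard leximin ordering over utility vectors: maximize the smallest utility, then the second smallest, and so on. It is not the paper's mechanism or its variant; the function names and the candidate vectors are hypothetical, and each vector is assumed to hold one accumulated utility per agent.

```python
from typing import List, Sequence, Tuple


def leximin_key(utilities: Sequence[float]) -> Tuple[float, ...]:
    # Sorting ascending puts the worst-off agent first, so ordinary
    # lexicographic tuple comparison implements the leximin order.
    return tuple(sorted(utilities))


def leximin_best(outcomes: List[Sequence[float]]) -> Sequence[float]:
    # Pick the outcome whose sorted utility vector is lexicographically largest.
    return max(outcomes, key=leximin_key)


# Hypothetical utility vectors (one entry per agent) for three candidate outcomes.
candidates = [(3, 0), (1, 1), (2, 1)]
best = leximin_best(candidates)
```

Here `(3, 0)` is rejected because its worst-off agent gets 0, and `(2, 1)` beats `(1, 1)` because the two tie on the minimum and the second-smallest utility breaks the tie.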

Explaining Ridesharing: Selection of Explanations for Increasing User Satisfaction

May 26, 2021

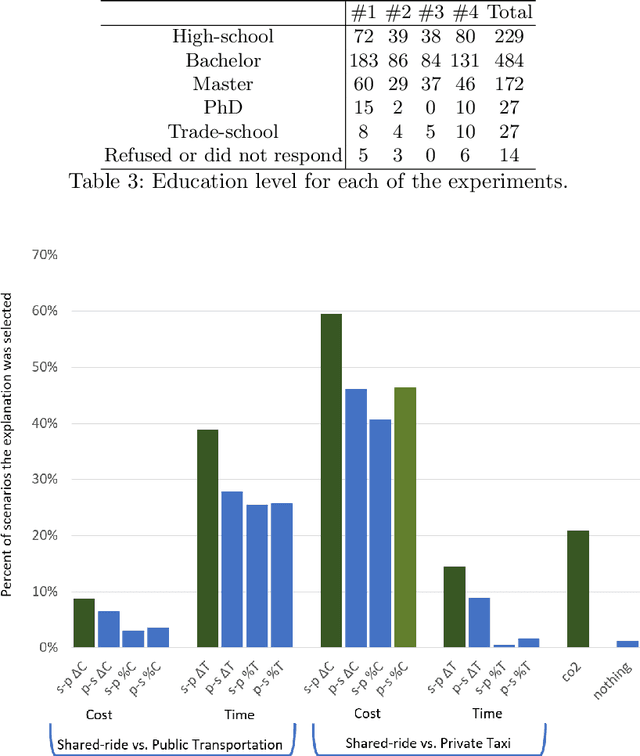

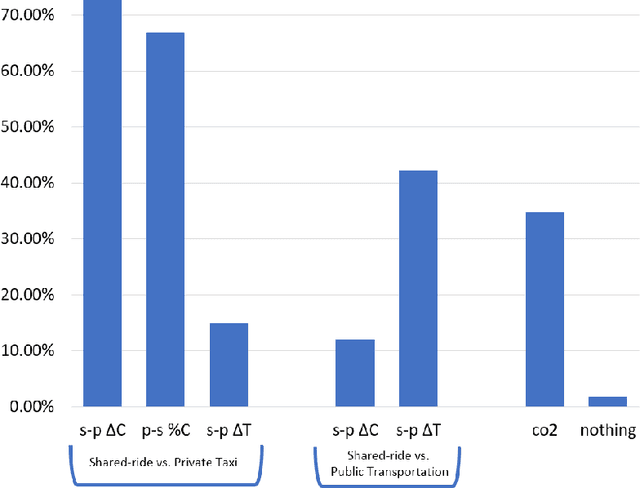

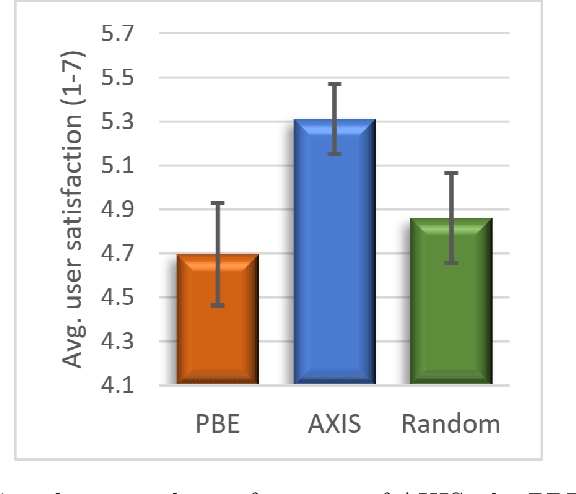

Abstract: Transportation services play a crucial part in the development of modern smart cities. In particular, on-demand ridesharing services, which group together passengers with similar itineraries, are already operating in several metropolitan areas. These services can be of significant social and environmental benefit, by reducing travel costs, road congestion and CO2 emissions. Unfortunately, despite their advantages, not many people opt to use these ridesharing services. We believe that increasing user satisfaction with the service will cause more people to use it, which, in turn, will improve the quality of the service in terms of waiting time, cost, travel time, and service availability. One possible way of increasing user satisfaction is to provide appropriate explanations comparing the alternative modes of transportation, such as a private taxi ride and public transportation. For example, a passenger may be more satisfied with a shared ride if she is told that a private taxi ride would have cost her 50% more. Therefore, the problem is to develop an agent that provides explanations that will increase user satisfaction. We model our environment as a signaling game and show that a rational agent, which follows the perfect Bayesian equilibrium, must reveal all of the information regarding the possible alternatives to the passenger. In addition, we develop a machine-learning-based agent that, when given a shared ride along with its possible alternatives, selects the explanations that are most likely to increase user satisfaction. Using feedback from humans, we show that our machine-learning-based agent outperforms the rational agent and an agent that randomly chooses explanations, in terms of user satisfaction.

AI for Explaining Decisions in Multi-Agent Environments

Oct 12, 2019

Abstract: Explanation is necessary for humans to understand and accept decisions made by an AI system when the system's goal is known. It is even more important when the AI system makes decisions in multi-agent environments where the human does not know the system's goals, since they may depend on other agents' preferences. In such situations, explanations should aim to increase user satisfaction, taking into account the system's decision, the user's and the other agents' preferences, the environment settings and properties such as fairness, envy and privacy. Generating explanations that will increase user satisfaction is very challenging; to this end, we propose a new research direction: xMASE. We then review the state of the art and discuss research directions towards efficient methodologies and algorithms for generating explanations that will increase users' satisfaction with an AI system's decisions in multi-agent environments.

Approximation and Heuristic Algorithms for Probabilistic Physical Search on General Graphs

Sep 27, 2015

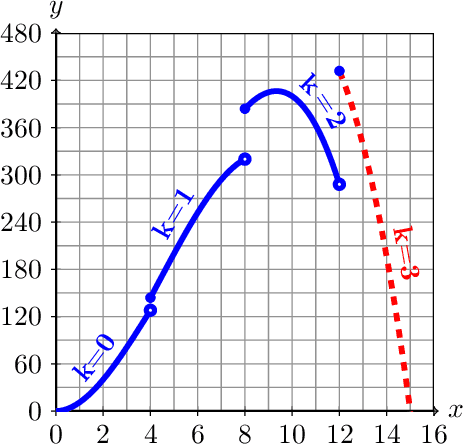

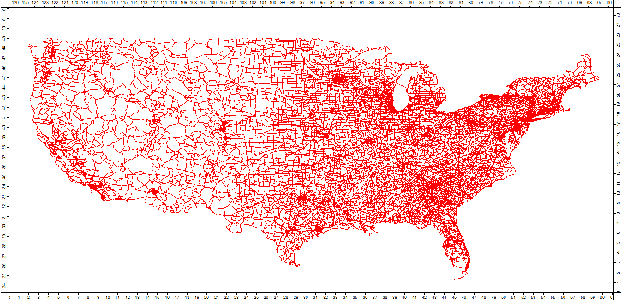

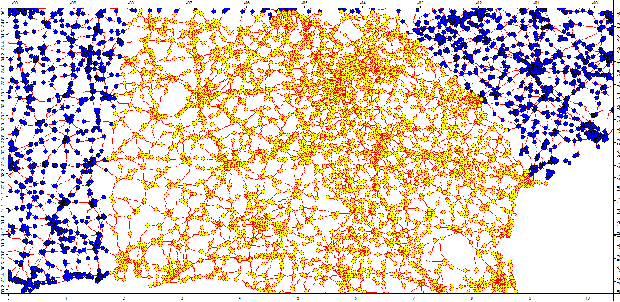

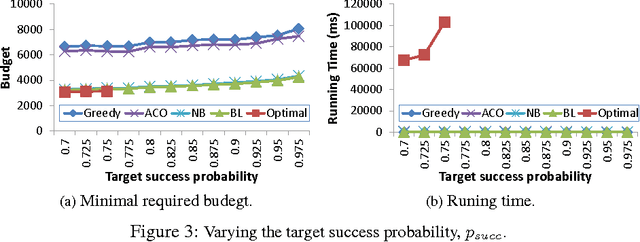

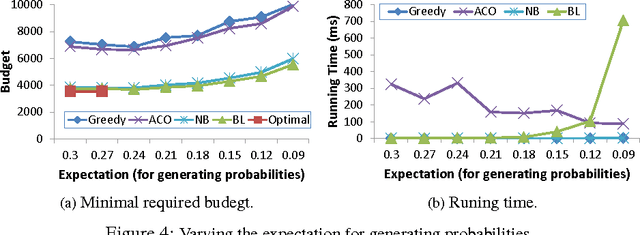

Abstract: We consider an agent seeking to obtain an item, potentially available at different locations in a physical environment. The traveling costs between locations are known in advance, but there is only probabilistic knowledge regarding the possible prices of the item at any given location. Given such a setting, the problem is to find a plan that maximizes the probability of acquiring the item while minimizing both travel and purchase costs. Sample applications include agents in search-and-rescue or exploration missions, e.g., a rover on Mars seeking to mine a specific mineral. These probabilistic physical search problems have been previously studied, but we present the first approximation and heuristic algorithms for solving such problems on general graphs. We establish an interesting connection between these problems and classical graph-search problems, which led us to provide the approximation algorithms and hardness of approximation results for our settings. We further suggest several heuristics for practical use, and demonstrate their effectiveness with simulation on real graph structure and synthetic graphs.
