Hongjun Choi

VERIRAG: Healthcare Claim Verification via Statistical Audit in Retrieval-Augmented Generation

Jul 23, 2025

Abstract:Retrieval-augmented generation (RAG) systems are increasingly adopted in clinical decision support, yet they remain methodologically blind: they retrieve evidence but cannot vet its scientific quality. A paper claiming "Antioxidant proteins decreased after alloferon treatment" and a rigorous multi-laboratory replication study will be treated as equally credible, even if the former lacked scientific rigor or was even retracted. To address this challenge, we introduce VERIRAG, a framework that makes three notable contributions: (i) the Veritable, an 11-point checklist that evaluates each source for methodological rigor, including data integrity and statistical validity; (ii) a Hard-to-Vary (HV) Score, a quantitative aggregator that weights evidence by its quality and diversity; and (iii) a Dynamic Acceptance Threshold, which calibrates the required evidence based on how extraordinary a claim is. Across four datasets (comprising retracted, conflicting, comprehensive, and settled science corpora), the VERIRAG approach consistently outperforms all baselines, achieving absolute F1 scores ranging from 0.53 to 0.65, representing a 10 to 14 point improvement over the next-best method in each respective dataset. We will release all materials necessary for reproducing our results.

Intra-class Patch Swap for Self-Distillation

May 20, 2025

Abstract:Knowledge distillation (KD) is a valuable technique for compressing large deep learning models into smaller, edge-suitable networks. However, conventional KD frameworks rely on pre-trained high-capacity teacher networks, which introduce significant challenges such as increased memory/storage requirements, additional training costs, and ambiguity in selecting an appropriate teacher for a given student model. Although teacher-free distillation (self-distillation) has emerged as a promising alternative, many existing approaches still rely on architectural modifications or complex training procedures, which limit their generality and efficiency. To address these limitations, we propose a novel framework based on teacher-free distillation that operates using a single student network without any auxiliary components, architectural modifications, or additional learnable parameters. Our approach is built on a simple yet highly effective augmentation, called intra-class patch swap augmentation. This augmentation simulates a teacher-student dynamic within a single model by generating pairs of intra-class samples with varying confidence levels, and then applying instance-to-instance distillation to align their predictive distributions. Our method is conceptually simple, model-agnostic, and easy to implement, requiring only a single augmentation function. Extensive experiments across image classification, semantic segmentation, and object detection show that our method consistently outperforms both existing self-distillation baselines and conventional teacher-based KD approaches. These results suggest that the success of self-distillation could hinge on the design of the augmentation itself. Our code is available at https://github.com/hchoi71/Intra-class-Patch-Swap.
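As a rough illustration of the augmentation idea described in this abstract, the sketch below swaps one square patch between two images of the same class. It is not the authors' implementation: the function names `patch_swap` and `intra_class_pairs`, the single-patch choice, and the list-of-lists image format are all assumptions made for this example. The linked repository is the reference for the actual method.

```python
import random


def patch_swap(img_a, img_b, patch, rng):
    """Swap one randomly located patch x patch square between two images.

    Images are equally sized 2D lists (H x W). The inputs are left
    untouched; two new images are returned. (Hypothetical helper.)
    """
    h, w = len(img_a), len(img_a[0])
    top = rng.randrange(0, h - patch + 1)
    left = rng.randrange(0, w - patch + 1)
    out_a = [row[:] for row in img_a]
    out_b = [row[:] for row in img_b]
    for r in range(top, top + patch):
        for c in range(left, left + patch):
            out_a[r][c], out_b[r][c] = img_b[r][c], img_a[r][c]
    return out_a, out_b


def intra_class_pairs(labels, rng):
    """Group sample indices by class and form disjoint same-class pairs.

    Classes with a single sample, and the leftover sample of an
    odd-sized class, are skipped. (Hypothetical helper.)
    """
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    pairs = []
    for idxs in by_class.values():
        if len(idxs) < 2:
            continue
        shuffled = idxs[:]
        rng.shuffle(shuffled)
        for k in range(0, len(shuffled) - 1, 2):
            pairs.append((shuffled[k], shuffled[k + 1]))
    return pairs
```

In a training loop, each swapped pair would be passed through the same network and their predictive distributions aligned, as the abstract describes; that distillation step is omitted here.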

Role of Mixup in Topological Persistence Based Knowledge Distillation for Wearable Sensor Data

Feb 02, 2025

Abstract:The analysis of wearable sensor data has enabled successes in many applications. To represent the high-sampling rate time-series with sufficient detail, the use of topological data analysis (TDA) has been considered, and it is found that TDA can complement other time-series features. Nonetheless, due to the large time consumption and high computational resource requirements of extracting topological features through TDA, it is difficult to deploy topological knowledge in various applications. To tackle this problem, knowledge distillation (KD) can be adopted, which is a technique facilitating model compression and transfer learning to generate a smaller model by transferring knowledge from a larger network. By leveraging multiple teachers in KD, both time-series and topological features can be transferred, and finally, a superior student using only time-series data is distilled. On the other hand, mixup has been popularly used as a robust data augmentation technique to enhance model performance during training. Mixup and KD employ similar learning strategies. In KD, the student model learns from the smoothed distribution generated by the teacher model, while mixup creates smoothed labels by blending two labels. Hence, this common smoothness is the connecting link between the two methods. In this paper, we analyze the role of mixup in KD with time-series as well as topological persistence, employing multiple teachers. We present a comprehensive analysis of various methods in KD and mixup on wearable sensor data.

ROCAS: Root Cause Analysis of Autonomous Driving Accidents via Cyber-Physical Co-mutation

Sep 12, 2024

Abstract:As autonomous driving systems (ADS) have transformed our daily life, the safety of ADS is of growing significance. While various testing approaches have emerged to enhance ADS reliability, a crucial gap remains in understanding the causes of accidents. Such post-accident analysis is paramount for enhancing ADS safety and reliability. Existing cyber-physical system (CPS) root cause analysis techniques are mainly designed for drones and cannot handle the unique challenges introduced by more complex physical environments and deep learning models deployed in ADS. In this paper, we address the gap by offering a formal definition of the ADS root cause analysis problem and introducing ROCAS, a novel ADS root cause analysis framework featuring cyber-physical co-mutation. Our technique uniquely leverages both physical and cyber mutation to precisely identify the accident-triggering entity and pinpoint the misconfiguration of the target ADS responsible for an accident. We further design a differential analysis to identify the responsible module and reduce the search space for the misconfiguration. We study 12 categories of ADS accidents and demonstrate the effectiveness and efficiency of ROCAS in narrowing down the search space and pinpointing the misconfiguration. We also show detailed case studies on how the identified misconfiguration helps understand the rationale behind accidents.

Topological Persistence Guided Knowledge Distillation for Wearable Sensor Data

Jul 07, 2024

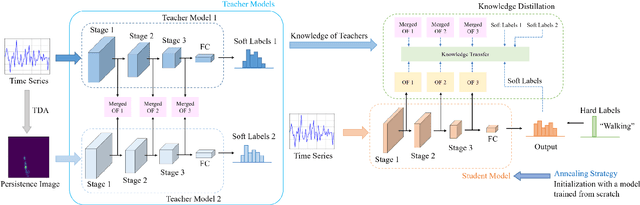

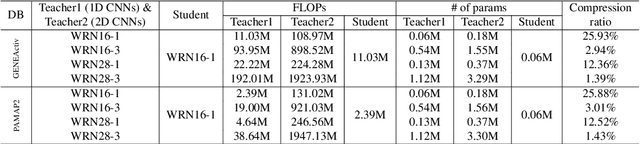

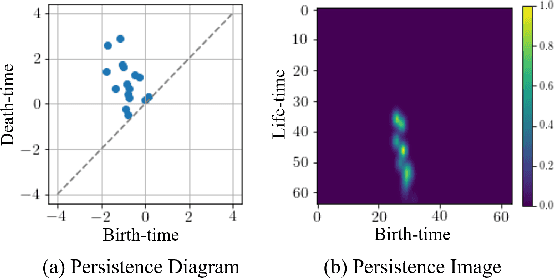

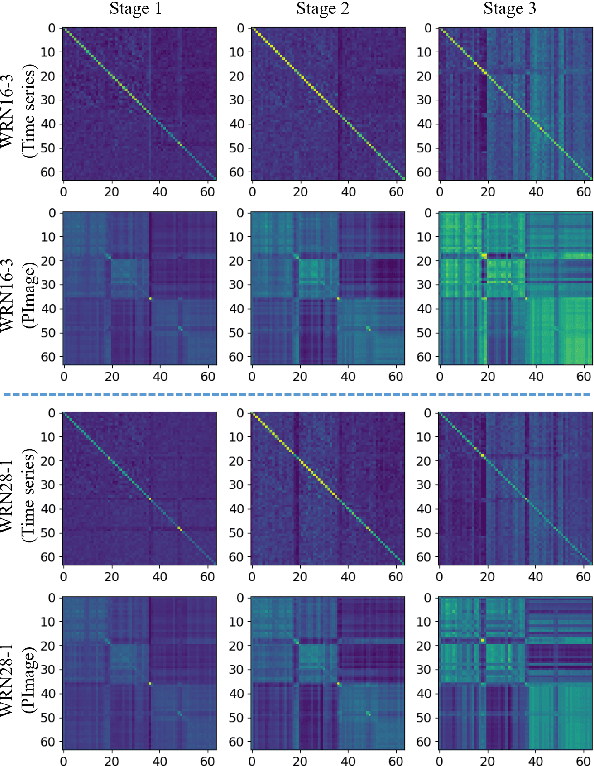

Abstract:Deep learning methods have achieved considerable success in various applications involving converting wearable sensor data to actionable health insights. A common application area is activity recognition, where deep-learning methods still suffer from limitations such as sensitivity to signal quality, sensor characteristic variations, and variability between subjects. To mitigate these issues, robust features obtained by topological data analysis (TDA) have been suggested as a potential solution. However, there are two significant obstacles to using topological features in deep learning: (1) the large computational load to extract topological features using TDA, and (2) the different signal representations obtained from deep learning and TDA, which make fusion difficult. In this paper, to enable integration of the strengths of topological methods in deep-learning for time-series data, we propose to use two teacher networks, one trained on the raw time-series data, and another trained on persistence images generated by TDA methods. The distilled student model utilizes only the raw time-series data at test-time. This approach addresses both issues. The use of KD with multiple teachers utilizes complementary information, and results in a compact model with strong supervisory features and an integrated richer representation. To assimilate desirable information from different modalities, we design new constraints, including orthogonality imposed on feature correlation maps for improving feature expressiveness and allowing the student to easily learn from the teacher. Also, we apply an annealing strategy in KD for fast saturation and better accommodation from different features, while the knowledge gap between the teachers and student is reduced. Finally, a robust student model is distilled, which uses only the time-series data as an input, while implicitly preserving topological features.

* Engineering Applications of Artificial Intelligence 130, 107719

Enhancing Accuracy and Parameter-Efficiency of Neural Representations for Network Parameterization

Jun 29, 2024

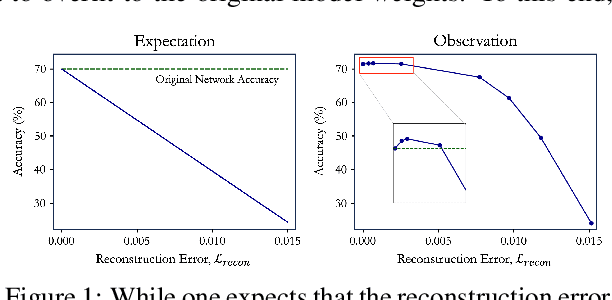

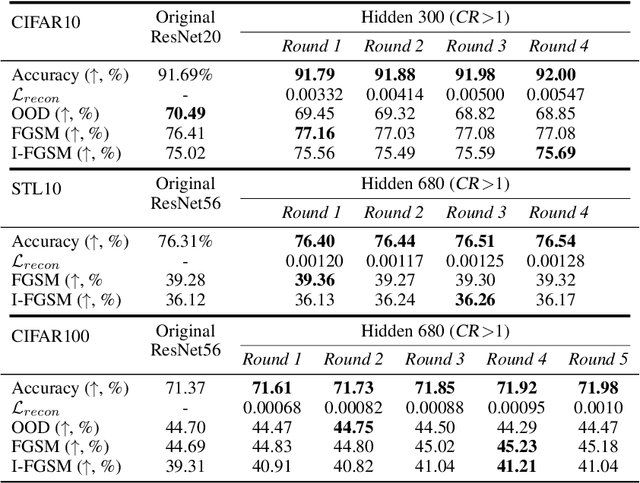

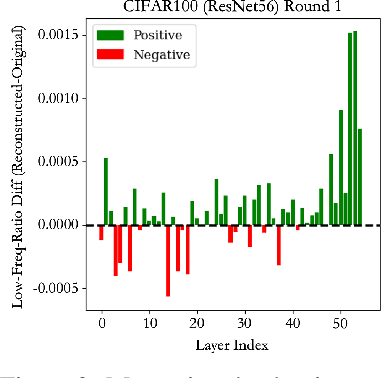

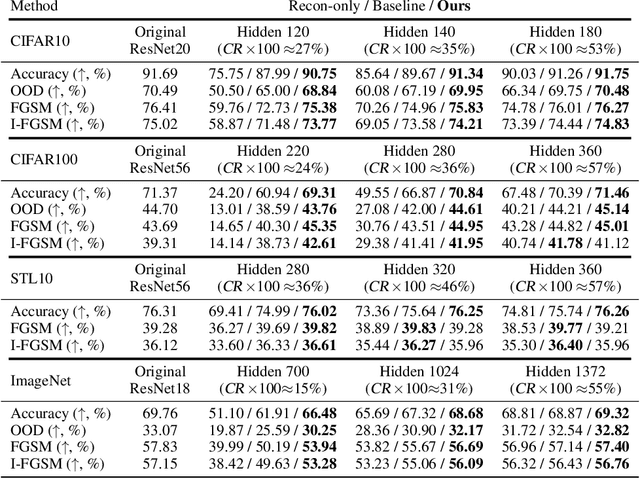

Abstract:In this work, we investigate the fundamental trade-off regarding accuracy and parameter efficiency in the parameterization of neural network weights using predictor networks. We present a surprising finding that, when recovering the original model accuracy is the sole objective, it can be achieved effectively through the weight reconstruction objective alone. Additionally, we explore the underlying factors for improving weight reconstruction under parameter-efficiency constraints, and propose a novel training scheme that decouples the reconstruction objective from auxiliary objectives such as knowledge distillation, which leads to significant improvements compared to state-of-the-art approaches. Finally, these results pave the way for more practical scenarios, where one needs to achieve improvements on both model accuracy and predictor network parameter-efficiency simultaneously.

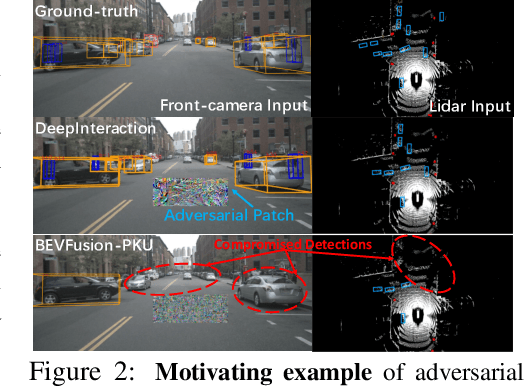

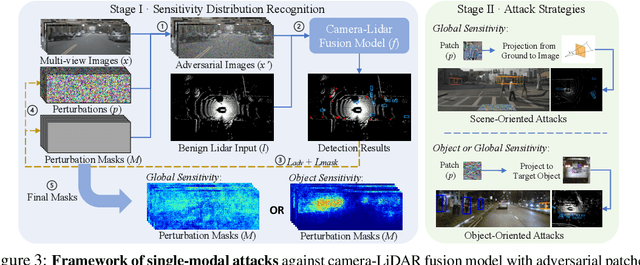

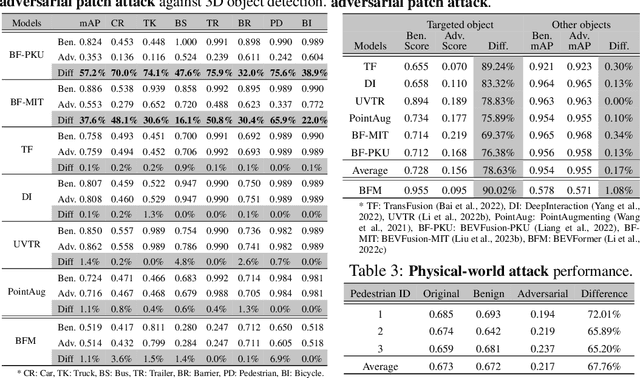

Fusion is Not Enough: Single-Modal Attacks to Compromise Fusion Models in Autonomous Driving

Apr 28, 2023

Abstract:Multi-sensor fusion (MSF) is widely adopted for perception in autonomous vehicles (AVs), particularly for the task of 3D object detection with camera and LiDAR sensors. The rationale behind fusion is to capitalize on the strengths of each modality while mitigating their limitations. The exceptional and leading performance of fusion models has been demonstrated by advanced deep neural network (DNN)-based fusion techniques. Fusion models are also perceived as more robust to attacks compared to single-modal ones due to the redundant information in multiple modalities. In this work, we challenge this perspective with single-modal attacks that target the camera modality, which is considered less significant in fusion but more affordable for attackers. We argue that the weakest link of fusion models depends on their most vulnerable modality, and propose an attack framework that targets advanced camera-LiDAR fusion models with adversarial patches. Our approach employs a two-stage optimization-based strategy that first comprehensively assesses vulnerable image areas under adversarial attacks, and then applies customized attack strategies to different fusion models, generating deployable patches. Evaluations with five state-of-the-art camera-LiDAR fusion models on a real-world dataset show that our attacks successfully compromise all models. Our approach can either reduce the mean average precision (mAP) of detection performance from 0.824 to 0.353 or degrade the detection score of the target object from 0.727 to 0.151 on average, demonstrating the effectiveness and practicality of our proposed attack framework.

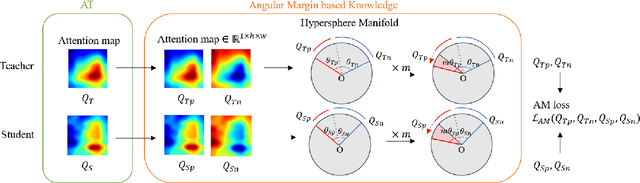

Leveraging Angular Distributions for Improved Knowledge Distillation

Feb 27, 2023

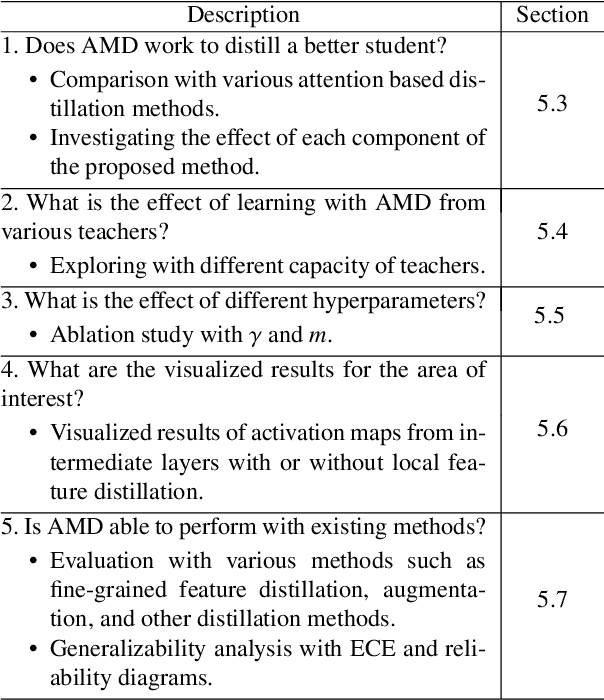

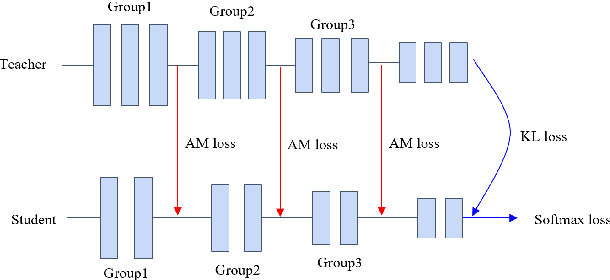

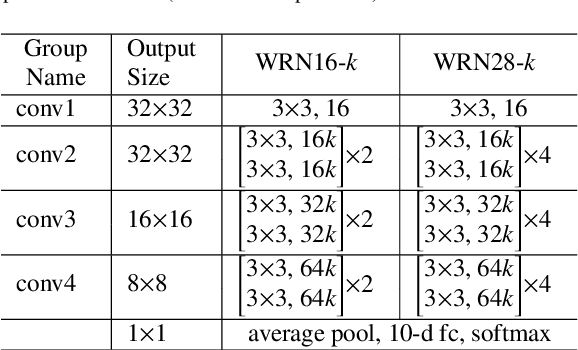

Abstract:Knowledge distillation as a broad class of methods has led to the development of lightweight and memory efficient models, using a pre-trained model with a large capacity (teacher network) to train a smaller model (student network). Recently, additional variations for knowledge distillation, utilizing activation maps of intermediate layers as the source of knowledge, have been studied. Generally, in computer vision applications, it is seen that the feature activation learned by a higher capacity model contains richer knowledge, highlighting complete objects while focusing less on the background. Based on this observation, we leverage the dual ability of the teacher to accurately distinguish between positive (relevant to the target object) and negative (irrelevant) areas. We propose a new loss function for distillation, called angular margin-based distillation (AMD) loss. AMD loss uses the angular distance between positive and negative features by projecting them onto a hypersphere, motivated by the near angular distributions seen in many feature extractors. Then, we create a more attentive feature that is angularly distributed on the hypersphere by introducing an angular margin to the positive feature. Transferring such knowledge from the teacher network enables the student model to harness the higher discrimination of positive and negative features for the teacher, thus distilling superior student models. The proposed method is evaluated for various student-teacher network pairs on four public datasets. Furthermore, we show that the proposed method has advantages in compatibility with other learning techniques, such as using fine-grained features, augmentation, and other distillation methods.

* Neurocomputing, Volume 518, 21 January 2023, Pages 466-481

Understanding the Role of Mixup in Knowledge Distillation: An Empirical Study

Nov 09, 2022

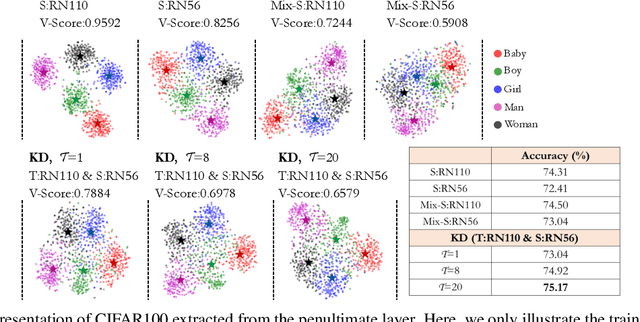

Abstract:Mixup is a popular data augmentation technique based on creating new samples by linear interpolation between two given data samples, to improve both the generalization and robustness of the trained model. Knowledge distillation (KD), on the other hand, is widely used for model compression and transfer learning, which involves using a larger network's implicit knowledge to guide the learning of a smaller network. At first glance, these two techniques seem very different; however, we found that "smoothness" is the connecting link between the two and is also a crucial attribute in understanding KD's interplay with mixup. Although many mixup variants and distillation methods have been proposed, much remains to be understood regarding the role of mixup in knowledge distillation. In this paper, we present a detailed empirical study on various important dimensions of compatibility between mixup and knowledge distillation. We also scrutinize the behavior of the networks trained with mixup in the light of knowledge distillation through extensive analysis, visualizations, and comprehensive experiments on image classification. Finally, based on our findings, we suggest improved strategies to guide the student network to enhance its effectiveness. Additionally, the findings of this study provide insightful suggestions to researchers and practitioners who commonly use techniques from KD. Our code is available at https://github.com/hchoi71/MIX-KD.
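The two ingredients named in this abstract can be sketched in a few lines. The example below is a generic illustration, not code from the paper or its repository: `mixup`, `softmax`, and `kd_loss` are hypothetical names, the temperature default is an arbitrary assumption, and the loss is a plain KL divergence between temperature-softened teacher and student distributions, without the scaling factors or cross-entropy term that practical KD setups often add.

```python
import math


def mixup(x1, y1, x2, y2, lam):
    """Linearly interpolate two samples and their (one-hot) labels.

    lam in [0, 1] is the mixing weight given to the first sample.
    """
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives a smoother output."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
```

Both operations replace a hard one-hot target with a softer distribution: mixup does so by blending labels, and KD by using the teacher's softened output. This is the "smoothness" link the abstract refers to.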

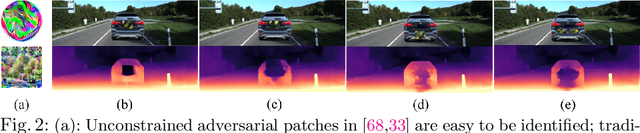

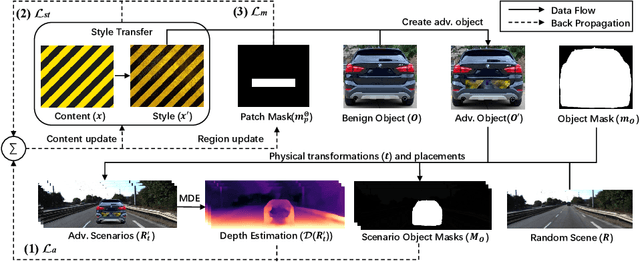

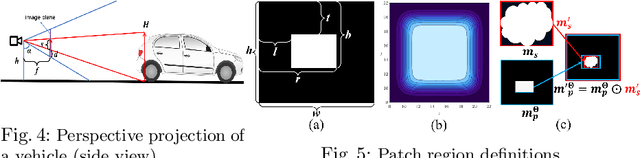

Physical Attack on Monocular Depth Estimation with Optimal Adversarial Patches

Jul 11, 2022

Abstract:Deep learning has substantially boosted the performance of Monocular Depth Estimation (MDE), a critical component in fully vision-based autonomous driving (AD) systems (e.g., Tesla and Toyota). In this work, we develop an attack against learning-based MDE. In particular, we use an optimization-based method to systematically generate stealthy physical-object-oriented adversarial patches to attack depth estimation. We balance the stealth and effectiveness of our attack with object-oriented adversarial design, sensitive region localization, and natural style camouflage. Using real-world driving scenarios, we evaluate our attack on concurrent MDE models and a representative downstream task for AD (i.e., 3D object detection). Experimental results show that our method can generate stealthy, effective, and robust adversarial patches for different target objects and models and achieves more than 6 meters mean depth estimation error and 93% attack success rate (ASR) in object detection with a patch of 1/9 of the vehicle's rear area. Field tests on three different driving routes with a real vehicle indicate that we cause over 6 meters mean depth estimation error and reduce the object detection rate from 90.70% to 5.16% in continuous video frames.
