Jayaraman J. Thiagarajan

Lawrence Livermore National Laboratory, Livermore, CA

Multi-fidelity Reinforcement Learning Control for Complex Dynamical Systems

Apr 08, 2025

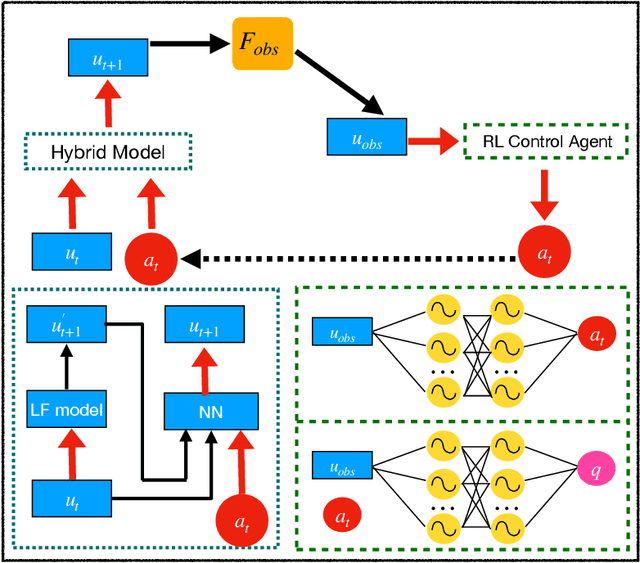

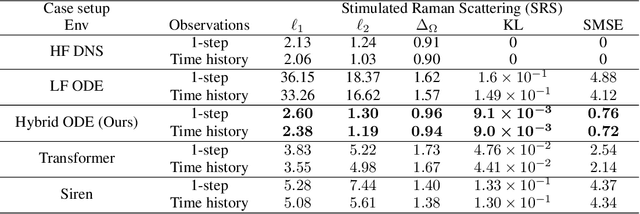

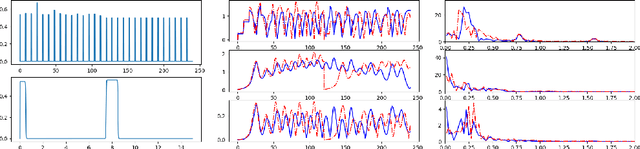

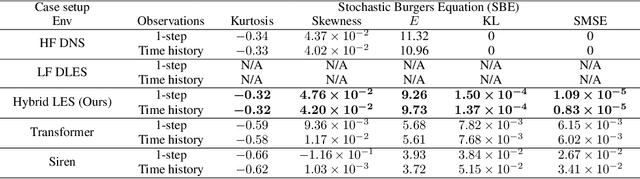

Abstract: Controlling instabilities in complex dynamical systems is a challenging problem in scientific and engineering applications. Deep reinforcement learning (DRL) has shown promising results across a range of scientific applications. The many-query nature of control tasks requires many interactions with real environments governed by the underlying physics. However, experimental data are usually sparse, and complex dynamics are expensive to simulate. Controlling a surrogate model instead could mitigate the computational cost. However, a fast learning-based model trained offline struggles to produce accurate pointwise dynamics when the dynamics are chaotic. To bridge this gap, this work proposes a multi-fidelity reinforcement learning (MFRL) framework that leverages differentiable hybrid models for control tasks, where a physics-based hybrid model is corrected by limited high-fidelity data. We also propose a spectrum-based reward function for RL. The effectiveness of the proposed framework is demonstrated on two complex dynamical systems in physics. The statistics of the MFRL control results match those computed from many-query evaluations of the high-fidelity environments and outperform other state-of-the-art baselines.

Leveraging Registers in Vision Transformers for Robust Adaptation

Jan 08, 2025

Abstract: Vision Transformers (ViTs) have shown success across a variety of tasks due to their ability to capture global image representations. Recent studies have identified the existence of high-norm tokens in ViTs, which can interfere with unsupervised object discovery. To address this, the use of "registers", additional tokens that isolate high-norm patch tokens while capturing global image-level information, has been proposed. While registers have been studied extensively for object discovery, their generalization properties, particularly in out-of-distribution (OOD) scenarios, remain underexplored. In this paper, we examine the utility of register token embeddings in providing additional features for improving generalization and anomaly rejection. To that end, we propose a simple method that combines the special CLS token embedding commonly employed in ViTs with the average-pooled register embeddings to create feature representations, which are subsequently used for training a downstream classifier. We find that this enhances OOD generalization and anomaly rejection while maintaining in-distribution (ID) performance. Extensive experiments across multiple ViT backbones trained with and without registers reveal consistent improvements of 2-4% in top-1 OOD accuracy and a 2-3% reduction in false positive rates for anomaly detection. Importantly, these gains are achieved without additional computational overhead.
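The feature construction described in this abstract can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that "combines" means concatenation, and the function name `pool_features` and the toy 3-dimensional embeddings are hypothetical.

```python
def pool_features(cls_token, register_tokens):
    """Concatenate the CLS embedding with the mean of the register embeddings.

    Assumption: the abstract only says the two are "combined";
    concatenation is one plausible reading.
    """
    dim = len(cls_token)
    count = len(register_tokens)
    # Average-pool the register tokens, one embedding dimension at a time.
    pooled = [sum(tok[d] for tok in register_tokens) / count for d in range(dim)]
    return list(cls_token) + pooled


# Toy embeddings standing in for the outputs of a ViT with two registers.
cls_token = [0.5, -1.0, 2.0]
register_tokens = [[1.0, 0.0, 3.0], [3.0, 2.0, 1.0]]

features = pool_features(cls_token, register_tokens)
print(features)  # feature vector that would be passed to a downstream classifier
```

The resulting vector has twice the embedding dimension and would serve as the input to the downstream classifier mentioned in the abstract.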

On The Role of Prompt Construction In Enhancing Efficacy and Efficiency of LLM-Based Tabular Data Generation

Sep 06, 2024

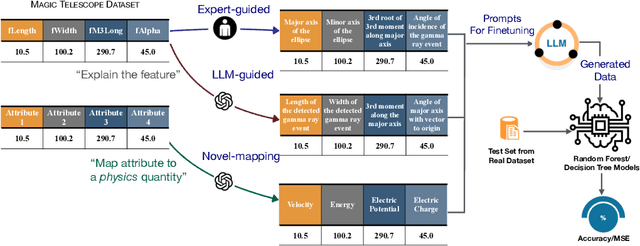

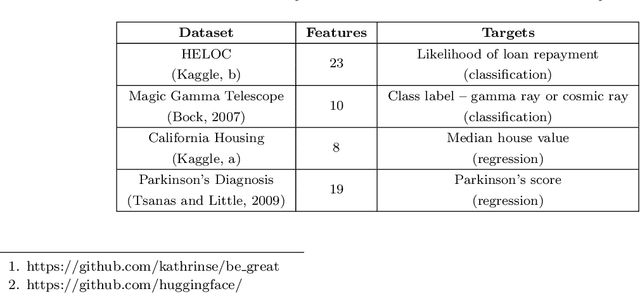

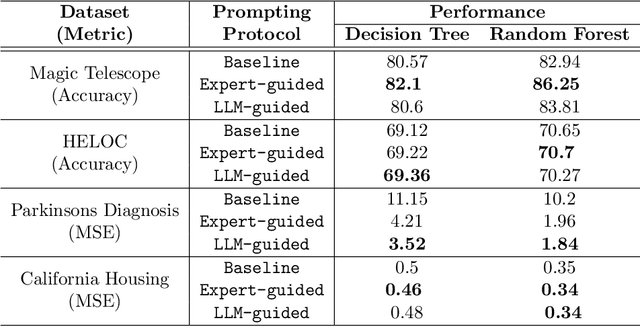

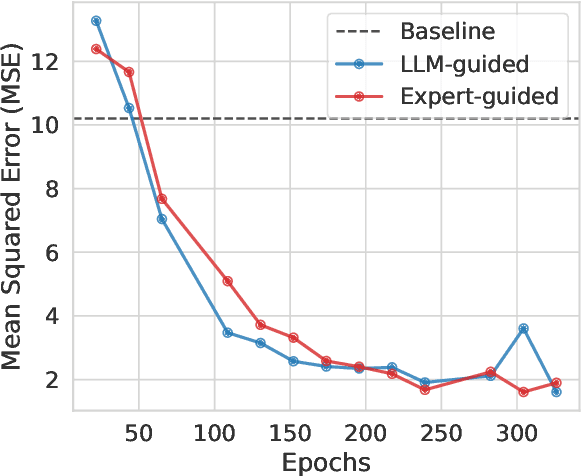

Abstract: LLM-based data generation for real-world tabular data can be challenged by the lack of sufficient semantic context in feature names used to describe columns. We hypothesize that enriching prompts with domain-specific insights can improve both the quality and efficiency of data generation. To test this hypothesis, we explore three prompt construction protocols: Expert-guided, LLM-guided, and Novel-Mapping. Through empirical studies with the recently proposed GReaT framework, we find that context-enriched prompts lead to significantly improved data generation quality and training efficiency.
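To make the idea of context-enriched prompts concrete, the sketch below serializes one table row as text, first with the raw column names and then with more descriptive ones. It assumes a "feature is value" sentence format in the spirit of GReaT-style textual encoding; the helper `encode_row`, the column names, and the descriptive mapping are all hypothetical and do not reproduce any of the three protocols exactly.

```python
def encode_row(row, name_map=None):
    """Serialize a table row as comma-separated "<feature> is <value>" clauses.

    name_map optionally replaces terse column names with descriptive ones,
    for example names supplied by a domain expert (hypothetical here).
    """
    name_map = name_map or {}
    clauses = [f"{name_map.get(col, col)} is {val}" for col, val in row.items()]
    return ", ".join(clauses)


# A row whose column names carry little semantic context.
row = {"f1": 37, "f2": "yes"}
expert_names = {"f1": "age in years", "f2": "smoker"}

baseline_prompt = encode_row(row)
enriched_prompt = encode_row(row, expert_names)
print(baseline_prompt)
print(enriched_prompt)
```

Only the text given to the language model changes; the underlying row values are identical in both prompts.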

DECIDER: Leveraging Foundation Model Priors for Improved Model Failure Detection and Explanation

Aug 01, 2024

Abstract: Reliably detecting when a deployed machine learning model is likely to fail on a given input is crucial for ensuring safe operation. In this work, we propose DECIDER (Debiasing Classifiers to Identify Errors Reliably), a novel approach that leverages priors from large language models (LLMs) and vision-language models (VLMs) to detect failures in image classification models. DECIDER utilizes LLMs to specify task-relevant core attributes, constructs a "debiased" version of the classifier by aligning its visual features to these core attributes using a VLM, and detects potential failure by measuring disagreement between the original and debiased models. In addition to proactively identifying samples on which the model would fail, DECIDER also provides human-interpretable explanations for failure through a novel attribute-ablation strategy. Through extensive experiments across diverse benchmarks spanning subpopulation shifts (spurious correlations, class imbalance) and covariate shifts (synthetic corruptions, domain shifts), DECIDER consistently achieves state-of-the-art failure detection performance, significantly outperforming baselines in terms of the overall Matthews correlation coefficient as well as failure and success recall. Our code is available at https://github.com/kowshikthopalli/DECIDER/
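The disagreement signal and the Matthews correlation coefficient (MCC) mentioned in this abstract can be illustrated with a small sketch. It assumes the simplest possible disagreement rule (flag a sample when the two models predict different labels), which may differ from the measure used in DECIDER; the function names and toy predictions are hypothetical.

```python
import math


def flag_failures(orig_preds, debiased_preds):
    """Flag a sample as a likely failure when the two models disagree.

    Assumption: hard-label disagreement; the paper may use a softer measure.
    """
    return [o != d for o, d in zip(orig_preds, debiased_preds)]


def mcc(actual, predicted):
    """Matthews correlation coefficient for two lists of booleans."""
    tp = sum(1 for a, p in zip(actual, predicted) if a and p)
    tn = sum(1 for a, p in zip(actual, predicted) if not a and not p)
    fp = sum(1 for a, p in zip(actual, predicted) if not a and p)
    fn = sum(1 for a, p in zip(actual, predicted) if a and not p)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Convention: return 0 when any marginal count is zero.
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Toy predictions from an original and a "debiased" classifier.
labels = ["cat", "cat", "dog", "dog"]
orig_preds = ["cat", "dog", "cat", "bird"]
debiased_preds = ["cat", "cat", "cat", "dog"]

actual_failures = [p != y for p, y in zip(orig_preds, labels)]
flagged = flag_failures(orig_preds, debiased_preds)
score = mcc(actual_failures, flagged)
print(flagged, round(score, 3))
```

In this toy case the detector catches two of the three true failures with no false alarms. MCC rewards that balance across all four confusion-matrix cells, which is useful when failures are much rarer than successes.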

Enhancing Accuracy and Parameter-Efficiency of Neural Representations for Network Parameterization

Jun 29, 2024

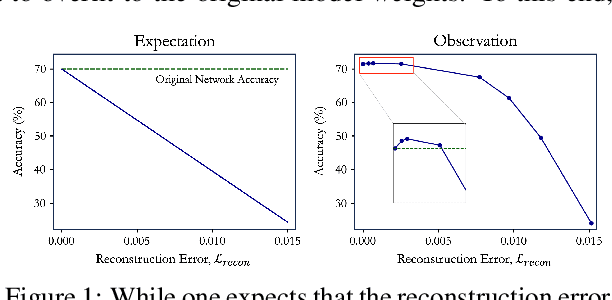

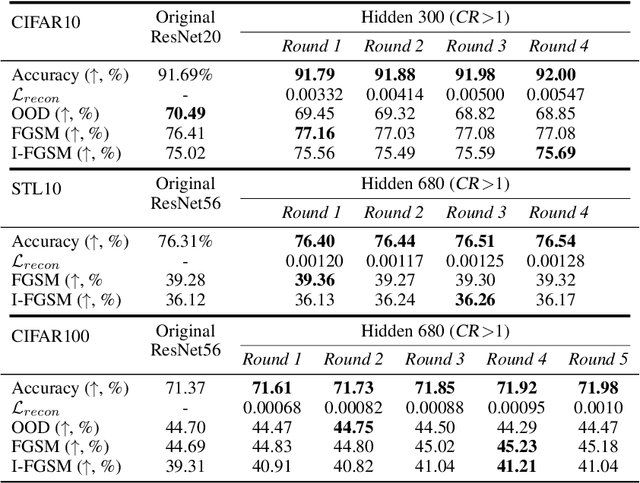

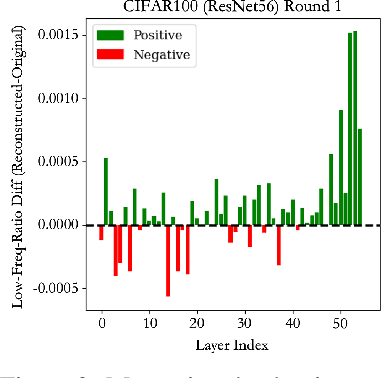

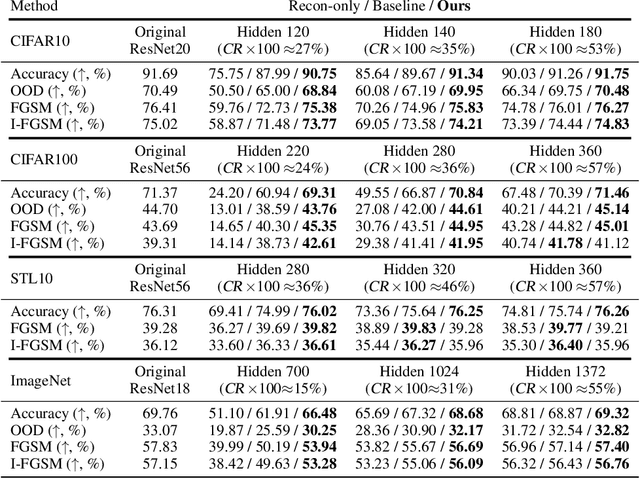

Abstract: In this work, we investigate the fundamental trade-off between accuracy and parameter efficiency in the parameterization of neural network weights using predictor networks. We present a surprising finding that, when recovering the original model accuracy is the sole objective, it can be achieved effectively through the weight reconstruction objective alone. Additionally, we explore the underlying factors for improving weight reconstruction under parameter-efficiency constraints, and propose a novel training scheme that decouples the reconstruction objective from auxiliary objectives such as knowledge distillation, which leads to significant improvements compared to state-of-the-art approaches. Finally, these results pave the way for more practical scenarios, where one needs to achieve improvements in both model accuracy and predictor network parameter efficiency simultaneously.

On the Use of Anchoring for Training Vision Models

Jun 01, 2024

Abstract: Anchoring is a recent, architecture-agnostic principle for training deep neural networks that has been shown to significantly improve uncertainty estimation, calibration, and extrapolation capabilities. In this paper, we systematically explore anchoring as a general protocol for training vision models, providing fundamental insights into its training and inference processes and their implications for generalization and safety. Despite its promise, we identify a critical problem in anchored training that can lead to an increased risk of learning undesirable shortcuts, thereby limiting its generalization capabilities. To address this, we introduce a new anchored training protocol that employs a simple regularizer to mitigate this issue and significantly enhances generalization. We empirically evaluate our proposed approach across datasets and architectures of varying scales and complexities, demonstrating substantial performance gains in generalization and safety metrics compared to the standard training protocol.
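As a rough illustration of the anchoring principle, the sketch below re-parameterizes an input as the pair (anchor, input minus anchor) and aggregates predictions over several anchors at inference time. This follows the general description of anchoring in the stochastic data centering literature rather than the specific protocol or regularizer proposed in this paper; `toy_model` is a hypothetical stand-in for a trained anchored network.

```python
import statistics


def anchor_input(x, anchor):
    """Represent x relative to an anchor as [anchor, x - anchor]."""
    residual = [xi - ai for xi, ai in zip(x, anchor)]
    return list(anchor) + residual


def toy_model(z):
    """Hypothetical anchored network: a fixed function of (anchor, residual).

    The small anchor-dependent term mimics the prediction variation that a
    real anchored model shows across different anchors.
    """
    half = len(z) // 2
    anchor, residual = z[:half], z[half:]
    return sum(a + r for a, r in zip(anchor, residual)) + 0.1 * sum(anchor)


def predict_with_uncertainty(x, anchors):
    """Average predictions over anchors; their spread serves as uncertainty."""
    preds = [toy_model(anchor_input(x, a)) for a in anchors]
    return statistics.mean(preds), statistics.pstdev(preds)


x = [1.0, 2.0]
anchors = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]  # e.g. drawn from training data
mean_pred, uncertainty = predict_with_uncertainty(x, anchors)
print(mean_pred, uncertainty)
```

In practice the anchors would be sampled from the training distribution and the model trained on such pairs, so that disagreement across anchors reflects how unfamiliar an input is.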

'Eyes of a Hawk and Ears of a Fox': Part Prototype Network for Generalized Zero-Shot Learning

Apr 12, 2024

Abstract: Current approaches in Generalized Zero-Shot Learning (GZSL) are built upon base models which consider only a single class attribute vector representation over the entire image. This is an oversimplification of the process of novel category recognition, where different regions of the image may have properties from different seen classes and thus have different predominant attributes. With this in mind, we take a fundamentally different approach: a pre-trained Vision-Language detector (VINVL) sensitive to attribute information is employed to efficiently obtain region features. A learned function maps the region features to region-specific attribute attention used to construct class part prototypes. We conduct experiments on a popular GZSL benchmark consisting of the CUB, SUN, and AWA2 datasets where our proposed Part Prototype Network (PPN) achieves promising results when compared with other popular base models. Corresponding ablation studies and analysis show that our approach is highly practical and has a distinct advantage over global attribute attention when localized proposals are available.

Accurate and Scalable Estimation of Epistemic Uncertainty for Graph Neural Networks

Jan 07, 2024

Abstract: While graph neural networks (GNNs) are widely used for node and graph representation learning tasks, the reliability of GNN uncertainty estimates under distribution shifts remains relatively under-explored. Indeed, while post-hoc calibration strategies can be used to improve in-distribution calibration, they need not also improve calibration under distribution shift. However, techniques which produce GNNs with better intrinsic uncertainty estimates are particularly valuable, as they can always be combined with post-hoc strategies later. Therefore, in this work, we propose G-$\Delta$UQ, a novel training framework designed to improve intrinsic GNN uncertainty estimates. Our framework adapts the principle of stochastic data centering to graph data through novel graph anchoring strategies, and is able to support partially stochastic GNNs. While the prevailing wisdom is that fully stochastic networks are necessary to obtain reliable estimates, we find that the functional diversity induced by our anchoring strategies when sampling hypotheses renders this unnecessary and allows us to support G-$\Delta$UQ on pretrained models. Indeed, through extensive evaluation under covariate, concept, and graph size shifts, we show that G-$\Delta$UQ leads to better calibrated GNNs for node and graph classification. Further, it also improves performance on the uncertainty-based tasks of out-of-distribution detection and generalization gap estimation. Overall, our work provides insights into uncertainty estimation for GNNs, and demonstrates the utility of G-$\Delta$UQ in obtaining reliable estimates.

Transformer-Powered Surrogates Close the ICF Simulation-Experiment Gap with Extremely Limited Data

Dec 06, 2023

Abstract: Recent advances in machine learning, specifically transformer architecture, have led to significant advancements in commercial domains. These powerful models have demonstrated superior capability to learn complex relationships and often generalize better to new data and problems. This paper presents a novel transformer-powered approach for enhancing prediction accuracy in multi-modal output scenarios, where sparse experimental data is supplemented with simulation data. The proposed approach integrates transformer-based architecture with a novel graph-based hyper-parameter optimization technique. The resulting system not only effectively reduces simulation bias, but also achieves superior prediction accuracy compared to the prior method. We demonstrate the efficacy of our approach on inertial confinement fusion experiments, where only 10 shots of real-world data are available, as well as synthetic versions of these experiments.

PAGER: A Framework for Failure Analysis of Deep Regression Models

Sep 20, 2023

Abstract: Safe deployment of AI models requires proactive detection of potential prediction failures to prevent costly errors. While failure detection in classification problems has received significant attention, characterizing failure modes in regression tasks is more complicated and less explored. Existing approaches rely on epistemic uncertainties or feature inconsistency with the training distribution to characterize model risk. However, we show that uncertainties are necessary but insufficient to accurately characterize failure, owing to the various sources of error. In this paper, we propose PAGER (Principled Analysis of Generalization Errors in Regressors), a framework to systematically detect and characterize failures in deep regression models. Built upon the recently proposed idea of anchoring in deep models, PAGER unifies both epistemic uncertainties and novel, complementary non-conformity scores to organize samples into different risk regimes, thereby providing a comprehensive analysis of model errors. Additionally, we introduce novel metrics for evaluating failure detectors in regression tasks. We demonstrate the effectiveness of PAGER on synthetic and real-world benchmarks. Our results highlight the capability of PAGER to identify regions of accurate generalization and detect failure cases in out-of-distribution and out-of-support scenarios.
