Weng-Keen Wong

Biharmonic Distance of Graphs and its Higher-Order Variants: Theoretical Properties with Applications to Centrality and Clustering

Jun 04, 2024

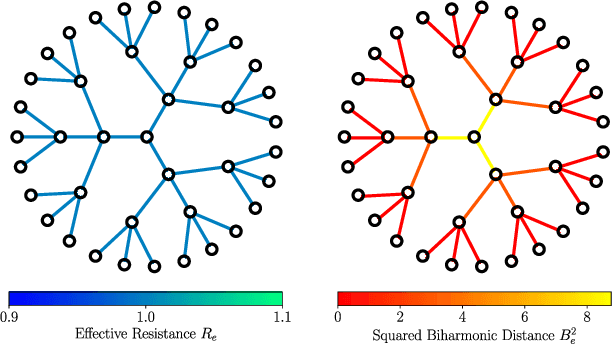

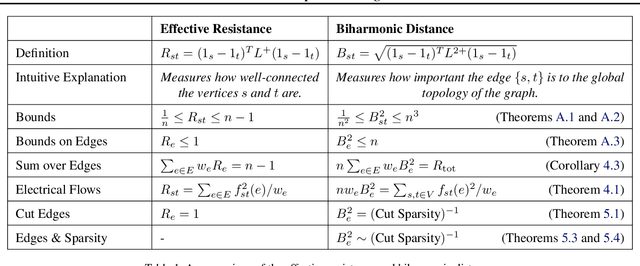

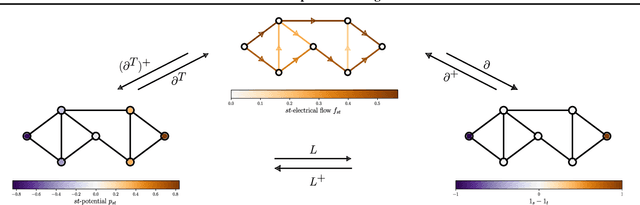

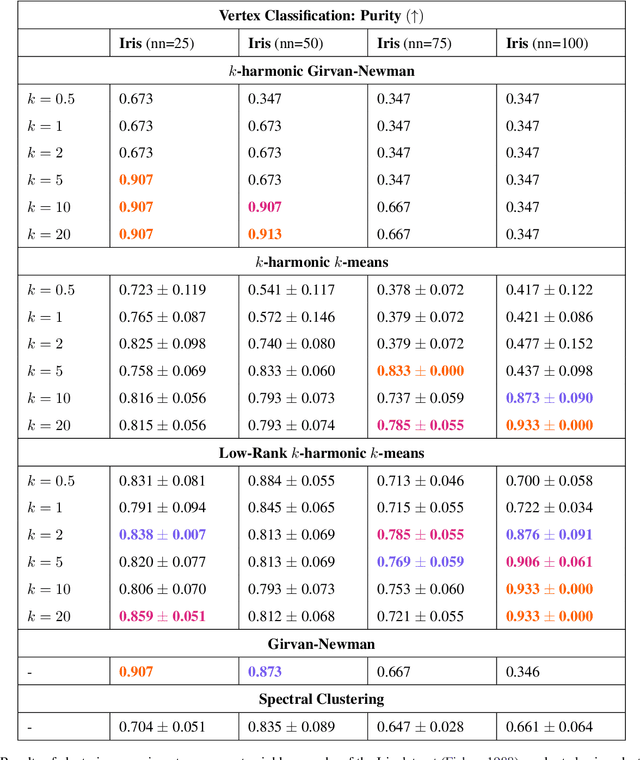

Abstract: Effective resistance is a distance between vertices of a graph that is both theoretically interesting and useful in applications. We study a variant of effective resistance called the biharmonic distance. While the effective resistance measures how well-connected two vertices are, we prove several theoretical results supporting the idea that the biharmonic distance measures how important an edge is to the global topology of the graph. Our theoretical results connect the biharmonic distance to well-known measures of connectivity of a graph like its total resistance and sparsity. Based on these results, we introduce two clustering algorithms using the biharmonic distance. Finally, we introduce a further generalization of the biharmonic distance that we call the $k$-harmonic distance. We empirically study the utility of biharmonic and $k$-harmonic distance for edge centrality and graph clustering.
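A minimal sketch, not the authors' code: effective resistance and squared biharmonic distance are commonly written as quadratic forms in the pseudoinverse Laplacian $L^+$, namely $(e_u - e_v)^T L^+ (e_u - e_v)$ and $(e_u - e_v)^T (L^+)^2 (e_u - e_v)$. The function below assumes the $k$-harmonic variant simply replaces the exponent with $k$; the paper's exact definition and normalization may differ. The names `laplacian` and `k_harmonic_sq` are hypothetical.

```python
import numpy as np

def laplacian(n, edges):
    """Combinatorial Laplacian of an unweighted, undirected graph on n vertices."""
    L = np.zeros((n, n))
    for u, v in edges:
        L[u, u] += 1.0
        L[v, v] += 1.0
        L[u, v] -= 1.0
        L[v, u] -= 1.0
    return L

def k_harmonic_sq(L, u, v, k):
    """Quadratic form (e_u - e_v)^T (L^+)^k (e_u - e_v).

    k=1 gives effective resistance, k=2 the squared biharmonic distance.
    Assumption: larger k follows the same pattern.
    """
    L_pinv = np.linalg.pinv(L)           # Moore-Penrose pseudoinverse of L
    d = np.zeros(L.shape[0])
    d[u], d[v] = 1.0, -1.0               # indicator difference e_u - e_v
    return float(d @ np.linalg.matrix_power(L_pinv, k) @ d)

# Path graph 0 - 1 - 2
L = laplacian(3, [(0, 1), (1, 2)])
resistance_02 = k_harmonic_sq(L, 0, 2, 1)     # two unit resistors in series
biharmonic_sq_01 = k_harmonic_sq(L, 0, 1, 2)
```

On the three-vertex path, the effective resistance between the endpoints is 2, matching two unit resistors in series. The dense pseudoinverse is only practical for small graphs.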

Unsupervised Contrastive Learning for Robust RF Device Fingerprinting Under Time-Domain Shift

Mar 06, 2024

Abstract: Radio Frequency (RF) device fingerprinting has been recognized as a potential technology for enabling automated wireless device identification and classification. However, it faces a key challenge due to the domain shift that could arise from variations in the channel conditions and environmental settings, potentially degrading the accuracy of RF-based device classification when testing and training data are collected in different domains. This paper introduces a novel solution that leverages contrastive learning to mitigate this domain shift problem. Contrastive learning, a state-of-the-art self-supervised learning approach from deep learning, learns a distance metric such that positive pairs are closer (i.e., more similar) in the learned metric space than negative pairs. When applied to RF fingerprinting, our model treats RF signals from the same transmission as positive pairs and those from different transmissions as negative pairs. Through experiments on wireless and wired RF datasets collected over several days, we demonstrate that our contrastive learning approach captures domain-invariant features, diminishing the effects of domain-specific variations. Our results show large and consistent improvements in accuracy (10.8% to 27.8%) over baseline models, thus underscoring the effectiveness of contrastive learning in improving device classification under domain shift.
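The positive/negative pair idea can be illustrated with an InfoNCE-style loss. This is a sketch under assumptions: the abstract does not state which contrastive loss, encoder, or temperature the authors use, so `info_nce` and its default `temperature` are hypothetical, and the embeddings here are plain vectors standing in for encoded RF signal segments.

```python
import numpy as np

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for one anchor embedding.

    anchor, positive: shape (d,); negatives: shape (m, d).
    The loss is low when the anchor is more similar (cosine) to the
    positive than to every negative.
    """
    def unit(x):
        return x / np.linalg.norm(x, axis=-1, keepdims=True)

    a, p, n = unit(anchor), unit(positive), unit(negatives)
    # Similarity to the positive first, then to each negative.
    logits = np.concatenate(([a @ p], n @ a)) / temperature
    logits = logits - logits.max()        # stabilise the softmax
    # Negative log-probability of picking the positive.
    return float(-logits[0] + np.log(np.exp(logits).sum()))

anchor = np.array([1.0, 0.0])
same_transmission = np.array([0.9, 0.1])      # stand-in positive
other_transmissions = np.array([[-1.0, 0.0], [0.0, 1.0]])

loss_good = info_nce(anchor, same_transmission, other_transmissions)
loss_bad = info_nce(anchor, other_transmissions[0],
                    np.array([same_transmission]))
```

Swapping the roles of the positive and a negative raises the loss, which is the signal a contrastive encoder would be trained to reduce.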

Transformer-Powered Surrogates Close the ICF Simulation-Experiment Gap with Extremely Limited Data

Dec 06, 2023

Abstract: Recent advances in machine learning, specifically the transformer architecture, have led to significant advancements in commercial domains. These powerful models have demonstrated superior capability to learn complex relationships and often generalize better to new data and problems. This paper presents a novel transformer-powered approach for enhancing prediction accuracy in multi-modal output scenarios, where sparse experimental data is supplemented with simulation data. The proposed approach integrates a transformer-based architecture with a novel graph-based hyper-parameter optimization technique. The resulting system not only effectively reduces simulation bias, but also achieves superior prediction accuracy compared to the prior method. We demonstrate the efficacy of our approach on inertial confinement fusion experiments, where only 10 shots of real-world data are available, as well as synthetic versions of these experiments.

HiNoVa: A Novel Open-Set Detection Method for Automating RF Device Authentication

May 16, 2023

Abstract: New capabilities in wireless network security have been enabled by deep learning, which leverages patterns in radio frequency (RF) data to identify and authenticate devices. Open-set detection is an area of deep learning that identifies samples captured from new devices during deployment that were not part of the training set. Past work in open-set detection has mostly been applied to independent and identically distributed data such as images. In contrast, RF signal data present a unique set of challenges as the data form a time series with non-linear time dependencies among the samples. We introduce a novel open-set detection approach based on the patterns of the hidden state values within a Convolutional Neural Network (CNN) Long Short-Term Memory (LSTM) model. Our approach greatly improves the Area Under the Precision-Recall Curve on LoRa, Wireless-WiFi, and Wired-WiFi datasets, and hence, can be used successfully to monitor and control unauthorized network access of wireless devices.

Cross-GAN Auditing: Unsupervised Identification of Attribute Level Similarities and Differences between Pretrained Generative Models

Mar 19, 2023

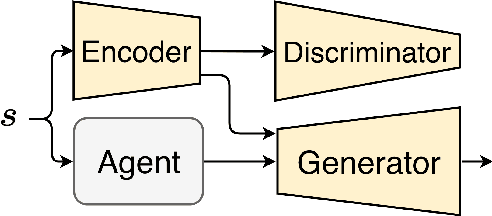

Abstract: Generative Adversarial Networks (GANs) are notoriously difficult to train, especially for complex distributions and with limited data. This has driven the need for tools to audit trained networks in a human-intelligible format, for example, to identify biases or ensure fairness. Existing GAN audit tools are restricted to coarse-grained, model-data comparisons based on summary statistics such as FID or recall. In this paper, we propose an alternative approach that compares a newly developed GAN against a prior baseline. To this end, we introduce Cross-GAN Auditing (xGA) that, given an established "reference" GAN and a newly proposed "client" GAN, jointly identifies intelligible attributes that are either common across both GANs, novel to the client GAN, or missing from the client GAN. This provides both users and model developers an intuitive assessment of similarity and differences between GANs. We introduce novel metrics to evaluate attribute-based GAN auditing approaches and use these metrics to demonstrate quantitatively that xGA outperforms baseline approaches. We also include qualitative results that illustrate the common, novel and missing attributes identified by xGA from GANs trained on a variety of image datasets.

ADL-ID: Adversarial Disentanglement Learning for Wireless Device Fingerprinting Temporal Domain Adaptation

Jan 29, 2023

Abstract: As the journey of 5G standardization is coming to an end, academia and industry have already begun to consider the sixth-generation (6G) wireless networks, with an aim to meet the service demands for the next decade. Deep learning-based RF fingerprinting (DL-RFFP) has recently been recognized as a potential solution for enabling key wireless network applications and services, such as spectrum policy enforcement and network access control. The state-of-the-art DL-RFFP frameworks suffer from a significant performance drop when tested with data drawn from a domain that is different from that used for training. In this paper, we propose ADL-ID, an unsupervised domain adaptation framework that is based on adversarial disentanglement representation to address the temporal domain adaptation for the RFFP task. Our framework has been evaluated on real LoRa and WiFi datasets and showed about a 24% improvement in accuracy when compared to the baseline CNN network on short-term temporal adaptation. It also improves the classification accuracy by up to 9% on long-term temporal adaptation. Furthermore, we release a 5-day, 2.1TB, large-scale WiFi 802.11b dataset collected from 50 Pycom devices to support the research community efforts in developing and validating robust RFFP methods.

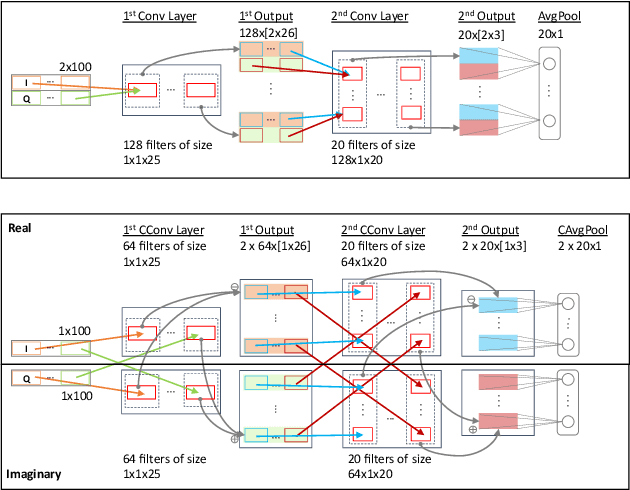

An Analysis of Complex-Valued CNNs for RF Data-Driven Wireless Device Classification

Feb 20, 2022

Abstract: Recent deep neural network-based device classification studies show that complex-valued neural networks (CVNNs) yield higher classification accuracy than real-valued neural networks (RVNNs). Although this improvement is (intuitively) attributed to the complex nature of the input RF data (i.e., IQ symbols), no prior work has taken a closer look into analyzing such a trend in the context of wireless device identification. Our study provides a deeper understanding of this trend using real LoRa and WiFi RF datasets. We perform a deep dive into understanding the impact of (i) the input representation/type and (ii) the architectural layer of the neural network. For the input representation, we considered the IQ as well as the polar coordinates both partially and fully. For the architectural layer, we considered a series of ablation experiments that eliminate parts of the CVNN components. Our results show that CVNNs consistently outperform their RVNN counterparts in the various scenarios mentioned above, indicating that CVNNs are able to make better use of the joint information provided via the in-phase (I) and quadrature (Q) components of the signal.

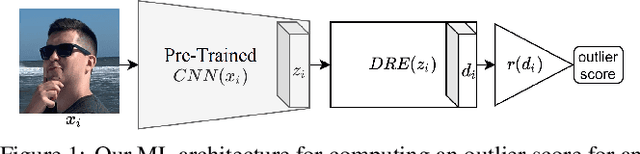

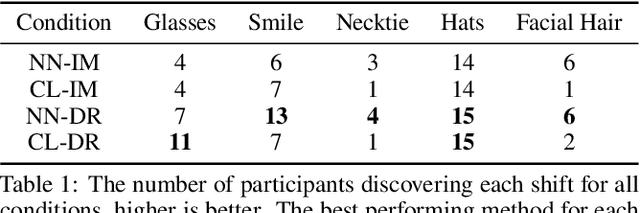

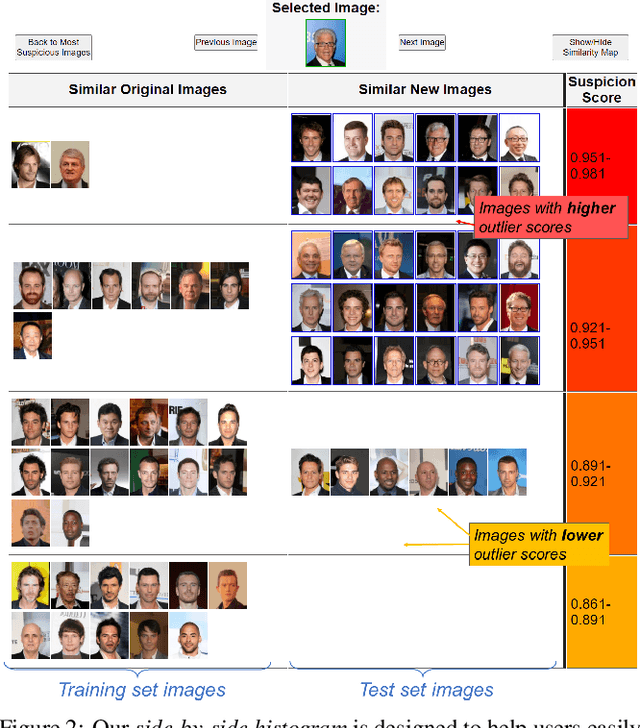

Contrastive Identification of Covariate Shift in Image Data

Aug 19, 2021

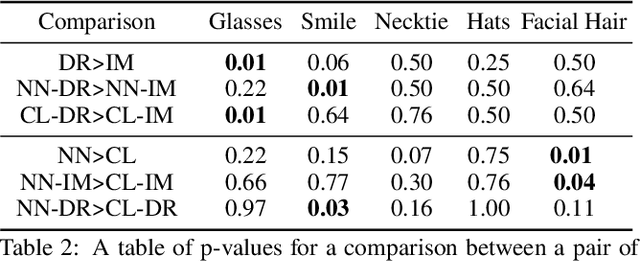

Abstract: Identifying covariate shift is crucial for making machine learning systems robust in the real world and for detecting training data biases that are not reflected in test data. However, detecting covariate shift is challenging, especially when the data consists of high-dimensional images, and when multiple types of localized covariate shift affect different subspaces of the data. Although automated techniques can be used to detect the existence of covariate shift, our goal is to help human users characterize the extent of covariate shift in large image datasets with interfaces that seamlessly integrate information obtained from the detection algorithms. In this paper, we design and evaluate a new visual interface that facilitates the comparison of the local distributions of training and test data. We conduct a quantitative user study on multi-attribute facial data to compare two different learned low-dimensional latent representations (pretrained ImageNet CNN vs. density ratio) and two user analytic workflows (nearest-neighbor vs. cluster-to-cluster). Our results indicate that the latent representation of our density ratio model, combined with a nearest-neighbor comparison, is the most effective at helping humans identify covariate shift.

Counterfactual State Explanations for Reinforcement Learning Agents via Generative Deep Learning

Jan 29, 2021

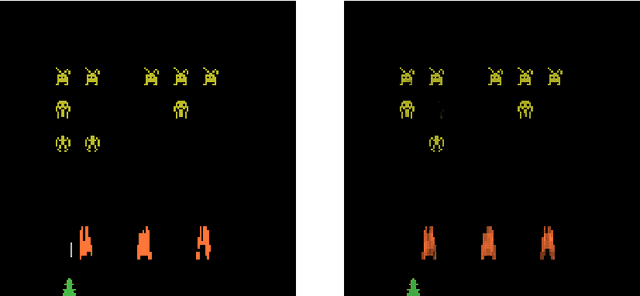

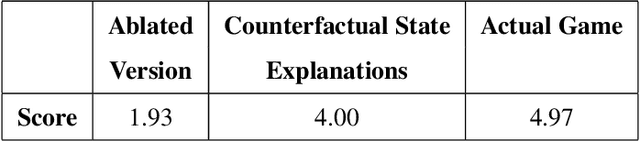

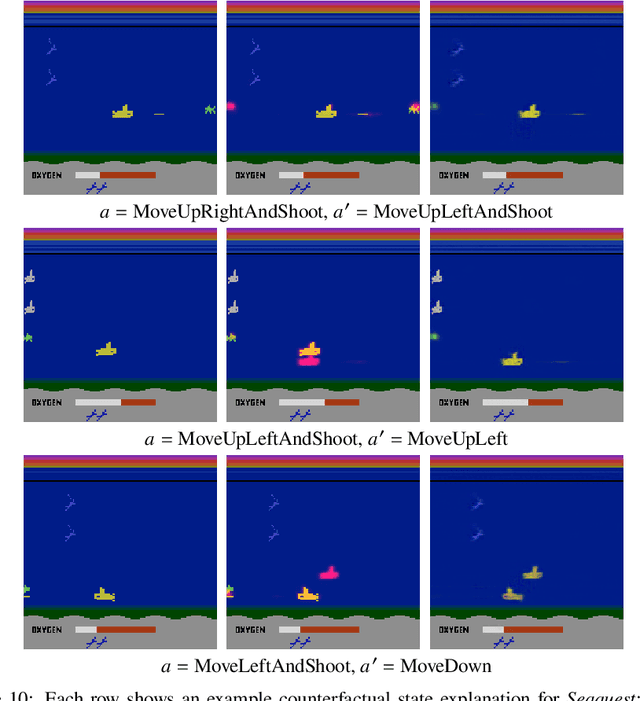

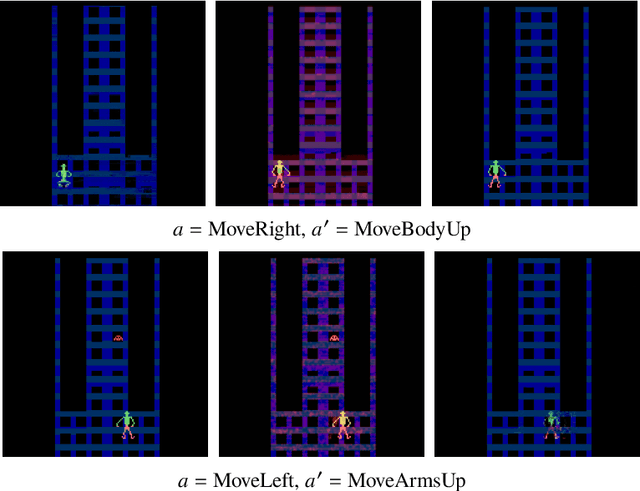

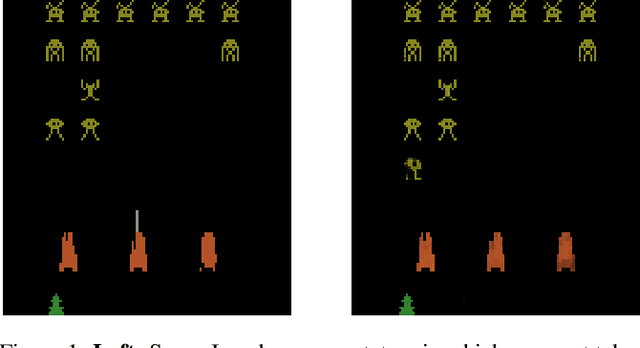

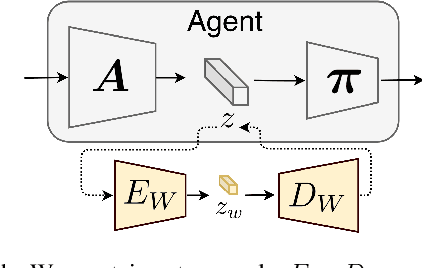

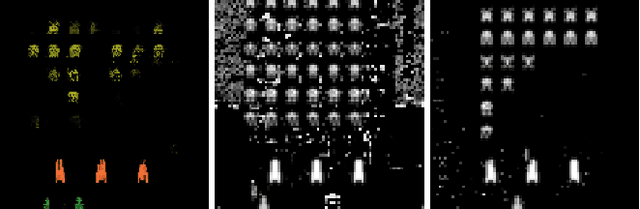

Abstract: Counterfactual explanations, which deal with "why not?" scenarios, can provide insightful explanations of an AI agent's behavior. In this work, we focus on generating counterfactual explanations for deep reinforcement learning (RL) agents which operate in visual input environments like Atari. We introduce counterfactual state explanations, a novel example-based approach to counterfactual explanations based on generative deep learning. Specifically, a counterfactual state illustrates what minimal change is needed to an Atari game image such that the agent chooses a different action. We also evaluate the effectiveness of counterfactual states on human participants who are not machine learning experts. Our first user study investigates whether humans can discern if the counterfactual state explanations are produced by the actual game or produced by a generative deep learning approach. Our second user study investigates whether counterfactual state explanations can help non-expert participants identify a flawed agent; we compare against a baseline approach based on a nearest neighbor explanation which uses images from the actual game. Our results indicate that counterfactual state explanations have sufficient fidelity to the actual game images to enable non-experts to more effectively identify a flawed RL agent compared to the nearest neighbor baseline and to having no explanation at all.

* Full source code available at https://github.com/mattolson93/counterfactual-state-explanations

Counterfactual States for Atari Agents via Generative Deep Learning

Sep 27, 2019

Abstract: Although deep reinforcement learning agents have produced impressive results in many domains, their decision making is difficult to explain to humans. To address this problem, past work has mainly focused on explaining why an action was chosen in a given state. A different type of explanation that is useful is a counterfactual, which deals with "what if?" scenarios. In this work, we introduce the concept of a counterfactual state to help humans gain a better understanding of what would need to change (minimally) in an Atari game image for the agent to choose a different action. We introduce a novel method to create counterfactual states from a generative deep learning architecture. In addition, we evaluate the effectiveness of counterfactual states on human participants who are not machine learning experts. Our user study results suggest that our generated counterfactual states are useful in helping non-expert participants gain a better understanding of an agent's decision making process.
