Stefan Caldararu

Just Add Force for Contact-Rich Robot Policies

Oct 17, 2024

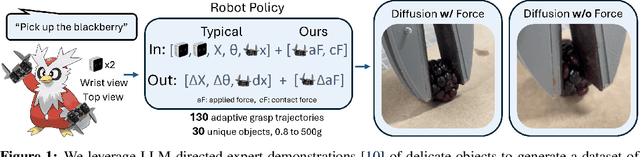

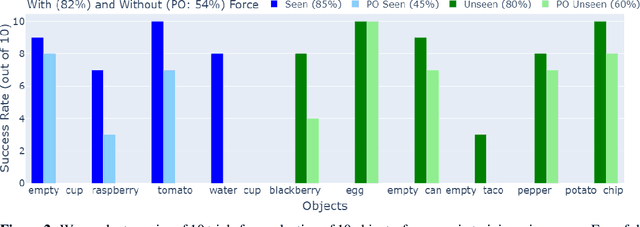

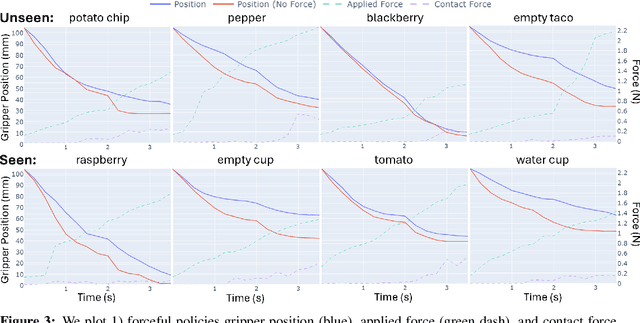

Abstract: Robot trajectories used for learning end-to-end robot policies typically contain end-effector and gripper position, workspace images, and language. Policies learned from such trajectories are unsuitable for delicate grasping, which requires tightly coupled and precise gripper force and gripper position. We collect and make publicly available 130 trajectories with force feedback of successful grasps on 30 unique objects. Our current-based method for sensing force, albeit noisy, is gripper-agnostic and requires no additional hardware. We train and evaluate two diffusion policies: one with the collected force feedback (forceful) and one without (position-only). We find that forceful policies are superior to position-only policies for delicate grasping and are able to generalize to unseen delicate objects, while reducing grasp policy latency by nearly 4x relative to LLM-based methods. With our promising results on limited data, we hope to signal to others to consider investing in collecting force and other such tactile information in new datasets, enabling more robust, contact-rich manipulation in future robot foundation models. Our data, code, models, and videos are viewable at https://justaddforce.github.io/.

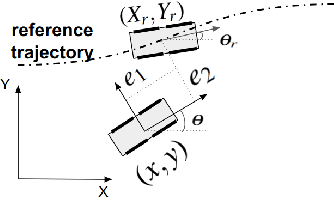

A Study on the Use of Simulation in Synthesizing Path-Following Control Policies for Autonomous Ground Robots

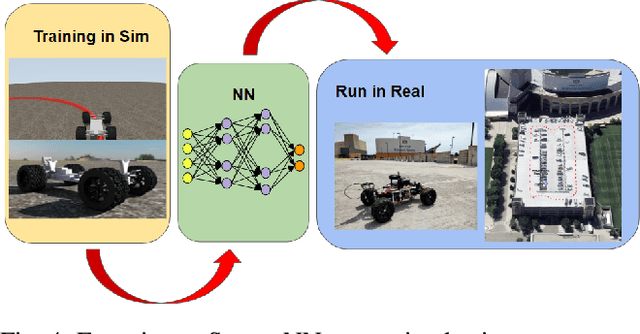

Mar 26, 2024

Abstract: We report results obtained and insights gained while answering the following question: how effective is it to use a simulator to establish path-following control policies for an autonomous ground robot? While the quality of the simulator conditions the answer to this question, we found that for the simulation platform used herein, producing four control policies for path planning was straightforward once a digital twin of the controlled robot was available. The control policies established in simulation and subsequently demonstrated in the real world are PID control, MPC, and two neural network (NN) based controllers. Training the two NN controllers via imitation learning was accomplished expeditiously using seven simple maneuvers: follow three circles clockwise, follow the same circles counter-clockwise, and drive straight. A test randomization process that employs random micro-simulations is used to rank the "goodness" of the four control policies. The policy ranking noted in simulation correlates well with the ranking observed when the control policies were tested in the real world. The simulation platform used is publicly available and BSD3-released as open source; a public Docker image is available for reproducibility studies. It contains a dynamics engine, a sensor simulator, a ROS2 bridge, and a ROS2 autonomy stack, the latter employed both in the simulator and in the real-world experiments.

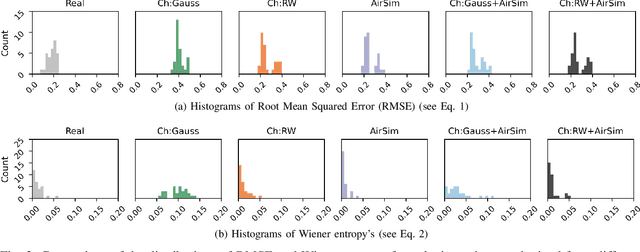

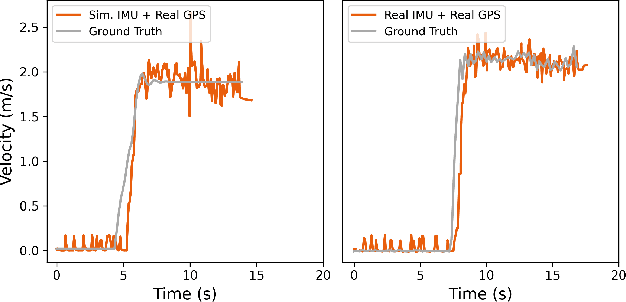

Quantifying the Sim2real Gap for GPS and IMU Sensors

Mar 16, 2024

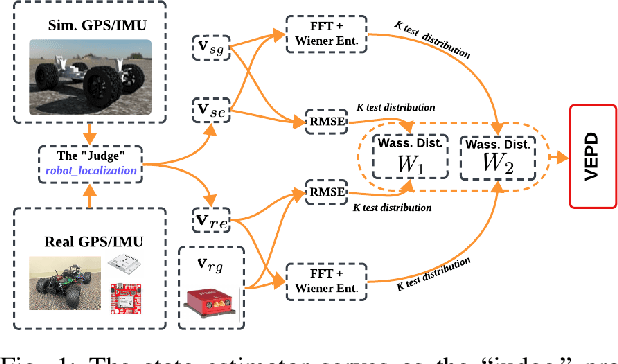

Abstract: Simulation can and should play a critical role in the development and testing of algorithms for autonomous agents. What might reduce its impact is the "sim2real" gap: the algorithm response differs between operation in simulated versus real-world environments. This paper introduces an approach to evaluate this gap, focusing on the accuracy of sensor simulation, specifically IMU and GPS, in velocity estimation tasks for autonomous agents. Using a scaled autonomous vehicle, we conduct 40 real-world experiments across diverse environments, then replicate the experiments in simulation with five distinct sensor noise models. We note that direct comparison of raw simulation and real sensor data fails to quantify the sim2real gap for robotics applications. We demonstrate that by using a state-of-the-art state-estimation package as a "judge", and by evaluating the performance of this state estimator in both real and simulated scenarios, we can isolate the sim2real discrepancies stemming from sensor simulations alone. The dataset generated is open-source and publicly available for unfettered use.

Zero-Shot Policy Transferability for the Control of a Scale Autonomous Vehicle

Sep 18, 2023

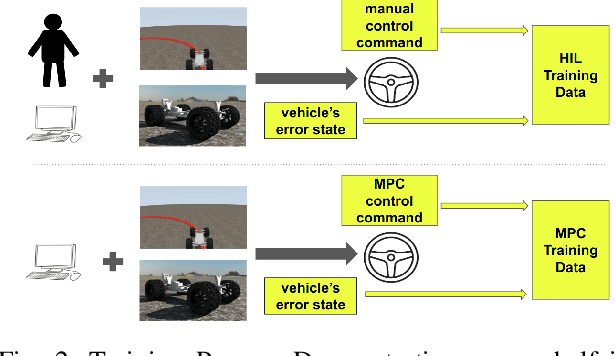

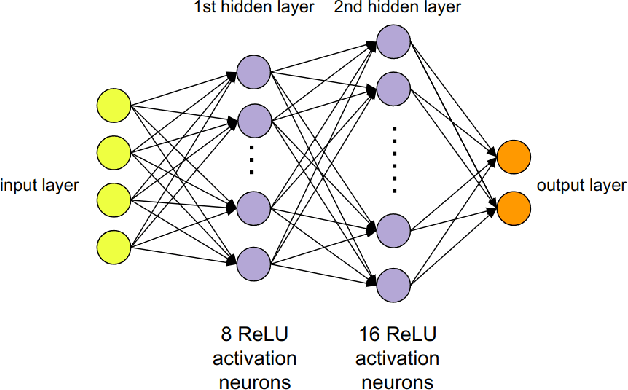

Abstract: We report on a study that employs an in-house developed simulation infrastructure to accomplish zero-shot policy transferability for a control policy associated with a scale autonomous vehicle. We focus on implementing policies that require no real-world data to be trained (Zero-Shot Transfer), and are developed in-house as opposed to being validated by previous works. We do this by implementing a Neural Network (NN) controller that is trained only on a family of circular reference trajectories. The sensors used are RTK-GPS and IMU, the latter for providing heading. The NN controller is trained using either a human driver (via human-in-the-loop simulation) or a Model Predictive Control (MPC) strategy. We demonstrate these two approaches in conjunction with two operation scenarios: the vehicle follows a waypoint-defined trajectory at constant speed; and the vehicle follows a speed profile that changes along the vehicle's waypoint-defined trajectory. The primary contribution of this work is the demonstration of Zero-Shot Transfer in conjunction with a novel feed-forward NN controller trained using a general-purpose, in-house developed simulation platform.

Using simulation to design an MPC policy for field navigation using GPS sensing

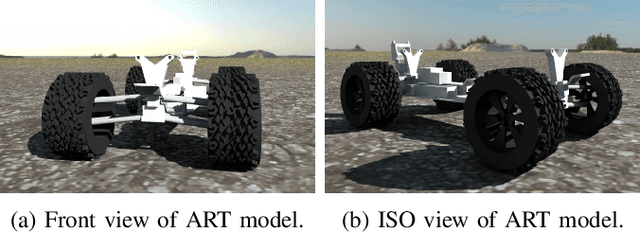

Apr 18, 2023

Abstract: Modeling a robust control system with a precise GPS-based state estimation capability in simulation can be useful in field navigation applications as it allows for testing and validation in a controlled environment. This testing process would enable navigation systems to be developed and optimized in simulation with direct transferability to real-world scenarios. The multi-physics simulation engine Chrono allows for the creation of scenarios that may be difficult or dangerous to replicate in the field, such as extreme weather or terrain conditions. Autonomy Research Testbed (ART), a specialized robotics algorithm testbed, is operated in conjunction with Chrono to develop an MPC control policy as well as an EKF state estimator. This platform enables users to easily integrate custom algorithms in the autonomy stack. This model is initially developed and used in simulation and then tested on a twin vehicle model in reality, to demonstrate the transferability between simulation and reality (also known as Sim2Real).

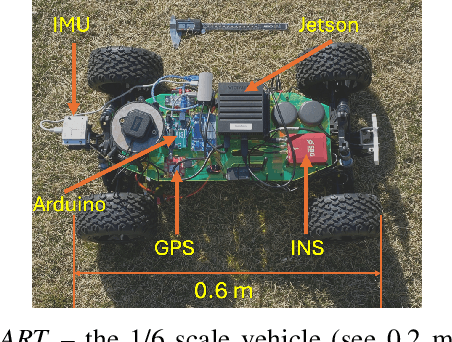

ART/ATK: A research platform for assessing and mitigating the sim-to-real gap in robotics and autonomous vehicle engineering

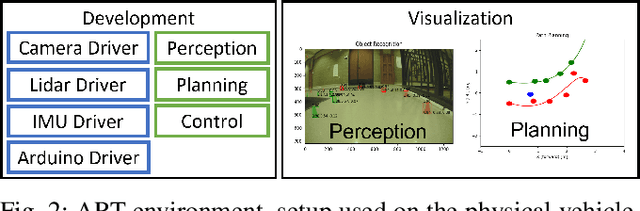

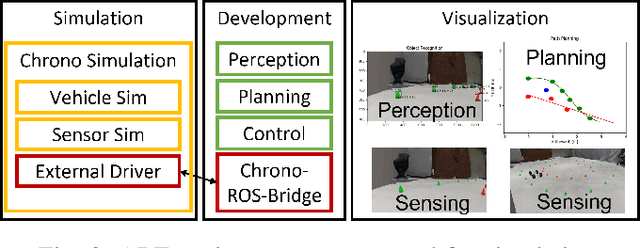

Nov 09, 2022

Abstract: We discuss a platform that has both software and hardware components, and whose purpose is to support research into characterizing and mitigating the sim-to-real gap in robotics and vehicle autonomy engineering. The software is operating-system independent and has three main components: a simulation engine called Chrono, which supports high-fidelity vehicle and sensor simulation; an autonomy stack for algorithm design and testing; and a development environment that supports visualization and hardware-in-the-loop experimentation. The accompanying hardware platform is a 1/6th scale vehicle augmented with reconfigurable mountings for computing, sensing, and tracking. Since this vehicle platform has a digital twin within the simulation environment, one can test the same autonomy perception, state estimation, or controls algorithms, as well as the processors they run on, in both simulation and reality. A demonstration is provided to show the utilization of this platform for autonomy research. Future work will concentrate on augmenting ART/ATK with support for a full-sized Chevy Bolt EUV, which will be made available to this group in the immediate future.

A software toolkit and hardware platform for investigating and comparing robot autonomy algorithms in simulation and reality

Jun 14, 2022

Abstract: We describe a software framework and a hardware platform used in tandem for the design and analysis of robot autonomy algorithms in simulation and reality. The software, which is open source, containerized, and operating system (OS) independent, has three main components: a ROS 2 interface to a C++ vehicle simulation framework (Chrono), which provides high-fidelity wheeled/tracked vehicle and sensor simulation; a basic ROS 2-based autonomy stack for algorithm design and testing; and a development ecosystem that enables visualization and hardware-in-the-loop experimentation in perception, state estimation, path planning, and controls. The accompanying hardware platform is a 1/6th scale vehicle augmented with reconfigurable mountings for computing, sensing, and tracking. Its purpose is to allow algorithms and sensor configurations to be physically tested and improved. Since this vehicle platform has a digital twin within the simulation environment, one can test and compare the same algorithms and autonomy stack in simulation and reality. This platform has been built with an eye towards characterizing and managing the simulation-to-reality gap. Herein, we describe how this platform is set up, deployed, and used to improve autonomy for mobility applications.
