Asher Elmquist

Camera simulation for robot simulation: how important are various camera model components?

Nov 16, 2022

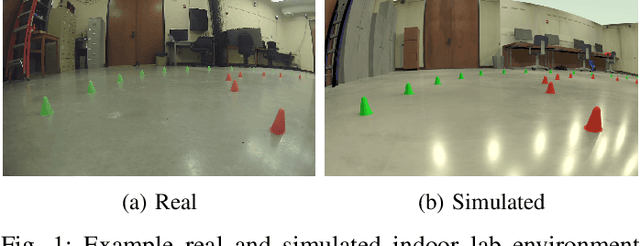

Abstract: Modeling cameras for the simulation of autonomous robotics is critical for generating synthetic images with appropriate realism to effectively evaluate a perception algorithm in simulation. In many cases though, simulated images are produced by traditional rendering techniques that exclude or superficially handle processing steps and aspects encountered in the actual camera pipeline. The purpose of this contribution is to quantify the degree to which excluding various image generation steps or aspects from the camera model affects the sim-to-real gap in robotics. We investigate what happens if one ignores aspects tied to processes from within the physical camera, e.g., lens distortion, noise, and signal processing; scene effects, e.g., lighting and reflection; and rendering quality. The results of the study demonstrate, quantitatively, that large-scale changes to color, scene, and location have far greater impact than model aspects concerned with local, feature-level artifacts. Moreover, we show that these scene-level aspects can stem from lens distortion and signal processing, particularly when considering white-balance and auto-exposure modeling.

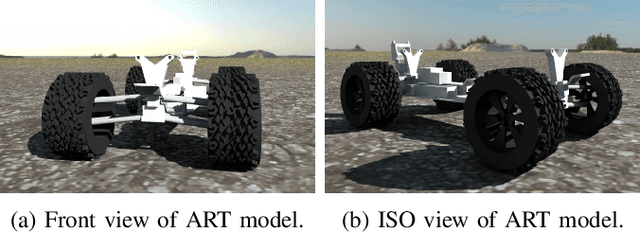

ART/ATK: A research platform for assessing and mitigating the sim-to-real gap in robotics and autonomous vehicle engineering

Nov 09, 2022

Abstract: We discuss a platform that has both software and hardware components, and whose purpose is to support research into characterizing and mitigating the sim-to-real gap in robotics and vehicle autonomy engineering. The software is operating-system independent and has three main components: a simulation engine called Chrono, which supports high-fidelity vehicle and sensor simulation; an autonomy stack for algorithm design and testing; and a development environment that supports visualization and hardware-in-the-loop experimentation. The accompanying hardware platform is a 1/6th scale vehicle augmented with reconfigurable mountings for computing, sensing, and tracking. Since this vehicle platform has a digital twin within the simulation environment, one can test the same autonomy perception, state estimation, or controls algorithms, as well as the processors they run on, in both simulation and reality. A demonstration is provided to show the utilization of this platform for autonomy research. Future work will concentrate on augmenting ART/ATK with support for a full-sized Chevy Bolt EUV, which will be made available to this group in the immediate future.

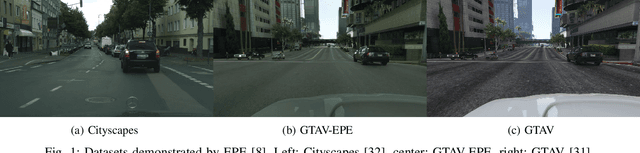

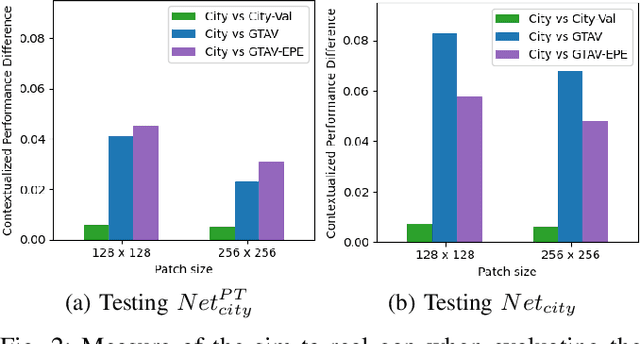

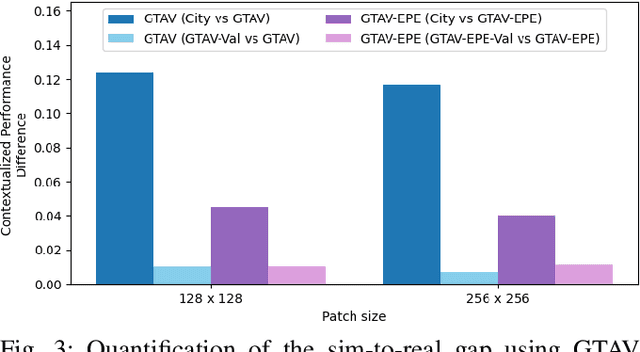

Evaluating a GAN for enhancing camera simulation for robotics

Sep 14, 2022

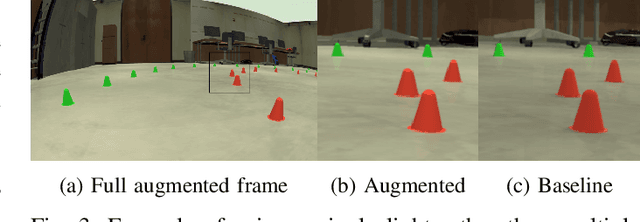

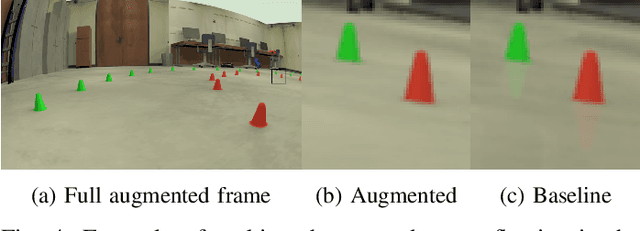

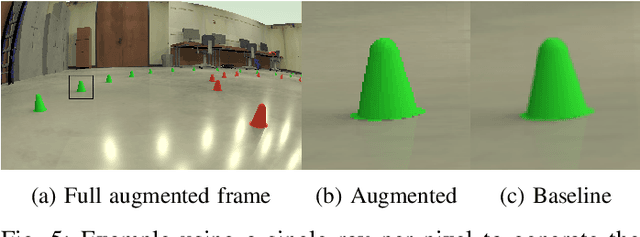

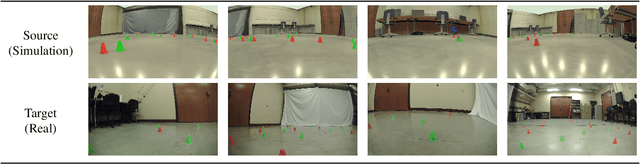

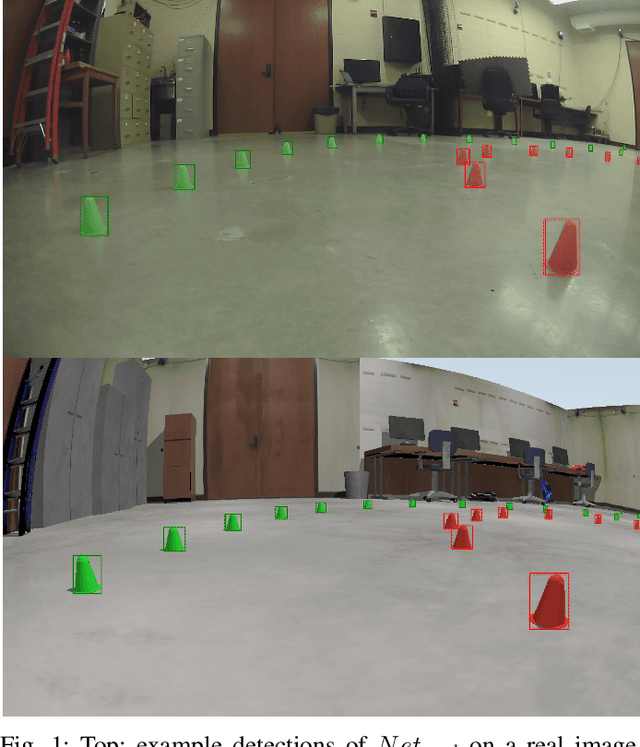

Abstract: Given the versatility of generative adversarial networks (GANs), we seek to understand the benefits gained from using an existing GAN to enhance simulated images and reduce the sim-to-real gap. We conduct an analysis in the context of simulating robot performance and image-based perception. Specifically, we quantify the GAN's ability to reduce the sim-to-real difference in image perception in robotics. Using semantic segmentation, we analyze the sim-to-real difference in training and testing, using nominal and enhanced simulation of a city environment. As a secondary application, we consider use of the GAN in enhancing an indoor environment. For this application, object detection is used to analyze the enhancement in training and testing. The results presented quantify the reduction in the sim-to-real gap when using the GAN, and illustrate the benefits of its use.

A performance contextualization approach to validating camera models for robot simulation

Aug 01, 2022

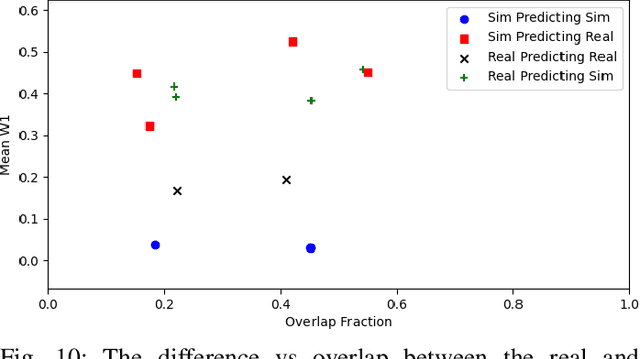

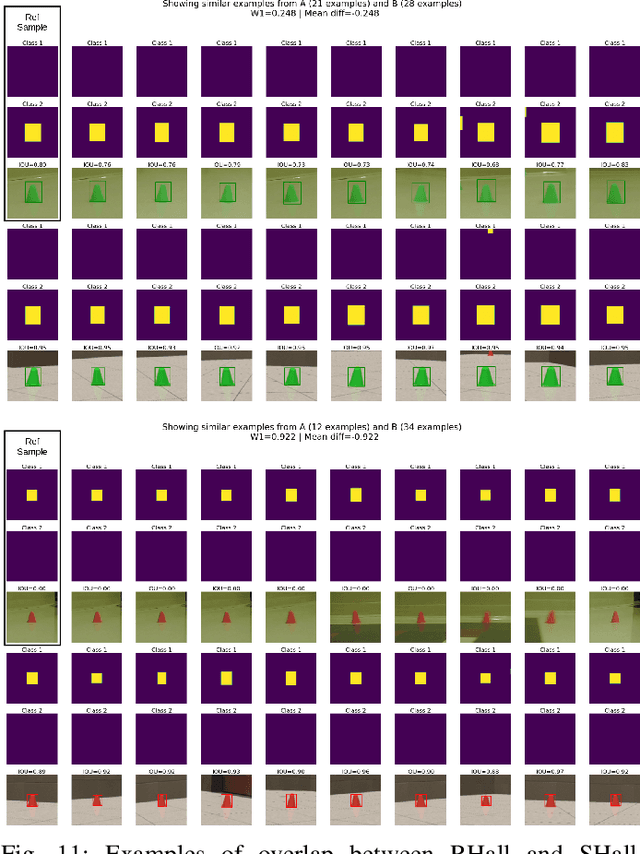

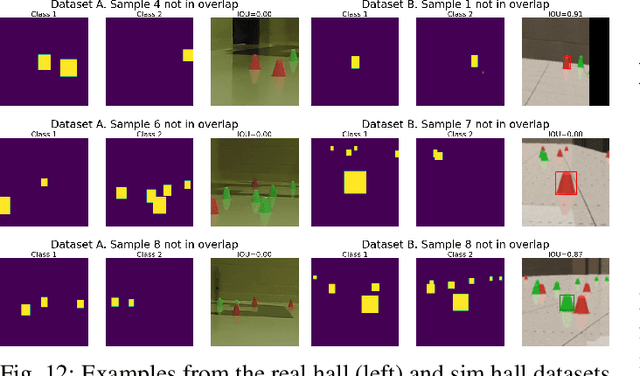

Abstract: The focus of this contribution is on camera simulation as it comes into play in simulating autonomous robots for their virtual prototyping. We propose a camera model validation methodology based on the performance of a perception algorithm and the context in which the performance is measured. This approach is different from traditional validation of synthetic images, which is often done at a pixel or feature level, and tends to require matching pairs of synthetic and real images. Due to the high cost and constraints of acquiring paired images, the proposed approach is based on datasets that are not necessarily paired. Within a real and a simulated dataset, A and B, respectively, we find subsets Ac and Bc of similar content and judge, statistically, the perception algorithm's response to these similar subsets. This validation approach obtains a statistical measure of performance similarity, as well as a measure of similarity between the content of A and B. The methodology is demonstrated using images generated with Chrono::Sensor and a scaled autonomous vehicle, using an object detector as the perception algorithm. The results demonstrate the ability to quantify (i) differences between simulated and real data; (ii) the propensity of training methods to mitigate the sim-to-real gap; and (iii) the context overlap between two datasets.
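The idea of comparing unpaired datasets through content-matched subsets can be illustrated with a small sketch. This is not the paper's implementation: the content descriptors, the distance threshold `radius`, the function names, and the choice of a permutation test are all assumptions made here for illustration. The sketch selects Ac and Bc as the items that have a neighbor in the other dataset within `radius`, reports the fraction of each dataset that was matched as a rough content-overlap measure, and compares the perception scores of the two subsets statistically.

```python
import math
import random
from statistics import mean


def similar_subsets(feats_a, feats_b, radius):
    """Indices of items in A and in B that have a neighbor in the other set.

    feats_a, feats_b: lists of equal-length numeric tuples (hypothetical
    per-image content descriptors). radius: assumed similarity threshold.
    """
    def has_neighbor(x, others):
        return any(math.dist(x, y) <= radius for y in others)

    idx_a = [i for i, x in enumerate(feats_a) if has_neighbor(x, feats_b)]
    idx_b = [j for j, y in enumerate(feats_b) if has_neighbor(y, feats_a)]
    return idx_a, idx_b


def permutation_p_value(scores_a, scores_b, trials=2000, seed=0):
    """Two-sided permutation test on the difference of mean scores.

    This test is an assumed stand-in for whatever statistical judgment
    the paper applies to the perception algorithm's response.
    """
    rng = random.Random(seed)
    observed = abs(mean(scores_a) - mean(scores_b))
    pooled = list(scores_a) + list(scores_b)
    n_a = len(scores_a)
    hits = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        if abs(mean(pooled[:n_a]) - mean(pooled[n_a:])) >= observed:
            hits += 1
    # Add-one smoothing keeps the estimate strictly positive.
    return (hits + 1) / (trials + 1)


if __name__ == "__main__":
    # Made-up descriptors and per-image detector scores, for illustration only.
    real_feats = [(0.1, 0.2), (0.5, 0.5), (5.0, 5.0)]
    sim_feats = [(0.12, 0.18), (0.52, 0.47), (9.0, 9.0)]
    real_scores = [0.91, 0.84, 0.40]
    sim_scores = [0.88, 0.80, 0.95]

    idx_a, idx_b = similar_subsets(real_feats, sim_feats, radius=0.1)
    overlap_a = len(idx_a) / len(real_feats)  # share of A with similar content in B
    overlap_b = len(idx_b) / len(sim_feats)   # share of B with similar content in A
    p = permutation_p_value([real_scores[i] for i in idx_a],
                            [sim_scores[j] for j in idx_b])
    print(idx_a, idx_b, overlap_a, overlap_b, p)
```

A large p-value on the matched subsets would suggest the perception algorithm responds similarly to real and simulated images of similar content, while the overlap fractions indicate how much of each dataset the comparison actually covers.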

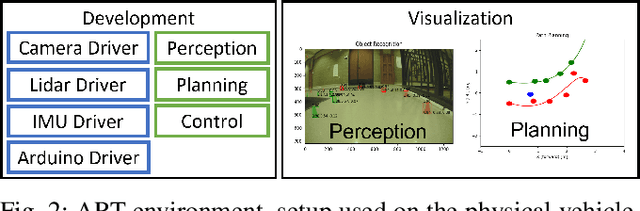

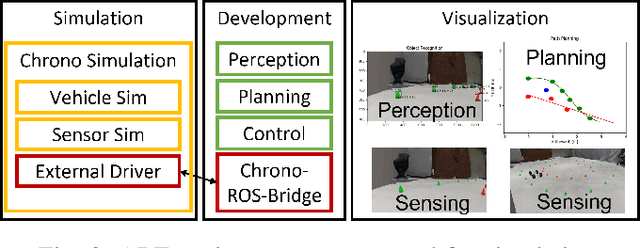

A software toolkit and hardware platform for investigating and comparing robot autonomy algorithms in simulation and reality

Jun 14, 2022

Abstract: We describe a software framework and a hardware platform used in tandem for the design and analysis of robot autonomy algorithms in simulation and reality. The software, which is open source, containerized, and operating system (OS) independent, has three main components: a ROS 2 interface to a C++ vehicle simulation framework (Chrono), which provides high-fidelity wheeled/tracked vehicle and sensor simulation; a basic ROS 2-based autonomy stack for algorithm design and testing; and a development ecosystem that enables visualization and hardware-in-the-loop experimentation in perception, state estimation, path planning, and controls. The accompanying hardware platform is a 1/6th scale vehicle augmented with reconfigurable mountings for computing, sensing, and tracking. Its purpose is to allow algorithms and sensor configurations to be physically tested and improved. Since this vehicle platform has a digital twin within the simulation environment, one can test and compare the same algorithms and autonomy stack in simulation and reality. This platform has been built with an eye towards characterizing and managing the simulation-to-reality gap. Herein, we describe how this platform is set up, deployed, and used to improve autonomy for mobility applications.