Camera simulation for robot simulation: how important are various camera model components?

Paper and Code

Nov 16, 2022

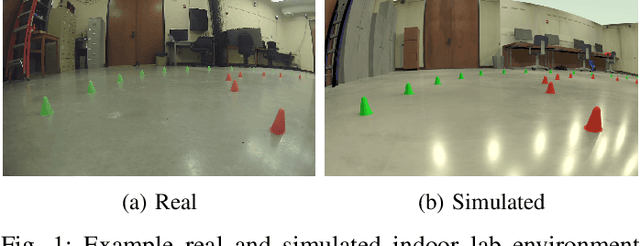

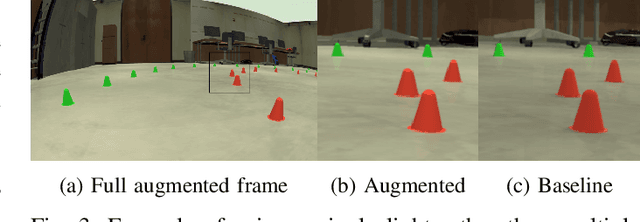

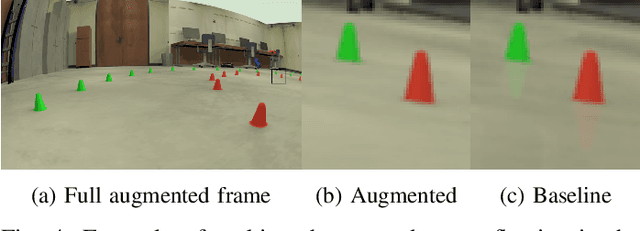

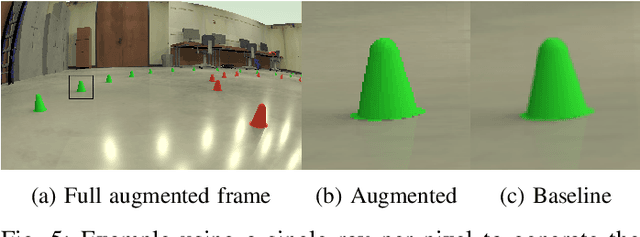

Modeling cameras for the simulation of autonomous robotics is critical for generating synthetic images with appropriate realism to effectively evaluate a perception algorithm in simulation. In many cases though, simulated images are produced by traditional rendering techniques that exclude or superficially handle processing steps and aspects encountered in the actual camera pipeline. The purpose of this contribution is to quantify the degree to which the exclusion from the camera model of various image generation steps or aspects affect the sim-to-real gap in robotics. We investigate what happens if one ignores aspects tied to processes from within the physical camera, e.g., lens distortion, noise, and signal processing; scene effects, e.g., lighting and reflection; and rendering quality. The results of the study demonstrate, quantitatively, that large-scale changes to color, scene, and location have far greater impact than model aspects concerned with local, feature-level artifacts. Moreover, we show that these scene-level aspects can stem from lens distortion and signal processing, particularly when considering white-balance and auto-exposure modeling.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge