Radu Serban

Co-Design of Rover Wheels and Control using Bayesian Optimization and Rover-Terrain Simulations

Feb 02, 2026

Abstract:While simulation is vital for optimizing robotic systems, the cost of modeling deformable terrain has long limited its use in full-vehicle studies of off-road autonomous mobility. For example, Discrete Element Method (DEM) simulations are often confined to single-wheel tests, which obscures coupled wheel-vehicle-controller interactions and prevents joint optimization of mechanical design and control. This paper presents a Bayesian optimization framework that co-designs rover wheel geometry and steering controller parameters using high-fidelity, full-vehicle closed-loop simulations on deformable terrain. Using the efficiency and scalability of a continuum-representation model (CRM) for terramechanics, we evaluate candidate designs on trajectories of varying complexity while towing a fixed load. The optimizer tunes wheel parameters (radius, width, and grouser features) and steering PID gains under a multi-objective formulation that balances traversal speed, tracking error, and energy consumption. We compare two strategies: simultaneous co-optimization of wheel and controller parameters versus a sequential approach that decouples mechanical and control design. We analyze trade-offs in performance and computational cost. Across 3,000 full-vehicle simulations, campaigns finish in five to nine days, versus months with the group's earlier DEM-based workflow. Finally, a preliminary hardware study suggests the simulation-optimized wheel designs preserve relative performance trends on the physical rover. Together, these results show that scalable, high-fidelity simulation can enable practical co-optimization of wheel design and control for off-road vehicles on deformable terrain without relying on prohibitively expensive DEM studies. The simulation infrastructure (scripts and models) is released as open source in a public repository to support reproducibility and further research.
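One common way to fold several objectives into a single score for an optimizer is a weighted sum of normalized metrics. The sketch below illustrates that idea only; the abstract does not state how the paper combines its objectives, so the `RunMetrics` fields, the reference values, the weights, and the use of traversal time as a stand-in for traversal speed are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunMetrics:
    """Hypothetical summary of one closed-loop simulation run."""
    traversal_time_s: float      # time to finish the trajectory (proxy for speed)
    rms_tracking_error_m: float  # RMS deviation from the reference path
    energy_j: float              # energy consumed over the run


# Assumed reference values used only to make the three terms dimensionless.
REFERENCE = RunMetrics(traversal_time_s=60.0,
                       rms_tracking_error_m=0.1,
                       energy_j=5000.0)


def scalarized_cost(m: RunMetrics,
                    w_time: float = 1.0,
                    w_error: float = 1.0,
                    w_energy: float = 1.0,
                    ref: RunMetrics = REFERENCE) -> float:
    """Weighted sum of normalized metrics; lower is better."""
    return (w_time * m.traversal_time_s / ref.traversal_time_s
            + w_error * m.rms_tracking_error_m / ref.rms_tracking_error_m
            + w_energy * m.energy_j / ref.energy_j)


# Two made-up candidate designs: B is faster but tracks worse and uses more energy.
candidate_a = RunMetrics(60.0, 0.10, 5000.0)
candidate_b = RunMetrics(54.0, 0.15, 5500.0)
best = min((candidate_a, candidate_b), key=scalarized_cost)
```

With equal weights the selection favors the design with the better overall balance; changing the weights shifts the trade-off, which is why the choice of weights is itself a design decision.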

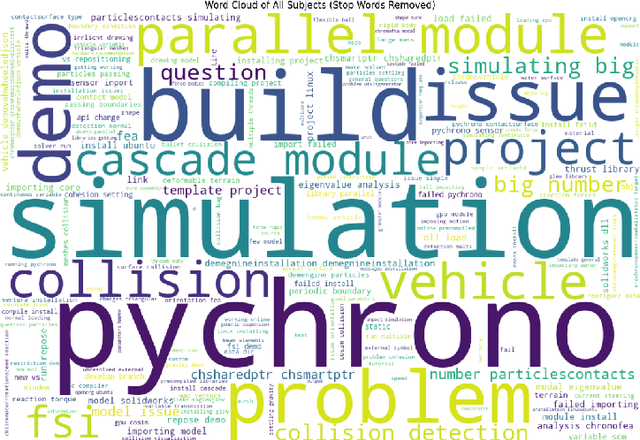

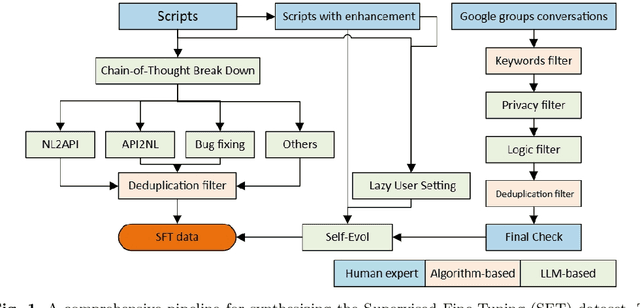

ChronoLLM: A Framework for Customizing Large Language Model for Digital Twins generalization based on PyChrono

Jan 07, 2025

Abstract:The integration of advanced simulation technologies with artificial intelligence (AI) is revolutionizing science and engineering research. ChronoLlama introduces a novel framework that customizes open-source LLMs for code generation and pairs them with PyChrono for multi-physics simulations. This integration aims to automate and improve the creation of simulation scripts, thus enhancing model accuracy and efficiency. This combination harnesses the speed of AI-driven code generation with the reliability of physics-based simulations, providing a powerful tool for researchers and engineers. Empirical results indicate substantial enhancements in simulation setup speed, accuracy of the generated code, and overall computational efficiency. ChronoLlama not only expedites the development and testing of multibody systems but also spearheads a scalable, AI-enhanced approach to managing intricate mechanical simulations. This pioneering integration of cutting-edge AI with traditional simulation platforms represents a significant leap forward in automating and optimizing design processes in engineering applications.

A physics-based sensor simulation environment for lunar ground operations

Oct 06, 2024

Abstract:This contribution reports on a software framework that uses physically-based rendering to simulate camera operation in lunar conditions. The focus is on generating synthetic images qualitatively similar to those produced by an actual camera operating on a vehicle traversing and/or actively interacting with lunar terrain, e.g., for construction operations. The highlights of this simulator are its ability to capture (i) light transport in lunar conditions and (ii) artifacts related to the vehicle-terrain interaction, which might include dust formation and transport. The simulation infrastructure is built within an in-house developed physics engine called Chrono, which simulates the dynamics of the deformable terrain-vehicle interaction, as well as the fallout of this interaction. The Chrono::Sensor camera model draws on ray tracing and Hapke Photometric Functions. We analyze the performance of the simulator using two virtual experiments featuring digital twins of NASA's VIPER rover navigating a lunar environment, and of NASA's RASSOR excavator engaged in a digging operation. The sensor simulation solution presented can be used for the design and testing of perception algorithms, or as a component of in-silico experiments that pertain to large lunar operations, e.g., traversability, construction tasks.

SimBench: A Rule-Based Multi-Turn Interaction Benchmark for Evaluating an LLM's Ability to Generate Digital Twins

Aug 21, 2024

Abstract:We introduce SimBench, a benchmark designed to evaluate the proficiency of student large language models (S-LLMs) in generating digital twins (DTs) that can be used in simulators for virtual testing. Given a collection of S-LLMs, this benchmark enables the ranking of the S-LLMs based on their ability to produce high-quality DTs. We demonstrate this by comparing over 20 open- and closed-source S-LLMs. Using multi-turn interactions, SimBench employs a rule-based judge LLM (J-LLM) that leverages both predefined rules and human-in-the-loop guidance to assign scores for the DTs generated by the S-LLM, thus providing a consistent and expert-inspired evaluation protocol. The J-LLM is specific to a simulator, and herein the proposed benchmarking approach is demonstrated in conjunction with the Chrono multi-physics simulator. Chrono provided the backdrop used to assess an S-LLM in relation to the latter's ability to create digital twins for multibody dynamics, finite element analysis, vehicle dynamics, robotic dynamics, and sensor simulations. The proposed benchmarking principle is broadly applicable and enables the assessment of an S-LLM's ability to generate digital twins for other simulation packages. All code and data are available at https://github.com/uwsbel/SimBench.

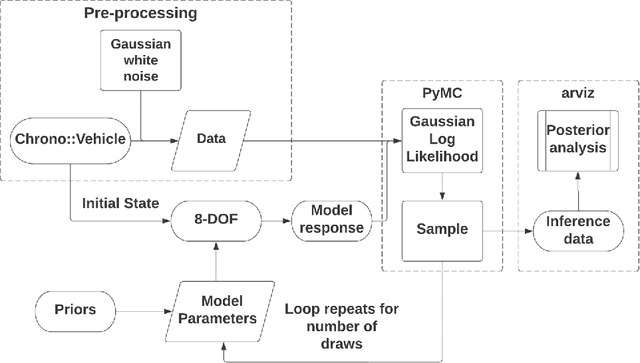

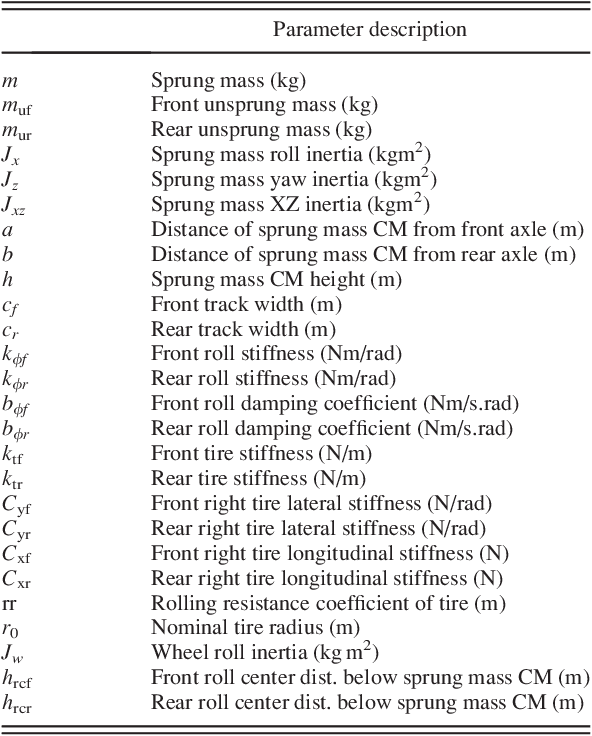

Using a Bayesian-Inference Approach to Calibrating Models for Simulation in Robotics

May 11, 2023

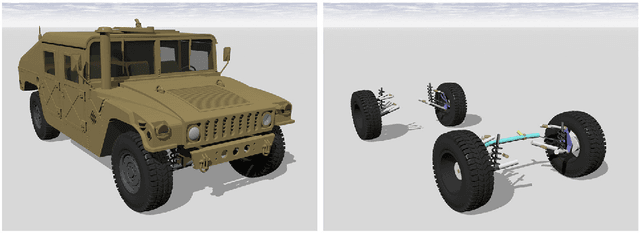

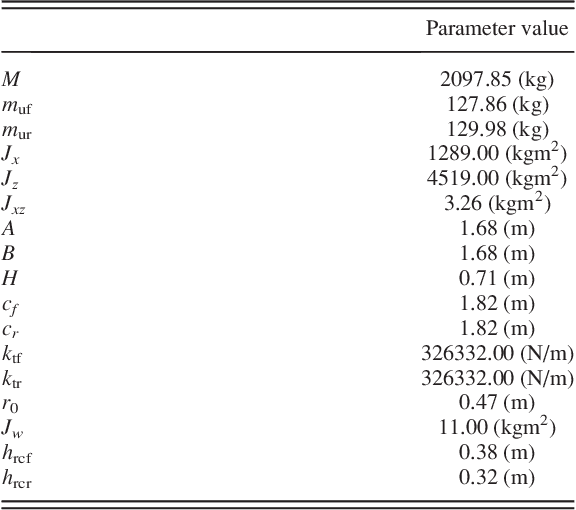

Abstract:In robotics, simulation has the potential to reduce design time and costs, and lead to a more robust engineered solution and a safer development process. However, the use of simulators is predicated on the availability of good models. This contribution is concerned with improving the quality of these models via calibration, which is cast herein in a Bayesian framework. First, we discuss the Bayesian machinery involved in model calibration. Then, we demonstrate it in one example: calibration of a vehicle dynamics model that has a low degree-of-freedom count and can be used for state estimation, model predictive control, or path planning. A high-fidelity simulator is used to emulate the "experiments" and generate the data for the calibration. The merit of this work is not tied to a new Bayesian methodology for calibration, but to the demonstration of how the Bayesian machinery can establish connections among models in computational dynamics, even when the data in use is noisy. The software used to generate the results reported herein is available in a public repository for unfettered use and distribution.

* 19 pages, 42 figures
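As a rough illustration of what Bayesian calibration from noisy data involves, the sketch below computes a posterior over a single parameter on a grid. It is not the paper's method or model: the linear model `y = theta * x`, the synthetic data, the noise level `sigma`, and the uniform prior over the grid are all assumptions chosen to keep the example small.

```python
import math


def log_likelihood(theta, xs, ys, sigma):
    """Gaussian log-likelihood (up to a constant) for the toy model y = theta * x."""
    return sum(-0.5 * ((y - theta * x) / sigma) ** 2 for x, y in zip(xs, ys))


def grid_posterior(xs, ys, sigma, thetas):
    """Posterior weights on a parameter grid, assuming a uniform prior over the grid."""
    log_post = [log_likelihood(t, xs, ys, sigma) for t in thetas]
    peak = max(log_post)                      # subtract the max for numerical stability
    weights = [math.exp(lp - peak) for lp in log_post]
    total = sum(weights)
    return [w / total for w in weights]


# Made-up noisy "experiment" data scattered around theta = 2.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]
sigma = 0.2                                   # assumed measurement noise

thetas = [1.5 + 0.01 * i for i in range(101)]  # grid from 1.5 to 2.5
posterior = grid_posterior(xs, ys, sigma, thetas)

posterior_mean = sum(t * p for t, p in zip(thetas, posterior))
posterior_std = math.sqrt(
    sum(p * (t - posterior_mean) ** 2 for t, p in zip(thetas, posterior))
)
```

The posterior mean gives the calibrated parameter and the posterior standard deviation quantifies how much the noisy data leave it uncertain. Grid evaluation only scales to a handful of parameters; larger models typically require sampling methods instead.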

Camera simulation for robot simulation: how important are various camera model components?

Nov 16, 2022

Abstract:Modeling cameras for the simulation of autonomous robotics is critical for generating synthetic images with appropriate realism to effectively evaluate a perception algorithm in simulation. In many cases though, simulated images are produced by traditional rendering techniques that exclude or superficially handle processing steps and aspects encountered in the actual camera pipeline. The purpose of this contribution is to quantify the degree to which the exclusion from the camera model of various image generation steps or aspects affects the sim-to-real gap in robotics. We investigate what happens if one ignores aspects tied to processes from within the physical camera, e.g., lens distortion, noise, and signal processing; scene effects, e.g., lighting and reflection; and rendering quality. The results of the study demonstrate, quantitatively, that large-scale changes to color, scene, and location have far greater impact than model aspects concerned with local, feature-level artifacts. Moreover, we show that these scene-level aspects can stem from lens distortion and signal processing, particularly when considering white-balance and auto-exposure modeling.

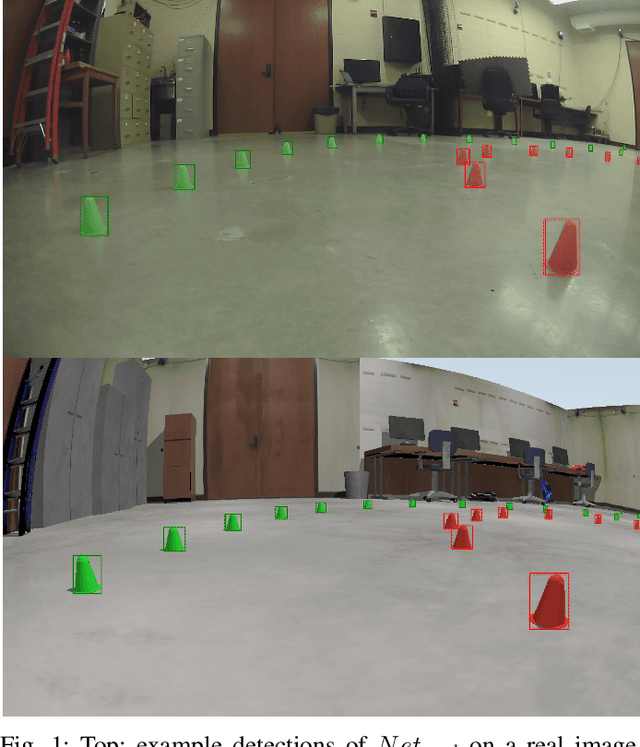

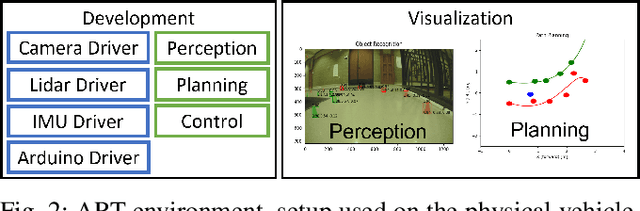

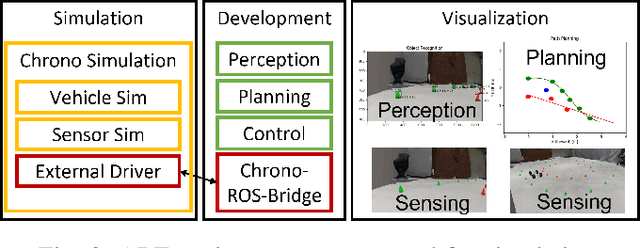

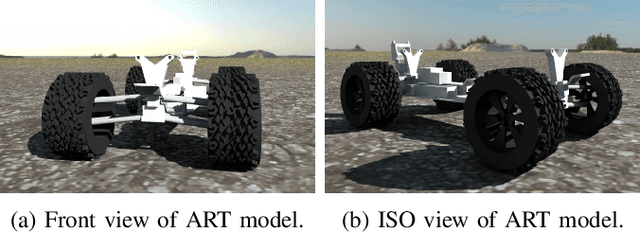

ART/ATK: A research platform for assessing and mitigating the sim-to-real gap in robotics and autonomous vehicle engineering

Nov 09, 2022

Abstract:We discuss a platform that has both software and hardware components, and whose purpose is to support research into characterizing and mitigating the sim-to-real gap in robotics and vehicle autonomy engineering. The software is operating-system independent and has three main components: a simulation engine called Chrono, which supports high-fidelity vehicle and sensor simulation; an autonomy stack for algorithm design and testing; and a development environment that supports visualization and hardware-in-the-loop experimentation. The accompanying hardware platform is a 1/6th scale vehicle augmented with reconfigurable mountings for computing, sensing, and tracking. Since this vehicle platform has a digital twin within the simulation environment, one can test the same autonomy perception, state estimation, or controls algorithms, as well as the processors they run on, in both simulation and reality. A demonstration is provided to show the utilization of this platform for autonomy research. Future work will concentrate on augmenting ART/ATK with support for a full-sized Chevy Bolt EUV, which will be made available to this group in the immediate future.

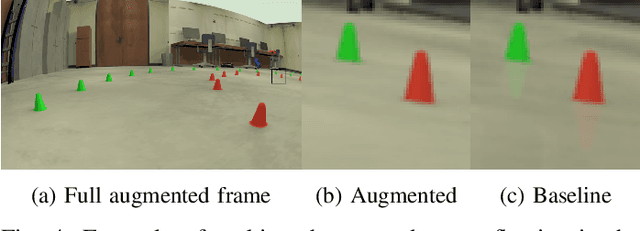

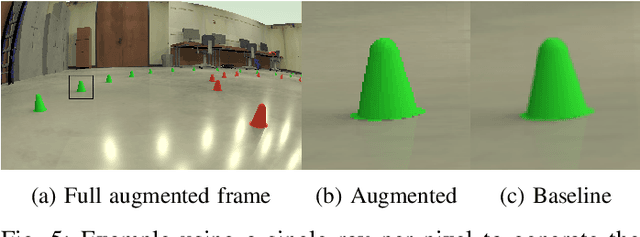

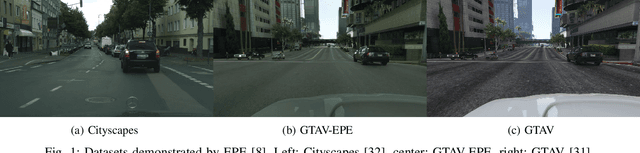

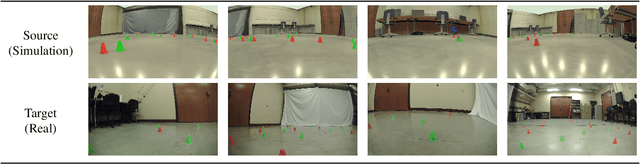

Evaluating a GAN for enhancing camera simulation for robotics

Sep 14, 2022

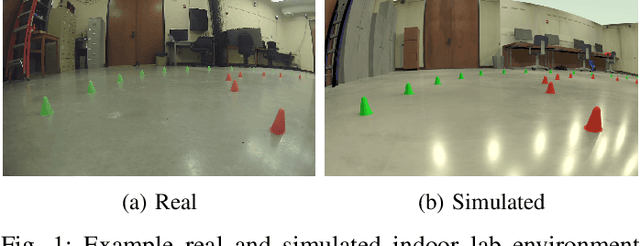

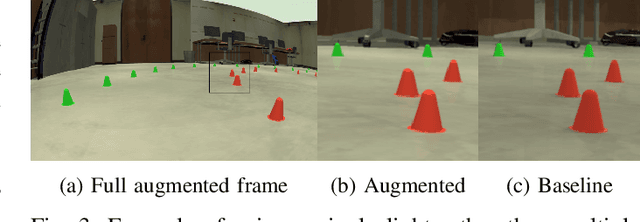

Abstract:Given the versatility of generative adversarial networks (GANs), we seek to understand the benefits gained from using an existing GAN to enhance simulated images and reduce the sim-to-real gap. We conduct an analysis in the context of simulating robot performance and image-based perception. Specifically, we quantify the GAN's ability to reduce the sim-to-real difference in image perception in robotics. Using semantic segmentation, we analyze the sim-to-real difference in training and testing, using nominal and enhanced simulation of a city environment. As a secondary application, we consider use of the GAN in enhancing an indoor environment. For this application, object detection is used to analyze the enhancement in training and testing. The results presented quantify the reduction in the sim-to-real gap when using the GAN, and illustrate the benefits of its use.

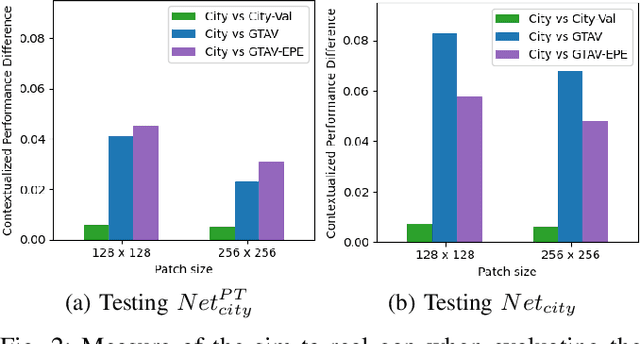

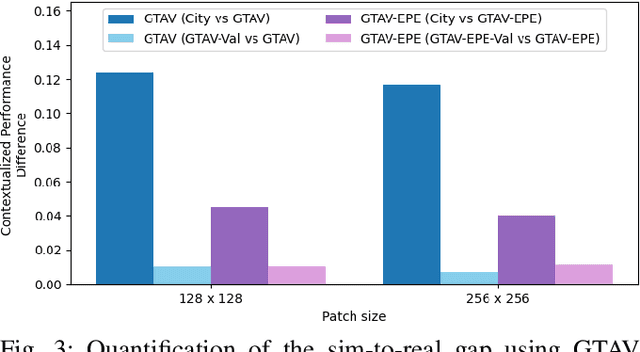

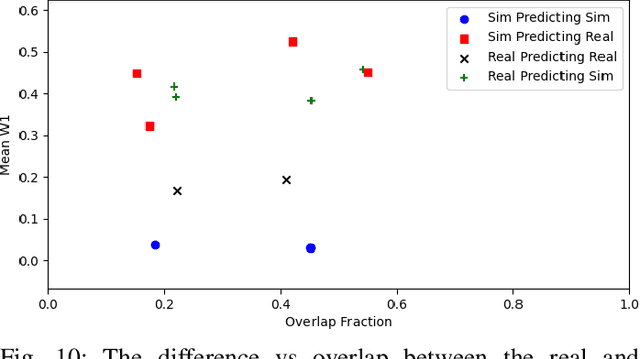

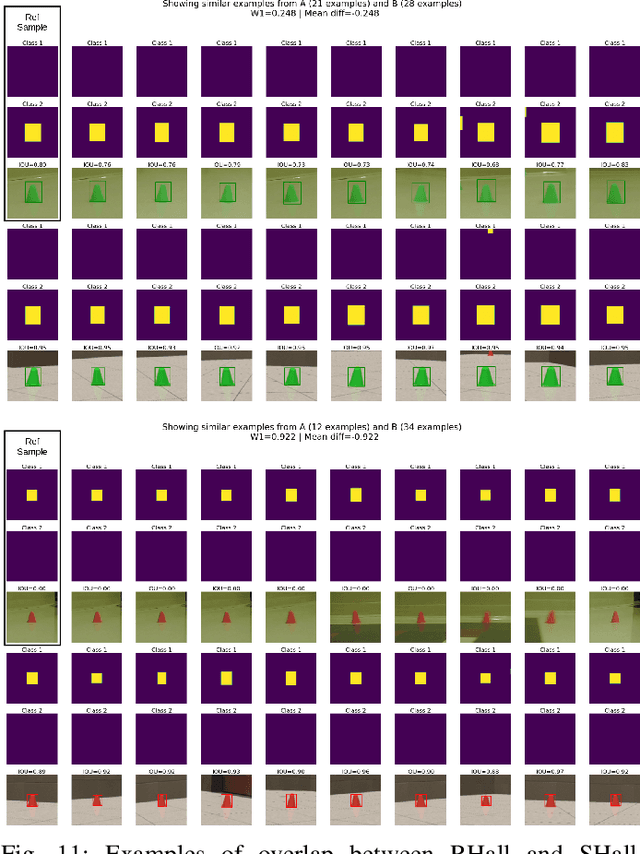

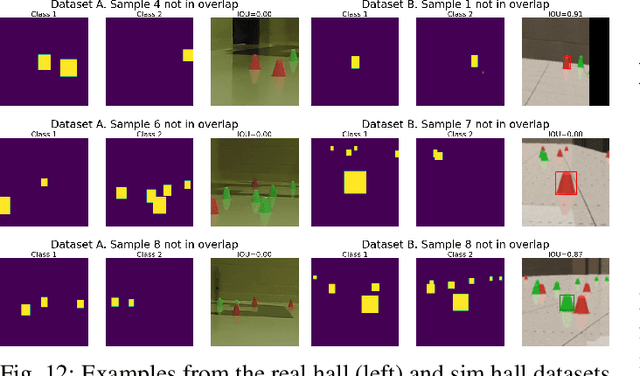

A performance contextualization approach to validating camera models for robot simulation

Aug 01, 2022

Abstract:The focus of this contribution is on camera simulation as it comes into play in simulating autonomous robots for their virtual prototyping. We propose a camera model validation methodology based on the performance of a perception algorithm and the context in which the performance is measured. This approach is different from traditional validation of synthetic images, which is often done at a pixel or feature level, and tends to require matching pairs of synthetic and real images. Due to the high cost and constraints of acquiring paired images, the proposed approach is based on datasets that are not necessarily paired. Within a real and a simulated dataset, A and B, respectively, we find subsets Ac and Bc of similar content and judge, statistically, the perception algorithm's response to these similar subsets. This validation approach obtains a statistical measure of performance similarity, as well as a measure of similarity between the content of A and B. The methodology is demonstrated using images generated with Chrono::Sensor and a scaled autonomous vehicle, using an object detector as the perception algorithm. The results demonstrate the ability to quantify (i) differences between simulated and real data; (ii) the propensity of training methods to mitigate the sim-to-real gap; and (iii) the context overlap between two datasets.

A software toolkit and hardware platform for investigating and comparing robot autonomy algorithms in simulation and reality

Jun 14, 2022

Abstract:We describe a software framework and a hardware platform used in tandem for the design and analysis of robot autonomy algorithms in simulation and reality. The software, which is open source, containerized, and operating system (OS) independent, has three main components: a ROS 2 interface to a C++ vehicle simulation framework (Chrono), which provides high-fidelity wheeled/tracked vehicle and sensor simulation; a basic ROS 2-based autonomy stack for algorithm design and testing; and a development ecosystem that enables visualization and hardware-in-the-loop experimentation in perception, state estimation, path planning, and controls. The accompanying hardware platform is a 1/6th scale vehicle augmented with reconfigurable mountings for computing, sensing, and tracking. Its purpose is to allow algorithms and sensor configurations to be physically tested and improved. Since this vehicle platform has a digital twin within the simulation environment, one can test and compare the same algorithms and autonomy stack in simulation and reality. This platform has been built with an eye towards characterizing and managing the simulation-to-reality gap. Herein, we describe how this platform is set up, deployed, and used to improve autonomy for mobility applications.
