Bo-Hsun Chen

DiffPhysCam: Differentiable Physics-Based Camera Simulation for Inverse Rendering and Embodied AI

Aug 12, 2025

Abstract: We introduce DiffPhysCam, a differentiable camera simulator designed to support robotics and embodied AI applications by enabling gradient-based optimization in visual perception pipelines. Generating synthetic images that closely mimic those from real cameras is essential for training visual models and enabling end-to-end visuomotor learning. Moreover, differentiable rendering allows inverse reconstruction of real-world scenes as digital twins, facilitating simulation-based robotics training. However, existing virtual cameras offer limited control over intrinsic settings, poorly capture optical artifacts, and lack tunable calibration parameters -- hindering sim-to-real transfer. DiffPhysCam addresses these limitations through a multi-stage pipeline that provides fine-grained control over camera settings, models key optical effects such as defocus blur, and supports calibration with real-world data. It enables both forward rendering for image synthesis and inverse rendering for 3D scene reconstruction, including mesh and material texture optimization. We show that DiffPhysCam enhances robotic perception performance in synthetic image tasks. As an illustrative example, we create a digital twin of a real-world scene using inverse rendering, simulate it in a multi-physics environment, and demonstrate navigation of an autonomous ground vehicle using images generated by DiffPhysCam.

Instance Performance Difference: A Metric to Measure the Sim-To-Real Gap in Camera Simulation

Nov 11, 2024

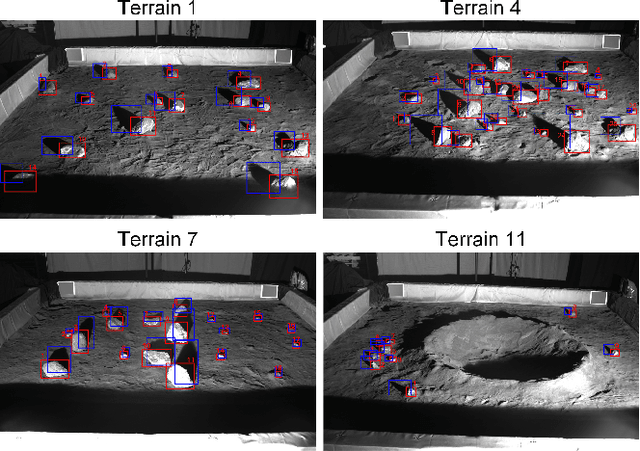

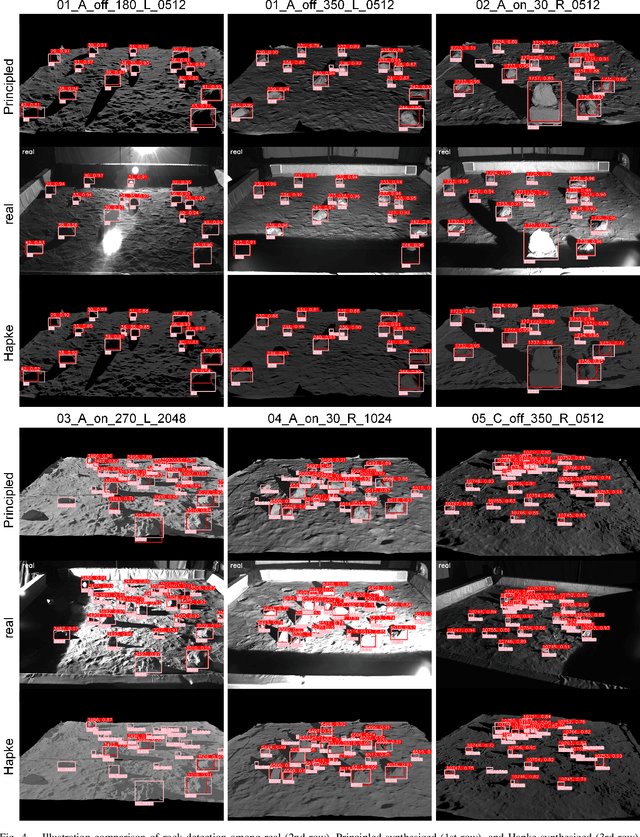

Abstract: In this contribution, we introduce the concept of Instance Performance Difference (IPD), a metric designed to measure the gap in performance that a robotics perception task experiences when working with real vs. synthetic pictures. By pairing synthetic and real instances in the pictures and evaluating their performance similarity using perception algorithms, IPD provides a targeted metric that closely aligns with the needs of real-world applications. We explain and demonstrate this metric through a rock detection task in lunar terrain images, highlighting the IPD's effectiveness in identifying the most realistic image synthesis method. The metric is thus instrumental in creating synthetic image datasets that perform in perception tasks like real-world photo counterparts. In turn, this supports robust sim-to-real transfer for perception algorithms in real-world robotics applications.

A physics-based sensor simulation environment for lunar ground operations

Oct 06, 2024

Abstract: This contribution reports on a software framework that uses physically-based rendering to simulate camera operation in lunar conditions. The focus is on generating synthetic images qualitatively similar to those produced by an actual camera operating on a vehicle traversing and/or actively interacting with lunar terrain, e.g., for construction operations. The highlights of this simulator are its ability to capture (i) light transport in lunar conditions and (ii) artifacts related to the vehicle-terrain interaction, which might include dust formation and transport. The simulation infrastructure is built within an in-house developed physics engine called Chrono, which simulates the dynamics of the deformable terrain-vehicle interaction, as well as fallout of this interaction. The Chrono::Sensor camera model draws on ray tracing and Hapke Photometric Functions. We analyze the performance of the simulator using two virtual experiments featuring digital twins of NASA's VIPER rover navigating a lunar environment, and of NASA's RASSOR excavator engaged in a digging operation. The sensor simulation solution presented can be used for the design and testing of perception algorithms, or as a component of in-silico experiments that pertain to large lunar operations, e.g., traversability, construction tasks.

POLAR3D: Augmenting NASA's POLAR Dataset for Data-Driven Lunar Perception and Rover Simulation

Sep 21, 2023

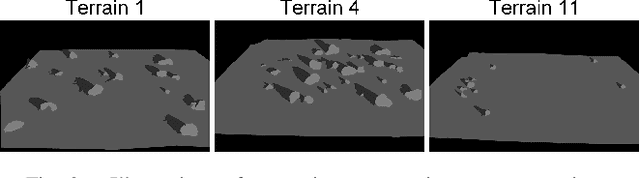

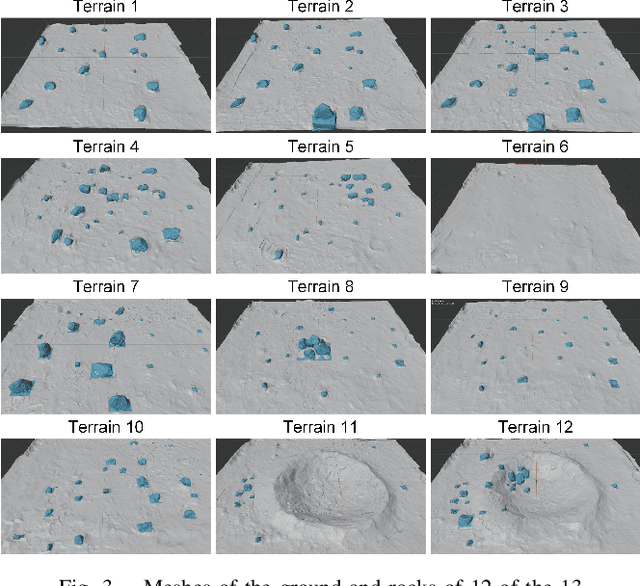

Abstract: We report on an effort that led to POLAR3D, a set of digital assets that enhance the POLAR dataset of stereo images generated by NASA to mimic lunar lighting conditions. Our contributions are twofold. First, we have annotated each photo in the POLAR dataset, providing approximately 23 000 labels for rocks and their shadows. Second, we digitized several lunar terrain scenarios available in the POLAR dataset. Specifically, by utilizing both the lunar photos and the POLAR's LiDAR point clouds, we constructed detailed obj files for all identifiable assets. POLAR3D is the set of digital assets comprising rock/shadow labels and obj files associated with the digital twins of lunar terrain scenarios. This new dataset can be used for training perception algorithms for lunar exploration and synthesizing photorealistic images beyond the original POLAR collection. Likewise, the obj assets can be integrated into simulation environments to facilitate realistic rover operations in a digital twin of a POLAR scenario. POLAR3D is publicly available at https://github.com/uwsbel/POLAR-digital to aid perception algorithm development, camera simulation efforts, and lunar simulation exercises.
