Zilin Si

PuffyBot: An Untethered Shape Morphing Robot for Multi-environment Locomotion

Nov 13, 2025

Abstract:Amphibians adapt their morphologies and motions to accommodate movement in both terrestrial and aquatic environments. Inspired by these biological features, we present PuffyBot, an untethered shape morphing robot capable of changing its body morphology to navigate multiple environments. Our robot design leverages a scissor-lift mechanism driven by a linear actuator as its primary structure to achieve shape morphing. The transformation enables a volume change from 255.00 cm³ to 423.75 cm³, modulating the buoyant force to counteract a downward force of 3.237 N due to the robot's 330 g mass. A bell-crank linkage is integrated with the scissor-lift mechanism, which adjusts the servo-actuated limbs by 90 degrees, allowing a seamless transition between crawling and swimming modes. The robot is fully waterproof, using thermoplastic polyurethane (TPU) fabric to ensure functionality in aquatic environments. The robot can operate untethered for two hours with an onboard battery of 1000 mAh. Our experimental results demonstrate multi-environment locomotion, including crawling on land, crawling on the underwater floor, swimming on the water surface, and bimodal buoyancy adjustment to submerge underwater or resurface. These findings show the potential of shape morphing to create versatile and energy efficient robotic platforms suitable for diverse environments.
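The buoyancy figures in the PuffyBot abstract can be checked with a short calculation. The sketch below is not taken from the paper: it assumes fresh water at 1000 kg/m^3, a fully submerged body, and g = 9.81 m/s^2 (the value that reproduces the stated 3.237 N weight). The function names are illustrative only.

```python
# Assumed constants (not stated in the abstract).
RHO_WATER = 1000.0  # kg/m^3, fresh water
G = 9.81            # m/s^2, reproduces the quoted 3.237 N for 330 g


def weight(mass_g: float) -> float:
    """Downward force in newtons for a mass given in grams."""
    return mass_g * 1e-3 * G


def buoyant_force(volume_cm3: float) -> float:
    """Buoyant force in newtons on a fully submerged body of this volume."""
    return RHO_WATER * G * volume_cm3 * 1e-6


def neutral_volume_cm3(mass_g: float) -> float:
    """Volume at which buoyancy exactly balances weight."""
    return mass_g * 1e-3 / RHO_WATER * 1e6


MASS_G = 330.0        # from the abstract
V_COMPACT = 255.00    # cm^3, from the abstract
V_EXPANDED = 423.75   # cm^3, from the abstract

w = weight(MASS_G)                      # about 3.237 N
f_compact = buoyant_force(V_COMPACT)    # about 2.50 N, less than the weight
f_expanded = buoyant_force(V_EXPANDED)  # about 4.16 N, more than the weight

print(f"weight: {w:.3f} N")
print(f"compact buoyancy: {f_compact:.3f} N -> net {f_compact - w:+.3f} N")
print(f"expanded buoyancy: {f_expanded:.3f} N -> net {f_expanded - w:+.3f} N")
print(f"neutral volume: {neutral_volume_cm3(MASS_G):.1f} cm^3")
```

Under these assumptions the neutral-buoyancy volume (330 cm^3) falls between the two stated volumes, which is consistent with the robot sinking in its compact state and resurfacing when expanded.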

ExoStart: Efficient learning for dexterous manipulation with sensorized exoskeleton demonstrations

Jun 13, 2025

Abstract:Recent advancements in teleoperation systems have enabled high-quality data collection for robotic manipulators, showing impressive results in learning manipulation at scale. This progress suggests that extending these capabilities to robotic hands could unlock an even broader range of manipulation skills, especially if we could achieve the same level of dexterity that human hands exhibit. However, teleoperating robotic hands is far from a solved problem, as it presents a significant challenge due to the high degrees of freedom of robotic hands and the complex dynamics occurring during contact-rich settings. In this work, we present ExoStart, a general and scalable learning framework that leverages human dexterity to improve robotic hand control. In particular, we obtain high-quality data by collecting direct demonstrations without a robot in the loop using a sensorized low-cost wearable exoskeleton, capturing the rich behaviors that humans can demonstrate with their own hands. We also propose a simulation-based dynamics filter that generates dynamically feasible trajectories from the collected demonstrations and use the generated trajectories to bootstrap an auto-curriculum reinforcement learning method that relies only on simple sparse rewards. The ExoStart pipeline is generalizable and yields robust policies that transfer zero-shot to the real robot. Our results demonstrate that ExoStart can generate dexterous real-world hand skills, achieving a success rate above 50% on a wide range of complex tasks such as opening an AirPods case or inserting and turning a key in a lock. More details and videos can be found in https://sites.google.com/view/exostart.

Tilde: Teleoperation for Dexterous In-Hand Manipulation Learning with a DeltaHand

May 29, 2024

Abstract:Dexterous robotic manipulation remains a challenging domain due to its strict demands for precision and robustness on both hardware and software. While dexterous robotic hands have demonstrated remarkable capabilities in complex tasks, efficiently learning adaptive control policies for hands still presents a significant hurdle given the high dimensionalities of hands and tasks. To bridge this gap, we propose Tilde, an imitation learning-based in-hand manipulation system on a dexterous DeltaHand. It leverages 1) a low-cost, configurable, simple-to-control, soft dexterous robotic hand, DeltaHand, 2) a user-friendly, precise, real-time teleoperation interface, TeleHand, and 3) an efficient and generalizable imitation learning approach with diffusion policies. Our proposed TeleHand has a kinematic twin design to the DeltaHand that enables precise one-to-one joint control of the DeltaHand during teleoperation. This facilitates efficient high-quality data collection of human demonstrations in the real world. To evaluate the effectiveness of our system, we demonstrate the fully autonomous closed-loop deployment of diffusion policies learned from demonstrations across seven dexterous manipulation tasks with an average 90% success rate.

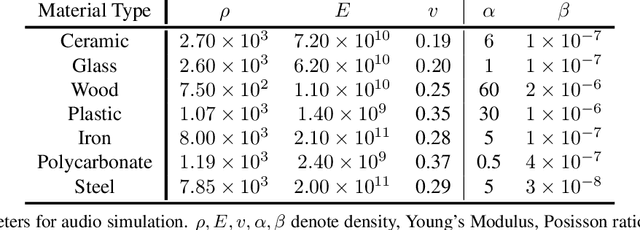

DIFFTACTILE: A Physics-based Differentiable Tactile Simulator for Contact-rich Robotic Manipulation

Mar 13, 2024

Abstract:We introduce DIFFTACTILE, a physics-based differentiable tactile simulation system designed to enhance robotic manipulation with dense and physically accurate tactile feedback. In contrast to prior tactile simulators which primarily focus on manipulating rigid bodies and often rely on simplified approximations to model stress and deformations of materials in contact, DIFFTACTILE emphasizes physics-based contact modeling with high fidelity, supporting simulations of diverse contact modes and interactions with objects possessing a wide range of material properties. Our system incorporates several key components, including a Finite Element Method (FEM)-based soft body model for simulating the sensing elastomer, a multi-material simulator for modeling diverse object types (such as elastic, elastoplastic, cables) under manipulation, and a penalty-based contact model for handling contact dynamics. The differentiable nature of our system facilitates gradient-based optimization for both 1) refining physical properties in simulation using real-world data, hence narrowing the sim-to-real gap and 2) efficient learning of tactile-assisted grasping and contact-rich manipulation skills. Additionally, we introduce a method to infer the optical response of our tactile sensor to contact using an efficient pixel-based neural module. We anticipate that DIFFTACTILE will serve as a useful platform for studying contact-rich manipulations, leveraging the benefits of dense tactile feedback and differentiable physics. Code and supplementary materials are available at the project website https://difftactile.github.io/.

DELTAHANDS: A Synergistic Dexterous Hand Framework Based on Delta Robots

Oct 08, 2023

Abstract:Dexterous robotic manipulation in unstructured environments can aid in everyday tasks such as cleaning and caretaking. Anthropomorphic robotic hands are highly dexterous and theoretically well-suited for working in human domains, but their complex designs and dynamics often make them difficult to control. By contrast, parallel-jaw grippers are easy to control and are used extensively in industrial applications, but they lack the dexterity for various kinds of grasps and in-hand manipulations. In this work, we present DELTAHANDS, a synergistic dexterous hand framework with Delta robots. The DELTAHANDS are soft, easy to reconfigure, simple to manufacture with low-cost off-the-shelf materials, and possess high degrees of freedom that can be easily controlled. DELTAHANDS' dexterity can be adjusted for different applications by leveraging actuation synergies, which can further reduce the control complexity, overall cost, and energy consumption. We characterize the Delta robots' kinematics accuracy, force profiles, and workspace range to assist with hand design. Finally, we evaluate the versatility of DELTAHANDS by grasping a diverse set of objects and by using teleoperation to complete three dexterous manipulation tasks: cloth folding, cap opening, and cable arrangement.

RobotSweater: Scalable, Generalizable, and Customizable Machine-Knitted Tactile Skins for Robots

Mar 06, 2023

Abstract:Tactile sensing is essential for robots to perceive and react to the environment. However, it remains a challenge to make large-scale and flexible tactile skins on robots. Industrial machine knitting provides solutions to manufacture customizable fabrics. Along with functional yarns, it can produce highly customizable circuits that can be made into tactile skins for robots. In this work, we present RobotSweater, a machine-knitted pressure-sensitive tactile skin that can be easily applied on robots. We design and fabricate a parameterized multi-layer tactile skin using off-the-shelf yarns, and characterize our sensor on both a flat testbed and a curved surface to show its robust contact detection, multi-contact localization, and pressure sensing capabilities. The sensor is fabricated using a well-established textile manufacturing process with a programmable industrial knitting machine, which makes it highly customizable and low-cost. The textile nature of the sensor also makes it easily fit curved surfaces of different robots and have a friendly appearance. Using our tactile skins, we conduct closed-loop control with tactile feedback for two applications: (1) human lead-through control of a robot arm, and (2) human-robot interaction with a mobile robot.

MidasTouch: Monte-Carlo inference over distributions across sliding touch

Oct 25, 2022

Abstract:We present MidasTouch, a tactile perception system for online global localization of a vision-based touch sensor sliding on an object surface. This framework takes in posed tactile images over time, and outputs an evolving distribution of sensor pose on the object's surface, without the need for visual priors. Our key insight is to estimate local surface geometry with tactile sensing, learn a compact representation for it, and disambiguate these signals over a long time horizon. The backbone of MidasTouch is a Monte-Carlo particle filter, with a measurement model based on a tactile code network learned from tactile simulation. This network, inspired by LIDAR place recognition, compactly summarizes local surface geometries. These generated codes are efficiently compared against a precomputed tactile codebook per-object, to update the pose distribution. We further release the YCB-Slide dataset of real-world and simulated forceful sliding interactions between a vision-based tactile sensor and standard YCB objects. While single-touch localization can be inherently ambiguous, we can quickly localize our sensor by traversing salient surface geometries. Project page: https://suddhu.github.io/midastouch-tactile/
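The measurement step described in the MidasTouch abstract (comparing a tactile code against a precomputed per-object codebook to update a pose distribution) can be illustrated with a toy particle filter update. This is a minimal sketch, not the authors' implementation: particles here are plain codebook indices standing in for sensor poses, the codes are hand-written 2-D vectors rather than network outputs, and the exponential-of-cosine-similarity likelihood with a `temperature` parameter is an assumption made for illustration.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def measurement_update(particles, weights, codebook, measured_code, temperature=0.1):
    """Reweight particles by how well their codebook entry matches the measurement.

    particles: list of codebook indices (hypothetical stand-ins for poses).
    The likelihood exp(similarity / temperature) is an assumed model.
    """
    unnormalized = [
        w * math.exp(cosine_similarity(codebook[p], measured_code) / temperature)
        for p, w in zip(particles, weights)
    ]
    total = sum(unnormalized)
    return [w / total for w in unnormalized]


def resample(particles, weights, rng):
    """Multinomial resampling: draw particles in proportion to their weights."""
    return rng.choices(particles, weights=weights, k=len(particles))


# Toy codebook: one code per candidate pose on the object surface.
codebook = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
particles = [0, 1, 2]
weights = [1.0 / 3.0] * 3

measured = [0.0, 1.0]  # code extracted from the current (toy) tactile reading
posterior = measurement_update(particles, weights, codebook, measured)
resampled = resample(particles, posterior, random.Random(0))

print(posterior)
print(resampled)
```

After the update, most of the probability mass sits on the particle whose codebook entry matches the measured code. Repeating the update as the sensor slides is what allows ambiguous single touches to be disambiguated over time.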

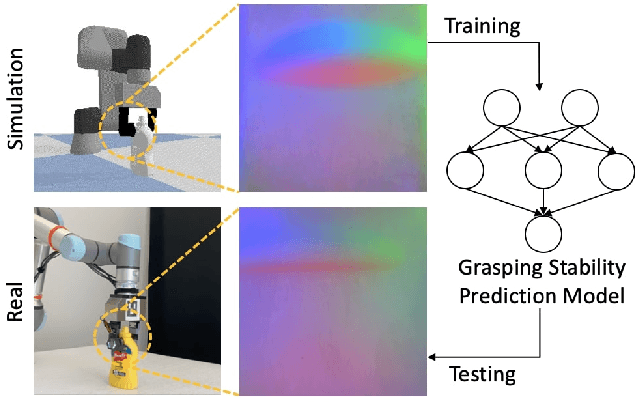

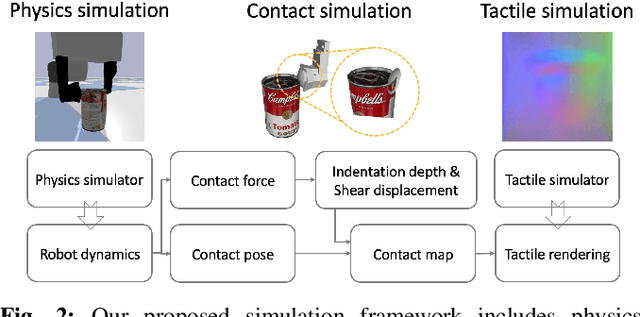

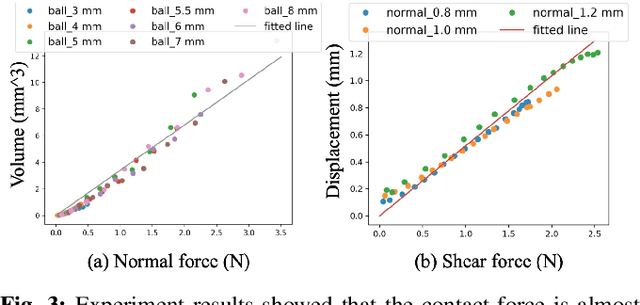

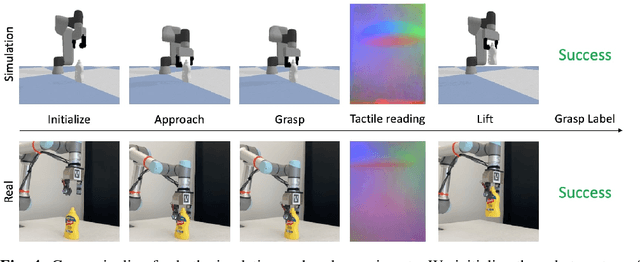

Grasp Stability Prediction with Sim-to-Real Transfer from Tactile Sensing

Aug 04, 2022

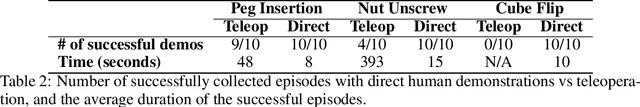

Abstract:Robot simulation has been an essential tool for data-driven manipulation tasks. However, most existing simulation frameworks lack either efficient and accurate models of physical interactions with tactile sensors or realistic tactile simulation. This makes the sim-to-real transfer for tactile-based manipulation tasks still challenging. In this work, we integrate simulation of robot dynamics and vision-based tactile sensors by modeling the physics of contact. This contact model uses simulated contact forces at the robot's end-effector to inform the generation of realistic tactile outputs. To eliminate the sim-to-real transfer gap, we calibrate our physics simulator of robot dynamics, contact model, and tactile optical simulator with real-world data, and then we demonstrate the effectiveness of our system on a zero-shot sim-to-real grasp stability prediction task where we achieve an average accuracy of 90.7% on various objects. Experiments reveal the potential of applying our simulation framework to more complicated manipulation tasks. We open-source our simulation framework at https://github.com/CMURoboTouch/Taxim/tree/taxim-robot.

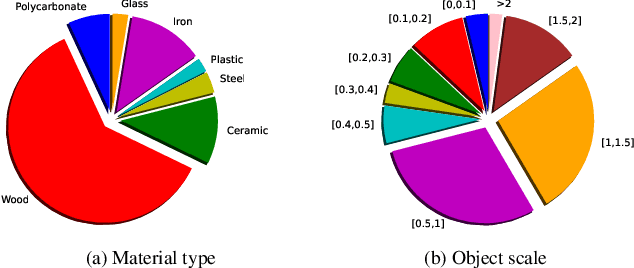

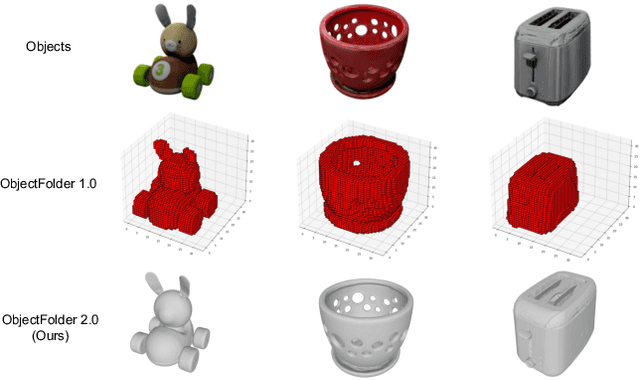

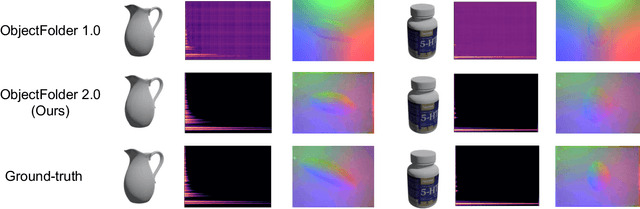

ObjectFolder 2.0: A Multisensory Object Dataset for Sim2Real Transfer

Apr 05, 2022

Abstract:Objects play a crucial role in our everyday activities. Though multisensory object-centric learning has shown great potential lately, the modeling of objects in prior work is rather unrealistic. ObjectFolder 1.0 is a recent dataset that introduces 100 virtualized objects with visual, acoustic, and tactile sensory data. However, the dataset is small in scale and the multisensory data is of limited quality, hampering generalization to real-world scenarios. We present ObjectFolder 2.0, a large-scale, multisensory dataset of common household objects in the form of implicit neural representations that significantly enhances ObjectFolder 1.0 in three aspects. First, our dataset is 10 times larger in the amount of objects and orders of magnitude faster in rendering time. Second, we significantly improve the multisensory rendering quality for all three modalities. Third, we show that models learned from virtual objects in our dataset successfully transfer to their real-world counterparts in three challenging tasks: object scale estimation, contact localization, and shape reconstruction. ObjectFolder 2.0 offers a new path and testbed for multisensory learning in computer vision and robotics. The dataset is available at https://github.com/rhgao/ObjectFolder.

Efficient shape mapping through dense touch and vision

Sep 20, 2021

Abstract:Knowledge of 3-D object shape is of great importance to robot manipulation tasks, but may not be readily available in unstructured environments. While vision is often occluded during robot-object interaction, high-resolution tactile sensors can give a dense local perspective of the object. However, tactile sensors have limited sensing area and the shape representation must faithfully approximate non-contact areas. In addition, a key challenge is efficiently incorporating these dense tactile measurements into a 3-D mapping framework. In this work, we propose an incremental shape mapping method using a GelSight tactile sensor and a depth camera. Local shape is recovered from tactile images via a learned model trained in simulation. Through efficient inference on a spatial factor graph informed by a Gaussian process, we build an implicit surface representation of the object. We demonstrate visuo-tactile mapping in both simulated and real-world experiments, to incrementally build 3-D reconstructions of household objects.
