Yufan Zhang

Kling-Omni Technical Report

Dec 18, 2025

Abstract:We present Kling-Omni, a generalist generative framework designed to synthesize high-fidelity videos directly from multimodal visual language inputs. Adopting an end-to-end perspective, Kling-Omni bridges the functional separation among diverse video generation, editing, and intelligent reasoning tasks, integrating them into a holistic system. Unlike disjointed pipeline approaches, Kling-Omni supports a diverse range of user inputs, including text instructions, reference images, and video contexts, processing them into a unified multimodal representation to deliver cinematic-quality and highly-intelligent video content creation. To support these capabilities, we constructed a comprehensive data system that serves as the foundation for multimodal video creation. The framework is further empowered by efficient large-scale pre-training strategies and infrastructure optimizations for inference. Comprehensive evaluations reveal that Kling-Omni demonstrates exceptional capabilities in in-context generation, reasoning-based editing, and multimodal instruction following. Moving beyond a content creation tool, we believe Kling-Omni is a pivotal advancement toward multimodal world simulators capable of perceiving, reasoning, generating and interacting with the dynamic and complex worlds.

AI Literacy Assessment Revisited: A Task-Oriented Approach Aligned with Real-world Occupations

Nov 07, 2025

Abstract:As artificial intelligence (AI) systems become ubiquitous in professional contexts, there is an urgent need to equip workers, often with backgrounds outside of STEM, with the skills to use these tools effectively as well as responsibly, that is, to be AI literate. However, prevailing definitions and therefore assessments of AI literacy often emphasize foundational technical knowledge, such as programming, mathematics, and statistics, over practical knowledge such as interpreting model outputs, selecting tools, or identifying ethical concerns. This leaves a noticeable gap in assessing someone's AI literacy for real-world job use. We propose a work-task-oriented assessment model for AI literacy which is grounded in the competencies required for effective use of AI tools in professional settings. We describe the development of a novel AI literacy assessment instrument, and accompanying formative assessments, in the context of a US Navy robotics training program. The program included training in robotics and AI literacy, as well as a competition with practical tasks and a multiple choice scenario task meant to simulate use of AI in a job setting. We found that, as a measure of applied AI literacy, the competition's scenario task outperformed the tests we adopted from past research or developed ourselves. We argue that when training people for AI-related work, educators should consider evaluating them with instruments that emphasize highly contextualized practical skills rather than abstract technical knowledge, especially when preparing workers without technical backgrounds for AI-integrated roles.

Utilizing Sequential Information of General Lab-test Results and Diagnoses History for Differential Diagnosis of Dementia

Feb 21, 2025

Abstract:Early diagnosis of Alzheimer's Disease (AD) faces multiple data-related challenges, including high variability in patient data, limited access to specialized diagnostic tests, and overreliance on single-type indicators. These challenges are exacerbated by the progressive nature of AD, where subtle pathophysiological changes often precede clinical symptoms by decades. To address these limitations, this study proposes a novel approach that takes advantage of routinely collected general laboratory test histories for the early detection and differential diagnosis of AD. By modeling lab test sequences as "sentences", we apply word embedding techniques to capture latent relationships between tests and employ deep time series models, including long short-term memory (LSTM) and Transformer networks, to model temporal patterns in patient records. Experimental results demonstrate that our approach improves diagnostic accuracy and enables scalable and cost-effective AD screening in diverse clinical settings.

Benchmarking the Robustness of Optical Flow Estimation to Corruptions

Nov 22, 2024

Abstract:Optical flow estimation is extensively used in autonomous driving and video editing. While existing models demonstrate state-of-the-art performance across various benchmarks, the robustness of these methods has been infrequently investigated. Although some research has focused on the robustness of optical flow models against adversarial attacks, there has been a lack of studies investigating their robustness to common corruptions. Taking into account the unique temporal characteristics of optical flow, we introduce 7 temporal corruptions specifically designed for benchmarking the robustness of optical flow models, in addition to 17 classical single-image corruptions, among which an advanced PSF Blur simulation method is applied. Two robustness benchmarks, KITTI-FC and GoPro-FC, are subsequently established as the first corruption robustness benchmarks for optical flow estimation, with Out-Of-Domain (OOD) and In-Domain (ID) settings to facilitate comprehensive studies. Three robustness metrics, Corruption Robustness Error (CRE), Corruption Robustness Error ratio (CREr), and Relative Corruption Robustness Error (RCRE), are further introduced to quantify the robustness of optical flow estimation. 29 model variants from 15 optical flow methods are evaluated, yielding 10 intriguing observations, such as 1) the absolute robustness of a model is heavily dependent on its estimation performance; 2) corruptions that diminish local information are more harmful than those that reduce visual effects. We also give suggestions for the design and application of optical flow models. We anticipate that our benchmark will serve as a foundational resource for advancing research in robust optical flow estimation. The benchmarks and source code will be released at https://github.com/ZhonghuaYi/optical_flow_robustness_benchmark.

EI-Nexus: Towards Unmediated and Flexible Inter-Modality Local Feature Extraction and Matching for Event-Image Data

Oct 29, 2024

Abstract:Event cameras offer high temporal resolution and high dynamic range, yet inter-modality local feature extraction and matching of event-image data has received limited research attention. We propose EI-Nexus, an unmediated and flexible framework that integrates two modality-specific keypoint extractors and a feature matcher. To achieve keypoint extraction across viewpoint and modality changes, we introduce Local Feature Distillation (LFD), which transfers the viewpoint consistency from a well-learned image extractor to the event extractor, ensuring robust feature correspondence. Furthermore, with the help of Context Aggregation (CA), a remarkable enhancement is observed in feature matching. We further establish the first two inter-modality feature matching benchmarks, MVSEC-RPE and EC-RPE, to assess relative pose estimation on event-image data. Our approach outperforms traditional methods that rely on explicit modal transformation, offering more unmediated and adaptable feature extraction and matching, and achieving better keypoint similarity and state-of-the-art results on the MVSEC-RPE and EC-RPE benchmarks. The source code and benchmarks will be made publicly available at https://github.com/ZhonghuaYi/EI-Nexus_official.

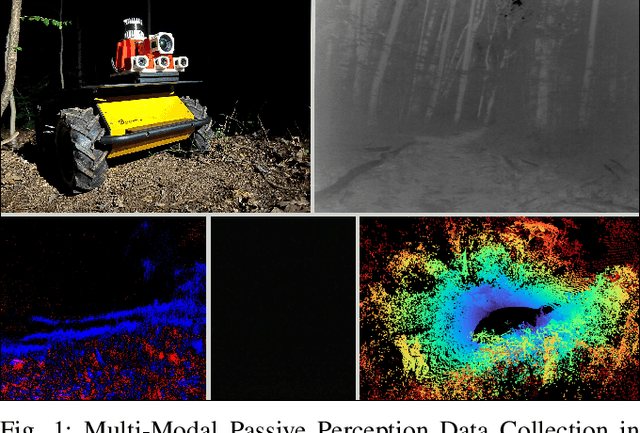

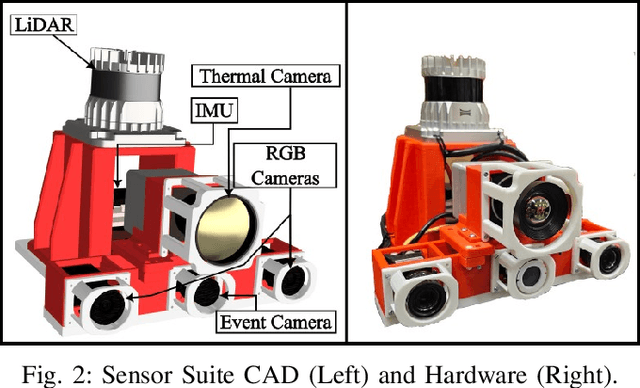

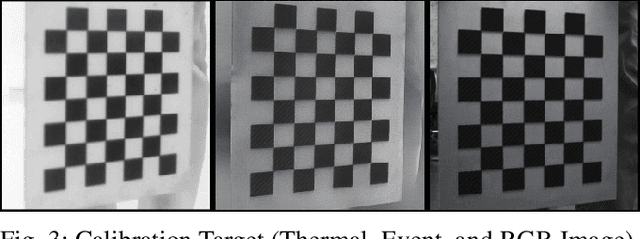

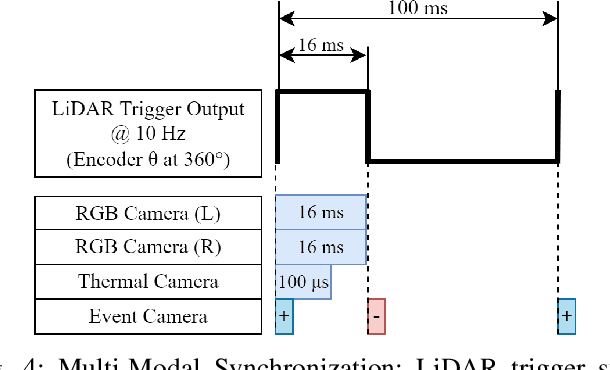

M2P2: A Multi-Modal Passive Perception Dataset for Off-Road Mobility in Extreme Low-Light Conditions

Oct 01, 2024

Abstract:Long-duration, off-road, autonomous missions require robots to continuously perceive their surroundings regardless of the ambient lighting conditions. Most existing autonomy systems heavily rely on active sensing, e.g., LiDAR, RADAR, and Time-of-Flight sensors, or use (stereo) visible light imaging sensors, e.g., color cameras, to perceive environment geometry and semantics. In scenarios where fully passive perception is required and lighting conditions are degraded to an extent that visible light cameras fail to perceive, most downstream mobility tasks such as obstacle avoidance become impossible. To address such a challenge, this paper presents a Multi-Modal Passive Perception dataset, M2P2, to enable off-road mobility in low-light to no-light conditions. We design a multi-modal sensor suite including thermal, event, and stereo RGB cameras, GPS, two Inertia Measurement Units (IMUs), as well as a high-resolution LiDAR for ground truth, with a novel multi-sensor calibration procedure that can efficiently transform multi-modal perceptual streams into a common coordinate system. Our 10-hour, 32 km dataset also includes mobility data such as robot odometry and actions and covers well-lit, low-light, and no-light conditions, along with paved, on-trail, and off-trail terrain. Our results demonstrate that off-road mobility is possible through only passive perception in extreme low-light conditions using end-to-end learning and classical planning. The project website can be found at https://cs.gmu.edu/~xiao/Research/M2P2/

P2U-SLAM: A Monocular Wide-FoV SLAM System Based on Point Uncertainty and Pose Uncertainty

Sep 16, 2024

Abstract:This paper presents P2U-SLAM, a visual Simultaneous Localization And Mapping (SLAM) system with a wide Field of View (FoV) camera, which utilizes pose uncertainty and point uncertainty. While the wide FoV enables considerable repetitive observations of historical map points for matching cross-view features, the data properties of the historical map points and the poses of historical keyframes have changed during the optimization process. The neglect of data property changes triggers the absence of a partial information matrix in optimization and leads to the risk of long-term positioning performance degradation. The purpose of our research is to reduce the risk of the wide field of view visual input to the SLAM system. Based on the conditional probability model, this work reveals the definite impact of the above data properties changes on the optimization process, concretizes it as point uncertainty and pose uncertainty, and gives a specific mathematical form. P2U-SLAM respectively embeds point uncertainty and pose uncertainty into the tracking module and local mapping, and updates these uncertainties after each optimization operation including local mapping, map merging, and loop closing. We present an exhaustive evaluation in 27 sequences from two popular public datasets with wide-FoV visual input. P2U-SLAM shows excellent performance compared with other state-of-the-art methods. The source code will be made publicly available at https://github.com/BambValley/P2U-SLAM.

BaichuanSEED: Sharing the Potential of ExtensivE Data Collection and Deduplication by Introducing a Competitive Large Language Model Baseline

Aug 27, 2024

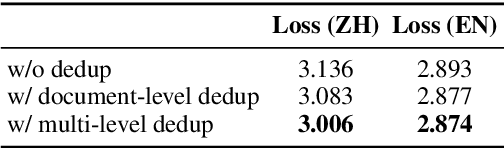

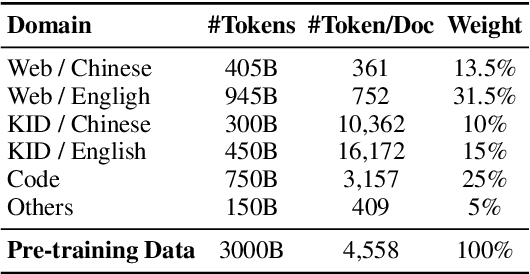

Abstract:The general capabilities of Large Language Models (LLMs) rely heavily on the composition and selection of extensive pretraining datasets, which several institutions treat as commercial secrets. To mitigate this issue, we open-source the details of a universally applicable data processing pipeline and validate its effectiveness and potential by introducing a competitive LLM baseline. Specifically, the data processing pipeline consists of broad collection to scale up and reweighting to improve quality. We then pretrain a 7B model, BaichuanSEED, with 3T tokens processed by our pipeline without any deliberate downstream task-related optimization, followed by an easy but effective supervised fine-tuning stage. BaichuanSEED demonstrates consistency and predictability throughout training and achieves performance on comprehensive benchmarks comparable to that of several advanced commercial large language models, such as Qwen1.5 and Llama3. We also conduct several heuristic experiments to discuss the potential for further optimization of downstream tasks, such as mathematics and coding.

Improving Sequential Market Clearing via Value-oriented Renewable Energy Forecasting

May 15, 2024

Abstract:Large penetration of renewable energy sources (RESs) brings huge uncertainty into the electricity markets. While existing deterministic market clearing fails to accommodate the uncertainty, the recently proposed stochastic market clearing struggles to achieve desirable market properties. In this work, we propose a value-oriented forecasting approach, which tactically determines the RES generation that enters the day-ahead market. With such a forecast, the existing deterministic market clearing framework can be maintained, and the overall day-ahead and real-time operation cost is reduced. At the training phase, the forecast model parameters are estimated to minimize the expected overall day-ahead and real-time operation cost, instead of minimizing forecast errors in a statistical sense. Theoretically, we derive the exact form of the loss function for training the forecast model that aligns with such a goal. For market clearing modeled by linear programs, this loss function is a piecewise linear function. Additionally, we derive the analytical gradient of the loss function with respect to the forecast, which inspires an efficient training strategy. A numerical study shows that our forecasts can significantly reduce the overall cost of deterministic market clearing compared to a quality-oriented forecasting approach.

LF-PGVIO: A Visual-Inertial-Odometry Framework for Large Field-of-View Cameras using Points and Geodesic Segments

Jun 11, 2023

Abstract:In this paper, we propose LF-PGVIO, a Visual-Inertial-Odometry (VIO) framework for large Field-of-View (FoV) cameras with a negative plane using points and geodesic segments. Notoriously, when the FoV of a panoramic camera reaches the negative half-plane, the image cannot be unfolded into a single pinhole image. Moreover, if a traditional straight-line detection method is directly applied to the original panoramic image, it cannot be normally used due to the large distortions in the panoramas and remains under-explored in the literature. To address these challenges, we put forward LF-PGVIO, which can provide line constraints for cameras with large FoV, even for cameras with negative-plane FoV, and directly extract omnidirectional curve segments from the raw omnidirectional image. We propose an Omnidirectional Curve Segment Detection (OCSD) method combined with a camera model which is applicable to images with large distortions, such as panoramic annular images, fisheye images, and various panoramic images. Each point on the image is projected onto the sphere, and the detected omnidirectional curve segments in the image named geodesic segments must satisfy the criterion of being a geodesic segment on the unit sphere. The detected geodesic segment is sliced into multiple straight-line segments according to the radian of the geodesic, and descriptors are extracted separately and recombined to obtain new descriptors. Based on descriptor matching, we obtain the constraint relationship of the 3D line segments between multiple frames. In our VIO system, we use sliding window optimization using point feature residuals, line feature residuals, and IMU residuals. Our evaluation of the proposed system on public datasets demonstrates that LF-PGVIO outperforms state-of-the-art methods in terms of accuracy and robustness. Code will be open-sourced at https://github.com/flysoaryun/LF-PGVIO.
