Mohammad Nazeri

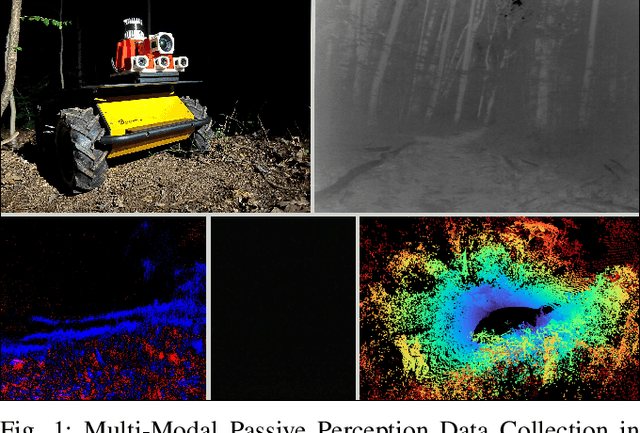

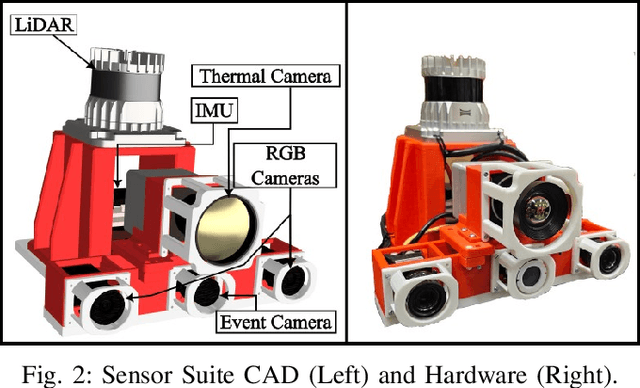

M2P2: A Multi-Modal Passive Perception Dataset for Off-Road Mobility in Extreme Low-Light Conditions

Oct 01, 2024

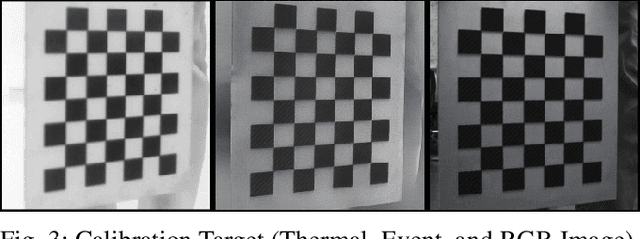

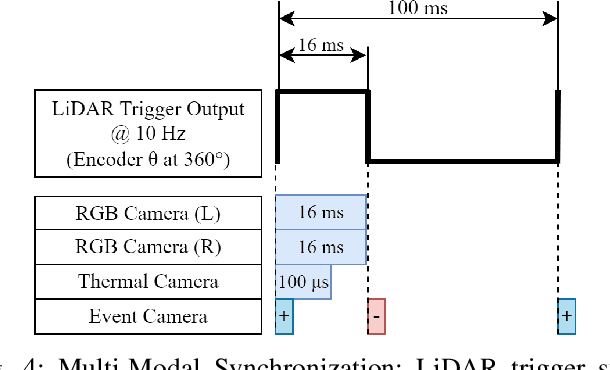

Abstract: Long-duration, off-road, autonomous missions require robots to continuously perceive their surroundings regardless of the ambient lighting conditions. Most existing autonomy systems heavily rely on active sensing, e.g., LiDAR, RADAR, and Time-of-Flight sensors, or use (stereo) visible light imaging sensors, e.g., color cameras, to perceive environment geometry and semantics. In scenarios where fully passive perception is required and lighting conditions are degraded to an extent that visible light cameras fail to perceive, most downstream mobility tasks such as obstacle avoidance become impossible. To address such a challenge, this paper presents a Multi-Modal Passive Perception dataset, M2P2, to enable off-road mobility in low-light to no-light conditions. We design a multi-modal sensor suite including thermal, event, and stereo RGB cameras, GPS, two Inertial Measurement Units (IMUs), as well as a high-resolution LiDAR for ground truth, with a novel multi-sensor calibration procedure that can efficiently transform multi-modal perceptual streams into a common coordinate system. Our 10-hour, 32 km dataset also includes mobility data such as robot odometry and actions and covers well-lit, low-light, and no-light conditions, along with paved, on-trail, and off-trail terrain. Our results demonstrate that off-road mobility is possible through only passive perception in extreme low-light conditions using end-to-end learning and classical planning. The project website can be found at https://cs.gmu.edu/~xiao/Research/M2P2/

Traverse the Non-Traversable: Estimating Traversability for Wheeled Mobility on Vertically Challenging Terrain

Sep 26, 2024

Abstract: Most traversability estimation techniques divide off-road terrain into traversable (e.g., pavement, gravel, and grass) and non-traversable (e.g., boulders, vegetation, and ditches) regions and then inform subsequent planners to produce trajectories on the traversable part. However, recent research demonstrated that wheeled robots can traverse vertically challenging terrain (e.g., extremely rugged boulders comparable in size to the vehicles themselves), which unfortunately would be deemed as non-traversable by existing techniques. Motivated by such limitations, this work aims at identifying the traversable from the seemingly non-traversable, vertically challenging terrain based on past kinodynamic vehicle-terrain interactions in a data-driven manner. Our new Traverse the Non-Traversable (TNT) traversability estimator can efficiently guide a downstream sampling-based planner containing a high-precision 6-DoF kinodynamic model, which becomes deployable onboard a small-scale vehicle. Additionally, the estimated traversability can also be used as a costmap to plan global and local paths without sampling. Our experimental results show that TNT can improve planning performance, efficiency, and stability by 50%, 26.7%, and 9.2%, respectively, on a physical robot platform.

VertiEncoder: Self-Supervised Kinodynamic Representation Learning on Vertically Challenging Terrain

Sep 17, 2024

Abstract: We present VertiEncoder, a self-supervised representation learning approach for robot mobility on vertically challenging terrain. Using the same pre-training process, VertiEncoder can handle four different downstream tasks, including forward kinodynamics learning, inverse kinodynamics learning, behavior cloning, and patch reconstruction with a single representation. VertiEncoder uses a TransformerEncoder to learn the local context of its surroundings by random masking and next patch reconstruction. We show that VertiEncoder achieves better performance across all four different tasks compared to specialized End-to-End models with 77% fewer parameters. We also show VertiEncoder's comparable performance against state-of-the-art kinodynamic modeling and planning approaches in real-world robot deployment. These results underscore the efficacy of VertiEncoder in mitigating overfitting and fostering more robust generalization across diverse environmental contexts and downstream vehicle kinodynamic tasks.

Terrain-Attentive Learning for Efficient 6-DoF Kinodynamic Modeling on Vertically Challenging Terrain

Mar 25, 2024

Abstract: Wheeled robots have recently demonstrated superior mechanical capability to traverse vertically challenging terrain (e.g., extremely rugged boulders comparable in size to the vehicles themselves). Negotiating such terrain introduces significant variations of vehicle pose in all six Degrees-of-Freedom (DoFs), leading to imbalanced contact forces, varying momentum, and chassis deformation due to non-rigid tires and suspensions. To autonomously navigate on vertically challenging terrain, all these factors need to be efficiently reasoned within limited onboard computation and strict real-time constraints. In this paper, we propose a 6-DoF kinodynamics learning approach that is attentive only to the specific underlying terrain critical to the current vehicle-terrain interaction, so that it can be efficiently queried in real-time motion planners onboard small robots. Physical experiment results show our Terrain-Attentive Learning demonstrates on average 51.1% reduction in model prediction error among all 6 DoFs compared to a state-of-the-art model for vertically challenging terrain.

VANP: Learning Where to See for Navigation with Self-Supervised Vision-Action Pre-Training

Mar 12, 2024

Abstract: Humans excel at efficiently navigating through crowds without collision by focusing on specific visual regions relevant to navigation. However, most robotic visual navigation methods rely on deep learning models pre-trained on vision tasks, which prioritize salient objects that are not necessarily relevant to navigation and are potentially misleading. Alternative approaches train specialized navigation models from scratch, requiring significant computation. On the other hand, self-supervised learning has revolutionized computer vision and natural language processing, but its application to robotic navigation remains underexplored due to the difficulty of defining effective self-supervision signals. Motivated by these observations, in this work, we propose a Self-Supervised Vision-Action Model for Visual Navigation Pre-Training (VANP). Instead of detecting salient objects that are beneficial for tasks such as classification or detection, VANP learns to focus only on specific visual regions that are relevant to the navigation task. To achieve this, VANP uses a history of visual observations, future actions, and a goal image for self-supervision, and embeds them using two small Transformer Encoders. Then, VANP maximizes the information between the embeddings by using a mutual information maximization objective function. We demonstrate that most VANP-extracted features match with human navigation intuition. VANP achieves performance comparable to that of models learned end-to-end while using half the training time, and to that of models trained on a large-scale, fully supervised dataset, i.e., ImageNet, while using only 0.08% of the data.

CAHSOR: Competence-Aware High-Speed Off-Road Ground Navigation in SE(3)

Feb 10, 2024

Abstract: While the workspace of traditional ground vehicles is usually assumed to be in a 2D plane, i.e., SE(2), such an assumption may not hold when they drive at high speeds on unstructured off-road terrain: high-speed sharp turns on high-friction surfaces may lead to vehicle rollover; turning aggressively on loose gravel or grass may violate the non-holonomic constraint and cause significant lateral sliding; driving quickly on rugged terrain will produce extensive vibration along the vertical axis. Therefore, most off-road vehicles are currently limited to driving only at low speeds to ensure vehicle stability and safety. In this work, we aim at empowering high-speed off-road vehicles with competence awareness in SE(3) so that they can reason about the consequences of taking aggressive maneuvers on different terrain with a 6-DoF forward kinodynamic model. The model is learned from visual and inertial Terrain Representation for Off-road Navigation (TRON) using multimodal, self-supervised vehicle-terrain interactions. We demonstrate the efficacy of our Competence-Aware High-Speed Off-Road (CAHSOR) navigation approach on a physical ground robot in both an autonomous navigation and a human shared-control setup and show that CAHSOR can efficiently reduce vehicle instability by 62% while only compromising 8.6% average speed with the help of TRON.

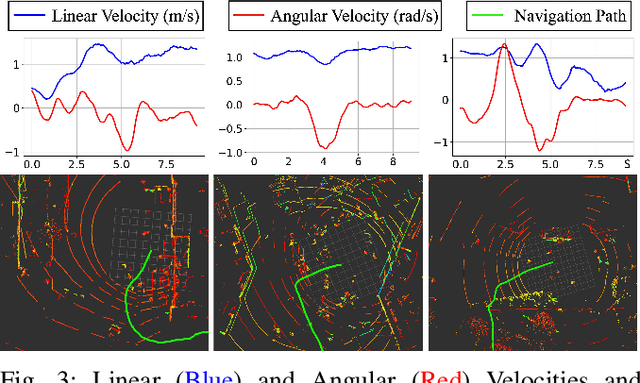

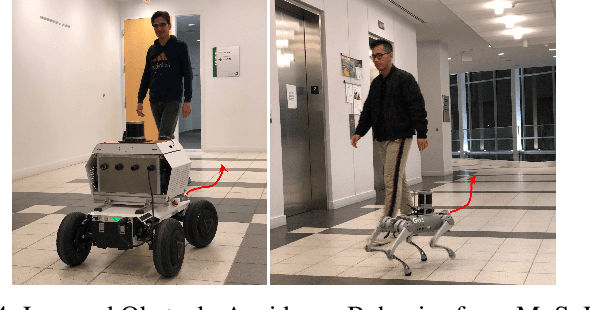

A Study on Learning Social Robot Navigation with Multimodal Perception

Sep 22, 2023

Abstract: Autonomous mobile robots need to perceive their environments with their onboard sensors (e.g., LiDARs and RGB cameras) and then make appropriate navigation decisions. In human-inhabited public spaces, such a navigation task involves more than obstacle avoidance: it also requires considering surrounding humans and their intentions and adjusting the navigation behavior in response to the underlying social norms, i.e., being socially compliant. Machine learning methods have been shown to be effective in capturing those complex and subtle social interactions in a data-driven manner, without explicitly hand-crafting simplified models or cost functions. Considering multiple available sensor modalities and the efficiency of learning methods, this paper presents a comprehensive study on learning social robot navigation with multimodal perception using a large-scale real-world dataset. The study investigates social robot navigation decision making on both the global and local planning levels and contrasts unimodal and multimodal learning against a set of classical navigation approaches in different social scenarios, while also analyzing the training and generalizability performance from the learning perspective. We also conduct a human study on how learning with multimodal perception affects the perceived social compliance. The results show that multimodal learning has a clear advantage over unimodal learning in both dataset and human studies. We open-source our code for the community's future use to study multimodal perception for learning social robot navigation.

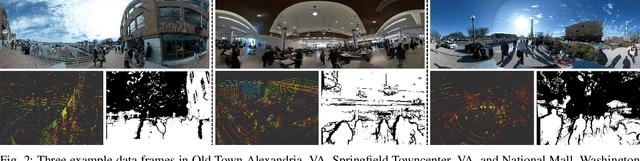

Toward Human-Like Social Robot Navigation: A Large-Scale, Multi-Modal, Social Human Navigation Dataset

Mar 27, 2023

Abstract: Humans are adept at navigating public spaces shared with others, where current autonomous mobile robots still struggle: while safely and efficiently reaching their goals, humans communicate their intentions and conform to unwritten social norms on a daily basis; conversely, robots become clumsy in those daily social scenarios, getting stuck in dense crowds, surprising nearby pedestrians, or even causing collisions. While recent research on robot learning has shown promise in data-driven social robot navigation, good-quality training data is still difficult to acquire through either trial and error or expert demonstrations. In this work, we propose to utilize the body of rich, widely available, social human navigation data in many natural human-inhabited public spaces for robots to learn similar, human-like, socially compliant navigation behaviors. To be specific, we design an open-source egocentric data collection sensor suite wearable by walking humans to provide multi-modal robot perception data; we collect a large-scale (~50 km, 10 hours, 150 trials, 7 humans) dataset in a variety of public spaces which contain numerous natural social navigation interactions; we analyze our dataset, demonstrate its usability, and point out future research directions and use cases.

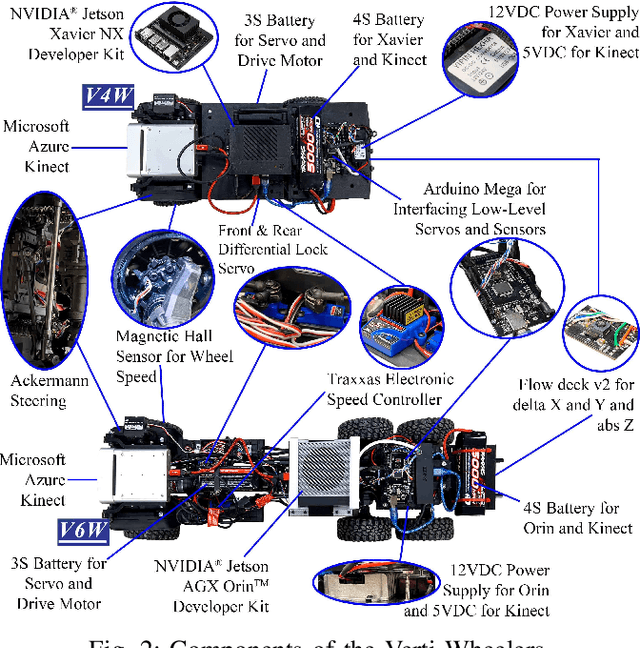

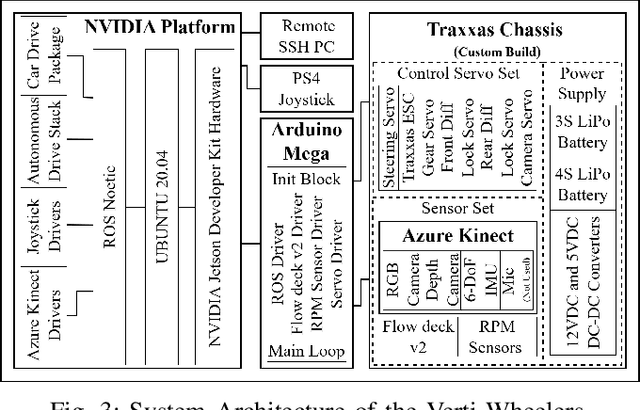

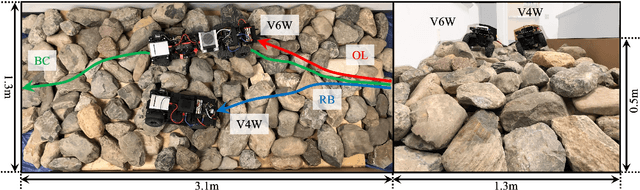

Toward Wheeled Mobility on Vertically Challenging Terrain: Platforms, Datasets, and Algorithms

Mar 02, 2023

Abstract: Most conventional wheeled robots can only move in flat environments and simply divide their planar workspaces into free spaces and obstacles. Deeming obstacles as non-traversable significantly limits wheeled robots' mobility in real-world, non-flat, off-road environments, where part of the terrain (e.g., steep slopes, rugged boulders) will be treated as non-traversable obstacles. To improve wheeled mobility in those non-flat environments with vertically challenging terrain, we present two wheeled platforms with little hardware modification compared to conventional wheeled robots; we collect datasets of our wheeled robots crawling over previously non-traversable, vertically challenging terrain to facilitate data-driven mobility; we also present algorithms and their experimental results to show that conventional wheeled robots have previously unrealized potential of moving through vertically challenging terrain. We make our platforms, datasets, and algorithms publicly available to facilitate future research on wheeled mobility.
