Maggie Wigness

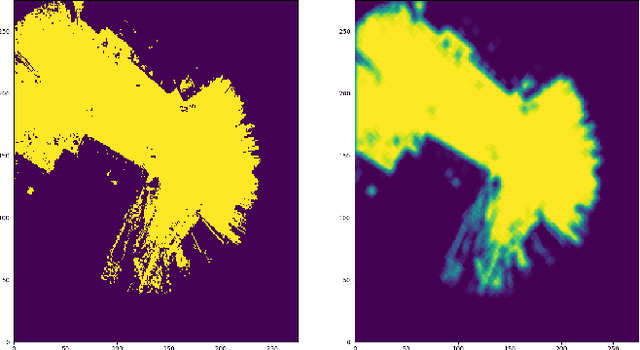

Temporally Consistent Unsupervised Segmentation for Mobile Robot Perception

Jul 29, 2025

Abstract:Rapid progress in terrain-aware autonomous ground navigation has been driven by advances in supervised semantic segmentation. However, these methods rely on costly data collection and labor-intensive ground truth labeling to train deep models. Furthermore, autonomous systems are increasingly deployed in unrehearsed, unstructured environments where no labeled data exists and semantic categories may be ambiguous or domain-specific. Recent zero-shot approaches to unsupervised segmentation have shown promise in such settings but typically operate on individual frames, lacking temporal consistency, a critical property for robust perception in unstructured environments. To address this gap we introduce Frontier-Seg, a method for temporally consistent unsupervised segmentation of terrain from mobile robot video streams. Frontier-Seg clusters superpixel-level features extracted from foundation model backbones (specifically DINOv2) and enforces temporal consistency across frames to identify persistent terrain boundaries or frontiers without human supervision. We evaluate Frontier-Seg on a diverse set of benchmark datasets, including RUGD and RELLIS-3D, demonstrating its ability to perform unsupervised segmentation across unstructured off-road environments.
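The abstract does not say how temporal consistency is enforced. As a rough illustration only, the sketch below shows one simple way to keep cluster labels stable between frames: each cluster centroid in the current frame inherits the label of the nearest centroid from the previous frame, and a new label is created when nothing is close enough. The function name `propagate_labels`, the `max_dist` threshold, and the toy 2-D feature vectors are all assumptions made for this example, not part of Frontier-Seg.

```python
import math


def propagate_labels(prev_centroids, curr_centroids, max_dist=0.5):
    """Map each current-frame cluster index to a persistent label ID.

    prev_centroids: dict of label ID -> feature vector from the previous frame.
    curr_centroids: list of feature vectors, one per cluster in the current frame.
    max_dist: hypothetical distance threshold above which a new label is created.
    """
    next_id = max(prev_centroids, default=-1) + 1
    mapping = {}
    for idx, centroid in enumerate(curr_centroids):
        # Find the closest centroid from the previous frame.
        best_id, best_dist = None, float("inf")
        for label_id, prev in prev_centroids.items():
            dist = math.dist(centroid, prev)
            if dist < best_dist:
                best_id, best_dist = label_id, dist
        if best_id is not None and best_dist <= max_dist:
            mapping[idx] = best_id  # reuse the persistent label
        else:
            mapping[idx] = next_id  # previously unseen terrain cluster
            next_id += 1
    return mapping


# Toy example: two known clusters, then a frame with a new third cluster.
prev = {0: [0.0, 0.0], 1: [1.0, 1.0]}
curr = [[0.9, 1.1], [0.1, 0.0], [5.0, 5.0]]
mapping = propagate_labels(prev, curr)
```

Nearest-centroid matching can assign two current clusters to the same earlier label, which merges them. A one-to-one assignment would avoid that at the cost of extra bookkeeping.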

GO: The Great Outdoors Multimodal Dataset

Jan 31, 2025

Abstract:The Great Outdoors (GO) dataset is a multi-modal annotated data resource aimed at advancing ground robotics research in unstructured environments. This dataset provides the most comprehensive set of data modalities and annotations compared to existing off-road datasets. In total, the GO dataset includes six unique sensor types with high-quality semantic annotations and GPS traces to support tasks such as semantic segmentation, object detection, and SLAM. The diverse environmental conditions represented in the dataset present significant real-world challenges that provide opportunities to develop more robust solutions to support the continued advancement of field robotics, autonomous exploration, and perception systems in natural environments. The dataset can be downloaded at: https://www.unmannedlab.org/the-great-outdoors-dataset/

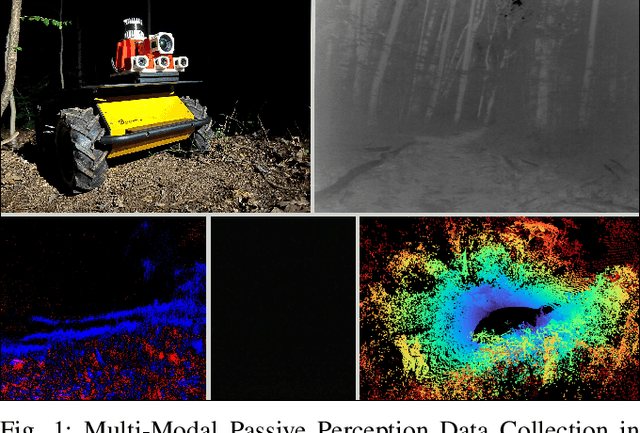

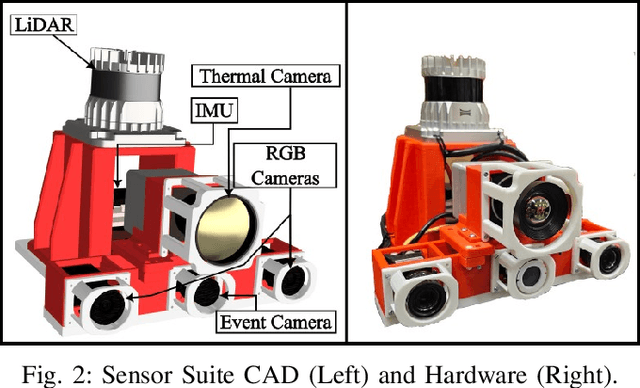

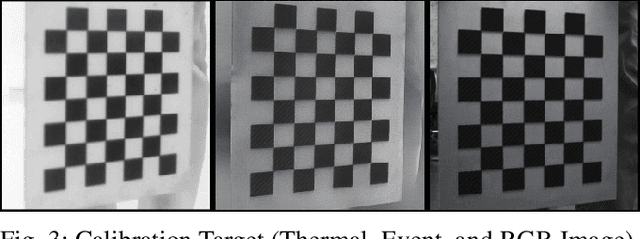

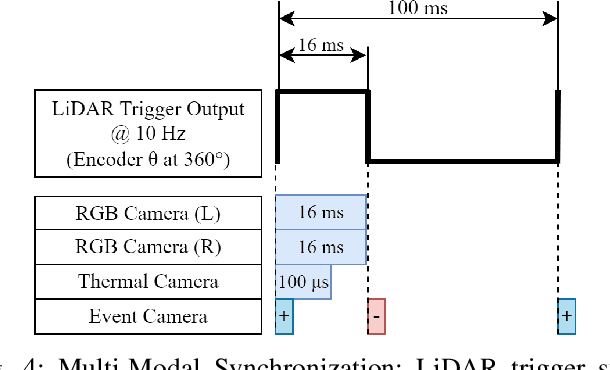

M2P2: A Multi-Modal Passive Perception Dataset for Off-Road Mobility in Extreme Low-Light Conditions

Oct 01, 2024

Abstract:Long-duration, off-road, autonomous missions require robots to continuously perceive their surroundings regardless of the ambient lighting conditions. Most existing autonomy systems heavily rely on active sensing, e.g., LiDAR, RADAR, and Time-of-Flight sensors, or use (stereo) visible light imaging sensors, e.g., color cameras, to perceive environment geometry and semantics. In scenarios where fully passive perception is required and lighting conditions are degraded to an extent that visible light cameras fail to perceive, most downstream mobility tasks such as obstacle avoidance become impossible. To address such a challenge, this paper presents a Multi-Modal Passive Perception dataset, M2P2, to enable off-road mobility in low-light to no-light conditions. We design a multi-modal sensor suite including thermal, event, and stereo RGB cameras, GPS, two Inertial Measurement Units (IMUs), as well as a high-resolution LiDAR for ground truth, with a novel multi-sensor calibration procedure that can efficiently transform multi-modal perceptual streams into a common coordinate system. Our 10-hour, 32 km dataset also includes mobility data such as robot odometry and actions and covers well-lit, low-light, and no-light conditions, along with paved, on-trail, and off-trail terrain. Our results demonstrate that off-road mobility is possible through only passive perception in extreme low-light conditions using end-to-end learning and classical planning. The project website can be found at https://cs.gmu.edu/~xiao/Research/M2P2/

On the Efficiency and Robustness of Vibration-based Foundation Models for IoT Sensing: A Case Study

Apr 03, 2024

Abstract:This paper demonstrates the potential of vibration-based Foundation Models (FMs), pre-trained with unlabeled sensing data, to improve the robustness of run-time inference in (a class of) IoT applications. A case study is presented featuring a vehicle classification application using acoustic and seismic sensing. The work is motivated by the success of foundation models in the areas of natural language processing and computer vision, leading to generalizations of the FM concept to other domains as well, where significant amounts of unlabeled data exist that can be used for self-supervised pre-training. One such domain is IoT applications. Foundation models for selected sensing modalities in the IoT domain can be pre-trained in an environment-agnostic fashion using available unlabeled sensor data and then fine-tuned to the deployment at hand using a small amount of labeled data. The paper shows that the pre-training/fine-tuning approach improves the robustness of downstream inference and facilitates adaptation to different environmental conditions. More specifically, we present a case study in a real-world setting to evaluate a simple (vibration-based) FM-like model, called FOCAL, demonstrating its superior robustness and adaptation, compared to conventional supervised deep neural networks (DNNs). We also demonstrate its superior convergence over supervised solutions. Our findings highlight the advantages of vibration-based FMs (and FM-inspired self-supervised models in general) in terms of inference robustness, runtime efficiency, and model adaptation (via fine-tuning) in resource-limited IoT settings.

A Mapping of Assurance Techniques for Learning Enabled Autonomous Systems to the Systems Engineering Lifecycle

Dec 30, 2022

Abstract:Learning enabled autonomous systems provide increased capabilities compared to traditional systems. However, the complexity and probabilistic nature of the underlying methods enabling such capabilities present challenges for current systems engineering processes for assurance, and test, evaluation, verification, and validation (TEVV). This paper provides a preliminary attempt to map recently developed technical approaches in the assurance and TEVV of learning enabled autonomous systems (LEAS) literature to a traditional systems engineering V-model. This mapping categorizes such techniques into three main approaches: development, acquisition, and sustainment. We review the latest techniques to develop safe, reliable, and resilient learning enabled autonomous systems, without recommending radical and impractical changes to existing systems engineering processes. By performing this mapping, we seek to assist acquisition professionals by (i) informing comprehensive test and evaluation planning, and (ii) objectively communicating risk to leaders.

NAUTS: Negotiation for Adaptation to Unstructured Terrain Surfaces

Jul 28, 2022

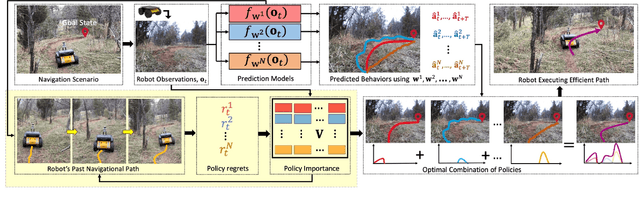

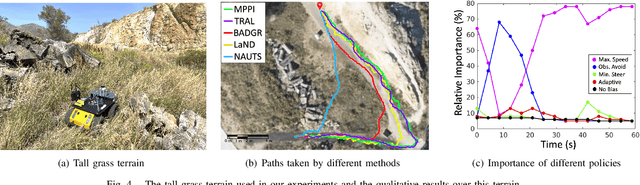

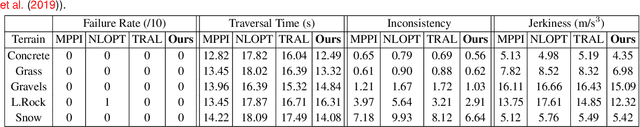

Abstract:When robots operate in real-world off-road environments with unstructured terrains, the ability to adapt their navigational policy is critical for effective and safe navigation. However, off-road terrains introduce several challenges to robot navigation, including dynamic obstacles and terrain uncertainty, leading to inefficient traversal or navigation failures. To address these challenges, we introduce a novel approach for adaptation by negotiation that enables a ground robot to adjust its navigational behaviors through a negotiation process. Our approach first learns prediction models for various navigational policies to function as a terrain-aware joint local controller and planner. Then, through a new negotiation process, our approach learns from various policies' interactions with the environment to agree on the optimal combination of policies in an online fashion to adapt robot navigation to unstructured off-road terrains on the fly. Additionally, we implement a new optimization algorithm that offers the optimal solution for robot negotiation in real-time during execution. Experimental results have validated that our method for adaptation by negotiation outperforms previous methods for robot navigation, especially over unseen and uncertain dynamic terrains.

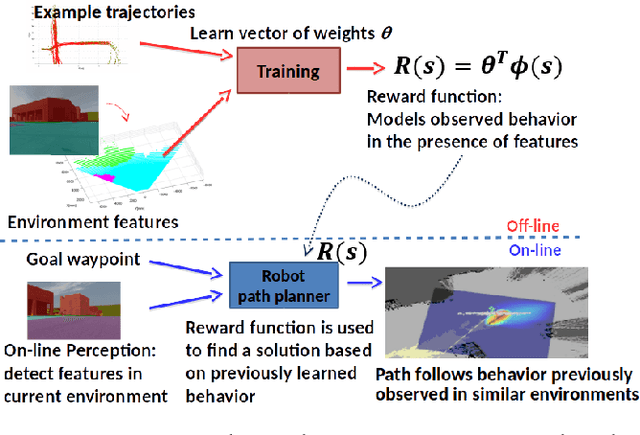

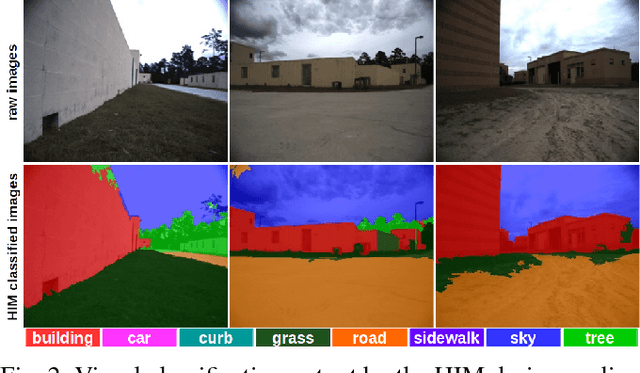

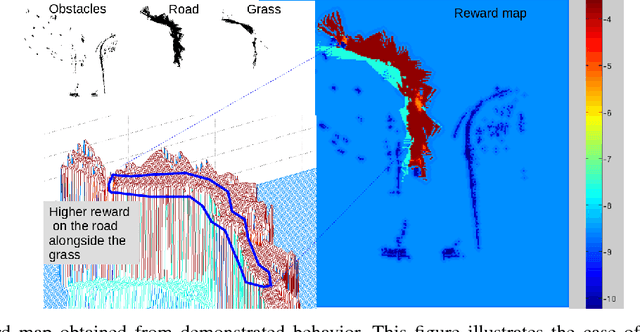

Robot navigation from human demonstration: learning control behaviors with environment feature maps

May 06, 2022

Abstract:When working alongside human collaborators in dynamic and unstructured environments, such as disaster recovery or military operation, fast field adaptation is necessary for an unmanned ground vehicle (UGV) to perform its duties or learn novel tasks. In these scenarios, personnel and equipment are constrained, making training with minimal human supervision a desirable learning attribute. We address the problem of making UGVs more reliable and adaptable teammates with a novel framework that uses visual perception and inverse optimal control to learn traversal costs for environment features. Through extensive evaluation in a real-world environment, we show that our framework requires few human demonstrated trajectory exemplars to learn feature costs that reliably encode several different traversal behaviors. Additionally, we present an on-line version of the framework that allows a human teammate to intervene during live operation to correct deteriorated behavior or to adapt behavior to dynamic changes in complex and unstructured environments.

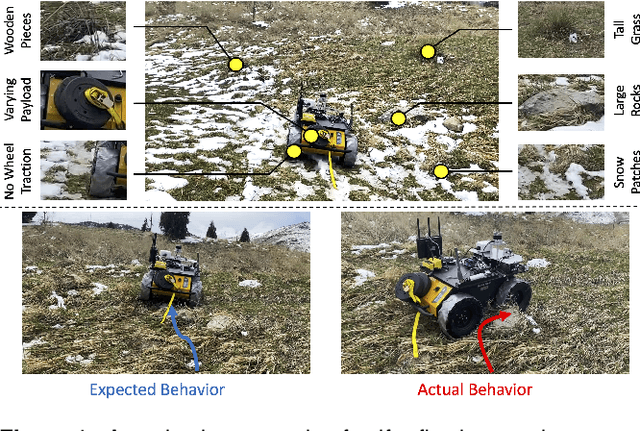

Self-Reflective Terrain-Aware Robot Adaptation for Consistent Off-Road Ground Navigation

Nov 12, 2021

Abstract:Ground robots require the crucial capability of traversing unstructured and unprepared terrains and avoiding obstacles to complete tasks in real-world robotics applications such as disaster response. When a robot operates in off-road field environments such as forests, the robot's actual behaviors often do not match its expected or planned behaviors, due to changes in the characteristics of terrains and the robot itself. Therefore, the capability of robot adaptation for consistent behavior generation is essential for maneuverability on unstructured off-road terrains. In order to address the challenge, we propose a novel method of self-reflective terrain-aware adaptation for ground robots to generate consistent controls to navigate over unstructured off-road terrains, which enables robots to more accurately execute the expected behaviors through robot self-reflection while adapting to varying unstructured terrains. To evaluate our method's performance, we conduct extensive experiments using real ground robots with various functionality changes over diverse unstructured off-road terrains. The comprehensive experimental results have shown that our self-reflective terrain-aware adaptation method enables ground robots to generate consistent navigational behaviors and outperforms the compared previous and baseline techniques.

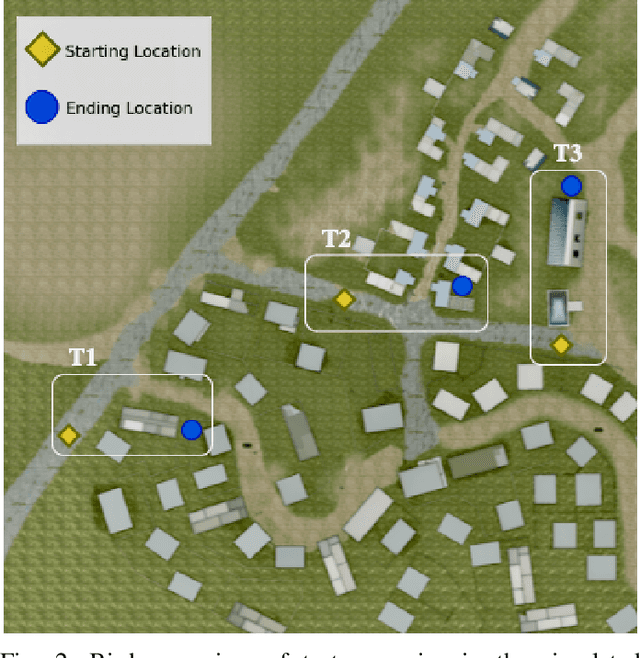

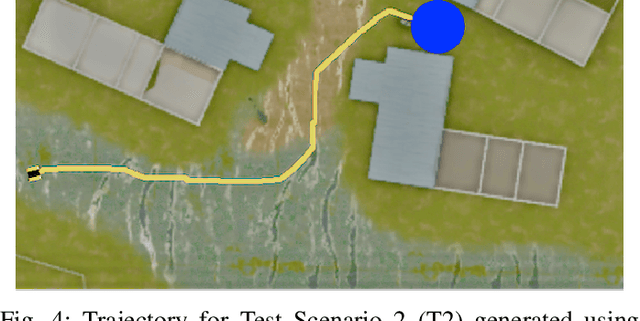

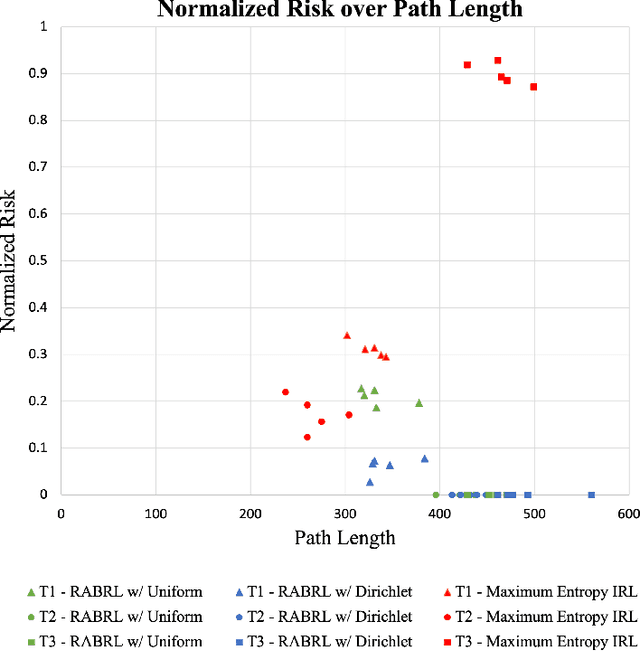

Risk Averse Bayesian Reward Learning for Autonomous Navigation from Human Demonstration

Jul 31, 2021

Abstract:Traditional imitation learning provides a set of methods and algorithms to learn a reward function or policy from expert demonstrations. Learning from demonstration has been shown to be advantageous for navigation tasks as it allows for machine learning non-experts to quickly provide information needed to learn complex traversal behaviors. However, a minimal set of demonstrations is unlikely to capture all relevant information needed to achieve the desired behavior in every possible future operational environment. Due to distributional shift among environments, a robot may encounter features that were rarely or never observed during training for which the appropriate reward value is uncertain, leading to undesired outcomes. This paper proposes a Bayesian technique which quantifies uncertainty over the weights of a linear reward function given a dataset of minimal human demonstrations to operate safely in dynamic environments. This uncertainty is quantified and incorporated into a risk averse set of weights used to generate cost maps for planning. Experiments in a 3-D environment with a simulated robot show that our proposed algorithm enables a robot to avoid dangerous terrain completely in two out of three test scenarios and accumulates a lower amount of risk than related approaches in all scenarios without requiring any additional demonstrations.
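The abstract does not give the exact risk measure. As a hedged illustration of the general idea, the sketch below takes posterior samples over the weights of a linear reward function, forms a pessimistic weight per feature (posterior mean minus `k` standard deviations), and turns it into a cell cost for planning. The mean-minus-deviation rule, the names `risk_averse_weights` and `cell_cost`, the parameter `k`, and the sample values are all assumptions for this example. The paper's formulation may differ.

```python
import statistics


def risk_averse_weights(weight_samples, k=1.0):
    """Per-feature posterior mean minus k standard deviations.

    weight_samples: list of weight vectors drawn from a posterior
    (hypothetical values in the example below). Assumes non-negative
    features, so lowering a weight always lowers the predicted reward.
    """
    n_features = len(weight_samples[0])
    weights = []
    for j in range(n_features):
        column = [sample[j] for sample in weight_samples]
        mean = statistics.fmean(column)
        std = statistics.pstdev(column)
        weights.append(mean - k * std)
    return weights


def cell_cost(features, weights):
    """Cost of a map cell: the negated linear reward w . phi(cell)."""
    return -sum(w * f for w, f in zip(weights, features))


# Feature 0 was seen often (samples agree); feature 1 was rarely seen.
samples = [
    [1.0, -0.5],
    [1.0, 0.5],
    [1.0, -1.5],
    [1.0, -0.5],
]
w_risk = risk_averse_weights(samples, k=1.0)
w_mean = risk_averse_weights(samples, k=0.0)
```

With these samples, the well-observed feature keeps its weight, while the uncertain feature becomes more costly under the risk-averse weights than under the posterior mean. This steers a planner away from terrain it knows little about.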

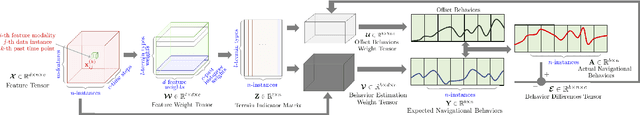

Robot Adaptation for Generating Consistent Navigational Behaviors over Unstructured Off-Road Terrain

Jan 01, 2021

Abstract:Terrain adaptation is an essential capability for a ground robot to effectively traverse unstructured off-road terrain in real-world field environments such as forests. However, the expected robot behaviors generated by terrain adaptation methods cannot always be executed accurately due to setbacks such as wheel slip and reduced tire pressure. To address this problem, we propose a novel approach for consistent behavior generation that enables the ground robot's actual behaviors to more accurately match expected behaviors while adapting to a variety of unstructured off-road terrain. Our approach learns offset behaviors that are used to compensate for the inconsistency between the actual and expected behaviors without requiring the explicit modeling of various setbacks. Our approach is also able to estimate the importance of the multi-modal features to improve terrain representations for better adaptation. In addition, we develop an algorithmic solver for our formulated regularized optimization problem, which is guaranteed to converge to the global optimal solution. To evaluate the method, we perform extensive experiments using various unstructured off-road terrain in real-world field environments. Experimental results have validated that our approach enables robots to traverse complex unstructured off-road terrain with more navigational behavior consistency, and it outperforms previous methods, particularly so on challenging terrain.
