Philip Osteen

GO: The Great Outdoors Multimodal Dataset

Jan 31, 2025

Abstract: The Great Outdoors (GO) dataset is a multi-modal annotated data resource aimed at advancing ground robotics research in unstructured environments. This dataset provides the most comprehensive set of data modalities and annotations compared to existing off-road datasets. In total, the GO dataset includes six unique sensor types with high-quality semantic annotations and GPS traces to support tasks such as semantic segmentation, object detection, and SLAM. The diverse environmental conditions represented in the dataset present significant real-world challenges that provide opportunities to develop more robust solutions to support the continued advancement of field robotics, autonomous exploration, and perception systems in natural environments. The dataset can be downloaded at: https://www.unmannedlab.org/the-great-outdoors-dataset/

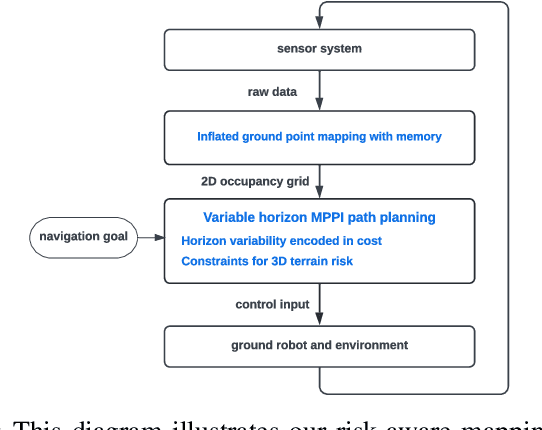

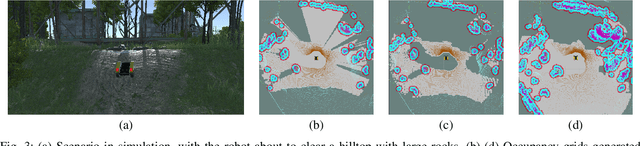

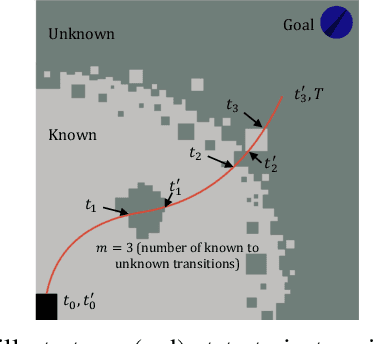

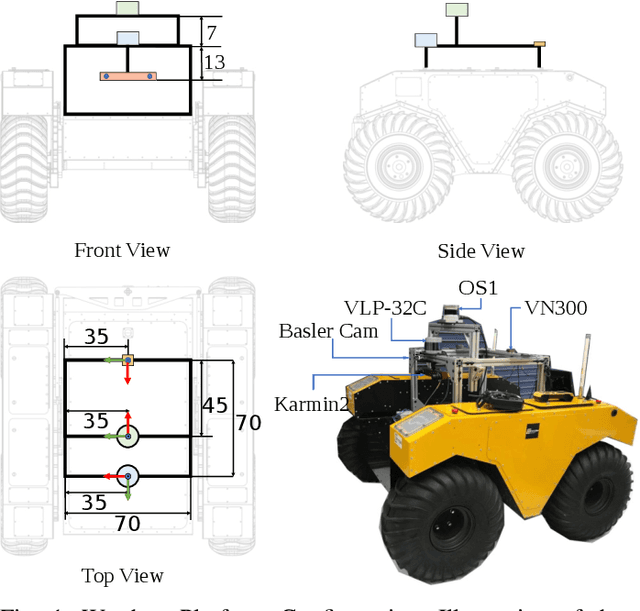

RAMP: A Risk-Aware Mapping and Planning Pipeline for Fast Off-Road Ground Robot Navigation

Oct 12, 2022

Abstract: A key challenge in fast ground robot navigation in 3D terrain is balancing robot speed and safety. Recent work has shown that 2.5D maps (2D representations with additional 3D information) are ideal for real-time safe and fast planning. However, raytracing, a prevalent method for generating the occupancy grid that serves as the base 2D representation, makes the generated map unsafe to plan in because it represents unknown space inaccurately. Additionally, existing planners such as MPPI do not reason about speeds in known free and unknown space separately, leading to slow plans. This work therefore first presents ground point inflation as a way to generate accurate occupancy grid maps from classified pointclouds. We then present an MPPI-based planner with embedded variability in horizon, which maximizes speed in known free space while retaining cautious penetration into unknown space. Finally, we integrate this mapping and planning pipeline with risk constraints arising from 3D terrain, and verify that it enables fast and safe navigation using simulations and a hardware demonstration.
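The abstract does not spell out how ground point inflation works, so the following is only a minimal sketch of one plausible reading: cells start as unknown, each ground-classified point marks a small neighbourhood as free, and obstacle-classified points mark their cell as occupied. The function name, the `inflation_cells` parameter, and the three cell states are assumptions made for illustration, not the paper's implementation.

```python
from typing import Iterable, List, Tuple

# Hypothetical cell states for this sketch.
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def build_occupancy_grid(
    points: Iterable[Tuple[float, float, str]],
    size: int,
    resolution: float,
    inflation_cells: int = 1,
) -> List[List[int]]:
    """Build a size x size grid from (x, y, label) points.

    label is "ground" or anything else (treated as an obstacle).
    Cells with no nearby ground return stay UNKNOWN instead of being
    assumed free, which is the property the abstract asks for.
    """
    grid = [[UNKNOWN] * size for _ in range(size)]
    obstacles = []
    for x, y, label in points:
        col, row = int(x // resolution), int(y // resolution)
        if not (0 <= row < size and 0 <= col < size):
            continue  # point falls outside the mapped area
        if label == "ground":
            # Inflate the ground return: mark its neighbourhood as free.
            for r in range(max(0, row - inflation_cells),
                           min(size, row + inflation_cells + 1)):
                for c in range(max(0, col - inflation_cells),
                               min(size, col + inflation_cells + 1)):
                    grid[r][c] = FREE
        else:
            obstacles.append((row, col))
    # Obstacles are written last so inflation never overwrites them.
    for row, col in obstacles:
        grid[row][col] = OCCUPIED
    return grid
```

In this sketch a planner would treat `UNKNOWN` cells as traversable only at reduced speed, which matches the abstract's distinction between known free and unknown space.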

SemCal: Semantic LiDAR-Camera Calibration using Neural Mutual Information Estimator

Sep 21, 2021

Abstract: This paper proposes SemCal: an automatic, targetless, extrinsic calibration algorithm for a LiDAR and camera system using semantic information. We leverage a neural information estimator to estimate the mutual information (MI) of semantic information extracted from each sensor measurement, facilitating semantic-level data association. By using a matrix exponential formulation of the $se(3)$ transformation and a kernel-based sampling method to sample from the camera measurement based on projected LiDAR points, we can formulate the LiDAR-camera calibration problem as a novel differentiable objective function that supports gradient-based optimization methods. We also introduce a semantic-based initial calibration method using 2D MI-based image registration and a Perspective-n-Point (PnP) solver. To evaluate performance, we demonstrate the robustness of our method and quantitatively analyze its accuracy using a synthetic dataset. We also evaluate our algorithm qualitatively on an urban benchmark dataset (KITTI360) and an off-road benchmark dataset (RELLIS-3D), using both hand-annotated ground truth labels and labels predicted by state-of-the-art deep learning models, showing improvement over recent comparable calibration approaches.
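The matrix exponential formulation mentioned above can be illustrated with a short sketch. It maps an unconstrained six-parameter twist (rotation part `omega`, translation part `v`) to a 4x4 rigid transform using the standard closed-form exponential for $se(3)$, which is what lets a gradient-based optimizer work on six free parameters. The function names are hypothetical, and the sketch uses plain Python lists rather than whatever tensor library the authors used.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def _skew(w: Sequence[float]) -> Matrix:
    """Skew-symmetric matrix K such that K @ p equals cross(w, p)."""
    wx, wy, wz = w
    return [[0.0, -wz, wy], [wz, 0.0, -wx], [-wy, wx, 0.0]]


def _matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def _identity_plus(a: float, k: Matrix, b: float, k2: Matrix) -> Matrix:
    """Return I + a*K + b*K^2 for 3x3 matrices."""
    return [[(1.0 if i == j else 0.0) + a * k[i][j] + b * k2[i][j]
             for j in range(3)] for i in range(3)]


def se3_exp(omega: Sequence[float], v: Sequence[float]) -> Matrix:
    """Exponential map from a twist (omega, v) in se(3) to a 4x4 transform."""
    theta = math.sqrt(sum(w * w for w in omega))
    k = _skew(omega)
    k2 = _matmul(k, k)
    if theta < 1e-8:
        # Small-angle limits of the coefficients below.
        a, b, c = 1.0, 0.5, 1.0 / 6.0
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / theta ** 2
        c = (theta - math.sin(theta)) / theta ** 3
    rot = _identity_plus(a, k, b, k2)    # Rodrigues rotation
    v_mat = _identity_plus(b, k, c, k2)  # couples rotation and translation
    t = [sum(v_mat[i][j] * v[j] for j in range(3)) for i in range(3)]
    return [rot[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(T: Matrix, p: Sequence[float]) -> List[float]:
    """Apply a 4x4 rigid transform to a 3D point, e.g. LiDAR to camera frame."""
    return [sum(T[i][j] * p[j] for j in range(3)) + T[i][3] for i in range(3)]
```

For example, `se3_exp((0, 0, math.pi / 2), (0, 0, 0))` is a quarter turn about the z-axis, so `transform_point` sends `(1, 0, 0)` to approximately `(0, 1, 0)`.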

Calibrating LiDAR and Camera using Semantic Mutual information

Apr 24, 2021

Abstract: We propose an algorithm for automatic, targetless, extrinsic calibration of a LiDAR and camera system using semantic information. We achieve this goal by maximizing the mutual information (MI) of semantic information between sensors, leveraging a neural network to estimate semantic mutual information and a matrix exponential for calibration computation. Using kernel-based sampling to sample data from the camera measurement based on projected LiDAR points, we formulate the problem as a novel differentiable objective function that supports the use of gradient-based optimization methods. We also introduce an initial calibration method using 2D MI-based image registration. Finally, we demonstrate the robustness of our method and quantitatively analyze its accuracy on a synthetic dataset. We also evaluate our algorithm qualitatively on the KITTI360 and RELLIS-3D benchmark datasets, showing improvement over recent comparable approaches.
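To make the objective concrete, here is a minimal sketch of mutual information between two aligned sequences of semantic labels, one taken from the LiDAR points and one sampled from the image at their projected pixel locations. It uses a simple plug-in estimate from the joint label histogram, not the neural estimator the paper relies on, and the function name is hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def mutual_information(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Plug-in MI estimate (in nats) between paired discrete labels."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("label sequences must be non-empty and equal length")
    n = len(a)
    joint = Counter(zip(a, b))   # counts of (lidar_label, image_label) pairs
    count_a = Counter(a)         # marginal counts for the first sensor
    count_b = Counter(b)         # marginal counts for the second sensor
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x,y) * log( p(x,y) / (p(x) p(y)) ), written with raw counts.
        mi += (c / n) * math.log(c * n / (count_a[x] * count_b[y]))
    return mi
```

When the extrinsics are right, the labels from both sensors agree and the estimate is high; when labels are unrelated it drops toward zero. For instance, two identical sequences split evenly between two classes give `log(2)` nats, while `[0, 0, 1, 1]` against `[0, 1, 0, 1]` gives zero.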

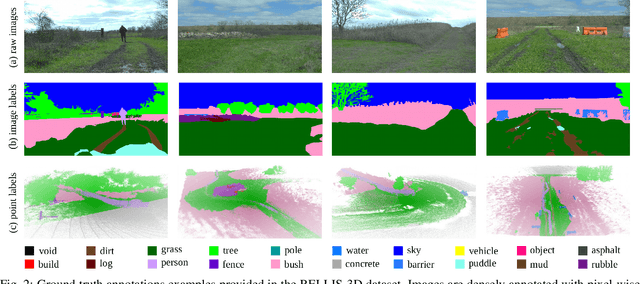

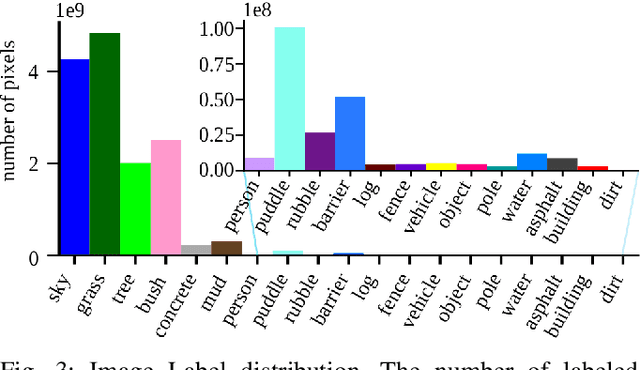

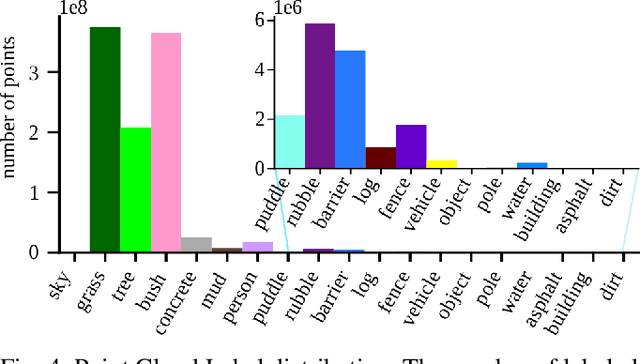

RELLIS-3D Dataset: Data, Benchmarks and Analysis

Dec 03, 2020

Abstract: Semantic scene understanding is crucial for robust and safe autonomous navigation, particularly so in off-road environments. Recent deep learning advances for 3D semantic segmentation rely heavily on large sets of training data; however, existing autonomy datasets either represent urban environments or lack multimodal off-road data. We fill this gap with RELLIS-3D, a multimodal dataset collected in an off-road environment, which contains annotations for 13,556 LiDAR scans and 6,235 images. The data was collected on the Rellis Campus of Texas A&M University, and presents challenges to existing algorithms related to class imbalance and environmental topography. Additionally, we evaluate the current state-of-the-art deep learning semantic segmentation models on this dataset. Experimental results show that RELLIS-3D presents challenges for algorithms designed for segmentation in urban environments. This novel dataset provides the resources needed by researchers to continue to develop more advanced algorithms and investigate new research directions to enhance autonomous navigation in off-road environments. RELLIS-3D will be published at https://github.com/unmannedlab/RELLIS-3D.
