Akhil Nagariya

Learning Autonomy: Off-Road Navigation Enhanced by Human Input

Feb 26, 2025

Abstract: In the area of autonomous driving, navigating off-road terrain presents a unique set of challenges, from unpredictable surfaces like grass and dirt to unexpected obstacles such as bushes and puddles. In this work, we present a novel learning-based local planner that addresses these challenges by directly capturing human driving nuances from real-world demonstrations using only a monocular camera. The key features of our planner are its ability to navigate challenging off-road environments with various terrain types and its fast learning capabilities. Using minimal human demonstration data (5-10 minutes), it quickly learns to navigate a wide array of off-road conditions. The local planner significantly reduces the real-world data required to learn human driving preferences. This allows the planner to apply learned behaviors to real-world scenarios without manual fine-tuning, demonstrating quick adjustment and adaptability in off-road autonomous driving.
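The abstract does not describe the planner's model or inputs beyond a monocular camera. As a generic illustration of learning a driving policy from demonstrations (behavior cloning), the sketch below fits a linear map from per-frame image features to recorded human commands with ridge regression. The feature vectors, the linear model, the `ridge` value, the synthetic data, and the function names are all hypothetical; this is not the paper's planner.

```python
import numpy as np

def fit_behavior_cloning(features, actions, ridge=1e-3):
    """Fit a linear policy a = [f, 1] @ W to demonstration pairs by ridge regression.

    features: (N, D) array of hypothetical per-frame image features.
    actions:  (N, A) array of recorded human commands (e.g. steering, throttle).
    """
    X = np.hstack([features, np.ones((features.shape[0], 1))])  # append a bias column
    # Closed-form ridge solution: W = (X^T X + ridge * I)^-1 X^T Y
    W = np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ actions)
    return W

def predict_action(W, feature):
    """Apply the learned linear policy to a single feature vector."""
    return np.append(feature, 1.0) @ W

# Synthetic "demonstrations": 600 frames of 8-D features and 2-D commands
rng = np.random.default_rng(0)
feats = rng.normal(size=(600, 8))
true_W = rng.normal(size=(8, 2))
acts = feats @ true_W + 0.1
W = fit_behavior_cloning(feats, acts)
print(predict_action(W, feats[0]), acts[0])  # prediction vs. demonstrated command
```

A linear map keeps the example short; a real planner would use features from an image encoder and a more expressive regressor, but the training signal (match the demonstrated commands) is the same.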

GO: The Great Outdoors Multimodal Dataset

Jan 31, 2025

Abstract: The Great Outdoors (GO) dataset is a multimodal annotated data resource aimed at advancing ground robotics research in unstructured environments. Compared to existing off-road datasets, it provides the most comprehensive set of data modalities and annotations. In total, the GO dataset includes six unique sensor types with high-quality semantic annotations and GPS traces to support tasks such as semantic segmentation, object detection, and SLAM. The diverse environmental conditions represented in the dataset present significant real-world challenges that provide opportunities to develop more robust solutions for field robotics, autonomous exploration, and perception systems in natural environments. The dataset can be downloaded at: https://www.unmannedlab.org/the-great-outdoors-dataset/

OTTR: Off-Road Trajectory Tracking using Reinforcement Learning

Oct 05, 2021

Abstract: In this work, we present a novel reinforcement learning (RL) algorithm for the off-road trajectory tracking problem. Off-road environments involve varying terrain types and elevations, and it is difficult to model the interaction dynamics of specific off-road vehicles with such a diverse and complex environment. Standard RL policies trained in a simulator will fail to operate in such challenging real-world settings. Instead of a naive domain randomization approach, we propose an innovative supervised-learning-based approach for overcoming the sim-to-real gap. Our approach efficiently exploits the limited real-world data available to adapt a baseline RL policy obtained from a simple kinematics simulator. This avoids the need to model the diverse and complex interaction of the vehicle with off-road environments. We evaluate the proposed algorithm on two different off-road vehicles, Warthog and Moose. Compared to the standard ILQR approach, our approach achieves 30% and 50% reductions in cross-track error on Warthog and Moose, respectively, using only 30 minutes of real-world driving data.
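The reported gains are in cross-track error. The abstract does not define how it is computed; a common definition, assumed here, is the unsigned distance from the vehicle position to the nearest point on the reference path. The sketch below computes it against a piecewise-linear path and expresses an improvement as a percent reduction. The function names, the path, and the example numbers are illustrative only and are not taken from the paper.

```python
import math

def cross_track_error(point, path):
    """Unsigned distance from `point` (x, y) to the nearest segment of polyline `path`."""
    px, py = point
    best = math.inf
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len_sq = dx * dx + dy * dy
        if seg_len_sq == 0.0:
            t = 0.0  # degenerate segment: treat it as a single point
        else:
            # Project the point onto the segment and clamp to the segment's extent
            t = max(0.0, min(1.0, ((px - x0) * dx + (py - y0) * dy) / seg_len_sq))
        cx, cy = x0 + t * dx, y0 + t * dy
        best = min(best, math.hypot(px - cx, py - cy))
    return best

def mean_cross_track_error(trajectory, path):
    """Average cross-track error over a sequence of driven positions."""
    return sum(cross_track_error(p, path) for p in trajectory) / len(trajectory)

def percent_reduction(baseline, proposed):
    """Relative improvement of `proposed` over `baseline`, in percent."""
    return 100.0 * (baseline - proposed) / baseline

path = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]  # an L-shaped reference path
print(cross_track_error((5.0, 1.0), path))   # 1.0: nearest point is (5, 0)
print(cross_track_error((12.0, 5.0), path))  # 2.0: nearest point is (10, 5)
print(percent_reduction(4.0, 3.0))           # 25.0
```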

An Iterative LQR Controller for Off-Road and On-Road Vehicles using a Neural Network Dynamics Model

Jul 28, 2020

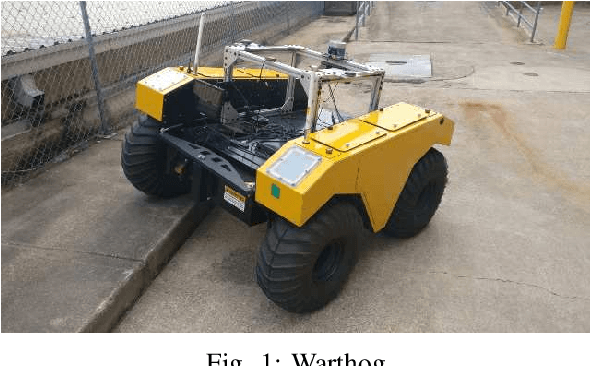

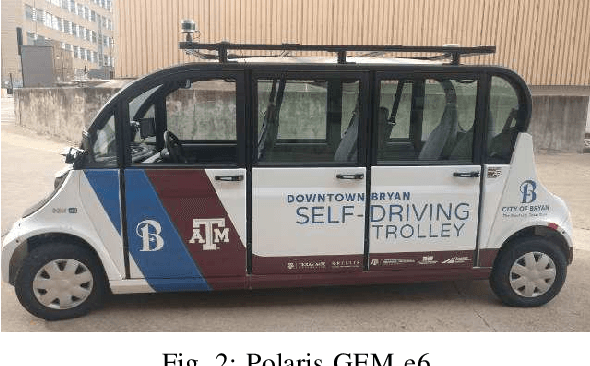

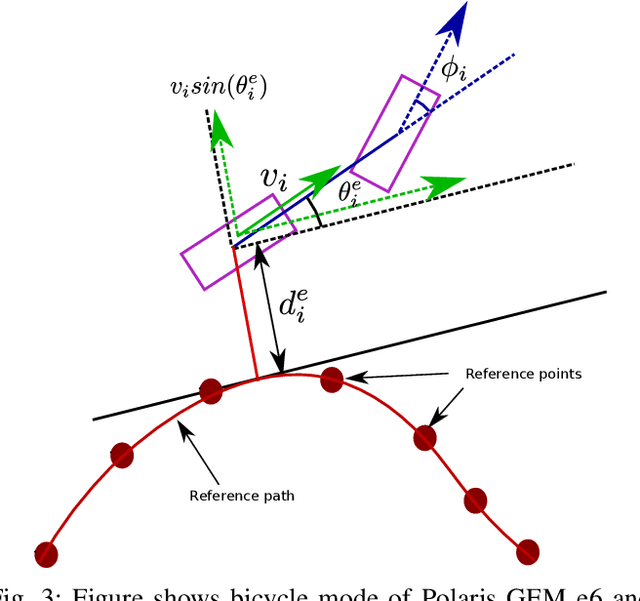

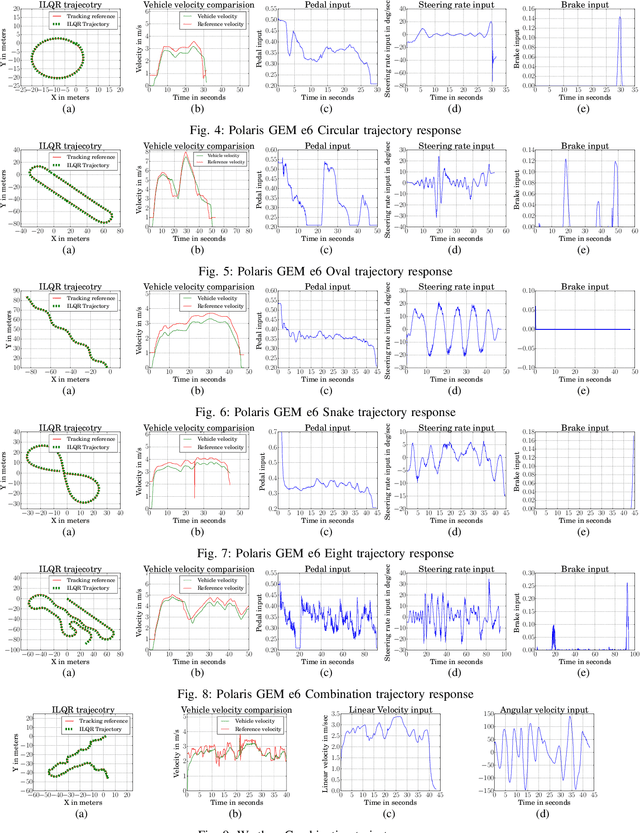

Abstract: In this work, we evaluate the Iterative Linear Quadratic Regulator (ILQR) for trajectory tracking of two different kinds of wheeled mobile robots, namely Warthog (Fig. 1), an off-road holonomic robot with skid-steering, and Polaris GEM e6 [1], a non-holonomic six-seater vehicle (Fig. 2). We use a multilayer neural network to learn the discrete dynamics model of these robots, which the ILQR controller uses to compute the control law. We use model predictive control (MPC) to deal with model imperfections and perform extensive experiments to evaluate the performance of the controller on human-driven reference trajectories at vehicle speeds of 3-4 m/s for the Warthog and 7-10 m/s for the Polaris GEM.
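The pipeline described in this abstract (a learned discrete dynamics model inside ILQR, wrapped in MPC) can be sketched as follows. The sketch assumes a 3-D state (x, y, heading) and a 2-D control (speed, yaw rate), replaces the trained network with a hypothetical `learned_dynamics` function (a discrete unicycle step) so the example runs on its own, and linearizes the model by finite differences, which works for any black-box model, including a neural network. The costs, horizon, time step, and the 3 m/s reference speed are illustrative choices, not values from the paper.

```python
import numpy as np

DT = 0.1  # hypothetical control period [s]

def learned_dynamics(x, u):
    """Stand-in for the trained network: one discrete unicycle step (assumption)."""
    px, py, th = x
    v, w = u
    return np.array([px + DT * v * np.cos(th), py + DT * v * np.sin(th), th + DT * w])

def jacobians(f, x, u, eps=1e-5):
    """Central finite-difference linearization A = df/dx, B = df/du."""
    n, m = x.size, u.size
    A, B = np.zeros((n, n)), np.zeros((n, m))
    for i in range(n):
        d = np.zeros(n); d[i] = eps
        A[:, i] = (f(x + d, u) - f(x - d, u)) / (2 * eps)
    for j in range(m):
        d = np.zeros(m); d[j] = eps
        B[:, j] = (f(x, u + d) - f(x, u - d)) / (2 * eps)
    return A, B

def rollout(f, x0, U):
    X = [x0]
    for u in U:
        X.append(f(X[-1], u))
    return np.array(X)

def cost(X, U, ref, Q, R, Qf):
    """Quadratic tracking cost against a reference state sequence."""
    e = X - ref
    running = sum(ei @ Q @ ei + ui @ R @ ui for ei, ui in zip(e[:-1], U))
    return 0.5 * (running + e[-1] @ Qf @ e[-1])

def ilqr(f, x0, U, ref, Q, R, Qf, iters=50, reg=1e-6):
    U = U.copy()
    X = rollout(f, x0, U)
    J = cost(X, U, ref, Q, R, Qf)
    N, m = U.shape
    for _ in range(iters):
        # Backward pass: propagate a quadratic model of the cost-to-go
        Vx, Vxx = Qf @ (X[-1] - ref[-1]), Qf.copy()
        ks, Ks = np.zeros((N, m)), np.zeros((N, m, x0.size))
        for t in reversed(range(N)):
            A, B = jacobians(f, X[t], U[t])
            Qx = Q @ (X[t] - ref[t]) + A.T @ Vx
            Qu = R @ U[t] + B.T @ Vx
            Qxx, Qux = Q + A.T @ Vxx @ A, B.T @ Vxx @ A
            Quu = R + B.T @ Vxx @ B + reg * np.eye(m)
            ks[t] = -np.linalg.solve(Quu, Qu)
            Ks[t] = -np.linalg.solve(Quu, Qux)
            Vx = Qx + Ks[t].T @ Quu @ ks[t] + Ks[t].T @ Qu + Qux.T @ ks[t]
            Vxx = Qxx + Ks[t].T @ Quu @ Ks[t] + Ks[t].T @ Qux + Qux.T @ Ks[t]
            Vxx = 0.5 * (Vxx + Vxx.T)
        # Forward pass with backtracking line search on the step size
        for alpha in (1.0, 0.5, 0.25, 0.1, 0.05):
            Xn, Un = [x0], np.zeros_like(U)
            for t in range(N):
                Un[t] = U[t] + alpha * ks[t] + Ks[t] @ (Xn[t] - X[t])
                Xn.append(f(Xn[t], Un[t]))
            Jn = cost(np.array(Xn), Un, ref, Q, R, Qf)
            if Jn < J:
                X, U, J = np.array(Xn), Un, Jn
                break
        else:
            break  # no step size improved the cost: stop
    return X, U, J

def mpc_control(f, x, U_warm, ref_window, Q, R, Qf):
    """One receding-horizon step: re-optimize, return the first control and a shifted warm start."""
    _, U, _ = ilqr(f, x, U_warm, ref_window, Q, R, Qf, iters=10)
    return U[0], np.vstack([U[1:], U[-1:]])

# Illustrative setup: follow a straight line at 3 m/s, starting 0.5 m off it
N = 30
ref = np.array([[3.0 * DT * t, 0.0, 0.0] for t in range(N + 6)])
Q, R, Qf = np.diag([10.0, 10.0, 1.0]), np.diag([0.1, 0.1]), np.diag([100.0, 100.0, 10.0])
x0, U0 = np.array([0.0, 0.5, 0.0]), np.tile([3.0, 0.0], (N, 1))
J0 = cost(rollout(learned_dynamics, x0, U0), U0, ref[:N + 1], Q, R, Qf)
X, U, J = ilqr(learned_dynamics, x0, U0, ref[:N + 1], Q, R, Qf)

x, U_warm = x0.copy(), U.copy()
for step in range(5):  # closed-loop MPC: apply only the first control each cycle
    u0, U_warm = mpc_control(learned_dynamics, x, U_warm, ref[step:step + N + 1], Q, R, Qf)
    x = learned_dynamics(x, u0)
```

Because `ilqr` only calls `f(x, u)`, a trained network could be substituted for `learned_dynamics`; with an automatic-differentiation framework, the finite-difference Jacobians could be replaced by exact ones. Re-solving from the measured state at every cycle is what lets MPC absorb some of the model's errors.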
