Gaurav Pandey

CAtCh: Cognitive Assessment through Cookie Thief

Jun 07, 2025

Abstract:Several machine learning algorithms have been developed for the prediction of Alzheimer's disease and related dementia (ADRD) from spontaneous speech. However, none of these algorithms have been translated for the prediction of broader cognitive impairment (CI), which in some cases is a precursor and risk factor of ADRD. In this paper, we evaluated several speech-based open-source methods originally proposed for the prediction of ADRD, as well as methods from multimodal sentiment analysis for the task of predicting CI from patient audio recordings. Results demonstrated that multimodal methods outperformed unimodal ones for CI prediction, and that acoustics-based approaches performed better than linguistics-based ones. Specifically, interpretable acoustic features relating to affect and prosody were found to significantly outperform BERT-based linguistic features and interpretable linguistic features, respectively. All the code developed for this study is available at https://github.com/JTColonel/catch.

Learning Autonomy: Off-Road Navigation Enhanced by Human Input

Feb 26, 2025

Abstract:In the area of autonomous driving, navigating off-road terrains presents a unique set of challenges, from unpredictable surfaces like grass and dirt to unexpected obstacles such as bushes and puddles. In this work, we present a novel learning-based local planner that addresses these challenges by directly capturing human driving nuances from real-world demonstrations using only a monocular camera. The key features of our planner are its ability to navigate in challenging off-road environments with various terrain types and its fast learning capabilities. By utilizing minimal human demonstration data (5-10 mins), it quickly learns to navigate in a wide array of off-road conditions. The local planner significantly reduces the real-world data required to learn human driving preferences. This allows the planner to apply learned behaviors to real-world scenarios without the need for manual fine-tuning, demonstrating quick adjustment and adaptability in off-road autonomous driving technology.

Systematic Knowledge Injection into Large Language Models via Diverse Augmentation for Domain-Specific RAG

Feb 12, 2025

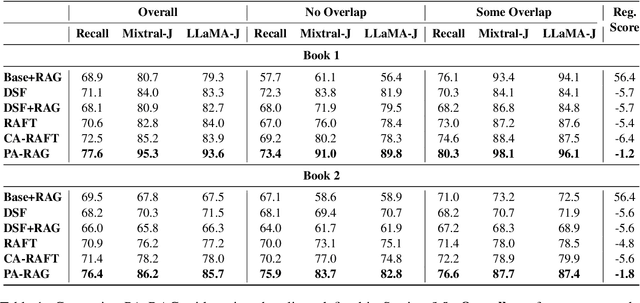

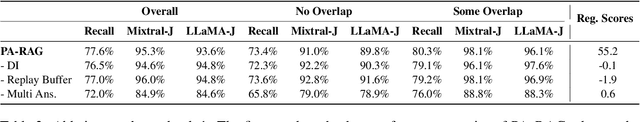

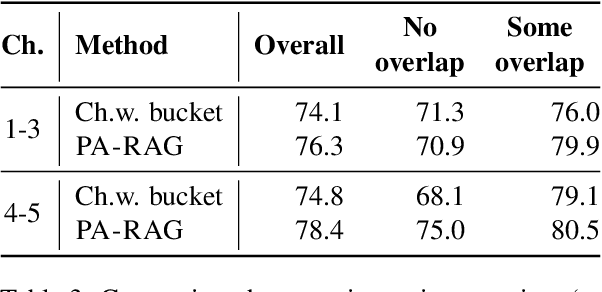

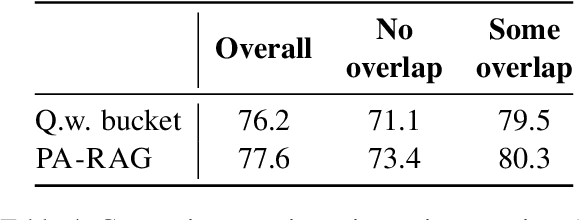

Abstract:Retrieval-Augmented Generation (RAG) has emerged as a prominent method for incorporating domain knowledge into Large Language Models (LLMs). While RAG enhances response relevance by incorporating retrieved domain knowledge in the context, retrieval errors can still lead to hallucinations and incorrect answers. To recover from retriever failures, domain knowledge is injected by fine-tuning the model to generate the correct response, even in the case of retrieval errors. However, we observe that without systematic knowledge augmentation, fine-tuned LLMs may memorize new information but still fail to extract relevant domain knowledge, leading to poor performance. In this work, we present a novel framework that significantly enhances the fine-tuning process by augmenting the training data in two ways: context augmentation and knowledge paraphrasing. In context augmentation, we create multiple training samples for a given QA pair by varying the relevance of the retrieved information, teaching the model when to ignore and when to rely on retrieved content. In knowledge paraphrasing, we fine-tune with multiple answers to the same question, enabling LLMs to better internalize specialized knowledge. To mitigate catastrophic forgetting due to fine-tuning, we add a domain-specific identifier to a question and also utilize a replay buffer containing general QA pairs. Experimental results demonstrate the efficacy of our method over existing techniques, achieving up to 10% relative gain in token-level recall while preserving the LLM's generalization capabilities.
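
The context augmentation step described above can be illustrated with a minimal sketch. The function name `augment_contexts`, the four variant kinds, and the sample dictionary layout are assumptions made for illustration; the abstract only states that the relevance of the retrieved information is varied for a given QA pair, so this should not be read as the authors' implementation.

```python
import random


def augment_contexts(question, answer, relevant_passage, distractor_pool,
                     n_distractors=2, seed=0):
    """Build several training samples for one QA pair.

    Each sample keeps the same question and answer but changes how useful
    the retrieved context is (hypothetical variant names, for illustration).
    """
    rng = random.Random(seed)
    distractors = rng.sample(distractor_pool, n_distractors)

    # Mix the relevant passage with noise and shuffle so that its position
    # in the context carries no signal.
    mixed = [relevant_passage] + distractors
    rng.shuffle(mixed)

    variants = {
        "relevant_only": [relevant_passage],   # retriever succeeded
        "relevant_plus_noise": mixed,          # partially useful retrieval
        "noise_only": list(distractors),       # retriever failed
        "no_context": [],                      # nothing retrieved
    }
    return [
        {"kind": kind, "context": passages,
         "question": question, "answer": answer}
        for kind, passages in variants.items()
    ]
```

Because the target answer is identical across all variants, a model fine-tuned on such samples is pushed to rely on the context when it helps and to fall back on internalized knowledge when it does not.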

Longitudinal Ensemble Integration for sequential classification with multimodal data

Nov 08, 2024

Abstract:Effectively modeling multimodal longitudinal data is a pressing need in various application areas, especially biomedicine. Despite this, few approaches exist in the literature for this problem, with most not adequately taking into account the multimodality of the data. In this study, we developed multiple configurations of a novel multimodal and longitudinal learning framework, Longitudinal Ensemble Integration (LEI), for sequential classification. We evaluated LEI's performance, and compared it against existing approaches, for the early detection of dementia, which is among the most studied multimodal sequential classification tasks. LEI outperformed these approaches due to its use of intermediate base predictions arising from the individual data modalities, which enabled their better integration over time. LEI's design also enabled the identification of features that were consistently important across time for the effective prediction of dementia-related diagnoses. Overall, our work demonstrates the potential of LEI for sequential classification from longitudinal multimodal data.

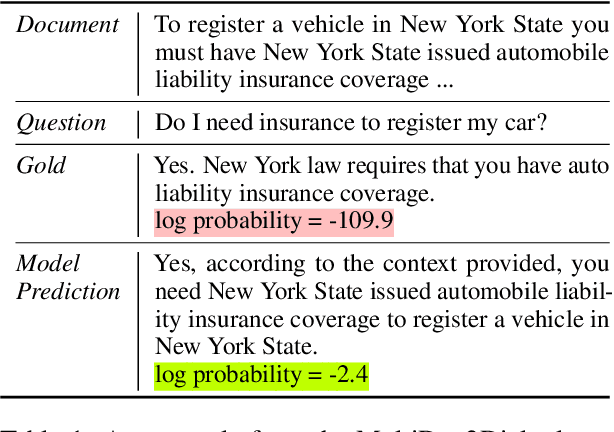

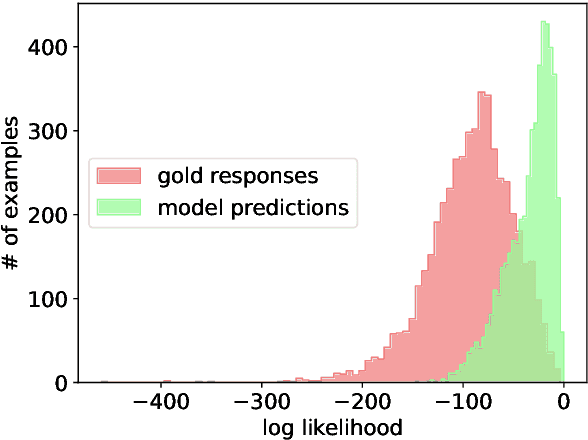

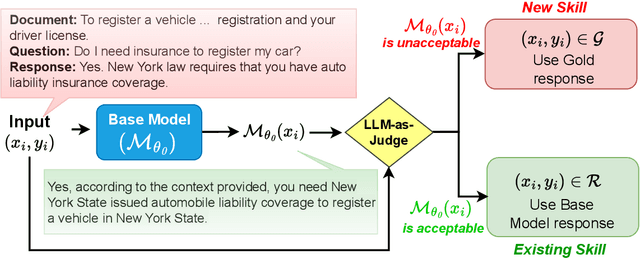

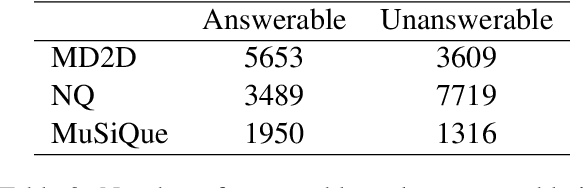

Selective Self-Rehearsal: A Fine-Tuning Approach to Improve Generalization in Large Language Models

Sep 07, 2024

Abstract:Fine-tuning Large Language Models (LLMs) on specific datasets is a common practice to improve performance on target tasks. However, this performance gain often leads to overfitting, where the model becomes too specialized in either the task or the characteristics of the training data, resulting in a loss of generalization. This paper introduces Selective Self-Rehearsal (SSR), a fine-tuning approach that achieves performance comparable to standard supervised fine-tuning (SFT) while improving generalization. SSR leverages the fact that there can be multiple valid responses to a query. By utilizing the model's correct responses, SSR reduces model specialization during the fine-tuning stage. SSR first identifies the correct model responses from the training set by deploying an appropriate LLM as a judge. Then, it fine-tunes the model using the correct model responses and the gold response for the remaining samples. The effectiveness of SSR is demonstrated through experiments on the task of identifying unanswerable queries across various datasets. The results show that standard SFT can lead to an average performance drop of up to 16.7% on multiple benchmarks, such as MMLU and TruthfulQA. In contrast, SSR results in a drop of close to 2% on average, indicating better generalization capabilities compared to standard SFT.
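
The two SSR steps described above (judge the model's own responses, then train on those that are correct and on the gold response otherwise) can be sketched as a target-selection routine. The names `build_ssr_targets`, `generate`, and `judge`, and the sample layout, are hypothetical; in the paper the judge is an LLM, whereas here it is any callable returning a boolean.

```python
def build_ssr_targets(samples, generate, judge):
    """Choose the fine-tuning target for each training sample.

    samples  : list of dicts with "query" and "gold" keys (assumed layout)
    generate : callable(query) -> the model's own response
    judge    : callable(query, response, gold) -> True if response is correct
    """
    targets = []
    for sample in samples:
        query, gold = sample["query"], sample["gold"]
        model_response = generate(query)
        if judge(query, model_response, gold):
            # The model is already correct: rehearse its own wording.
            targets.append({"query": query, "target": model_response,
                            "source": "model"})
        else:
            # The model is wrong: fall back to the gold response.
            targets.append({"query": query, "target": gold,
                            "source": "gold"})
    return targets
```

The resulting list can be fed to an ordinary supervised fine-tuning loop; only the choice of target differs from standard SFT.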

Toward Physics-Aware Deep Learning Architectures for LiDAR Intensity Simulation

Apr 24, 2024

Abstract:Autonomous vehicles (AVs) heavily rely on LiDAR perception for environment understanding and navigation. LiDAR intensity provides valuable information about the reflected laser signals and plays a crucial role in enhancing the perception capabilities of AVs. However, accurately simulating LiDAR intensity remains a challenge due to the unavailability of material properties of the objects in the environment, and complex interactions between the laser beam and the environment. The proposed method aims to improve the accuracy of intensity simulation by incorporating physics-based modalities within the deep learning framework. One of the key entities that captures the interaction between the laser beam and the objects is the angle of incidence. In this work, we demonstrate that the addition of the LiDAR incidence angle as a separate input to the deep neural networks significantly enhances the results. We present a comparative study between two prominent deep learning architectures: U-NET, a Convolutional Neural Network (CNN), and Pix2Pix, a Generative Adversarial Network (GAN). We implemented these two architectures for the intensity prediction task and used SemanticKITTI and VoxelScape datasets for experiments. The comparative analysis reveals that both architectures benefit from the incidence angle as an additional input. Moreover, the Pix2Pix architecture outperforms U-NET, especially when the incidence angle is incorporated.
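
To make the incidence angle concrete, the sketch below computes it for a single LiDAR return as the angle between the laser ray and the surface normal at the hit point. The function name, the sensor-at-origin default, and the use of the absolute cosine (to ignore the sign ambiguity of estimated normals) are assumptions for illustration; the abstract does not say how the authors estimate normals or encode the angle.

```python
import math


def incidence_angle(point, normal, sensor_origin=(0.0, 0.0, 0.0)):
    """Angle in radians between the laser ray and the surface normal.

    0 means the beam hits the surface head-on; pi/2 means it grazes it.
    """
    ray = [p - o for p, o in zip(point, sensor_origin)]
    ray_len = math.sqrt(sum(c * c for c in ray))
    normal_len = math.sqrt(sum(c * c for c in normal))
    if ray_len == 0.0 or normal_len == 0.0:
        raise ValueError("ray and normal must be non-zero vectors")

    dot = sum(r * n for r, n in zip(ray, normal))
    # abs() makes the result independent of whether the normal points
    # toward or away from the sensor; min() guards against rounding error.
    cos_theta = min(1.0, abs(dot) / (ray_len * normal_len))
    return math.acos(cos_theta)
```

Computed per point and projected into the same image-like layout as the other inputs, such a value could serve as the additional input channel the abstract refers to.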

3DGS-ReLoc: 3D Gaussian Splatting for Map Representation and Visual ReLocalization

Mar 17, 2024

Abstract:This paper presents a novel system designed for 3D mapping and visual relocalization using 3D Gaussian Splatting. Our proposed method uses LiDAR and camera data to create accurate and visually plausible representations of the environment. By leveraging LiDAR data to initiate the training of the 3D Gaussian Splatting map, our system constructs maps that are both detailed and geometrically accurate. To mitigate excessive GPU memory usage and facilitate rapid spatial queries, we employ a combination of a 2D voxel map and a KD-tree. This preparation makes our method well-suited for visual localization tasks, enabling efficient identification of correspondences between the query image and the rendered image from the Gaussian Splatting map via normalized cross-correlation (NCC). Additionally, we refine the camera pose of the query image using feature-based matching and the Perspective-n-Point (PnP) technique. The effectiveness, adaptability, and precision of our system are demonstrated through extensive evaluation on the KITTI360 dataset.
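
Normalized cross-correlation, which the abstract uses to compare the query image with images rendered from the map, can be sketched for two equally sized grayscale patches as follows. This is the standard zero-mean NCC in plain Python; the function name and the nested-list patch representation are assumptions, and the authors' actual implementation may differ.

```python
import math


def ncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equally sized patches.

    Patches are nested lists of pixel intensities. The score lies in
    [-1, 1] and is unchanged by brightness offsets and positive gain.
    """
    a = [v for row in patch_a for v in row]
    b = [v for row in patch_b for v in row]
    if len(a) != len(b) or not a:
        raise ValueError("patches must be non-empty and the same size")

    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]

    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        # A constant patch has no structure to correlate with.
        return 0.0
    return sum(x * y for x, y in zip(da, db)) / denom
```

Invariance to offset and gain is what makes NCC a reasonable choice when rendered and real images differ in exposure.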

SwinMTL: A Shared Architecture for Simultaneous Depth Estimation and Semantic Segmentation from Monocular Camera Images

Mar 15, 2024

Abstract:This research paper presents an innovative multi-task learning framework that allows concurrent depth estimation and semantic segmentation using a single camera. The proposed approach is based on a shared encoder-decoder architecture, which integrates various techniques to improve the accuracy of the depth estimation and semantic segmentation tasks without compromising computational efficiency. Additionally, the paper incorporates an adversarial training component, employing a Wasserstein GAN framework with a critic network, to refine the model's predictions. The framework is thoroughly evaluated on two datasets - the outdoor Cityscapes dataset and the indoor NYU Depth V2 dataset - and it outperforms existing state-of-the-art methods in both segmentation and depth estimation tasks. We also conducted ablation studies to analyze the contributions of different components, including pre-training strategies, the inclusion of critics, the use of logarithmic depth scaling, and advanced image augmentations, to provide a better understanding of the proposed framework. The accompanying source code is accessible at https://github.com/PardisTaghavi/SwinMTL.

BRAIn: Bayesian Reward-conditioned Amortized Inference for natural language generation from feedback

Feb 04, 2024

Abstract:Following the success of Proximal Policy Optimization (PPO) for Reinforcement Learning from Human Feedback (RLHF), new techniques such as Sequence Likelihood Calibration (SLiC) and Direct Preference Optimization (DPO) have been proposed that are offline in nature and use rewards in an indirect manner. These techniques, in particular DPO, have recently become the tools of choice for LLM alignment due to their scalability and performance. However, they leave behind important features of the PPO approach. Methods such as SLiC or RRHF make use of the Reward Model (RM) only for ranking/preference, losing fine-grained information and ignoring the parametric form of the RM (e.g., Bradley-Terry, Plackett-Luce), while methods such as DPO do not use even a separate reward model. In this work, we propose a novel approach, named BRAIn, that re-introduces the RM as part of a distribution matching approach. BRAIn considers the LLM distribution conditioned on the assumption of output goodness and applies Bayes' theorem to derive an intractable posterior distribution where the RM is explicitly represented. BRAIn then distills this posterior into an amortized inference network through self-normalized importance sampling, leading to a scalable offline algorithm that significantly outperforms prior art in summarization and AnthropicHH tasks. BRAIn also has interesting connections to PPO and DPO for specific RM choices.
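
Self-normalized importance sampling is useful in this setting because it only needs the target distribution up to a normalizing constant, which is exactly what is available for an intractable posterior. The sketch below shows the generic estimator, not BRAIn's training objective; the function names and the log-space inputs are assumptions for illustration.

```python
import math


def self_normalized_weights(log_target_unnorm, log_proposal):
    """Importance weights that sum to 1, from unnormalized log densities.

    log_target_unnorm[i] : log of the target density at sample i, up to
                           an additive constant
    log_proposal[i]      : log of the proposal density at sample i
    """
    log_w = [t - q for t, q in zip(log_target_unnorm, log_proposal)]
    # Subtract the maximum before exponentiating for numerical stability.
    shift = max(log_w)
    w = [math.exp(lw - shift) for lw in log_w]
    total = sum(w)
    return [x / total for x in w]


def snis_estimate(values, weights):
    """Estimate the target expectation of a function from weighted samples."""
    return sum(v * w for v, w in zip(values, weights))
```

Because the weights are divided by their own sum, any constant added to `log_target_unnorm` cancels, so the unknown normalizer of the posterior never has to be computed.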

eipy: An Open-Source Python Package for Multi-modal Data Integration using Heterogeneous Ensembles

Jan 17, 2024

Abstract:In this paper, we introduce eipy, an open-source Python package for developing effective, multi-modal heterogeneous ensembles for classification. eipy provides a framework that is both rigorous and user-friendly for comparing and selecting the best-performing multi-modal data integration and predictive modeling methods by systematically evaluating their performance using nested cross-validation. The package is designed to leverage scikit-learn-like estimators as components to build multi-modal predictive models. An up-to-date user guide, including API reference and tutorials, for eipy is maintained at https://eipy.readthedocs.io. The main repository for this project can be found on GitHub at https://github.com/GauravPandeyLab/eipy.
