Yen-Yu Chang

Tracking Everything Everywhere All at Once

Jun 08, 2023

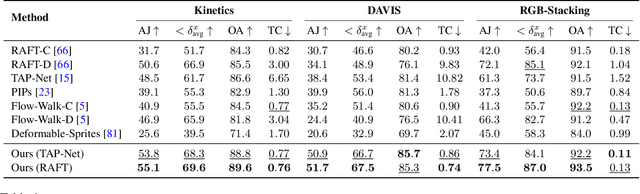

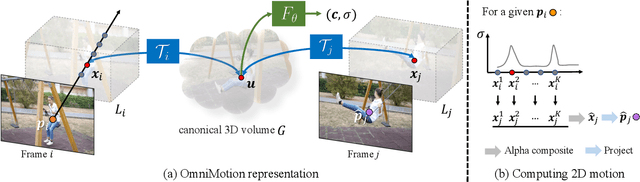

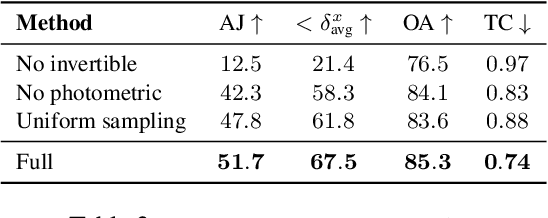

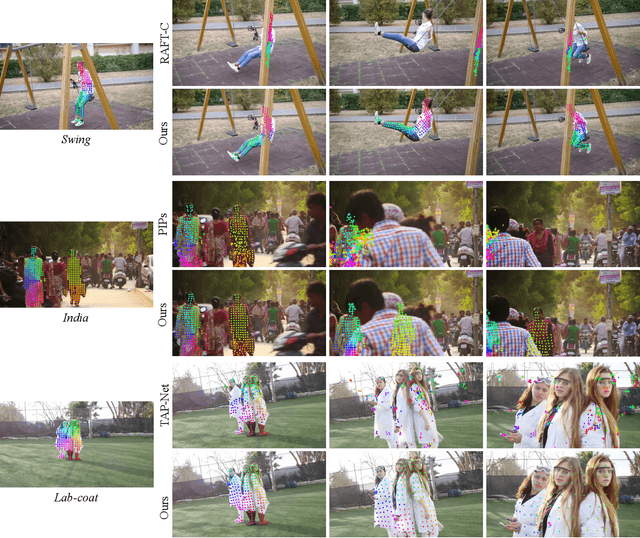

Abstract: We present a new test-time optimization method for estimating dense and long-range motion from a video sequence. Prior optical flow or particle video tracking algorithms typically operate within limited temporal windows, struggling to track through occlusions and maintain global consistency of estimated motion trajectories. We propose a complete and globally consistent motion representation, dubbed OmniMotion, that allows for accurate, full-length motion estimation of every pixel in a video. OmniMotion represents a video using a quasi-3D canonical volume and performs pixel-wise tracking via bijections between local and canonical space. This representation allows us to ensure global consistency, track through occlusions, and model any combination of camera and object motion. Extensive evaluations on the TAP-Vid benchmark and real-world footage show that our approach outperforms prior state-of-the-art methods by a large margin both quantitatively and qualitatively. See our project page for more results: http://omnimotion.github.io/

Learning Object-Centric Neural Scattering Functions for Free-viewpoint Relighting and Scene Composition

Mar 10, 2023

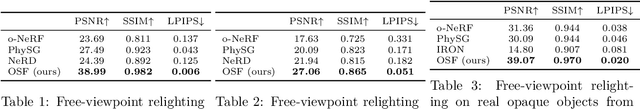

Abstract: Photorealistic object appearance modeling from 2D images is a constant topic in vision and graphics. While neural implicit methods (such as Neural Radiance Fields) have shown high-fidelity view synthesis results, they cannot relight the captured objects. More recent neural inverse rendering approaches have enabled object relighting, but they represent surface properties as simple BRDFs, and therefore cannot handle translucent objects. We propose Object-Centric Neural Scattering Functions (OSFs) for learning to reconstruct object appearance from only images. OSFs not only support free-viewpoint object relighting, but can also model both opaque and translucent objects. While accurately modeling subsurface light transport for translucent objects can be highly complex and even intractable for neural methods, OSFs learn to approximate the radiance transfer from a distant light to an outgoing direction at any spatial location. This approximation avoids explicitly modeling complex subsurface scattering, making learning a neural implicit model tractable. Experiments on real and synthetic data show that OSFs accurately reconstruct appearances for both opaque and translucent objects, allowing faithful free-viewpoint relighting as well as scene composition.

Learning Electron Bunch Distribution along a FEL Beamline by Normalising Flows

Feb 27, 2023

Abstract: Understanding and controlling laser-driven free electron lasers (FELs) remain difficult problems that require intensive experimental and theoretical research. The gap between simulated and experimentally collected data can complicate studies and the interpretation of the results obtained. In this work we develop a deep-learning-based surrogate that could help to close this gap. We introduce a surrogate model based on normalising flows for conditional phase-space representation of electron clouds in a FEL beamline. The results let us discuss further benefits and limitations of using such models to gain a deeper understanding of fundamental processes within a beamline.

* 7 pages, 5 figures

ObjectFolder 2.0: A Multisensory Object Dataset for Sim2Real Transfer

Apr 05, 2022

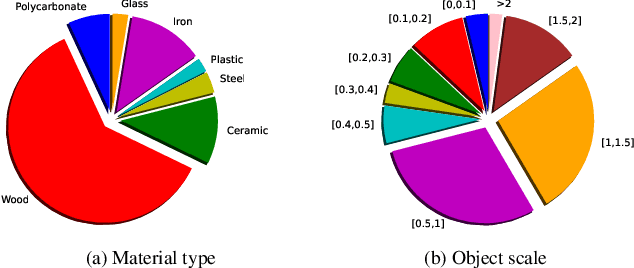

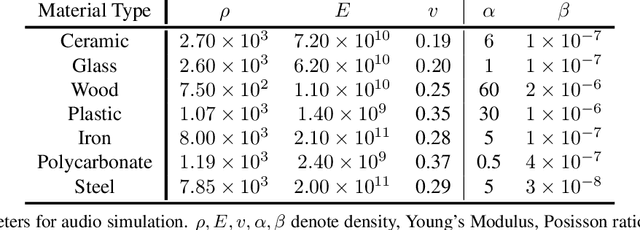

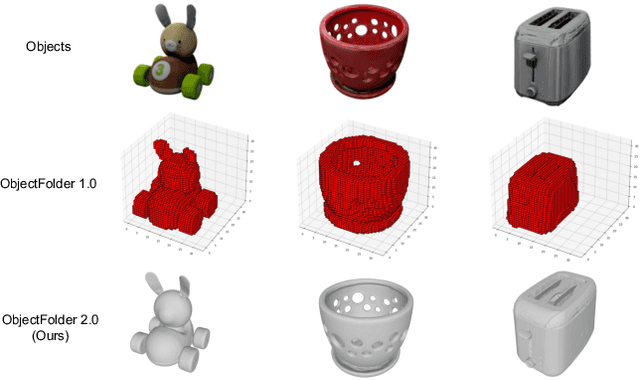

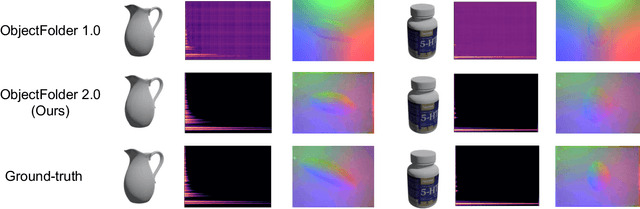

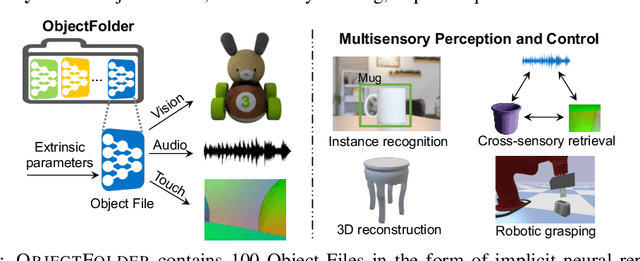

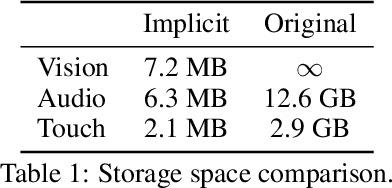

Abstract: Objects play a crucial role in our everyday activities. Though multisensory object-centric learning has shown great potential lately, the modeling of objects in prior work is rather unrealistic. ObjectFolder 1.0 is a recent dataset that introduces 100 virtualized objects with visual, acoustic, and tactile sensory data. However, the dataset is small in scale and the multisensory data is of limited quality, hampering generalization to real-world scenarios. We present ObjectFolder 2.0, a large-scale, multisensory dataset of common household objects in the form of implicit neural representations that significantly enhances ObjectFolder 1.0 in three aspects. First, our dataset is 10 times larger in the number of objects and orders of magnitude faster in rendering time. Second, we significantly improve the multisensory rendering quality for all three modalities. Third, we show that models learned from virtual objects in our dataset successfully transfer to their real-world counterparts in three challenging tasks: object scale estimation, contact localization, and shape reconstruction. ObjectFolder 2.0 offers a new path and testbed for multisensory learning in computer vision and robotics. The dataset is available at https://github.com/rhgao/ObjectFolder.

ObjectFolder: A Dataset of Objects with Implicit Visual, Auditory, and Tactile Representations

Sep 18, 2021

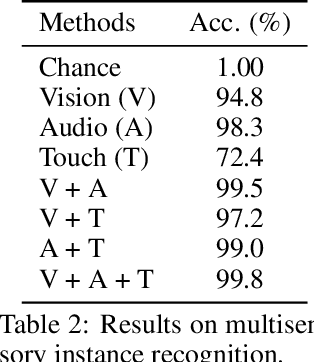

Abstract: Multisensory object-centric perception, reasoning, and interaction have been key research topics in recent years. However, progress in these directions is limited by the small set of objects available: synthetic objects are not realistic enough and are mostly centered around geometry, while real object datasets such as YCB are often practically challenging and unstable to acquire due to international shipping, inventory, and financial cost. We present ObjectFolder, a dataset of 100 virtualized objects that addresses both challenges with two key innovations. First, ObjectFolder encodes the visual, auditory, and tactile sensory data for all objects, enabling a number of multisensory object recognition tasks, beyond existing datasets that focus purely on object geometry. Second, ObjectFolder employs a uniform, object-centric, and implicit representation for each object's visual textures, acoustic simulations, and tactile readings, making the dataset flexible to use and easy to share. We demonstrate the usefulness of our dataset as a testbed for multisensory perception and control by evaluating it on a variety of benchmark tasks, including instance recognition, cross-sensory retrieval, 3D reconstruction, and robotic grasping.

Data-Driven Shadowgraph Simulation of a 3D Object

Jun 01, 2021

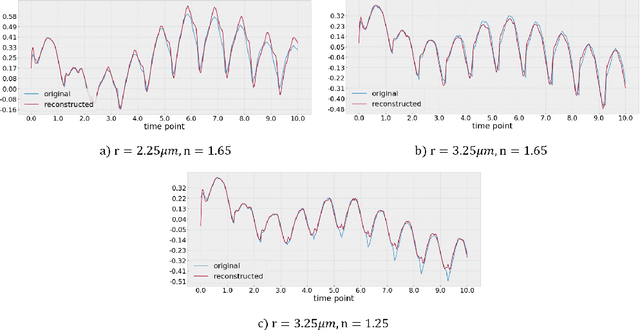

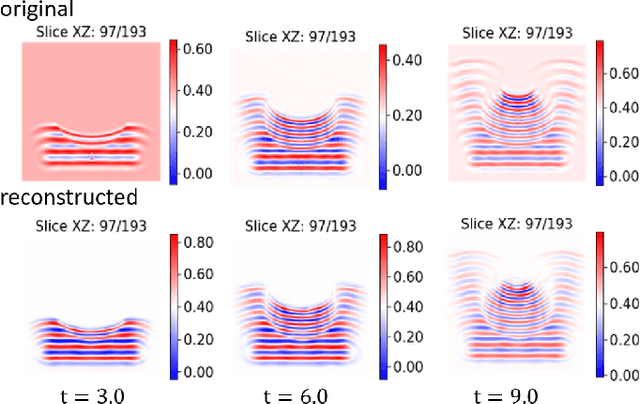

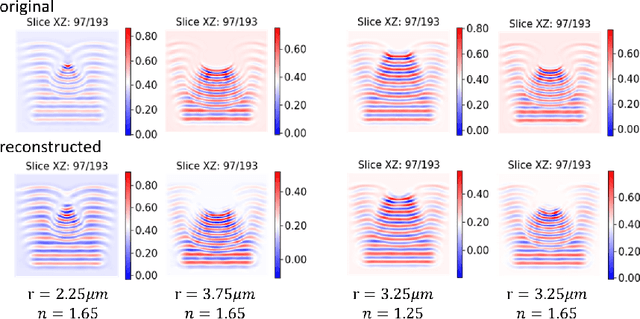

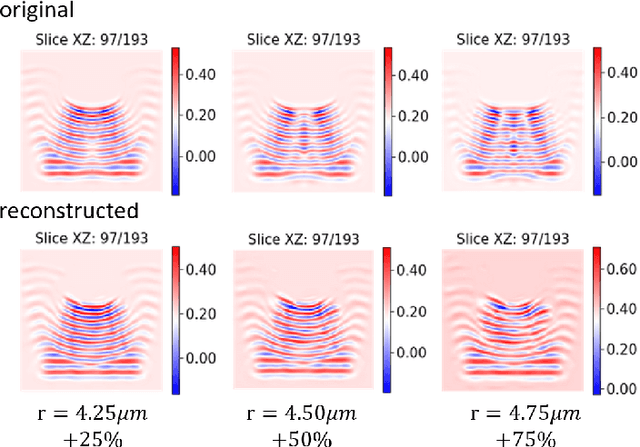

Abstract: In this work we propose a deep-neural-network-based surrogate model for a plasma shadowgraph, a technique for visualizing perturbations in a transparent medium. We substitute the numerical code with a computationally cheaper projection-based surrogate model that is able to approximate the electric fields at a given time without computing all preceding electric fields, as numerical methods require. This means that the projection-based surrogate model can recover the solution of the governing 3D partial differential equation (the 3D wave equation) at any point of a given compute domain and configuration without the need to run a full simulation. The model has shown good reconstruction quality when interpolating data within a narrow range of simulation parameters, and it can be used for input data of large size.

Inductive Representation Learning in Temporal Networks via Causal Anonymous Walks

Jan 15, 2021

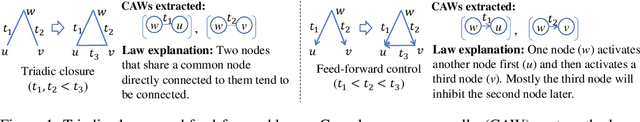

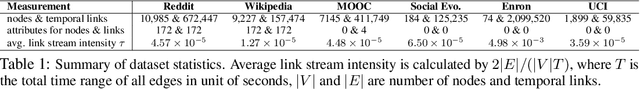

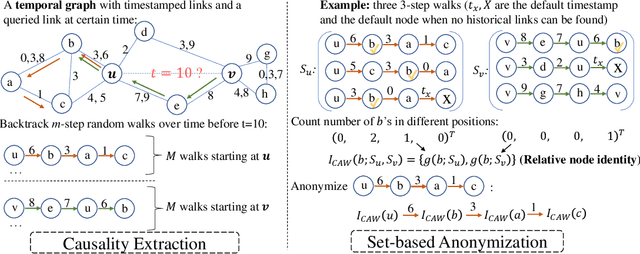

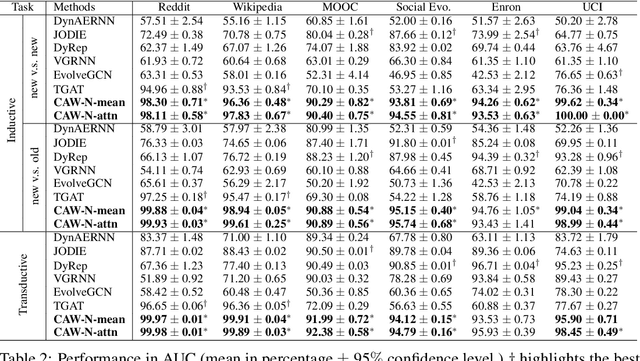

Abstract: Temporal networks serve as abstractions of many real-world dynamic systems. These networks typically evolve according to certain laws, such as the law of triadic closure, which is universal in social networks. Inductive representation learning of temporal networks should be able to capture such laws and further be applied to systems that follow the same laws but have not been seen during the training stage. Previous works in this area depend on either network node identities or rich edge attributes and typically fail to extract these laws. Here, we propose Causal Anonymous Walks (CAWs) to inductively represent a temporal network. CAWs are extracted by temporal random walks and work as automatic retrieval of temporal network motifs to represent network dynamics while avoiding the time-consuming selection and counting of those motifs. CAWs adopt a novel anonymization strategy that replaces node identities with the hitting counts of the nodes based on a set of sampled walks to keep the method inductive, and simultaneously establish the correlation between motifs. We further propose a neural-network model CAW-N to encode CAWs, and pair it with a CAW sampling strategy with constant memory and time cost to support online training and inference. CAW-N is evaluated on link prediction over 6 real temporal networks and uniformly outperforms previous SOTA methods by an average AUC gain of 15% in the inductive setting. CAW-N also outperforms previous methods in 5 out of the 6 networks in the transductive setting.
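
The anonymization step described in the abstract above can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `anonymize_walks` and the toy walks are hypothetical, timestamps are omitted, and the sketch assumes a single set of sampled walks, where each node identity is replaced by a vector counting how often that node is hit at each walk position.

```python
from collections import defaultdict


def anonymize_walks(walks):
    """Replace each node ID by its position-wise hit counts over `walks`.

    `walks` is a list of walks, each a list of hashable node IDs.
    Hypothetical helper for illustration only.
    """
    if not walks:
        return []
    walk_len = max(len(walk) for walk in walks)
    # counts[node][pos] = number of walks in which `node` appears at `pos`
    counts = defaultdict(lambda: [0] * walk_len)
    for walk in walks:
        for pos, node in enumerate(walk):
            counts[node][pos] += 1
    # Node identities are dropped; only the count vectors remain.
    return [[tuple(counts[node]) for node in walk] for walk in walks]


# Two walk sets with the same structure but different node names
walks_a = [["u", "a", "b"], ["u", "a", "c"], ["u", "b", "a"]]
walks_b = [["x", "p", "q"], ["x", "p", "r"], ["x", "q", "p"]]
same = anonymize_walks(walks_a) == anonymize_walks(walks_b)
```

Here `same` evaluates to `True`: the two walk sets differ only in node names, so their anonymized forms coincide, which is the property that lets a representation built on them carry over to nodes never seen in training.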

F-FADE: Frequency Factorization for Anomaly Detection in Edge Streams

Nov 09, 2020

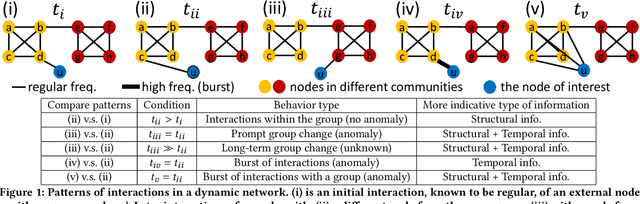

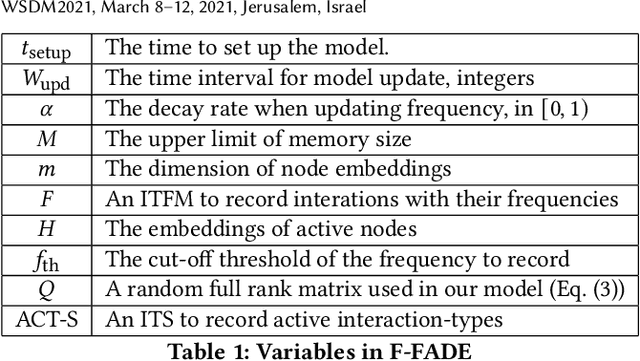

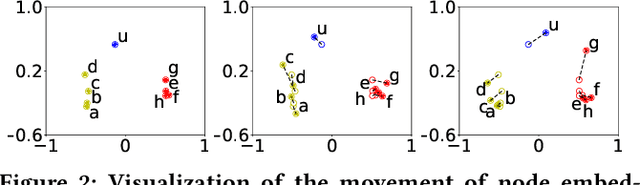

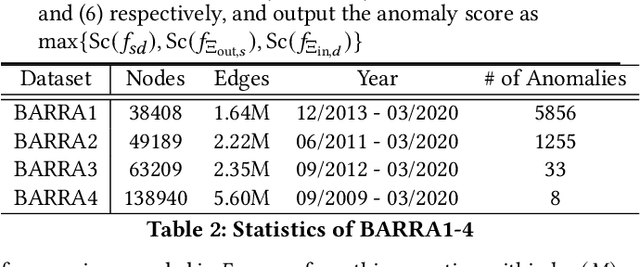

Abstract: Edge streams are commonly used to capture interactions in dynamic networks, such as email, social, or computer networks. The problem of detecting anomalies or rare events in edge streams has a wide range of applications. However, it presents many challenges due to the lack of labels, the highly dynamic nature of interactions, and the entanglement of temporal and structural changes in the network. Current methods are limited in their ability to address the above challenges and to efficiently process a large number of interactions. Here, we propose F-FADE, a new approach for detection of anomalies in edge streams, which uses a novel frequency-factorization technique to efficiently model the time-evolving distributions of frequencies of interactions between node-pairs. The anomalies are then determined based on the likelihood of the observed frequency of each incoming interaction. F-FADE is able to handle, in an online streaming setting, a broad variety of anomalies with temporal and structural changes, while requiring only constant memory. Our experiments on one synthetic and six real-world dynamic networks show that F-FADE achieves state-of-the-art performance and may detect anomalies that previous methods are unable to find.

A Regulation Enforcement Solution for Multi-agent Reinforcement Learning

Jan 29, 2019

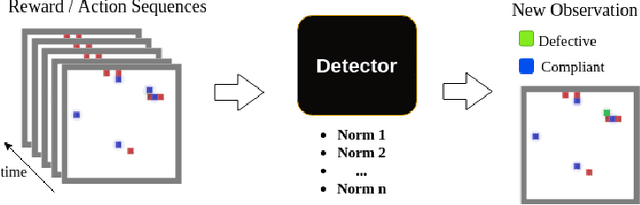

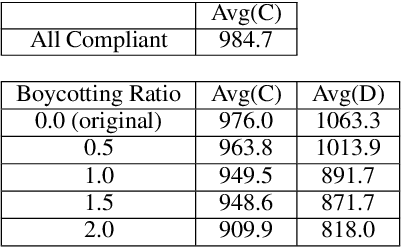

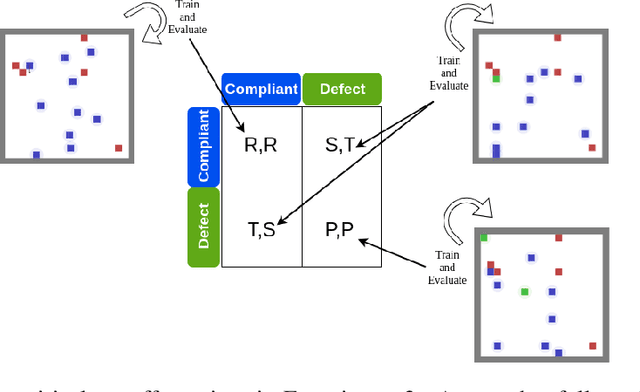

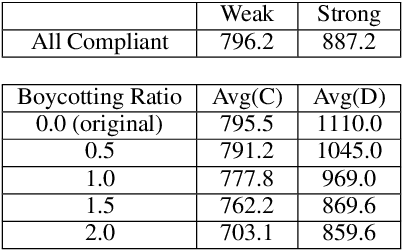

Abstract: Human behaviors are regularized by a variety of norms or regulations, either to maintain order or to enhance social welfare. If artificially intelligent (AI) agents make decisions on behalf of human beings, we would hope they can also follow established regulations while interacting with humans or other AI agents. However, an AI agent may opt to disobey the regulations out of self-interest. This paper attempts to design a mechanism that discourages agents from disobeying the global regulations set up for every agent. We first introduce the Regulation Enforcement problem and formulate it using reinforcement learning and game theory under the scenario where agents make decisions in complete isolation from other agents. The key idea is that, although we cannot alter how defective agents choose to behave, we can leverage the aggregated power of compliant agents to boycott the defective ones. Based on this idea, we propose a solution to the problem and conduct simulated experiments on two scenarios, Replenishing Resource Management Dilemma and Diminishing Reward Shaping Enforcement, using deep multi-agent reinforcement learning algorithms. We further use empirical game-theoretic analysis to show how the method alters the resulting empirical payoff matrices in a way that promotes compliance (making mutual compliance a Nash equilibrium).

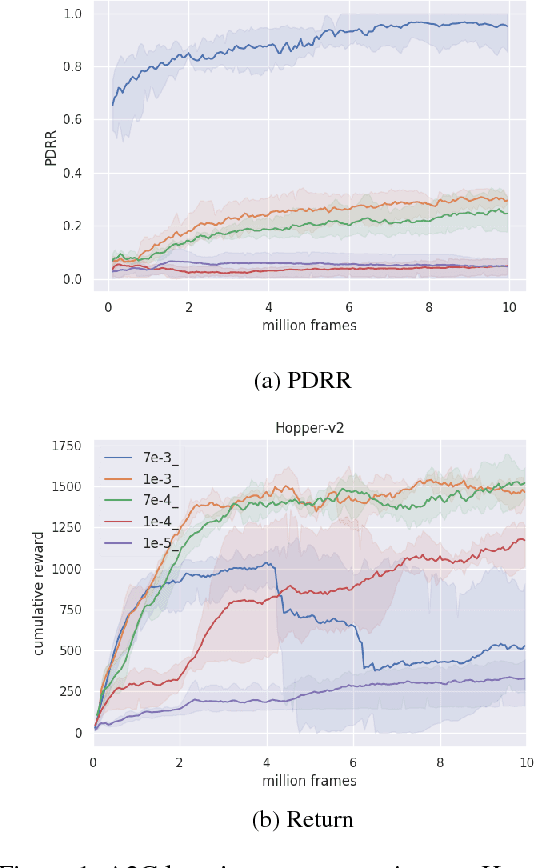

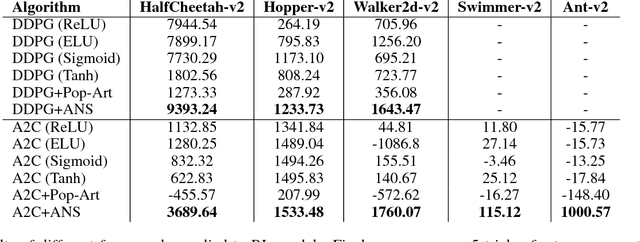

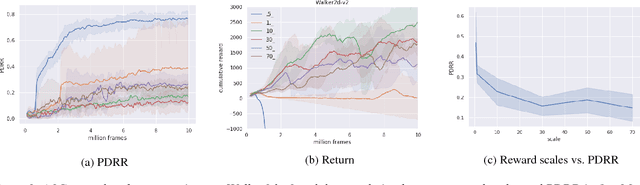

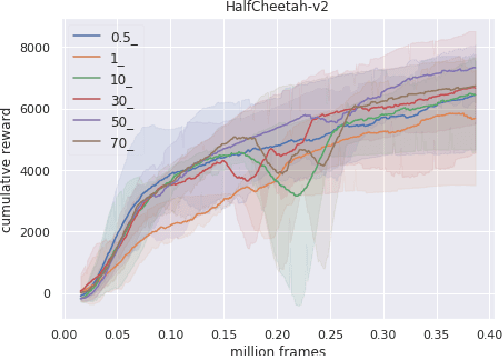

ANS: Adaptive Network Scaling for Deep Rectifier Reinforcement Learning Models

Oct 31, 2018

Abstract: This work provides a thorough study of how reward scaling can affect the performance of deep reinforcement learning agents. In particular, we would like to answer the question: how does reward scaling affect non-saturating ReLU networks in RL? This question matters because ReLU is one of the most effective activation functions for deep learning models. We also propose an Adaptive Network Scaling framework to find a suitable scale of the rewards during learning for better performance. We conducted empirical studies to justify the solution.
