A Regulation Enforcement Solution for Multi-agent Reinforcement Learning

Paper and Code

Jan 29, 2019

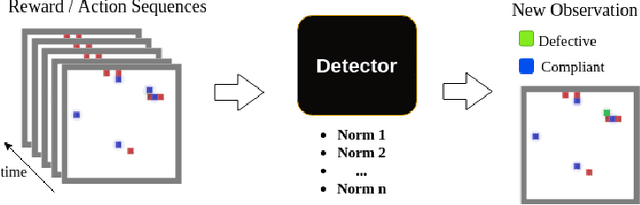

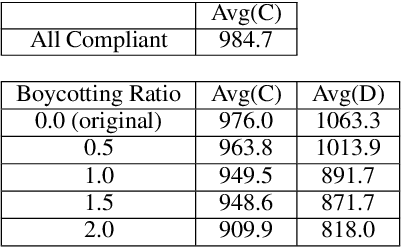

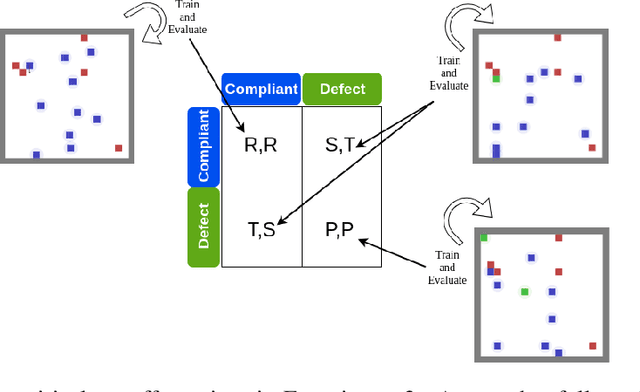

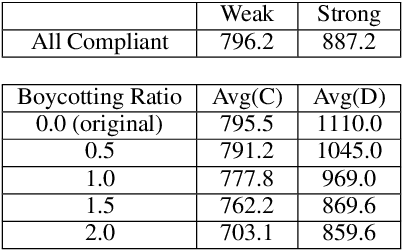

Human behaviors are regulated by a variety of norms and regulations, either to maintain order or to enhance social welfare. If artificial intelligence (AI) agents make decisions on behalf of human beings, we would hope that they also follow established regulations while interacting with humans or other AI agents. However, an AI agent may opt to disobey the regulations out of self-interest. This paper attempts to design a mechanism that discourages agents from disobeying a global regulation set up for all agents. We first introduce the Regulation Enforcement problem and formulate it using reinforcement learning and game theory, under the scenario where agents make decisions in complete isolation from other agents. The key idea is that, although we cannot alter how defective agents choose to behave, we can leverage the aggregated power of compliant agents to boycott the defective ones. Based on this idea, we propose a solution to the problem and conduct simulated experiments on two scenarios, Replenishing Resource Management Dilemma and Diminishing Reward Shaping Enforcement, using deep multi-agent reinforcement learning algorithms. We further use empirical game-theoretic analysis to show how the method alters the resulting empirical payoff matrices in a way that promotes compliance (making mutual compliance a Nash equilibrium).
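The closing claim, that a boycott can change a payoff matrix so that mutual compliance becomes a Nash equilibrium, can be illustrated with a small two-player sketch. Everything here is an assumption made for illustration: the payoff numbers are invented and are not the paper's empirical payoffs, and the helper names `pure_nash_equilibria`, `transpose`, and `with_boycott` are hypothetical. The boycott is modeled simply as a fixed penalty on an agent that defects against a compliant one, which is a simplification and not the paper's learned mechanism.

```python
def pure_nash_equilibria(payoff_row, payoff_col):
    """Return all pure-strategy Nash equilibria (i, j) of a two-player game.

    payoff_row[i][j] and payoff_col[i][j] are the row and column player's
    payoffs when the row player picks i and the column player picks j.
    """
    n_rows, n_cols = len(payoff_row), len(payoff_row[0])
    equilibria = []
    for i in range(n_rows):
        for j in range(n_cols):
            # Row player cannot gain by switching rows while column stays at j.
            row_best = all(payoff_row[i][j] >= payoff_row[k][j] for k in range(n_rows))
            # Column player cannot gain by switching columns while row stays at i.
            col_best = all(payoff_col[i][j] >= payoff_col[i][k] for k in range(n_cols))
            if row_best and col_best:
                equilibria.append((i, j))
    return equilibria


def transpose(matrix):
    """Transpose a list-of-lists matrix (gives the column player's payoffs in a symmetric game)."""
    return [list(col) for col in zip(*matrix)]


def with_boycott(payoff_row, penalty):
    """Copy the row player's payoffs, penalizing defection against a complier.

    Assumption: the boycott is a fixed payoff reduction, applied only when
    the opponent is compliant and therefore able to boycott.
    """
    adjusted = [row[:] for row in payoff_row]
    adjusted[1][0] -= penalty  # row player defects (1) against a complier (0)
    return adjusted


# Strategy 0 = comply, 1 = defect. Invented, prisoner's-dilemma-like payoffs.
base_row = [[3.0, 0.0],
            [5.0, 1.0]]
base_col = transpose(base_row)

boycott_row = with_boycott(base_row, penalty=3.0)
boycott_col = transpose(boycott_row)

print(pure_nash_equilibria(base_row, base_col))
print(pure_nash_equilibria(boycott_row, boycott_col))
```

With these invented numbers, the first call reports only mutual defection `(1, 1)` as an equilibrium, while the second also reports mutual compliance `(0, 0)`. Mutual defection remains an equilibrium in this toy game because no compliant agent is left to carry out the boycott.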
