Changyi Lin

LightTact: A Visual-Tactile Fingertip Sensor for Deformation-Independent Contact Sensing

Dec 23, 2025

Abstract: Contact often occurs without macroscopic surface deformation, such as during interaction with liquids, semi-liquids, or ultra-soft materials. Most existing tactile sensors rely on deformation to infer contact, making such light-contact interactions difficult to perceive robustly. To address this, we present LightTact, a visual-tactile fingertip sensor that makes contact directly visible via a deformation-independent, optics-based principle. LightTact uses an ambient-blocking optical configuration that suppresses both external light and internal illumination at non-contact regions, while transmitting only the diffuse light generated at true contacts. As a result, LightTact produces high-contrast raw images in which non-contact pixels remain near-black (mean gray value < 3) and contact pixels preserve the natural appearance of the contacting surface. Built on this, LightTact achieves accurate pixel-level contact segmentation that is robust to material properties, contact force, surface appearance, and environmental lighting. We further integrate LightTact on a robotic arm and demonstrate manipulation behaviors driven by extremely light contact, including water spreading, facial-cream dipping, and thin-film interaction. Finally, we show that LightTact's spatially aligned visual-tactile images can be directly interpreted by existing vision-language models, enabling resistor value reasoning for robotic sorting.
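Because non-contact pixels are reported to stay near-black, a plain intensity threshold illustrates why such images are easy to segment. The sketch below is only an illustration, not the authors' segmentation method: the threshold of 3 is borrowed from the reported mean gray value, and the function names and the toy image are hypothetical.

```python
def contact_mask(gray_image, threshold=3):
    """Return a binary mask: 1 where a pixel is brighter than `threshold`.

    `gray_image` is a list of rows of 8-bit gray values (0-255).
    The default threshold is an assumption taken from the reported
    near-black background level, not a calibrated value.
    """
    return [[1 if px > threshold else 0 for px in row] for row in gray_image]


def contact_fraction(mask):
    """Fraction of pixels marked as contact in a binary mask."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


# Hypothetical 4x4 image: dark background with a bright 2x2 contact patch.
toy_image = [
    [0, 1, 2, 0],
    [1, 120, 135, 2],
    [0, 110, 128, 1],
    [2, 0, 1, 0],
]
mask = contact_mask(toy_image)
fraction = contact_fraction(mask)
```

On a real sensor, a fixed threshold would likely need tuning and some noise filtering.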

LocoTouch: Learning Dexterous Quadrupedal Transport with Tactile Sensing

May 29, 2025

Abstract: Quadrupedal robots have demonstrated remarkable agility and robustness in traversing complex terrains. However, they remain limited in performing object interactions that require sustained contact. In this work, we present LocoTouch, a system that equips quadrupedal robots with tactile sensing to address a challenging task in this category: long-distance transport of unsecured cylindrical objects, which typically requires custom mounting mechanisms to maintain stability. For efficient large-area tactile sensing, we design a high-density distributed tactile sensor array that covers the entire back of the robot. To effectively leverage tactile feedback for locomotion control, we develop a simulation environment with high-fidelity tactile signals, and train tactile-aware transport policies using a two-stage learning pipeline. Furthermore, we design a novel reward function to promote stable, symmetric, and frequency-adaptive locomotion gaits. After training in simulation, LocoTouch transfers zero-shot to the real world, reliably balancing and transporting a wide range of unsecured, cylindrical everyday objects with broadly varying sizes and weights. Thanks to the responsiveness of the tactile sensor and the adaptive gait reward, LocoTouch can robustly balance objects with slippery surfaces over long distances, or even under severe external perturbations.

QuietPaw: Learning Quadrupedal Locomotion with Versatile Noise Preference Alignment

Mar 06, 2025

Abstract: When operating at their full capacity, quadrupedal robots can produce loud footstep noise, which can be disruptive in human-centered environments like homes, offices, and hospitals. As a result, balancing locomotion performance with noise constraints is crucial for the successful real-world deployment of quadrupedal robots. However, achieving adaptive noise control is challenging due to (a) the trade-off between agility and noise minimization, (b) the need for generalization across diverse deployment conditions, and (c) the difficulty of effectively adjusting policies based on noise requirements. We propose QuietPaw, a framework incorporating our Conditional Noise-Constrained Policy (CNCP), a constrained learning-based algorithm that enables flexible, noise-aware locomotion by conditioning policy behavior on noise-reduction levels. We leverage value representation decomposition in the critics, disentangling state representations from condition-dependent representations. This allows a single versatile policy to generalize across noise levels without retraining while improving the Pareto trade-off between agility and noise reduction. We validate our approach in simulation and the real world, demonstrating that CNCP can effectively balance locomotion performance and noise constraints, achieving continuously adjustable noise reduction.

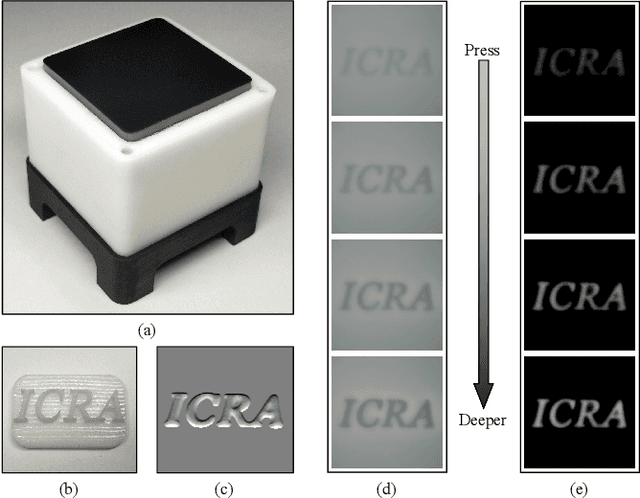

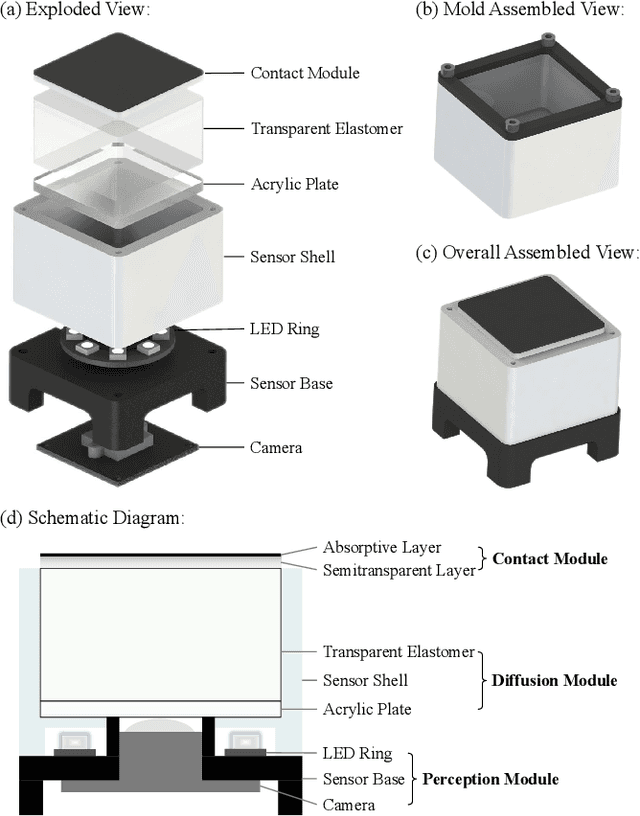

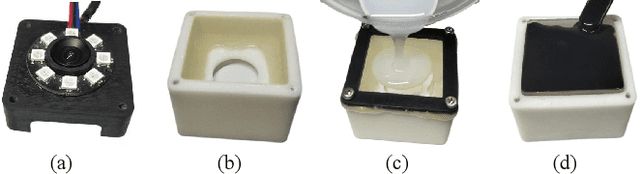

DTactive: A Vision-Based Tactile Sensor with Active Surface

Oct 10, 2024

Abstract: The development of vision-based tactile sensors has significantly enhanced robots' perception and manipulation capabilities, especially for tasks requiring contact-rich interactions with objects. In this work, we present DTactive, a novel vision-based tactile sensor with active surfaces. DTactive inherits and modifies the tactile 3D shape reconstruction method of DTact while integrating a mechanical transmission mechanism that facilitates the mobility of its surface. Thanks to this design, the sensor is capable of simultaneously performing tactile perception and in-hand manipulation with surface movement. Leveraging the high-resolution tactile images from the sensor and the magnetic encoder data from the transmission mechanism, we propose a learning-based method to enable precise angular trajectory control during in-hand manipulation. In our experiments, we successfully achieved accurate rolling manipulation within the range of [-180°, 180°] on various objects, with the root mean square error between the desired and actual angular trajectories being less than 12° on nine trained objects and less than 19° on three novel objects. The results demonstrate the potential of DTactive for in-hand object manipulation in terms of effectiveness, robustness and precision.
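The reported metric, root mean square error between desired and actual angular trajectories, can be sketched as follows. This is a generic illustration, not the authors' evaluation code: wrapping the error into [-180°, 180°) is an assumption made here so that angles near the ±180° boundary compare sensibly, and the function names and sample values are hypothetical.

```python
import math


def wrap_deg(angle):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def angular_rmse(desired, actual):
    """RMSE in degrees between two equally long angle trajectories.

    Each per-sample error is wrapped first (an assumption of this sketch),
    so 179 deg versus -179 deg counts as a 2 deg error, not 358 deg.
    """
    if len(desired) != len(actual) or not desired:
        raise ValueError("trajectories must be non-empty and equally long")
    squared = [wrap_deg(d - a) ** 2 for d, a in zip(desired, actual)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical trajectories in degrees.
desired = [0.0, 90.0, 179.0]
actual = [3.0, 86.0, -179.0]
error = angular_rmse(desired, actual)
```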

Agile Continuous Jumping in Discontinuous Terrains

Sep 17, 2024

Abstract: We focus on agile, continuous, and terrain-adaptive jumping of quadrupedal robots in discontinuous terrains such as stairs and stepping stones. Unlike single-step jumping, continuous jumping requires accurately executing highly dynamic motions over long horizons, which is challenging for existing approaches. To accomplish this task, we design a hierarchical learning and control framework, which consists of a learned heightmap predictor for robust terrain perception, a reinforcement-learning-based centroidal-level motion policy for versatile and terrain-adaptive planning, and a low-level model-based leg controller for accurate motion tracking. In addition, we minimize the sim-to-real gap by accurately modeling the hardware characteristics. Our framework enables a Unitree Go1 robot to perform agile and continuous jumps on human-sized stairs and sparse stepping stones, for the first time to the best of our knowledge. In particular, the robot can cross two stair steps in each jump and completes a 3.5m long, 2.8m high, 14-step staircase in 4.5 seconds. Moreover, the same policy outperforms baselines in various other parkour tasks, such as jumping over single horizontal or vertical discontinuities. Experiment videos can be found at https://yxyang.github.io/jumping_cod/.

LocoMan: Advancing Versatile Quadrupedal Dexterity with Lightweight Loco-Manipulators

Mar 27, 2024

Abstract: Quadrupedal robots have emerged as versatile agents capable of locomoting and manipulating in complex environments. Traditional designs typically rely on the robot's inherent body parts or incorporate top-mounted arms for manipulation tasks. However, these configurations may limit the robot's operational dexterity, efficiency and adaptability, particularly in cluttered or constrained spaces. In this work, we present LocoMan, a dexterous quadrupedal robot with a novel morphology to perform versatile manipulation in diverse constrained environments. By equipping a Unitree Go1 robot with two low-cost and lightweight modular 3-DoF loco-manipulators on its front calves, LocoMan leverages the combined mobility and functionality of the legs and grippers for complex manipulation tasks that require precise 6D positioning of the end effector in a wide workspace. To harness the loco-manipulation capabilities of LocoMan, we introduce a unified control framework that extends the whole-body controller (WBC) to integrate the dynamics of loco-manipulators. Through experiments, we validate that the proposed whole-body controller can accurately and stably follow desired 6D trajectories of the end effector and torso, which, when combined with the large workspace from our design, facilitates a diverse set of challenging dexterous loco-manipulation tasks in confined spaces, such as opening doors, plugging into sockets, picking objects in narrow and low-lying spaces, and bimanual manipulation.

9DTact: A Compact Vision-Based Tactile Sensor for Accurate 3D Shape Reconstruction and Generalizable 6D Force Estimation

Aug 28, 2023

Abstract: Advances in vision-based tactile sensors have improved robots' ability to perform contact-rich manipulation, particularly when precise positioning and contact state of the manipulated objects are crucial for successful execution. In this work, we present 9DTact, a straightforward yet versatile tactile sensor that offers 3D shape reconstruction and 6D force estimation capabilities. Conceptually, 9DTact is designed to be highly compact, robust, and adaptable to various robotic platforms. Moreover, it is low-cost and DIY-friendly, requiring minimal assembly skills. Functionally, 9DTact builds upon the optical principles of DTact and is optimized to achieve 3D shape reconstruction with enhanced accuracy and efficiency. Remarkably, we leverage the optical and deformable properties of the translucent gel so that 9DTact can perform 6D force estimation without auxiliary markers or patterns on the gel surface. More specifically, we collect a dataset consisting of approximately 100,000 image-force pairs from 175 complex objects and train a neural network to regress the 6D force, which can generalize to unseen objects. To promote the development and applications of vision-based tactile sensors, we open-source both the hardware and software of 9DTact and provide a 1-hour video tutorial.

ArrayBot: Reinforcement Learning for Generalizable Distributed Manipulation through Touch

Jun 29, 2023

Abstract: We present ArrayBot, a distributed manipulation system consisting of a 16 × 16 array of vertically sliding pillars integrated with tactile sensors, which can simultaneously support, perceive, and manipulate the tabletop objects. Towards generalizable distributed manipulation, we leverage reinforcement learning (RL) algorithms for the automatic discovery of control policies. In the face of the massively redundant actions, we propose to reshape the action space by considering the spatially local action patch and the low-frequency actions in the frequency domain. With this reshaped action space, we train RL agents that can relocate diverse objects through tactile observations only. Surprisingly, we find that the discovered policy can not only generalize to unseen object shapes in the simulator but also transfer to the physical robot without any domain randomization. Leveraging the deployed policy, we present abundant real-world manipulation tasks, illustrating the vast potential of RL on ArrayBot for distributed manipulation.
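One way to picture the idea of acting on a local patch through low-frequency components is to let a policy output a few frequency coefficients and expand them into per-pillar commands. The sketch below is an assumption-laden illustration, not the paper's implementation: the abstract does not name the transform or the patch size, so the inverse discrete cosine transform, the 5 × 5 patch, and all names here are hypothetical choices.

```python
import math


def idct_2d(coeffs, size):
    """Expand sparse 2D DCT coefficients into a size x size patch.

    `coeffs` maps (u, v) frequency indices to amplitudes; frequencies that
    are not listed are treated as zero. Uses the orthonormal DCT-II basis.
    """
    def scale(k):
        return math.sqrt(1.0 / size) if k == 0 else math.sqrt(2.0 / size)

    patch = [[0.0] * size for _ in range(size)]
    for (u, v), amp in coeffs.items():
        for x in range(size):
            for y in range(size):
                patch[x][y] += (
                    amp * scale(u) * scale(v)
                    * math.cos(math.pi * (2 * x + 1) * u / (2 * size))
                    * math.cos(math.pi * (2 * y + 1) * v / (2 * size))
                )
    return patch


PATCH_SIZE = 5  # hypothetical local patch width, in pillars

# Hypothetical policy output: a uniform lift plus a gentle tilt along y.
# Two numbers describe all 25 pillar commands in the patch.
low_freq_action = {(0, 0): 1.0, (0, 1): 0.5}
pillar_heights = idct_2d(low_freq_action, PATCH_SIZE)
```

Restricting the policy to a handful of low-frequency coefficients yields smooth height fields and a much smaller action space than commanding every pillar independently.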

DTact: A Vision-Based Tactile Sensor that Measures High-Resolution 3D Geometry Directly from Darkness

Sep 28, 2022

Abstract: Vision-based tactile sensors that can measure 3D geometry of the contacting objects are crucial for robots to perform dexterous manipulation tasks. However, the existing sensors are usually complicated to fabricate and delicate to extend. In this work, we take a novel approach that exploits the reflection property of semitransparent elastomer to design a robust, low-cost, and easy-to-fabricate tactile sensor named DTact. DTact measures high-resolution 3D geometry accurately from the darkness shown in the captured tactile images with only a single image for calibration. In contrast to previous sensors, DTact is robust under various illumination conditions. We then build prototypes of DTact that have non-planar contact surfaces with minimal extra effort and cost. Finally, we perform two intelligent robotic tasks, pose estimation and object recognition, using DTact, in which DTact shows strong potential for applications.
