H. Andy Park

LEGATO: Cross-Embodiment Imitation Using a Grasping Tool

Nov 06, 2024

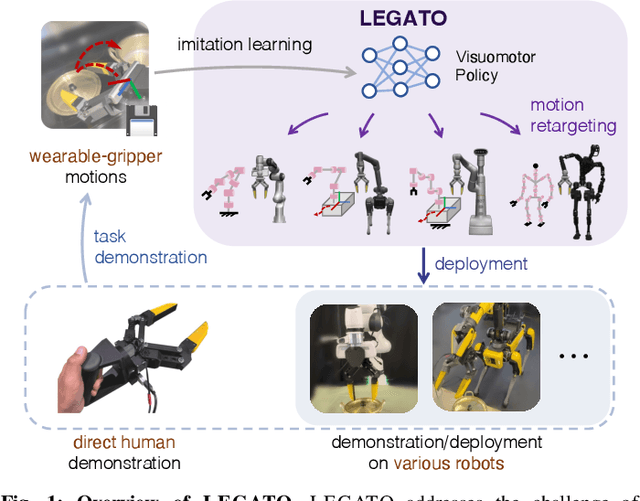

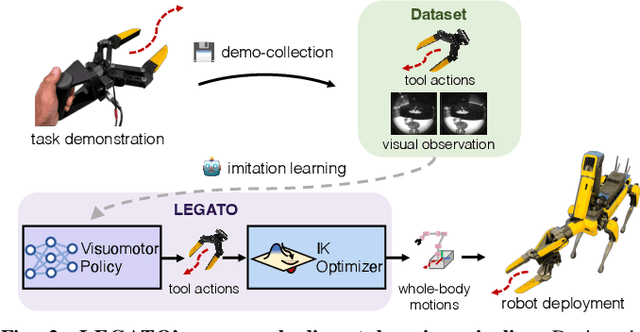

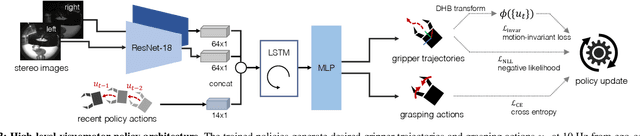

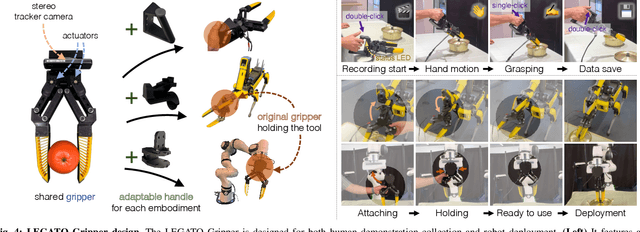

Abstract: Cross-embodiment imitation learning enables policies trained on specific embodiments to transfer across different robots, unlocking the potential for large-scale imitation learning that is both cost-effective and highly reusable. This paper presents LEGATO, a cross-embodiment imitation learning framework for visuomotor skill transfer across varied kinematic morphologies. We introduce a handheld gripper that unifies action and observation spaces, allowing tasks to be defined consistently across robots. Using this gripper, we train visuomotor policies via imitation learning, applying a motion-invariant transformation to compute the training loss. Gripper motions are then retargeted into high-degree-of-freedom whole-body motions using inverse kinematics for deployment across diverse embodiments. Our evaluations in simulation and real-robot experiments highlight the framework's effectiveness in learning and transferring visuomotor skills across various robots. More information can be found at the project page: https://ut-hcrl.github.io/LEGATO.
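
As a rough illustration of the retargeting step mentioned in the abstract, the sketch below solves inverse kinematics for a toy planar two-link arm with a damped least-squares update. It is not LEGATO's whole-body solver, which the abstract does not describe. The arm model, the link lengths, the damping value, and the names `forward_kinematics`, `jacobian`, and `solve_ik` are all assumptions made for this example.

```python
import math

def forward_kinematics(q, l1=1.0, l2=1.0):
    """End-effector (x, y) of a hypothetical planar two-link arm."""
    q1, q2 = q
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y

def jacobian(q, l1=1.0, l2=1.0):
    """2x2 Jacobian of the end-effector position w.r.t. the joint angles."""
    q1, q2 = q
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]

def solve_ik(target, q0=(0.0, math.pi / 2), damping=1e-2, iters=200, tol=1e-6):
    """Damped least-squares IK: dq = J^T (J J^T + damping^2 I)^-1 e."""
    q = list(q0)
    for _ in range(iters):
        x, y = forward_kinematics(q)
        ex, ey = target[0] - x, target[1] - y
        if math.hypot(ex, ey) < tol:
            break
        J = jacobian(q)
        # Entries of the symmetric 2x2 matrix A = J J^T + damping^2 I.
        a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
        b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
        d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
        det = a * d - b * b
        # Solve A u = e in closed form, then map back with J^T.
        ux = (d * ex - b * ey) / det
        uy = (-b * ex + a * ey) / det
        q[0] += J[0][0] * ux + J[1][0] * uy
        q[1] += J[0][1] * ux + J[1][1] * uy
    return tuple(q)

if __name__ == "__main__":
    q = solve_ik((1.2, 0.8))
    print("joint angles:", q)
    print("reached position:", forward_kinematics(q))
```

The damping term keeps the update bounded near singular configurations, which is one common reason to prefer this update over a plain Jacobian inverse. A whole-body solver would add more joints, orientation targets, and joint limits.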

A Nonparametric Motion Flow Model for Human Robot Cooperation

Sep 11, 2017

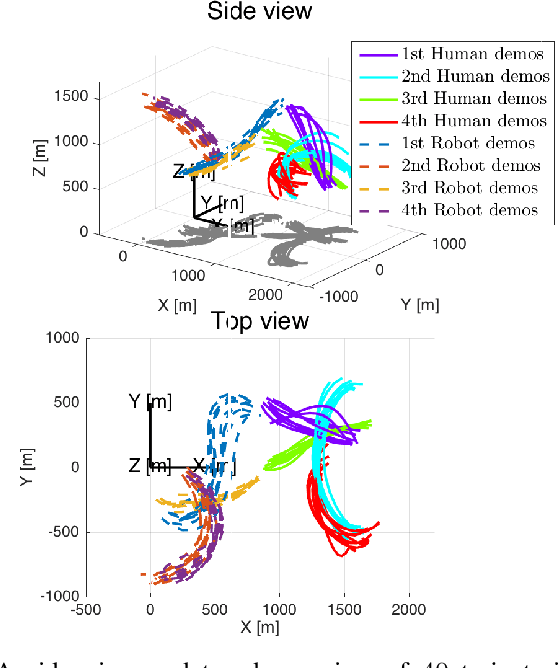

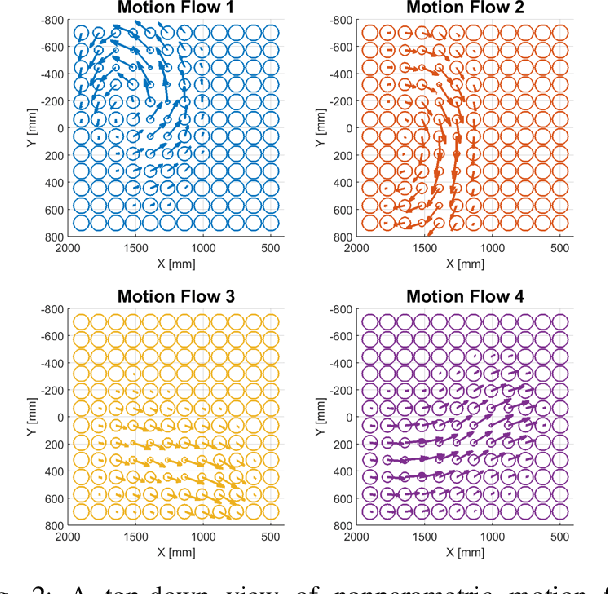

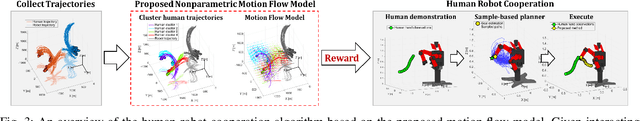

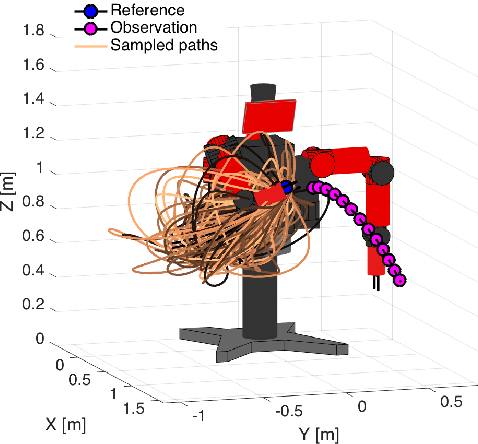

Abstract: In this paper, we present a novel nonparametric motion flow model that effectively describes the motion trajectory of a human, and its application to human-robot cooperation. To this end, we propose a motion flow similarity measure that considers both spatial and temporal properties of a trajectory by utilizing the mean and variance functions of a Gaussian process. We also present a human-robot cooperation method using the proposed motion flow model. Given a set of interacting trajectories of two workers, the underlying reward function of cooperating behaviors is optimized using the learned motion description as its input, and a stochastic trajectory optimization method is used to control a robot. The presented human-robot cooperation method is compared with the state-of-the-art algorithm, which utilizes a mixture of interaction primitives (MIP), in terms of the RMS error between generated and target trajectories. While the proposed method shows performance comparable to MIP when the full observation of human demonstrations is given, it shows superior performance when only partial trajectory information is given.
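
The comparison metric named in the abstract, the RMS error between generated and target trajectories, can be sketched as follows. The abstract does not say how the error is normalized, so this version assumes two trajectories sampled at the same time steps and averages the squared Euclidean distance over time steps. The function name `rms_error` is hypothetical.

```python
import math

def rms_error(generated, target):
    """Root-mean-square error between two equally sampled trajectories.

    Each trajectory is a sequence of points (tuples of coordinates).
    Assumption: the squared Euclidean distance is averaged over time steps.
    """
    if len(generated) != len(target):
        raise ValueError("trajectories must have the same number of points")
    if not generated:
        raise ValueError("trajectories must not be empty")
    total = 0.0
    for p, q in zip(generated, target):
        total += sum((a - b) ** 2 for a, b in zip(p, q))
    return math.sqrt(total / len(generated))

if __name__ == "__main__":
    generated = [(0.0, 0.0), (1.0, 1.0)]
    target = [(0.0, 0.0), (1.0, 2.0)]
    print(rms_error(generated, target))
```

A lower value means the generated trajectory follows the target more closely. Trajectories sampled at different rates would need to be resampled to a common time grid first.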
