J. Kenneth Salisbury

Designing Underactuated Graspers with Dynamically Variable Geometry Using Potential Energy Map Based Analysis

Mar 14, 2022

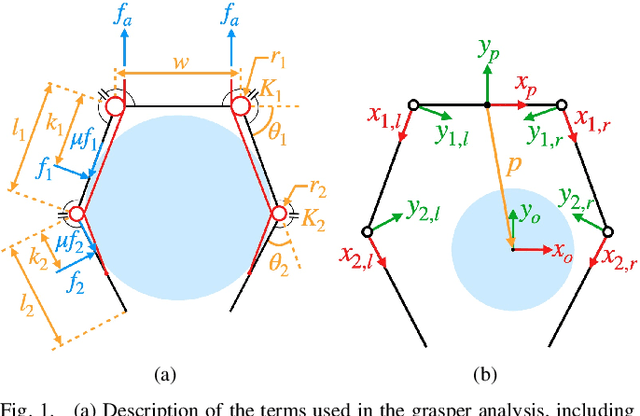

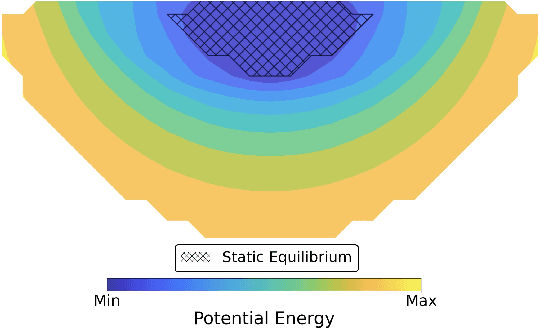

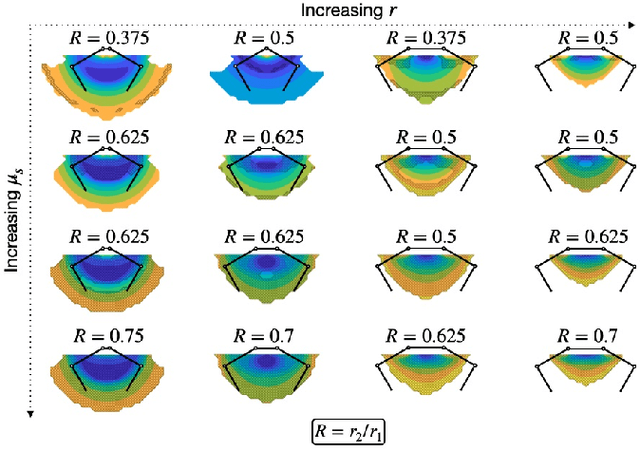

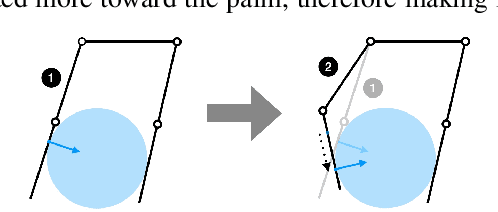

Abstract: In this paper we present a potential energy map based approach that provides a framework for the design and control of a robotic grasper. Unlike other potential energy map approaches, our framework is able to consider friction for a more realistic perspective on grasper performance. Our analysis establishes the importance of including variable geometry in a grasper design, namely with regard to palm width, link lengths, and transmission ratio. We demonstrate the use of this method specifically for a two-phalanx tendon-pulley underactuated grasper, and show how these design parameters impact the grasping and manipulation performance of a specific design across a range of object sizes and friction coefficients. Optimal grasping designs have palms that scale with object size, and transmission ratios that scale with the coefficient of friction. Using a custom manipulation metric, we compared a grasper that only dynamically varied its geometry to a grasper with a variable palm and distinct actuation commands. The analysis revealed the advantage of the compliant reconfiguration ability intrinsic to underactuated mechanisms: by varying only the geometry of the grasper, manipulation of a wide range of objects could be performed.

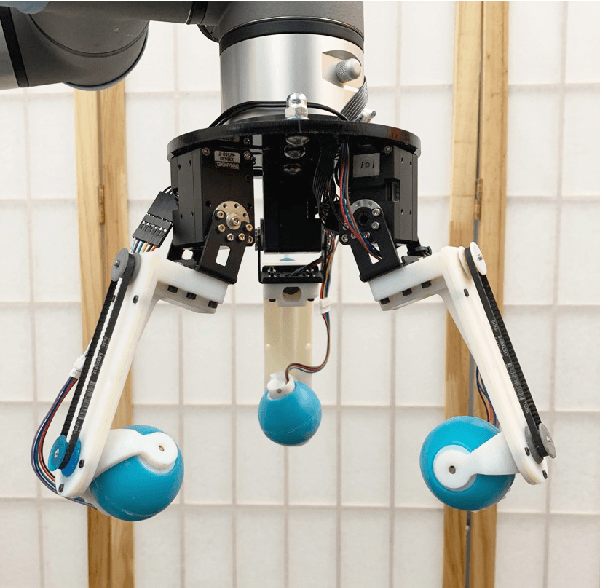

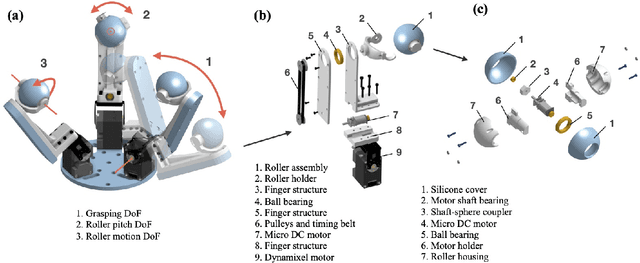

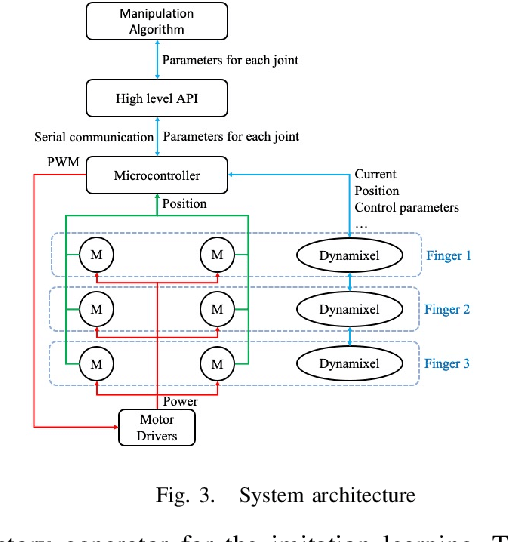

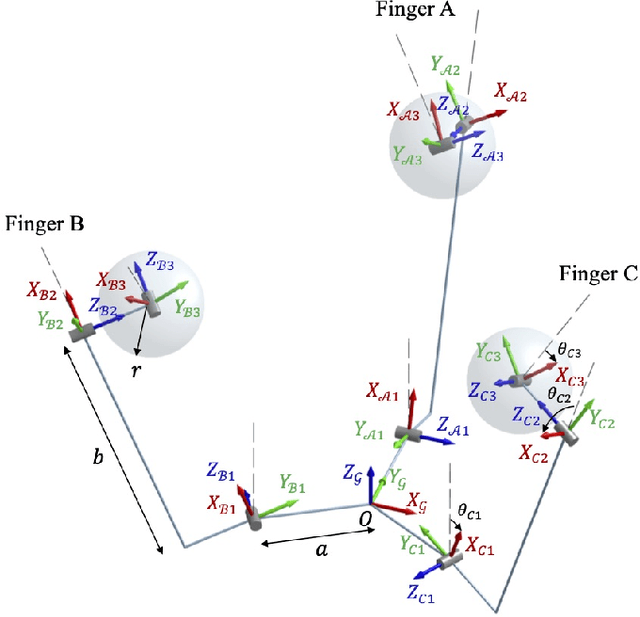

Design and Control of Roller Grasper V2 for In-Hand Manipulation

Apr 18, 2020

Abstract: The ability to perform in-hand manipulation remains an unsolved problem; having this capability would allow robots to perform sophisticated tasks requiring repositioning and reorienting of grasped objects. In this work, we present a novel non-anthropomorphic robot grasper with the ability to manipulate objects by means of active surfaces at the fingertips. Active surfaces are achieved by spherical rolling fingertips with two degrees of freedom (DoF): a pivoting motion for surface reorientation and a continuous rolling motion for moving the object. A further DoF is in the base of each finger, allowing the fingers to grasp objects over a range of sizes and shapes. Instantaneous kinematics were derived, and objects were successfully manipulated both with a custom hard-coded control scheme and with one learned through imitation learning, in simulation and experimentally on the hardware.

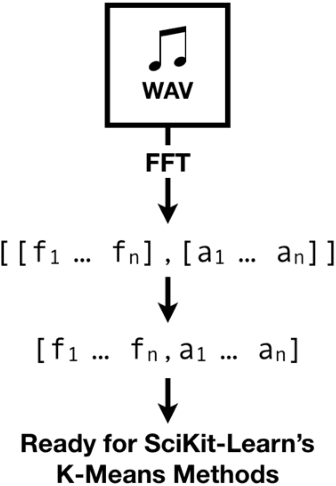

Scene Recognition Through Visual and Acoustic Cues Using K-Means

Nov 26, 2018

Abstract: We propose a K-Means based prediction system, nicknamed SERVANT (Scene Recognition Through Visual and Acoustic Cues), that is capable of recognizing environmental scenes through analysis of ambient sound and color cues. The concept and implementation originated within the Learning branch of the Intelligent Wearable Robotics Project (also known as the Third Arm project) at the Stanford Artificial Intelligence Lab-Toyota Center (SAIL-TC). The Third Arm Project focuses on the development and conceptualization of a robotic arm that can aid users in a whole array of situations, e.g. carrying a cup of coffee or holding a flashlight. SERVANT uses a K-Means fit-and-predict architecture to classify environmental scenes, such as that of a coffee shop or a basketball gym, using visual and auditory cues. Following such classification, SERVANT can recommend contextual actions based on prior training.
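The fit-and-predict pattern mentioned in the abstract can be illustrated with a minimal K-Means sketch. This is not the SERVANT implementation: the two-dimensional features (a loudness value and a hue value scaled to [0, 1]), the sample points, and the function names `fit` and `predict` are all assumptions chosen for illustration.

```python
import random

def squared_distance(a, b):
    # Squared Euclidean distance between two feature vectors.
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(point, centroids):
    # Index of the centroid closest to the point.
    return min(range(len(centroids)),
               key=lambda i: squared_distance(point, centroids[i]))

def fit(points, k, iterations=20, seed=0):
    # Lloyd's algorithm: alternate assignment and centroid update.
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def predict(point, centroids):
    # Assign a new observation to the closest learned cluster.
    return nearest(point, centroids)

# Hypothetical features: (mean loudness, mean hue), both scaled to [0, 1].
training = [(0.1, 0.1), (0.2, 0.1), (0.1, 0.2),
            (0.9, 0.9), (0.8, 0.9), (0.9, 0.8)]
centroids = fit(training, k=2)
quiet_label = predict((0.15, 0.15), centroids)
loud_label = predict((0.85, 0.85), centroids)
```

K-Means is unsupervised, so the cluster indices carry no scene names by themselves; a system like the one described would still need a mapping from each cluster to a scene and its recommended actions, which this sketch leaves out.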

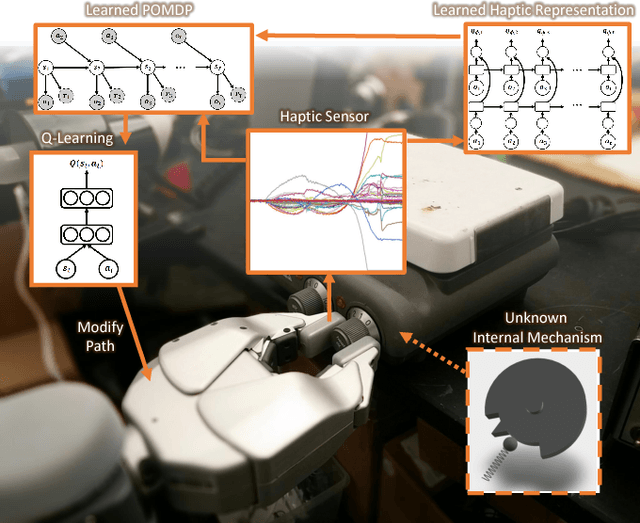

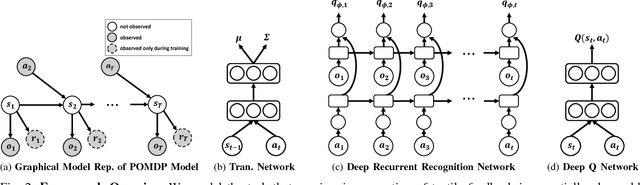

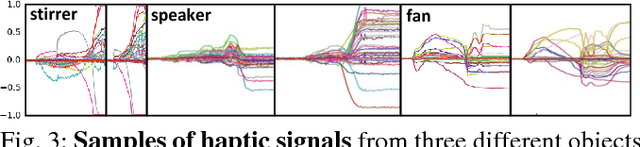

Learning to Represent Haptic Feedback for Partially-Observable Tasks

May 17, 2017

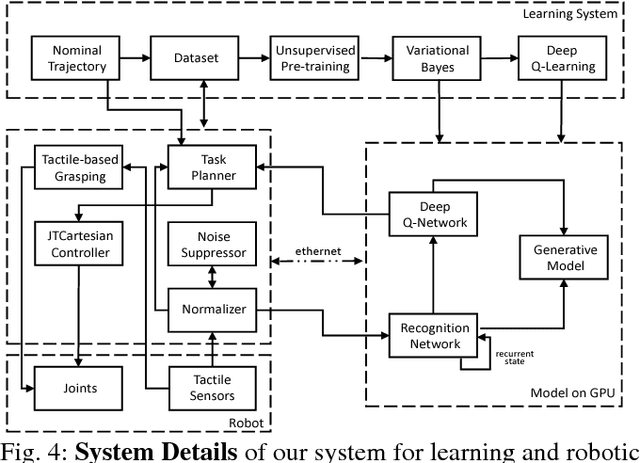

Abstract: The sense of touch, being the earliest sensory system to develop in a human body [1], plays a critical part in our daily interaction with the environment. In order to successfully complete a task, many manipulation interactions require incorporating haptic feedback. However, manually designing a feedback mechanism can be extremely challenging. In this work, we consider manipulation tasks that need to incorporate tactile sensor feedback in order to modify a provided nominal plan. To incorporate partial observation, we present a new framework that models the task as a partially observable Markov decision process (POMDP) and learns an appropriate representation of haptic feedback which can serve as the state for a POMDP model. The model, which is parametrized by deep recurrent neural networks, utilizes variational Bayes methods to optimize the approximate posterior. Finally, we build on deep Q-learning to be able to select the optimal action in each state without access to a simulator. We test our model on a PR2 robot for multiple tasks of turning a knob until it clicks.
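The idea of selecting actions with Q-learning without a simulator can be sketched in a much simpler tabular form, learning only from a fixed log of recorded transitions. This is not the paper's method, which uses deep networks over a learned haptic representation; the integer knob states, the `turn` and `stop` actions, the rewards, and the function names below are hypothetical.

```python
from collections import defaultdict

def q_learning_from_log(transitions, actions, alpha=0.5, gamma=0.9, sweeps=50):
    # Repeatedly replay logged (state, action, reward, next_state, done)
    # tuples and apply the standard Q-learning update to a table.
    q = defaultdict(float)
    for _ in range(sweeps):
        for state, action, reward, next_state, done in transitions:
            best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
            target = reward + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
    return q

def greedy_action(q, state, actions):
    # Pick the action with the highest learned value in this state.
    return max(actions, key=lambda a: q[(state, a)])

# Hypothetical log: knob positions 0 and 1; turning from 1 produces the click.
actions = ["turn", "stop"]
log = [
    (0, "turn", 0.0, 1, False),
    (1, "turn", 1.0, 2, True),   # click reached, episode ends with reward
    (0, "stop", 0.0, 0, True),   # stopping early ends with no reward
    (1, "stop", 0.0, 1, True),
]
q = q_learning_from_log(log, actions)
policy = {state: greedy_action(q, state, actions) for state in (0, 1)}
```

In this toy log the learned values favor turning in both states, since only the turn sequence reaches the rewarded click. The deep variant replaces the table with a network and the integer state with the learned representation.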
