Ryan Gupta

Human Stress Response and Perceived Safety during Encounters with Quadruped Robots

Mar 25, 2024

Abstract: Despite the rise of mobile robot deployments in home and work settings, perceived safety of users and bystanders is understudied in the human-robot interaction (HRI) literature. To address this, we present a study designed to identify elements of a human-robot encounter that correlate with observed stress response. Stress is a key component of perceived safety and is strongly associated with human physiological response. In this study, a Boston Dynamics Spot and a Unitree Go1 navigate autonomously through a shared environment occupied by human participants wearing multimodal physiological sensors to track their electrocardiography (ECG) and electrodermal activity (EDA). The encounters are varied through several trials, and participants self-rate their stress levels after each encounter. The study resulted in a multidimensional dataset archiving various objective and subjective aspects of a human-robot encounter, containing insights for understanding perceived safety in such encounters. To this end, acute stress responses were decoded from the human participants' ECG and EDA and compared across different human-robot encounter conditions. Statistical analysis of the data indicates that, on average, (1) participants feel more stress during encounters compared to baselines, (2) participants feel more stress encountering multiple robots compared to a single robot, and (3) participants' stress increases during navigation behavior compared with search behavior.

LIVE: Lidar Informed Visual Search for Multiple Objects with Multiple Robots

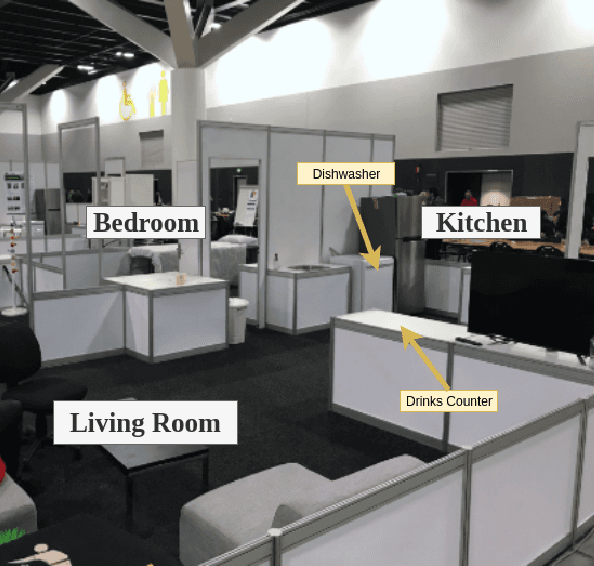

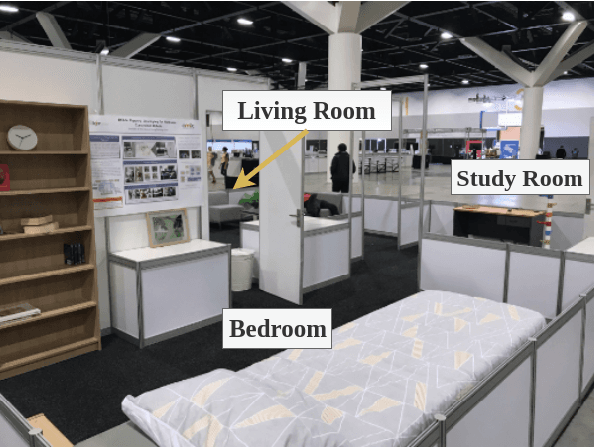

Oct 06, 2023

Abstract: This paper introduces LIVE: Lidar Informed Visual Search focused on the problem of multi-robot (MR) planning and execution for robust visual detection of multiple objects. We perform extensive real-world experiments with a two-robot team in an indoor apartment setting. LIVE acts as a perception module that detects unmapped obstacles, or Short Term Features (STFs), in Lidar observations. STFs are filtered, resulting in regions to be visually inspected by modifying plans online. Lidar Coverage Path Planning (CPP) is employed for generating highly efficient global plans for heterogeneous robot teams. Finally, we present a data model and a demonstration dataset, which can be found by visiting our project website https://sites.google.com/view/live-iros2023/home.

Learned Contextual LiDAR Informed Visual Search in Unseen Environments

Sep 25, 2023

Abstract: This paper presents LIVES: LiDAR Informed Visual Search, an autonomous planner for unknown environments. We consider the pixel-wise environment perception problem where one is given 2D range data from LiDAR scans and must label points contextually as map or non-map in the surroundings for visual planning. LIVES classifies incoming 2D scans from the wide Field of View (FoV) LiDAR in unseen environments without prior map information. The map-generalizable classifier is trained from expert data collected using a simple cart platform equipped with a map-based classifier in real environments. A visual planner takes contextual data from scans and uses this information to plan viewpoints more likely to yield detection of the search target. While conventional frontier-based methods for LiDAR and multi-sensor exploration effectively map environments, they are not tailored to searching for people indoors, which we investigate in this paper. LIVES is baselined against several existing exploration methods in simulation to verify its performance. Finally, it is validated in real-world experiments with a Spot robot in a 20x30m indoor apartment setting. Videos of experimental validation can be found on our project website at https://sites.google.com/view/lives-icra-2024/home.

Learning to Walk by Steering: Perceptive Quadrupedal Locomotion in Dynamic Environments

Sep 19, 2022

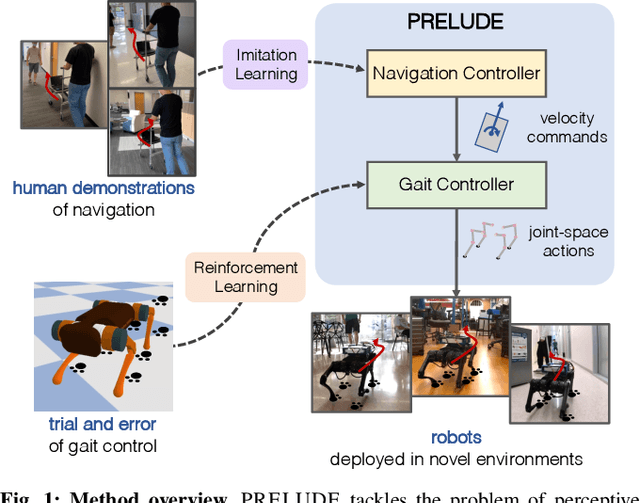

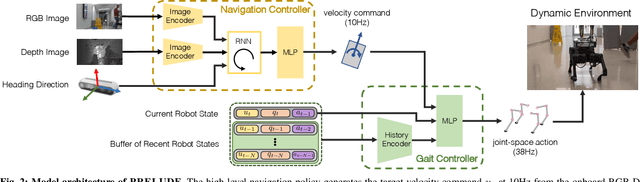

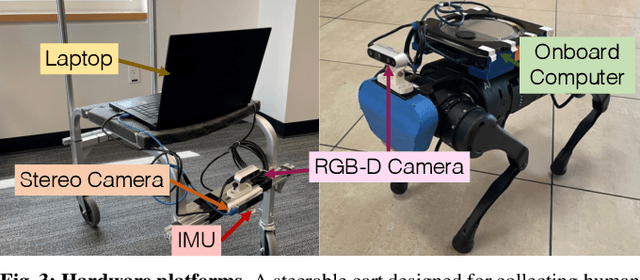

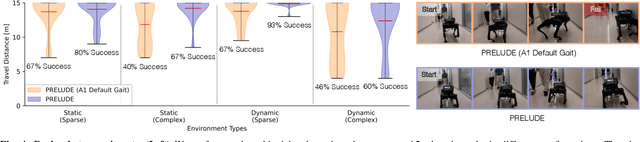

Abstract: We tackle the problem of perceptive locomotion in dynamic environments. In this problem, a quadrupedal robot must exhibit robust and agile walking behaviors in response to environmental clutter and moving obstacles. We present a hierarchical learning framework, named PRELUDE, which decomposes the problem of perceptive locomotion into high-level decision-making to predict navigation commands and low-level gait generation to realize the target commands. In this framework, we train the high-level navigation controller with imitation learning on human demonstrations collected on a steerable cart and the low-level gait controller with reinforcement learning (RL). Therefore, our method can acquire complex navigation behaviors from human supervision and discover versatile gaits from trial and error. We demonstrate the effectiveness of our approach in simulation and with hardware experiments. Video and code can be found on https://ut-austin-rpl.github.io/PRELUDE.

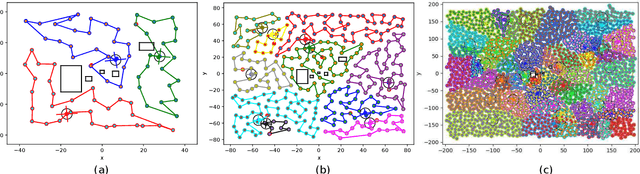

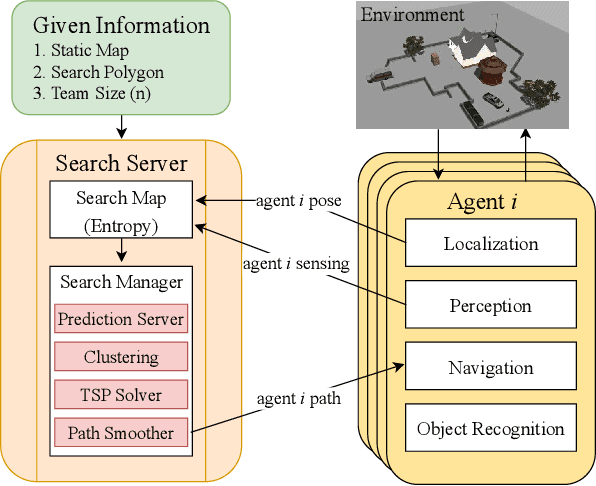

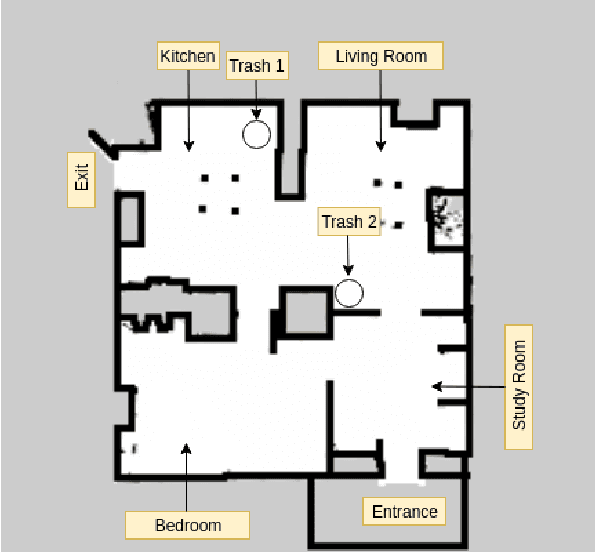

KC-TSS: An Algorithm for Heterogeneous Robot Teams Performing Resilient Target Search

Mar 02, 2022

Abstract: This paper proposes KC-TSS: K-Clustered-Traveling Salesman Based Search, a failure-resilient path planning algorithm for heterogeneous robot teams performing target search in human environments. We separate the sample path generation problem into Heterogeneous Clustering and multiple Traveling Salesman Problems. This allows us to provide high-quality candidate paths (i.e., with minimal backtracking and overlap) to an Information-Theoretic utility function for each agent. First, we generate waypoint candidates from map knowledge and a target prediction model. All of these candidates are clustered according to the number of agents and their ability to cover space, or coverage competency. Each agent solves a Traveling Salesman Problem (TSP) instance over its assigned cluster, and the resulting candidates are then fed to a utility function for path selection. We perform extensive Gazebo simulations and preliminary deployment of real robots in indoor search and simulated rescue scenarios with static targets. We compare our proposed method against a state-of-the-art algorithm and show that ours outperforms it in mission time. Our method provides resilience in the event of single- or multi-teammate failure by recomputing global team plans online.
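The cluster-then-tour decomposition described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes 2D waypoints, uses plain k-means in place of the paper's Heterogeneous Clustering (coverage competency is ignored), and substitutes a nearest-neighbour heuristic for a real TSP solver. The names `kmeans`, `greedy_tour`, and `plan_team` are hypothetical.

```python
import math
import random


def kmeans(points, k, iters=20, seed=0):
    """Plain k-means over 2D waypoints (assumes k <= len(points))."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    clusters = [[] for _ in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centers[c]))
            clusters[nearest].append(p)
        # Recompute each centre as the mean of its cluster; keep the old
        # centre if a cluster ended up empty.
        centers = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
            if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return clusters


def greedy_tour(start, waypoints):
    """Nearest-neighbour tour: a simple stand-in for a TSP solver."""
    tour, current, remaining = [], start, list(waypoints)
    while remaining:
        nxt = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nxt)
        tour.append(nxt)
        current = nxt
    return tour


def plan_team(starts, waypoints):
    """One cluster per agent, then one tour per cluster."""
    clusters = kmeans(waypoints, len(starts))
    unassigned = list(range(len(clusters)))
    plans = []
    for s in starts:
        # Assumption: each agent greedily claims the closest free cluster.
        best = min(
            unassigned,
            key=lambda i: min(
                (math.dist(s, p) for p in clusters[i]), default=math.inf
            ),
        )
        unassigned.remove(best)
        plans.append(greedy_tour(s, clusters[best]))
    return plans
```

Under these assumptions, replanning after a teammate failure amounts to calling `plan_team` again with only the surviving agents' positions and the waypoints not yet visited, which re-clusters the remaining work across the smaller team.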

Information-Theoretic Based Target Search with Multiple Agents

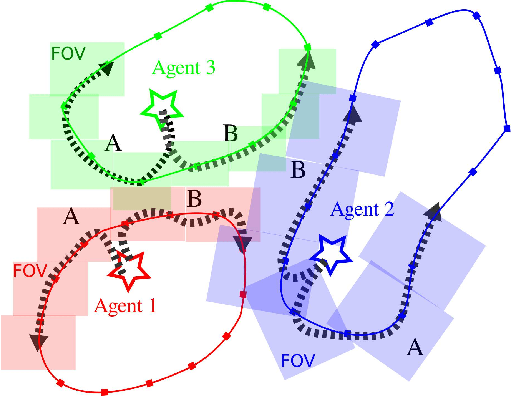

Jul 27, 2021

Abstract: This paper proposes an online path planning and motion generation algorithm for heterogeneous robot teams performing target search in a real-world environment. Path selection for each robot is optimized using an information-theoretic formulation and is computed sequentially for each agent. First, we generate candidate trajectories sampled from both global waypoints derived from vertical cell decomposition and local frontier points. From this set, we choose the path with maximum information gain. We demonstrate that the hierarchical sequential decision-making structure provided by the algorithm is scalable to multiple agents in a simulation setup. We also validate our framework in a real-world apartment setting using a two-robot team composed of the Unitree A1 quadruped and the Toyota HSR mobile manipulator searching for a person. The agents leverage an efficient leader-follower communication structure where only critical information is shared.
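The sequential, maximum-information-gain selection described in this abstract can be sketched as follows. This is an illustrative simplification, not the paper's formulation: it assumes the belief is an independent per-cell probability that the target is present, that a robot's sensor fully resolves every cell on its path, and that cells already claimed by an earlier agent yield no further gain. The names `cell_entropy`, `information_gain`, and `select_paths` are hypothetical.

```python
import math


def cell_entropy(p):
    """Binary entropy (in bits) of the belief that a cell holds the target."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def information_gain(path, belief, observed):
    """Entropy removed by observing the path's not-yet-claimed cells."""
    cells = set(path) - observed
    return sum(cell_entropy(belief[c]) for c in cells)


def select_paths(candidates_per_agent, belief):
    """Pick one path per agent, in order, each maximizing its own gain."""
    observed, chosen = set(), []
    for candidates in candidates_per_agent:
        best = max(
            candidates,
            key=lambda path: information_gain(path, belief, observed),
        )
        chosen.append(best)
        # Later agents get no credit for cells an earlier agent will cover.
        observed |= set(best)
    return chosen
```

Because each agent conditions only on the choices of agents earlier in the sequence, the cost grows linearly with team size rather than with the size of the joint path space, which is one reading of why a sequential structure scales to more agents.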

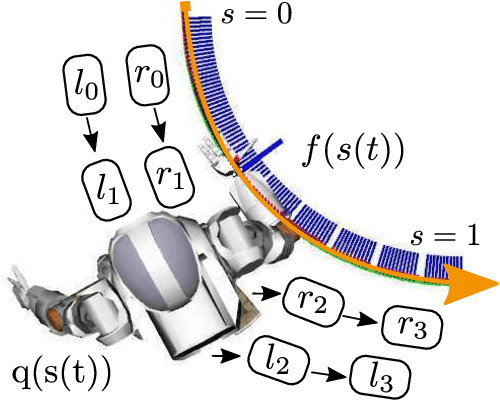

Finding Locomanipulation Plans Quickly in the Locomotion Constrained Manifold

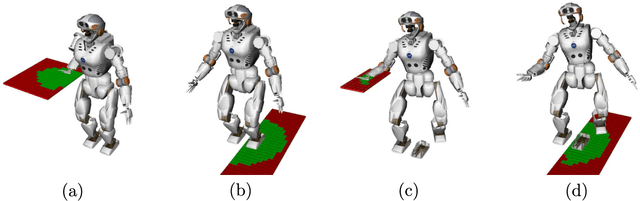

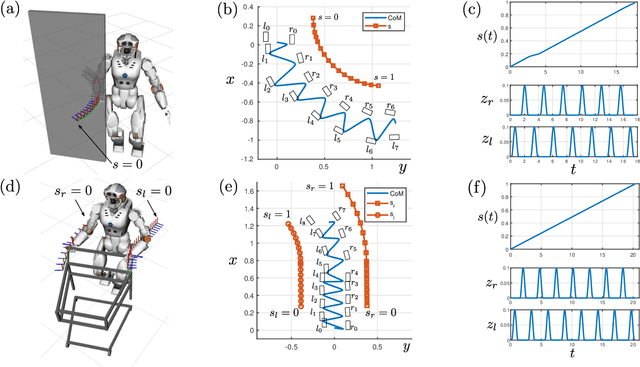

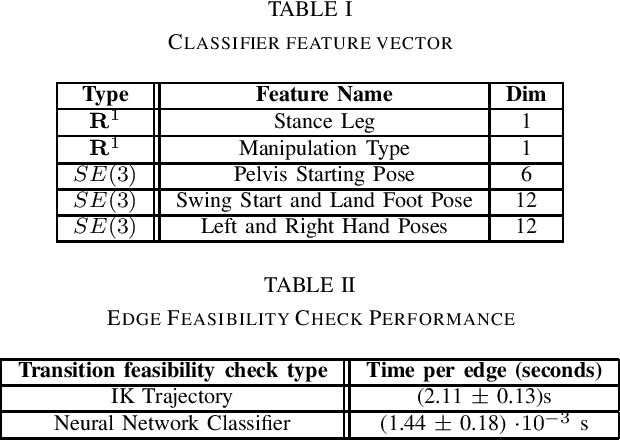

Sep 22, 2019

Abstract: We present a method that finds locomanipulation plans that perform simultaneous locomotion and manipulation of objects for a desired end-effector trajectory. Key to our approach is to consider a generic locomotion constraint manifold that defines the locomotion scheme of the robot and then to use this constraint manifold to search for admissible manipulation trajectories. The problem is formulated as a weighted-A* graph search whose planner output is a sequence of contact transitions and a path progression trajectory to construct the whole-body kinodynamic locomanipulation plan. We also provide a method for computing, visualizing and learning the locomanipulability region, which is used to efficiently evaluate the edge transition feasibility during the graph search. Experiments are performed on the NASA Valkyrie robot platform, which utilizes a dynamic locomotion approach called the divergent-component-of-motion (DCM), on two example locomanipulation scenarios.
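For readers unfamiliar with weighted A*, the search named in this abstract inflates the heuristic by a factor `weight >= 1`, trading optimality for speed. The sketch below is a generic version on an abstract graph, not the paper's planner: in the paper the nodes would encode contact transitions and path progression, whereas here a small 4-connected grid stands in for illustration. `weighted_a_star` and `grid_neighbors` are hypothetical names.

```python
import heapq


def weighted_a_star(start, goal, neighbors, heuristic, weight=1.5):
    """Best-first search on f(n) = g(n) + weight * h(n).

    With an admissible heuristic, the returned path costs at most
    `weight` times the optimum. Returns None if the goal is unreachable.
    """
    open_heap = [(weight * heuristic(start), 0.0, start)]
    g_cost = {start: 0.0}
    parent = {start: None}
    closed = set()
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node in closed:
            continue  # stale heap entry for an already expanded node
        closed.add(node)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt, cost in neighbors(node):
            new_g = g + cost
            if nxt not in closed and new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                parent[nxt] = node
                f = new_g + weight * heuristic(nxt)
                heapq.heappush(open_heap, (f, new_g, nxt))
    return None


def grid_neighbors(size):
    """Illustrative successor function: unit-cost moves on a size x size grid."""
    def neighbors(node):
        x, y = node
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            if 0 <= nx < size and 0 <= ny < size:
                yield (nx, ny), 1.0
    return neighbors
```

A call such as `weighted_a_star((0, 0), (2, 2), grid_neighbors(3), lambda n: abs(2 - n[0]) + abs(2 - n[1]))` returns a list of grid cells from start to goal. In a planner like the one described, the expensive part is typically checking whether an edge is feasible, which is where a learned feasibility region could be queried inside `neighbors`.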

Solving Service Robot Tasks: UT Austin Villa@Home 2019 Team Report

Sep 14, 2019

Abstract: RoboCup@Home is an international robotics competition based on domestic tasks requiring autonomous capabilities pertaining to a large variety of AI technologies. Research challenges are motivated by these tasks both at the level of individual technologies and the integration of subsystems into a fully functional, robustly autonomous system. We describe the progress made by the UT Austin Villa 2019 RoboCup@Home team, which represents a significant step forward in AI-based HRI due to the breadth of tasks accomplished within a unified system. Presented are the competition tasks, the component technologies they rely on, our initial approaches both to the components and their integration, and directions for future research.