Justin W. Hart

Proceedings of the AI-HRI Symposium at AAAI-FSS 2022

Sep 28, 2022

Abstract: The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration on AI theory and methods aimed at HRI since 2014. This year, after a review of the achievements of the AI-HRI community over the last decade in 2021, we are focusing on a visionary theme: exploring the future of AI-HRI. Accordingly, we added a Blue Sky Ideas track to foster a forward-thinking discussion on future research at the intersection of AI and HRI. As always, we appreciate all contributions related to any topic on AI/HRI and welcome new researchers who wish to take part in this growing community. With the success of past symposia, AI-HRI impacts a variety of communities and problems, and has pioneered the discussions in recent trends and interests. This year's AI-HRI Fall Symposium aims to bring together researchers and practitioners from around the globe, representing a number of university, government, and industry laboratories. In doing so, we hope to accelerate research in the field, support technology transition and user adoption, and determine future directions for our group and our research.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2020

Nov 11, 2020

Abstract: The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration since 2014. In that time, the related topic of trust in robotics has been rapidly growing, with major research efforts at universities and laboratories across the world. Indeed, many of the past participants in AI-HRI have been or are now involved with research into trust in HRI. While trust has no consensus definition, it is regularly associated with predictability, reliability, inciting confidence, and meeting expectations. Furthermore, it is generally believed that trust is crucial for adoption of both AI and robotics, particularly when transitioning technologies from the lab to industrial, social, and consumer applications. However, how does trust apply to the specific situations we encounter in the AI-HRI sphere? Is the notion of trust in AI the same as that in HRI? We see a growing need for research that lives directly at the intersection of AI and HRI that is serviced by this symposium. Over the course of the two-day meeting, we propose to create a collaborative forum for discussion of current efforts in trust for AI-HRI, with a sub-session focused on the related topic of explainable AI (XAI) for HRI.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2019

Sep 19, 2019

Abstract: The past few years have seen rapid progress in the development of service robots. Universities and companies alike have launched major research efforts toward the deployment of ambitious systems designed to aid human operators performing a variety of tasks. These robots are intended to make those who may otherwise need to live in assisted care facilities more independent, to help workers perform their jobs, or simply to make life more convenient. Service robots provide a powerful platform on which to study Artificial Intelligence (AI) and Human-Robot Interaction (HRI) in the real world. Research sitting at the intersection of AI and HRI is crucial to the success of service robots if they are to fulfill their mission. This symposium seeks to highlight research enabling robots to effectively interact with people autonomously while modeling, planning, and reasoning about the environment that the robot operates in and the tasks that it must perform. AI-HRI deals with the challenge of interacting with humans in environments that are relatively unstructured or which are structured around people rather than machines, as well as the possibility that the robot may need to interact naturally with people rather than through teach pendants, programming, or similar interfaces.

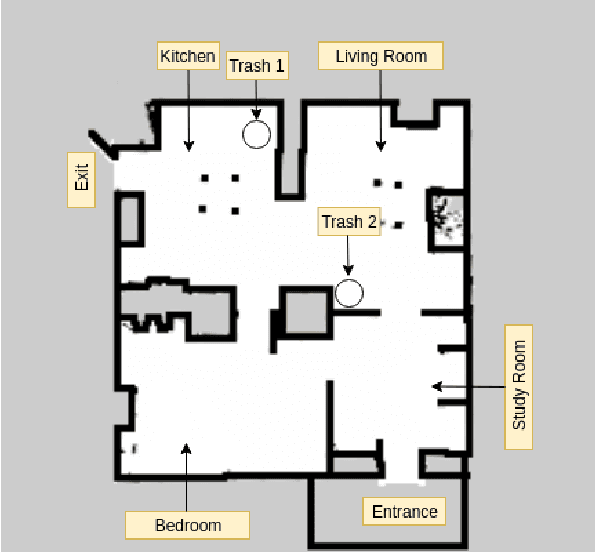

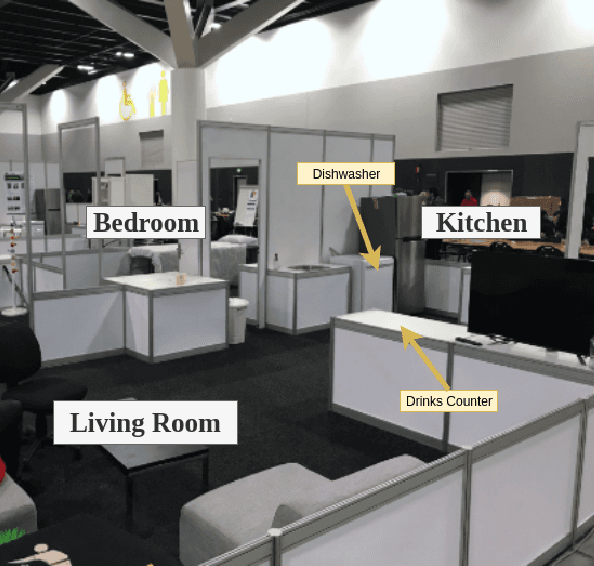

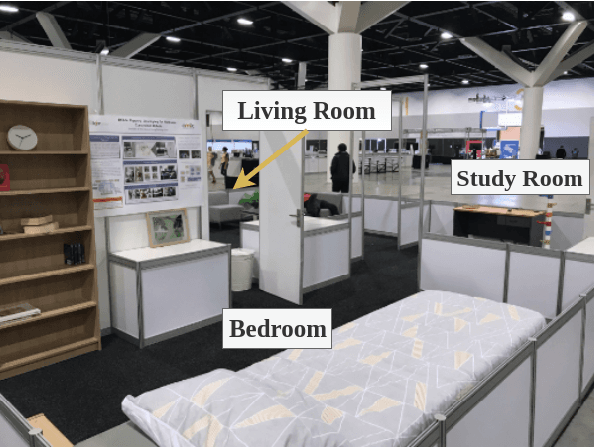

Solving Service Robot Tasks: UT Austin Villa@Home 2019 Team Report

Sep 14, 2019

Abstract: RoboCup@Home is an international robotics competition based on domestic tasks requiring autonomous capabilities pertaining to a large variety of AI technologies. Research challenges are motivated by these tasks both at the level of individual technologies and the integration of subsystems into a fully functional, robustly autonomous system. We describe the progress made by the UT Austin Villa 2019 RoboCup@Home team, which represents a significant step forward in AI-based HRI due to the breadth of tasks accomplished within a unified system. Presented are the competition tasks, the component technologies they rely on, our initial approaches both to the components and their integration, and directions for future research.

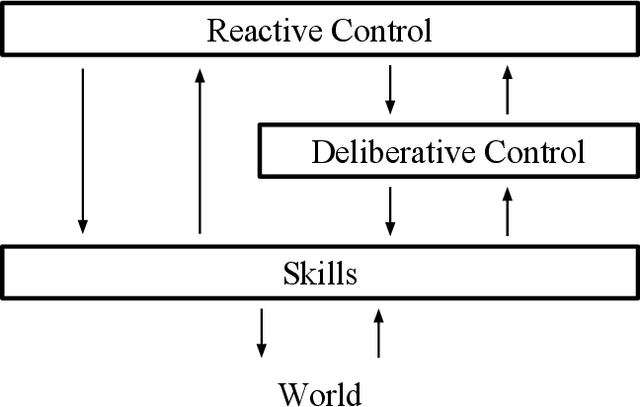

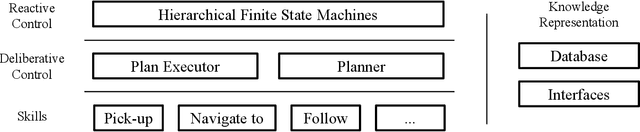

LAAIR: A Layered Architecture for Autonomous Interactive Robots

Nov 09, 2018

Abstract: When developing general purpose robots, the overarching software architecture can greatly affect the ease of accomplishing various tasks. Initial efforts to create unified robot systems in the 1990s led to hybrid architectures, emphasizing a hierarchy in which deliberative plans direct the use of reactive skills. However, since that time there has been significant progress in the low-level skills available to robots, including manipulation and perception, making it newly feasible to accomplish many more tasks in real-world domains. There is thus renewed optimism that robots will be able to perform a wide array of tasks while maintaining responsiveness to human operators. However, the top layer in traditional hybrid architectures, designed to achieve long-term goals, can make it difficult to react quickly to human interactions during goal-driven execution. To mitigate this difficulty, we propose a novel architecture that supports such transitions by adding a top-level reactive module which has flexible access to both reactive skills and a deliberative control module. To validate this architecture, we present a case study of its application on a domestic service robot platform.

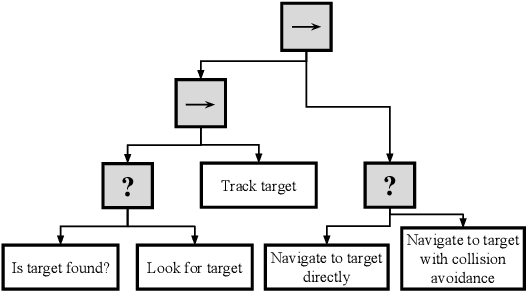

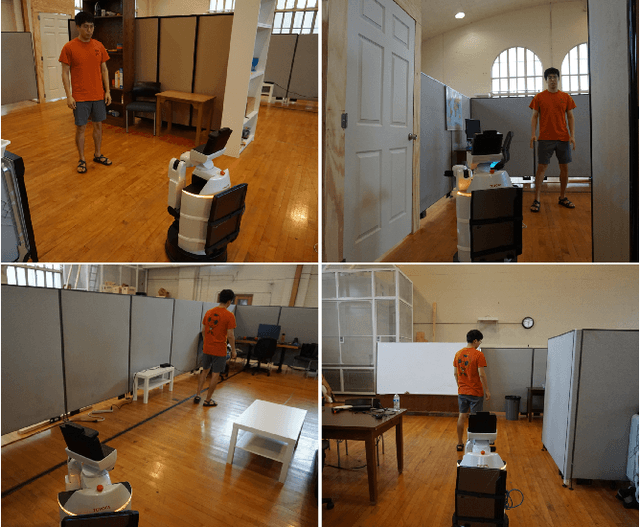

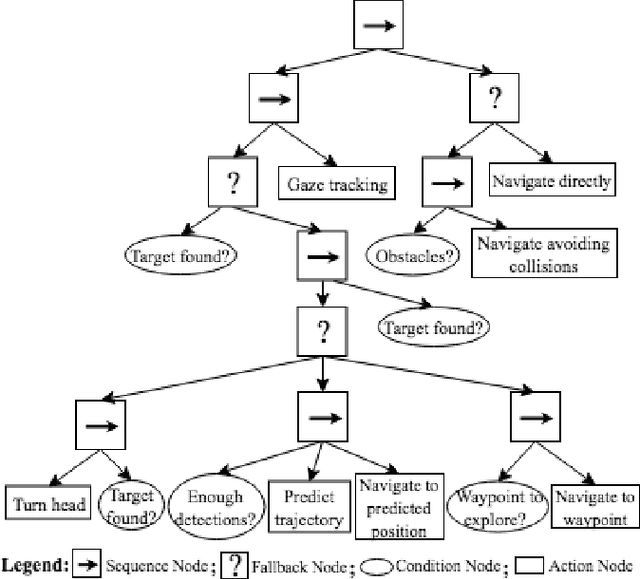

An Architecture for Person-Following using Active Target Search

Sep 24, 2018

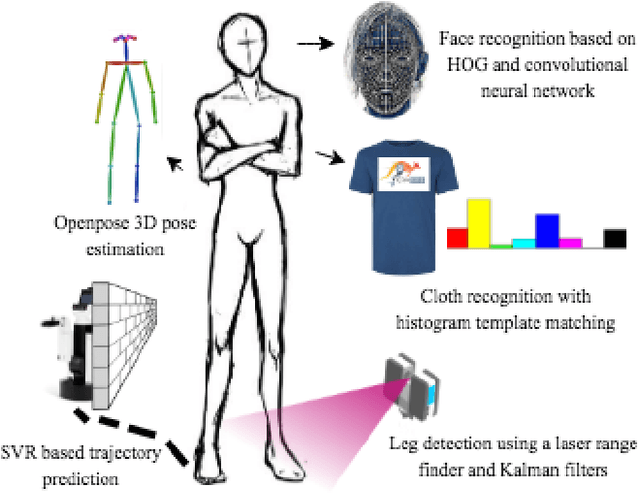

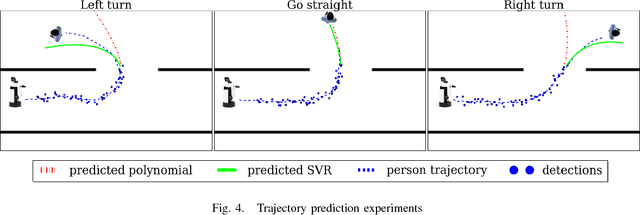

Abstract: This paper presents a novel architecture for person-following robots using active search. The proposed system can be applied in real time to general mobile robots for learning features of a human, detecting and tracking that person, and finally navigating towards them. To succeed at person-following, perception, planning, and robot behavior need to be integrated properly. Toward this end, an active target searching capability, including prediction and navigation toward vantage locations for finding human targets, is proposed. The proposed capability aims at improving the robustness and efficiency of tracking and following people under dynamic conditions such as crowded environments. A multi-modal sensing approach that fuses an RGB-D sensor and a laser scanner is pursued to robustly track and identify human targets. Bayesian filtering for keeping track of humans and a regression algorithm for predicting the trajectories of people are investigated. In order to make the robot autonomous, the proposed framework relies on a behavior-tree structure. Real-time experiments using the Toyota Human Support Robot (HSR) demonstrate that the proposed architecture can generate fast, efficient person-following behaviors.
