Zhao Han

Indicating Robot Vision Capabilities with Augmented Reality

Nov 05, 2025

Abstract:Research indicates that humans can mistakenly assume that robots and humans have the same field of view (FoV), possessing an inaccurate mental model of robots. This misperception may lead to failures during human-robot collaboration tasks where robots might be asked to complete impossible tasks about out-of-view objects. The issue is more severe when robots do not have a chance to scan the scene to update their world model while focusing on assigned tasks. To help align humans' mental models of robots' vision capabilities, we propose four FoV indicators in augmented reality (AR) and conducted a human-subjects experiment (N=41) to evaluate them in terms of accuracy, confidence, task efficiency, and workload. These indicators span a spectrum from egocentric (robot's eye and head space) to allocentric (task space). Results showed that the allocentric blocks at the task space had the highest accuracy with a delay in interpreting the robot's FoV. The egocentric indicator of deeper eye sockets, possible for physical alteration, also increased accuracy. In all indicators, participants' confidence was high while cognitive load remained low. Finally, we contribute six guidelines for practitioners to apply our AR indicators or physical alterations to align humans' mental models with robots' vision capabilities.

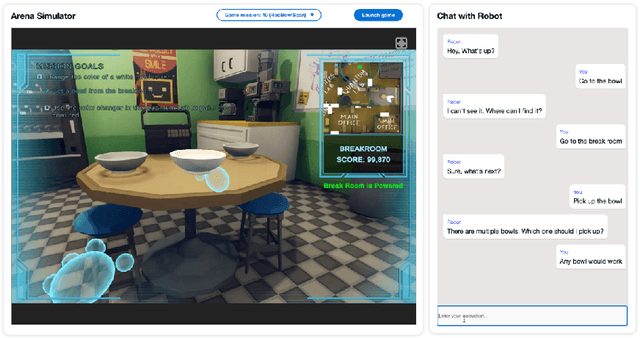

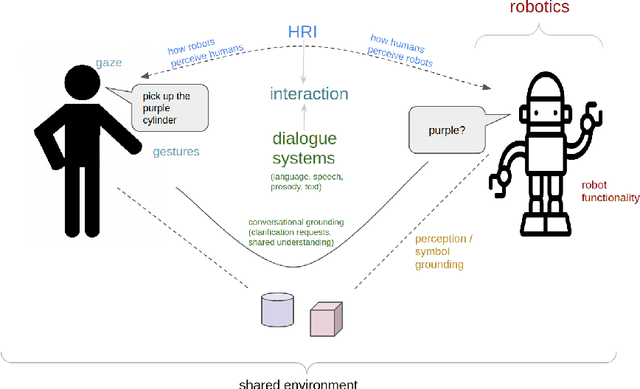

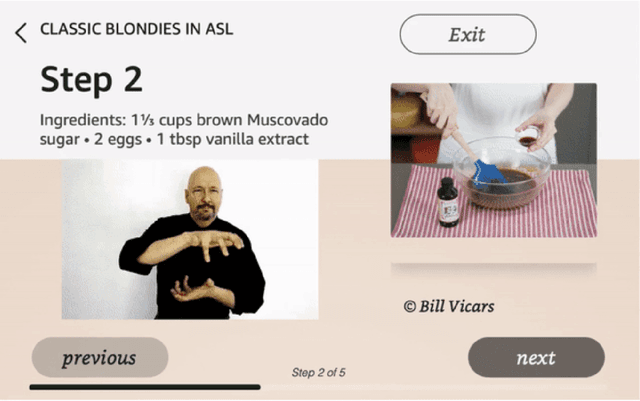

Dialogue with Robots: Proposals for Broadening Participation and Research in the SLIVAR Community

Apr 01, 2024

Abstract:The ability to interact with machines using natural human language is becoming not just commonplace, but expected. The next step is not just text interfaces, but speech interfaces and not just with computers, but with all machines including robots. In this paper, we chronicle the recent history of this growing field of spoken dialogue with robots and offer the community three proposals, the first focused on education, the second on benchmarks, and the third on the modeling of language when it comes to spoken interaction with robots. The three proposals should act as white papers for any researcher to take and build upon.

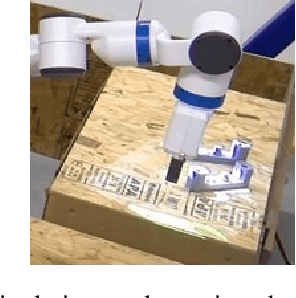

Mixed-Reality Robot Behavior Replay: A System Implementation

Sep 30, 2022

Abstract:As robots become increasingly complex, they must explain their behaviors to gain trust and acceptance. However, it may be difficult through verbal explanation alone to fully convey information about past behavior, especially regarding objects no longer present due to robots' or humans' actions. Humans often try to physically mimic past movements to accompany verbal explanations. Inspired by this human-human interaction, we describe the technical implementation of a system for past behavior replay for robots in this tool paper. Specifically, we used Behavior Trees to encode and separate robot behaviors, and schemaless MongoDB to structurally store and query the underlying sensor data and joint control messages for future replay. Our approach generalizes to different types of replays, including both manipulation and navigation replay, and visual (i.e., augmented reality (AR)) and auditory replay. Additionally, we briefly summarize a user study to further provide empirical evidence of its effectiveness and efficiency. Sample code and instructions are available on GitHub at https://github.com/umhan35/robot-behavior-replay.

* 6 pages, 5 figures, the AI-HRI Symposium at AAAI Fall Symposium Series (FSS) 2022

Proceedings of the AI-HRI Symposium at AAAI-FSS 2022

Sep 28, 2022

Abstract:The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration on AI theory and methods aimed at HRI since 2014. This year, after a review of the achievements of the AI-HRI community over the last decade in 2021, we are focusing on a visionary theme: exploring the future of AI-HRI. Accordingly, we added a Blue Sky Ideas track to foster a forward-thinking discussion on future research at the intersection of AI and HRI. As always, we appreciate all contributions related to any topic on AI/HRI and welcome new researchers who wish to take part in this growing community. With the success of past symposia, AI-HRI impacts a variety of communities and problems, and has pioneered the discussions in recent trends and interests. This year's AI-HRI Fall Symposium aims to bring together researchers and practitioners from around the globe, representing a number of university, government, and industry laboratories. In doing so, we hope to accelerate research in the field, support technology transition and user adoption, and determine future directions for our group and our research.

Causal Robot Communication Inspired by Observational Learning Insights

Mar 17, 2022

Abstract:Autonomous robots must communicate about their decisions to gain trust and acceptance. When doing so, robots must determine which actions are causal, i.e., which directly give rise to the desired outcome, so that these actions can be included in explanations. In behavior learning in psychology, this sort of reasoning during an action sequence has been studied extensively in the context of imitation learning. And yet, these techniques and empirical insights are rarely applied to human-robot interaction (HRI). In this work, we discuss the relevance of behavior learning insights for robot intent communication, and present the first application of these insights for a robot to efficiently communicate its intent by selectively explaining the causal actions in an action sequence.

Towards Formalizing HRI Data Collection Processes

Mar 16, 2022

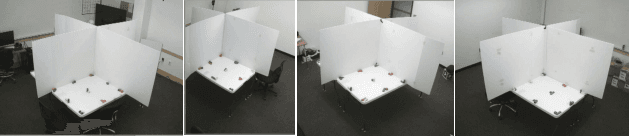

Abstract:Within the human-robot interaction (HRI) community, many researchers have focused on the careful design of human-subjects studies. However, other parts of the community, e.g., the technical advances community, also need to do human-subjects studies to collect data to train their models, in ways that require user studies but without a strict experimental design. The design of such data collection is an underexplored area worthy of more attention. In this work, we contribute a clearly defined three-step process for collecting data for machine learning modeling purposes, grounded in recent literature, and detail a use of this process to facilitate the collection of a corpus of referring expressions. Specifically, we discuss our data collection goal and how we worked to encourage well-covered and abundant participant responses, through our design of the task environment, the task itself, and the study procedure. We hope this work will lead to more data collection formalism efforts in the HRI community and a fruitful discussion during the workshop.

Projecting Robot Navigation Paths: Hardware and Software for Projected AR

Jan 06, 2022

Abstract:For mobile robots, mobile manipulators, and autonomous vehicles to safely navigate around populous places such as streets and warehouses, human observers must be able to understand their navigation intent. One way to enable such understanding is by visualizing this intent through projections onto the surrounding environment. But despite the demonstrated effectiveness of such projections, no open codebase with an integrated hardware setup exists. In this work, we detail the empirical evidence for the effectiveness of such directional projections, and share a robot-agnostic implementation of such projections, coded in C++ using the widely-used Robot Operating System (ROS) and rviz. Additionally, we demonstrate a hardware configuration for deploying this software, using a Fetch robot, and briefly summarize a full-scale user study that motivates this configuration. The code, configuration files (roslaunch and rviz files), and documentation are freely available on GitHub at https://github.com/umhan35/arrow_projection.

AI-HRI 2021 Proceedings

Sep 23, 2021

Abstract:The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration since 2014. During that time, these symposia provided a fertile ground for numerous collaborations and pioneered many discussions revolving around trust in HRI, XAI for HRI, service robots, interactive learning, and more. This year, we aim to review the achievements of the AI-HRI community in the last decade, identify the challenges ahead, and welcome new researchers who wish to take part in this growing community. Taking this wide perspective, this year there will be no single theme to lead the symposium and we encourage AI-HRI submissions from across disciplines and research interests. Moreover, with the rising interest in AR and VR as part of an interaction and following the difficulties in running physical experiments during the pandemic, this year we specifically encourage researchers to submit works that do not include a physical robot in their evaluation, but promote HRI research in general. In addition, acknowledging that ethics is an inherent part of the human-robot interaction, we encourage submissions of works on ethics for HRI. Over the course of the two-day meeting, we will host a collaborative forum for discussion of current efforts in AI-HRI, with additional talks focused on the topics of ethics in HRI and ubiquitous HRI.

Investigation of Multiple Resource Theory Design Principles on Robot Teleoperation and Workload Management

Mar 31, 2021

Abstract:Robot interfaces often only use the visual channel. Inspired by Wickens' Multiple Resource Theory, we investigated if the addition of audio elements would reduce cognitive workload and improve performance. Specifically, we designed a search and threat-defusal task (primary) with a memory test task (secondary). Eleven participants - predominantly first responders - were recruited to control a robot to clear all threats in a combination of four conditions of primary and secondary tasks in visual and auditory channels. We did not find any statistically significant differences in performance or workload across subjects, making it questionable that Multiple Resource Theory could shorten longer-term task completion time and reduce workload. Our results suggest that considering individual differences for splitting interface modalities across multiple channels requires further investigation.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2020

Nov 11, 2020

Abstract:The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration since 2014. In that time, the related topic of trust in robotics has been rapidly growing, with major research efforts at universities and laboratories across the world. Indeed, many of the past participants in AI-HRI have been or are now involved with research into trust in HRI. While trust has no consensus definition, it is regularly associated with predictability, reliability, inciting confidence, and meeting expectations. Furthermore, it is generally believed that trust is crucial for adoption of both AI and robotics, particularly when transitioning technologies from the lab to industrial, social, and consumer applications. However, how does trust apply to the specific situations we encounter in the AI-HRI sphere? Is the notion of trust in AI the same as that in HRI? We see a growing need for research that lives directly at the intersection of AI and HRI that is serviced by this symposium. Over the course of the two-day meeting, we propose to create a collaborative forum for discussion of current efforts in trust for AI-HRI, with a sub-session focused on the related topic of explainable AI (XAI) for HRI.
