Amir Yazdani

Proceedings of the AI-HRI Symposium at AAAI-FSS 2022

Sep 28, 2022

Abstract: The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue for discussion and collaboration on AI theory and methods aimed at HRI since 2014. This year, following the 2021 review of the AI-HRI community's achievements over the last decade, we are focusing on a visionary theme: exploring the future of AI-HRI. Accordingly, we added a Blue Sky Ideas track to foster forward-thinking discussion of future research at the intersection of AI and HRI. As always, we welcome contributions on any AI/HRI topic, as well as new researchers who wish to take part in this growing community. Through the success of past symposia, AI-HRI has had an impact on a variety of communities and problems and has pioneered discussion of recent trends and interests. This year's AI-HRI Fall Symposium aims to bring together researchers and practitioners from around the globe, representing university, government, and industry laboratories. In doing so, we hope to accelerate research in the field, support technology transition and user adoption, and determine future directions for our group and our research.

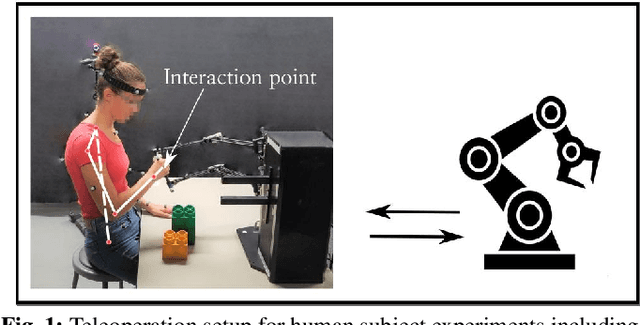

Occlusion-Robust Multi-Sensory Posture Estimation in Physical Human-Robot Interaction

Aug 12, 2022

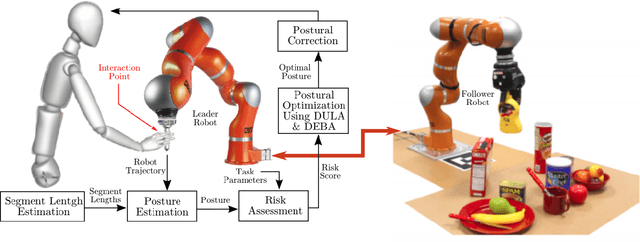

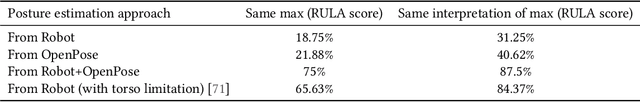

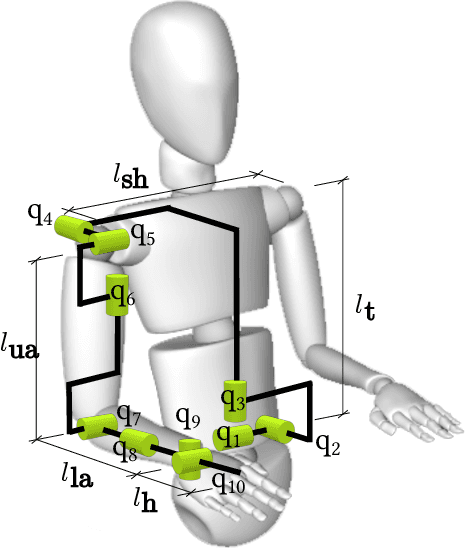

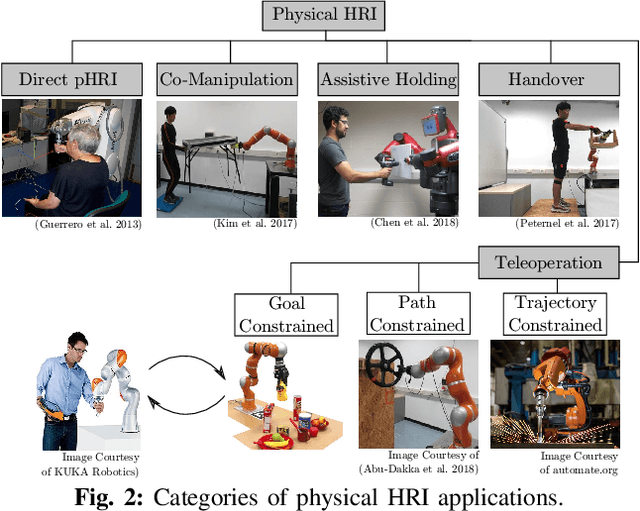

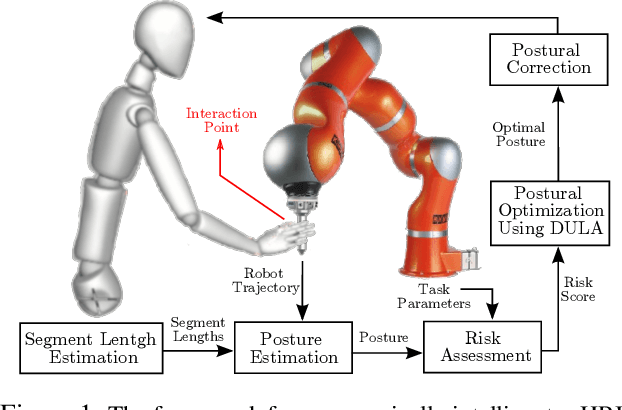

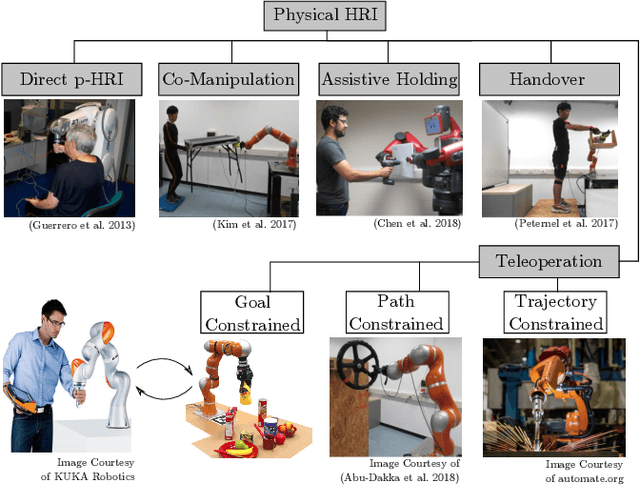

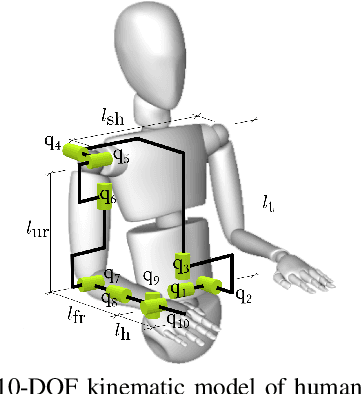

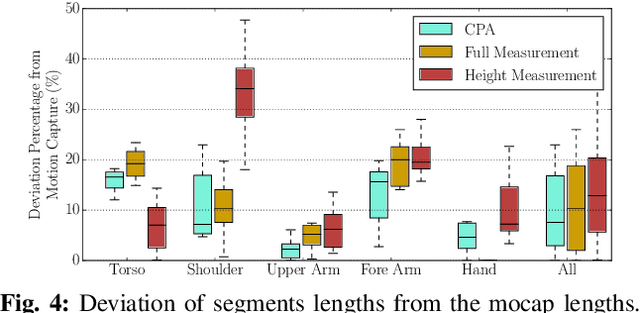

Abstract: 3D posture estimation is important for analyzing and improving ergonomics in physical human-robot interaction and for reducing the risk of musculoskeletal disorders. Vision-based posture estimation approaches are prone to sensor and model errors, as well as occlusion, while posture estimation solely from the interacting robot's trajectory suffers from ambiguous solutions. To combine the advantages of both approaches and mitigate their drawbacks, we introduce a low-cost, non-intrusive, and occlusion-robust multi-sensory 3D posture estimation algorithm for physical human-robot interaction. We use 2D postures from OpenPose applied to a single camera, together with the trajectory of the interacting robot while the human performs a task. We model the problem as a partially observable dynamical system and infer the 3D posture via a particle filter. We present our work in teleoperation, but it can be generalized to other applications of physical human-robot interaction. We show that our multi-sensory system resolves human kinematic redundancy better than posture estimation using only OpenPose or only the robot's trajectory, which increases the accuracy of the estimated postures relative to gold-standard motion capture postures. Moreover, our approach also outperforms single-sensory methods when the estimated postures are used for postural assessment with the RULA assessment tool.
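A minimal sketch of the fusion idea, assuming a planar two-link arm with known link lengths, the robot's end effector rigidly tracking the hand, and a single 2D elbow keypoint standing in for the OpenPose output. The random-walk motion model, the Gaussian noise levels, and all names (`forward_kinematics`, `particle_filter_step`, and so on) are hypothetical illustrations, not the authors' implementation, which estimates a 3D upper-body posture.

```python
import math
import random

# Hypothetical planar 2-link arm with the shoulder at the origin (lengths in m).
UPPER_ARM, FOREARM = 0.30, 0.25


def forward_kinematics(q1, q2):
    """Return (elbow, hand) positions for shoulder angle q1 and elbow angle q2 (rad)."""
    elbow = (UPPER_ARM * math.cos(q1), UPPER_ARM * math.sin(q1))
    hand = (elbow[0] + FOREARM * math.cos(q1 + q2),
            elbow[1] + FOREARM * math.sin(q1 + q2))
    return elbow, hand


def gaussian_likelihood(predicted, observed, sigma):
    """Unnormalized isotropic Gaussian likelihood of a 2D observation."""
    d2 = (predicted[0] - observed[0]) ** 2 + (predicted[1] - observed[1]) ** 2
    return math.exp(-0.5 * d2 / sigma ** 2)


def particle_filter_step(particles, hand_obs, elbow_obs=None,
                         sigma_hand=0.01, sigma_elbow=0.03, motion_noise=0.05):
    """One predict-update-resample cycle over (q1, q2) particles."""
    # Predict: random-walk motion model on the joint angles.
    moved = [(q1 + random.gauss(0.0, motion_noise), q2 + random.gauss(0.0, motion_noise))
             for q1, q2 in particles]
    # Update: the robot's end effector observes the hand; the camera, if given, the elbow.
    weights = []
    for q1, q2 in moved:
        elbow, hand = forward_kinematics(q1, q2)
        w = gaussian_likelihood(hand, hand_obs, sigma_hand)
        if elbow_obs is not None:
            w *= gaussian_likelihood(elbow, elbow_obs, sigma_elbow)
        weights.append(w + 1e-300)  # keep every weight strictly positive
    # Resample with replacement in proportion to the weights.
    return random.choices(moved, weights=weights, k=len(moved))


def estimate(particles):
    """Posterior-mean posture; only meaningful when the particle cloud is unimodal."""
    n = len(particles)
    return (sum(q1 for q1, _ in particles) / n, sum(q2 for _, q2 in particles) / n)


if __name__ == "__main__":
    random.seed(0)
    elbow_true, hand_true = forward_kinematics(0.6, 0.9)  # simulated ground truth
    particles = [(random.uniform(-math.pi, math.pi), random.uniform(-math.pi, math.pi))
                 for _ in range(2000)]
    for _ in range(40):
        particles = particle_filter_step(particles, hand_true, elbow_true)
    print(estimate(particles))
```

Given only the hand observation, the particles can split between the elbow-up and elbow-down postures that place the hand at the same point, and their mean is then meaningless; multiplying in the elbow likelihood suppresses the mirrored branch, a toy version of the redundancy resolution described in the abstract.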

DULA and DEBA: Differentiable Ergonomic Risk Models for Postural Assessment and Optimization in Ergonomically Intelligent pHRI

May 06, 2022

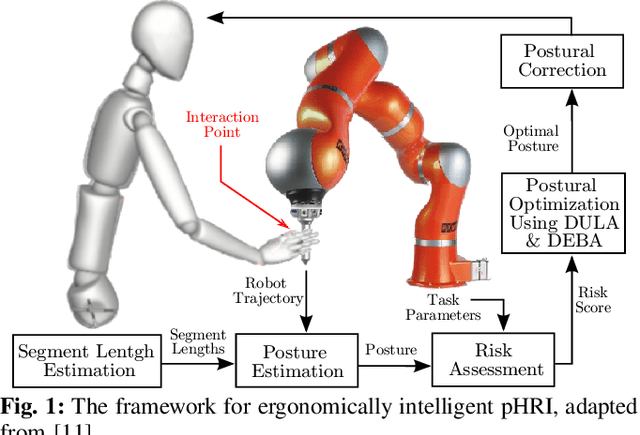

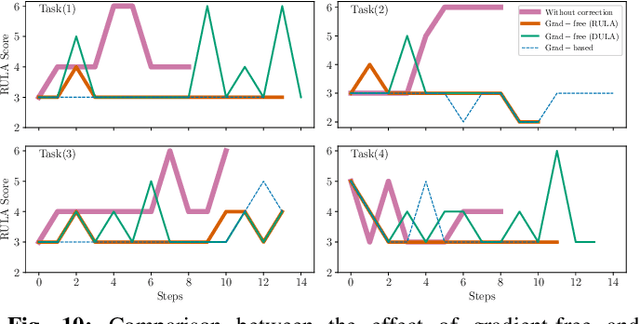

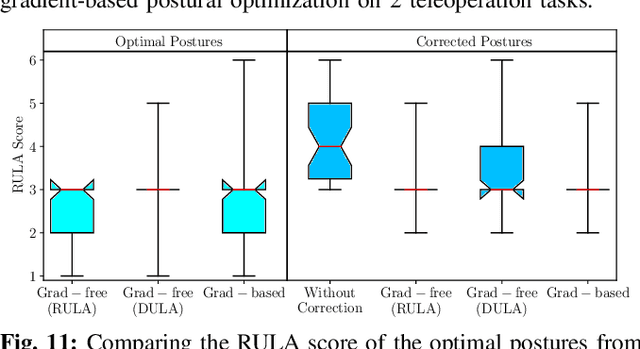

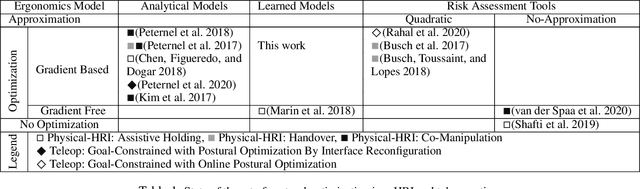

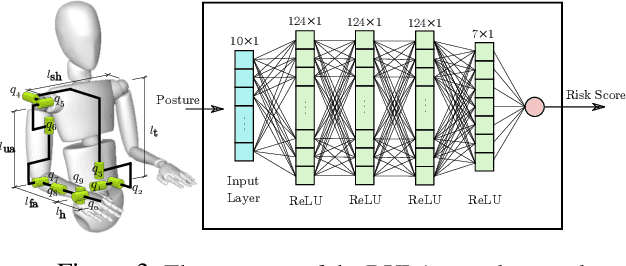

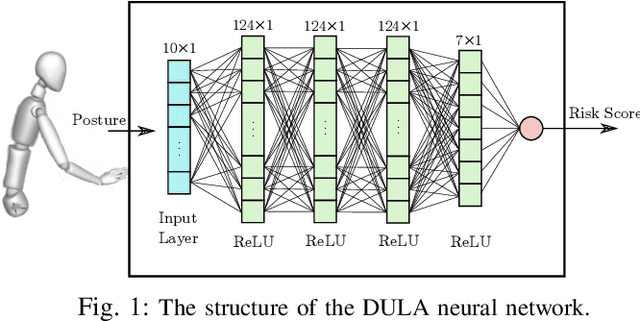

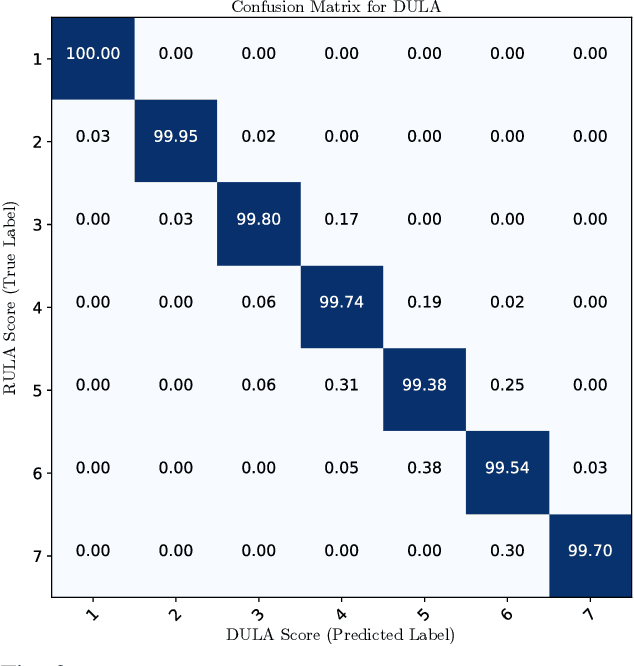

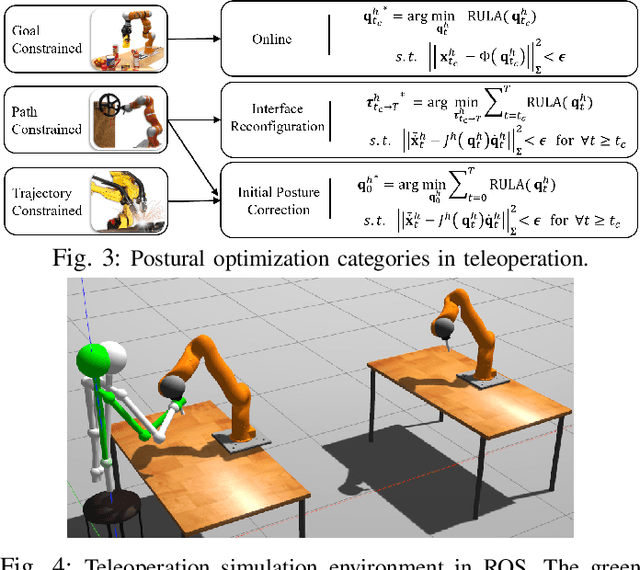

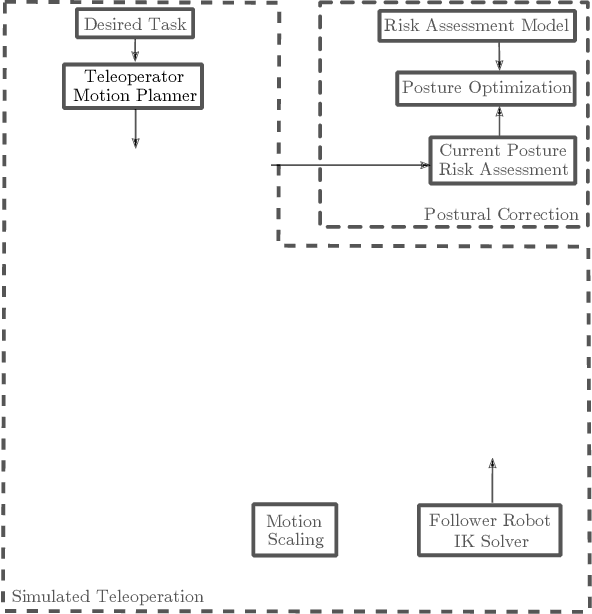

Abstract: Ergonomics and human comfort are essential concerns in physical human-robot interaction applications. Defining an accurate and easy-to-use ergonomic assessment model is an important step toward providing feedback for postural correction to improve operator health and comfort. Common practical methods in the area rely on inaccurate ergonomics models when performing postural optimization. To retain assessment quality while improving computational efficiency, we propose a novel framework for postural assessment and optimization for ergonomically intelligent physical human-robot interaction. We introduce DULA and DEBA, differentiable and continuous ergonomics models learned to replicate the popular and scientifically validated RULA and REBA assessments with more than 99% accuracy. We show that DULA and DEBA provide assessments comparable to RULA and REBA while offering computational benefits when used in postural optimization. We evaluate our framework through human and simulation experiments, and we highlight the strengths of DULA and DEBA in a demonstration of postural optimization for a simulated pHRI task.
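DULA and DEBA are learned models, so the sketch below does not reproduce them. It only illustrates, under stated assumptions, why a continuous surrogate matters for optimization: a RULA-style score is piecewise constant in the joint angle, so its finite-difference gradient is zero between thresholds, while a smooth surrogate returns an informative slope. The step function follows the thresholds of the RULA upper-arm item with its adjustments omitted; the sigmoid relaxation, its `temperature`, and the function names are hypothetical.

```python
import math


def step_upper_arm_score(angle_deg):
    """RULA-style upper-arm item (flexion in degrees, negative = extension).

    Adjustments such as a raised shoulder, abduction, or arm support are ignored.
    """
    if angle_deg < -20:
        return 2          # more than 20 degrees of extension
    if angle_deg <= 20:
        return 1          # 20 degrees extension to 20 degrees flexion
    if angle_deg <= 45:
        return 2          # 20 to 45 degrees flexion
    if angle_deg <= 90:
        return 3          # 45 to 90 degrees flexion
    return 4              # more than 90 degrees flexion


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def smooth_upper_arm_score(angle_deg, temperature=3.0):
    """Hypothetical sigmoid relaxation of the step score (NOT the learned DULA model)."""
    t = temperature
    return (1.0
            + sigmoid((-20.0 - angle_deg) / t)   # extension beyond 20 degrees
            + sigmoid((angle_deg - 20.0) / t)    # flexion beyond 20 degrees
            + sigmoid((angle_deg - 45.0) / t)    # flexion beyond 45 degrees
            + sigmoid((angle_deg - 90.0) / t))   # flexion beyond 90 degrees


def central_difference(f, x, h=0.5):
    """Numerical derivative of a scalar function of one variable."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


if __name__ == "__main__":
    for angle in (0.0, 30.0, 60.0, 100.0):
        print(angle, step_upper_arm_score(angle),
              round(smooth_upper_arm_score(angle), 3),
              central_difference(step_upper_arm_score, angle),
              round(central_difference(smooth_upper_arm_score, angle), 4))
```

For example, `central_difference(step_upper_arm_score, 30.0)` is 0.0, while the smooth version has a small positive slope at the same angle, which a gradient-based optimizer can follow.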

Ergonomically Intelligent Physical Human-Robot Interaction: Postural Estimation, Assessment, and Optimization

Aug 12, 2021

Abstract: Ergonomics and human comfort are essential concerns in physical human-robot interaction applications, and common practical methods either fail to estimate the correct posture due to occlusion or suffer from less accurate ergonomics models in their postural optimization methods. Instead, we propose a novel framework for posture estimation, assessment, and optimization for ergonomically intelligent physical human-robot interaction. We show that we can estimate human posture solely from the trajectory of the interacting robot. We propose DULA, a differentiable ergonomics model, and use it in gradient-free postural optimization for physical human-robot interaction tasks such as co-manipulation and teleoperation. We evaluate our framework through human and simulation experiments.

DULA: A Differentiable Ergonomics Model for Postural Optimization in Physical HRI

Jul 14, 2021

Abstract: Ergonomics and human comfort are essential concerns in physical human-robot interaction applications. Defining an accurate and easy-to-use ergonomic assessment model is an important step toward providing feedback for postural correction to improve operator health and comfort. To enable efficient computation, previously proposed automated ergonomic assessment and correction tools make approximations or simplifications to the gold-standard assessment tools used by ergonomists in practice. To retain assessment quality while improving computational efficiency, we introduce DULA, a differentiable and continuous ergonomics model learned to replicate the popular and scientifically validated RULA assessment. We show that DULA provides assessments comparable to RULA while offering computational benefits. We highlight DULA's strength in a demonstration of gradient-based postural optimization for a simulated teleoperation task.
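A minimal sketch of gradient-based postural optimization, assuming a sagittal-plane arm with hypothetical segment lengths, a task that only fixes the hand height (so the posture stays redundant), and a hand-built sigmoid relaxation of the RULA upper-arm and lower-arm items in place of the learned DULA network. Gradients are taken by central differences here; with a neural surrogate they would come from backpropagation. All constants and names are illustrative assumptions.

```python
import math

L_UPPER, L_FORE = 0.30, 0.25   # hypothetical segment lengths (m)
TARGET_HEIGHT = -0.27          # hypothetical hand height required by the task (m, shoulder at 0)
TASK_WEIGHT = 1000.0           # hypothetical penalty weight on the height error


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def smooth_score(shoulder_deg, elbow_deg, t=5.0):
    """Sigmoid relaxation of RULA-style upper-arm and lower-arm items (stand-in for DULA)."""
    upper = (1.0 + sigmoid((-20.0 - shoulder_deg) / t) + sigmoid((shoulder_deg - 20.0) / t)
             + sigmoid((shoulder_deg - 45.0) / t) + sigmoid((shoulder_deg - 90.0) / t))
    # Lower arm: lowest score between 60 and 100 degrees of elbow flexion.
    lower = 1.0 + sigmoid((60.0 - elbow_deg) / t) + sigmoid((elbow_deg - 100.0) / t)
    return upper + lower


def hand_height(shoulder_deg, elbow_deg):
    """Hand height; angles are flexion measured from the arm hanging straight down."""
    s = math.radians(shoulder_deg)
    e = math.radians(elbow_deg)
    return -L_UPPER * math.cos(s) - L_FORE * math.cos(s + e)


def cost(x):
    """Ergonomic score plus a quadratic penalty for missing the task height."""
    shoulder, elbow = x
    height_error = hand_height(shoulder, elbow) - TARGET_HEIGHT
    return smooth_score(shoulder, elbow) + TASK_WEIGHT * height_error ** 2


def gradient(f, x, h=1e-4):
    """Central-difference gradient of f at x."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((f(xp) - f(xm)) / (2.0 * h))
    return g


def optimize_posture(x0, step=1.0, iterations=1000):
    """Plain gradient descent on (shoulder, elbow) angles in degrees."""
    x = list(x0)
    for _ in range(iterations):
        g = gradient(cost, x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


if __name__ == "__main__":
    start = [70.0, 30.0]
    best = optimize_posture(start)
    print(start, round(cost(start), 3), [round(v, 1) for v in best], round(cost(best), 3))
```

Starting from `[70.0, 30.0]`, each update lowers the weighted sum of the smooth score and the height penalty; the posture it settles on depends on `TASK_WEIGHT`, the sigmoid temperature, and the step size.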

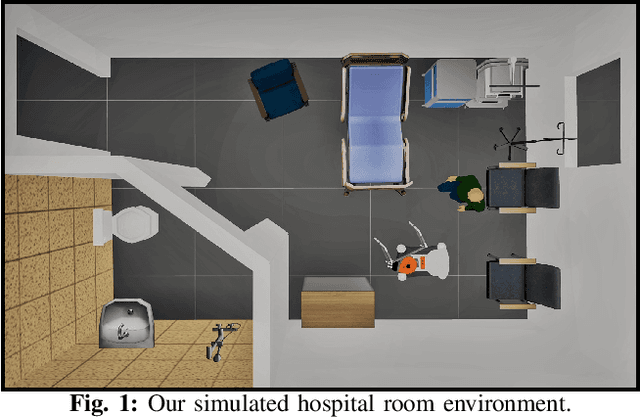

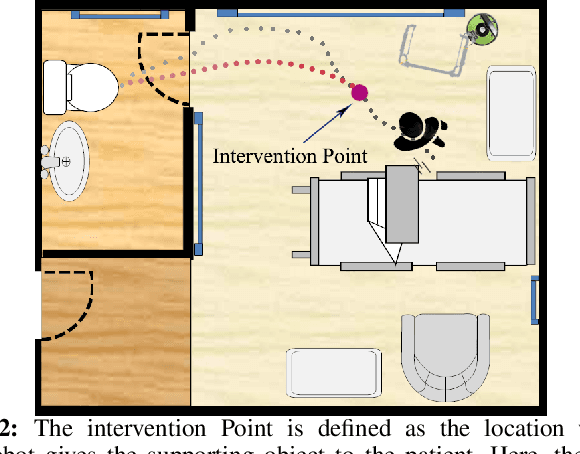

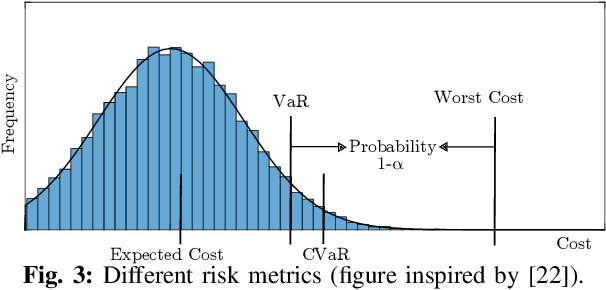

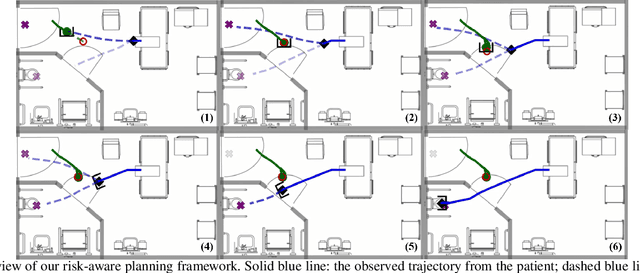

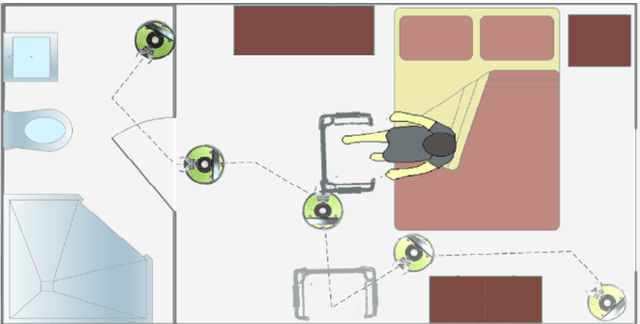

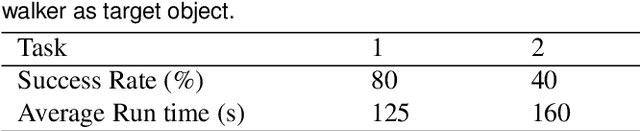

Risk-Aware Decision Making in Service Robots to Minimize Risk of Patient Falls in Hospitals

Oct 16, 2020

Abstract: Planning under uncertainty is a crucial capability for autonomous systems to operate reliably in uncertain and dynamic environments. Patient safety becomes even more critical in healthcare settings where robots interact with humans. In this paper, we propose a novel risk-aware planning framework that minimizes the risk of patient falls by providing a patient with an assistive device. Our approach combines learning-based prediction with model-based control to plan fall-prevention tasks. This offers advantages over end-to-end learning methods, in which the robot's performance is limited to specific scenarios, and over purely model-based approaches, which use relatively simple function approximators and are prone to large modeling errors. We compare two risk metrics and their combination, and we report results from various simulated scenarios. The results show that, using the proposed cost function, the robot can plan interventions that avoid high fall-score events.
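The abstract does not name the two risk metrics, so the following is only a hypothetical illustration of comparing two metrics and their combination: expected value and conditional value at risk (CVaR) over sampled fall-score predictions for each candidate intervention. The learned predictor that would produce these samples is not modeled, and the plan names, sample values, `alpha`, and `weight` are made up.

```python
import math


def expected_value(samples):
    """Mean predicted fall score (risk-neutral)."""
    return sum(samples) / len(samples)


def cvar(samples, alpha=0.9):
    """Conditional value at risk: mean of the worst (1 - alpha) fraction of samples."""
    ordered = sorted(samples, reverse=True)  # highest fall scores first
    k = max(1, math.ceil((1.0 - alpha) * len(ordered) - 1e-9))
    return sum(ordered[:k]) / k


def combined_risk(samples, weight=0.5, alpha=0.9):
    """Convex combination of the two metrics (weight is a hypothetical tuning knob)."""
    return weight * expected_value(samples) + (1.0 - weight) * cvar(samples, alpha)


def choose_intervention(predicted_scores, risk=combined_risk):
    """Pick the candidate plan whose sampled fall scores have the lowest risk."""
    return min(predicted_scores, key=lambda plan: risk(predicted_scores[plan]))


if __name__ == "__main__":
    # Hypothetical sampled fall-score predictions for two candidate plans.
    plans = {
        "wait": [0.1] * 9 + [0.9],   # usually safe, occasionally very risky
        "bring_walker": [0.3] * 10,  # moderately risky, but consistently so
    }
    for metric in (expected_value, cvar, combined_risk):
        print(metric.__name__, choose_intervention(plans, risk=metric))
```

With these made-up samples, the risk-neutral mean prefers `wait` (0.18 versus 0.3), while CVaR and the 50/50 combination prefer `bring_walker`, because they penalize the rare 0.9 outcome.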

Estimating Human Teleoperator Posture Using Only a Haptic-Input Device

Mar 02, 2020

Abstract: Ergonomic analysis of human posture plays a vital role in understanding long-term, work-related safety and health. Current analysis is often hindered by difficulties in estimating human posture. We introduce a new approach to human posture estimation for teleoperation tasks that relies solely on a haptic-input device for generating observations. We model the human upper body as a redundant, partially observable dynamical system. This allows us to naturally formulate the estimation problem as probabilistic inference and to solve it using a standard particle filter. We show that our approach accurately estimates the posture of different human users without knowing their specific segment lengths. We evaluate our posture estimation approach by comparing its estimates with human posture estimates from a commercial motion capture system. Our results show that the proposed algorithm successfully estimates human posture based only on the trajectory of the haptic-input device stylus. We additionally show that ergonomic risk estimates derived from our posture estimation approach are comparable to those derived from gold-standard, motion-capture-based pose estimates.
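Why inference rather than direct inverse kinematics: the stylus pose alone does not determine the posture. The sketch below, assuming a hypothetical planar three-link chain (upper arm, forearm, hand-to-stylus) with made-up lengths, constructs a second posture that places the stylus at exactly the same position and orientation by flipping the elbow and compensating at the wrist. A real upper-body model has more joints and typically a continuum of such solutions, which motivates treating estimation as probabilistic inference.

```python
import math

# Hypothetical planar 3-link chain: upper arm, forearm, hand-to-stylus (m).
LENGTHS = (0.30, 0.25, 0.08)


def stylus_pose(q):
    """Return the stylus tip (x, y) and its orientation for joint angles q (rad)."""
    x = y = angle = 0.0
    for length, qi in zip(LENGTHS, q):
        angle += qi  # absolute orientation of the current link
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y, angle


def mirrored_posture(q):
    """Return a different posture with the same stylus tip and orientation.

    The wrist point is reached with the elbow flipped (q2 -> -q2); the wrist
    angle then compensates so that the stylus orientation is unchanged.
    """
    q1, q2, q3 = q
    l1, l2, _ = LENGTHS
    # Angle of the wrist point relative to the upper-arm direction.
    delta = math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    q1_new = q1 + 2.0 * delta
    q2_new = -q2
    q3_new = (q1 + q2 + q3) - (q1_new + q2_new)
    return q1_new, q2_new, q3_new


if __name__ == "__main__":
    q = (0.4, 0.8, -0.3)
    q_alt = mirrored_posture(q)
    print(q, stylus_pose(q))
    print(q_alt, stylus_pose(q_alt))
```

Both postures print the same stylus pose (up to floating-point rounding) while their joint angles differ, so a stylus trajectory has to be combined with a body model and prior assumptions to select a likely posture.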

A Model Predictive Approach for Online Mobile Manipulation of Nonholonomic Objects using Learned Dynamics

Dec 19, 2019

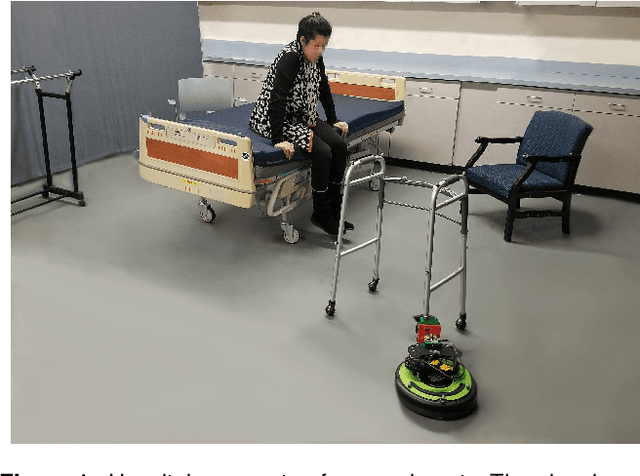

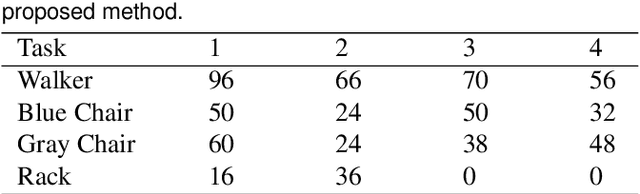

Abstract: A particular type of assistive robot, designed for physical interaction with objects, could play an important role in assisting with mobility and fall prevention in healthcare facilities. Autonomous mobile manipulation remains a hurdle to safely using robots in real-life applications. In this article, we introduce a mobile manipulation framework based on model predictive control using learned dynamics models of objects. We focus on the specific problem of manipulating legged objects such as those commonly found in healthcare environments and personal dwellings (e.g., walkers, tables, chairs). We describe a probabilistic method for autonomously learning an approximate dynamics model for these objects. In this method, we learn dynamic parameters using a small dataset consisting of force and motion data from interactions between the robot and the object. Moreover, we account for multiple manipulation strategies by formulating manipulation planning as a mixed-integer convex optimization. The proposed framework considers a hybrid control system composed of i) choosing which leg to grasp and ii) controlling the continuous forces applied for manipulation. We formalize our algorithm based on model predictive control to compensate for modeling errors and to find an optimal path that manipulates the object from one configuration to another. We show results for several objects with various wheel configurations. Simulation and physical experiments show that the obtained dynamics models are sufficiently accurate for safe and collision-free manipulation. When combined with the proposed manipulation planning algorithm, the robot successfully moves the object to a desired pose while avoiding collisions.
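The abstract does not give the form of the learned dynamics model. As a hypothetical stand-in, the sketch assumes a one-dimensional push model F = m*a + b*v (effective mass m, viscous friction b) and fits it with conjugate Bayesian linear regression, a standard probabilistic method that returns a posterior mean and covariance from a small force and motion dataset. The model, prior, noise level, and synthetic data are illustrative assumptions, not the paper's.

```python
import math


def fit_dynamics(accels, velocities, forces, noise_std=0.5, prior_std=10.0):
    """Bayesian linear regression for F = m * a + b * v with (m, b) ~ N(0, prior_std^2 I).

    Assumes Gaussian force noise with known noise_std. Returns the posterior
    mean (m, b) and the 2x2 posterior covariance.
    """
    noise_var = noise_std ** 2
    prior_prec = 1.0 / prior_std ** 2
    # Posterior precision A = prior_prec * I + X^T X / noise_var, with rows x = (a, v).
    a11, a12, a22 = prior_prec, 0.0, prior_prec
    r1 = r2 = 0.0  # accumulates X^T F / noise_var
    for a, v, f in zip(accels, velocities, forces):
        a11 += a * a / noise_var
        a12 += a * v / noise_var
        a22 += v * v / noise_var
        r1 += a * f / noise_var
        r2 += v * f / noise_var
    # Invert the symmetric 2x2 precision matrix to get the covariance.
    det = a11 * a22 - a12 * a12
    cov = [[a22 / det, -a12 / det], [-a12 / det, a11 / det]]
    mean = (cov[0][0] * r1 + cov[0][1] * r2, cov[1][0] * r1 + cov[1][1] * r2)
    return mean, cov


if __name__ == "__main__":
    # Synthetic, noise-free pushes from a hypothetical object with m = 12 kg, b = 4 N*s/m.
    accels = [0.5, -0.3, 0.8, 0.1, -0.6, 0.4, 0.2, -0.1]
    velocities = [0.1, 0.4, 0.3, 0.7, 0.2, 0.6, 0.5, 0.8]
    forces = [12.0 * a + 4.0 * v for a, v in zip(accels, velocities)]
    (m_hat, b_hat), cov = fit_dynamics(accels, velocities, forces)
    print(f"m = {m_hat:.2f} +/- {math.sqrt(cov[0][0]):.2f} kg, "
          f"b = {b_hat:.2f} +/- {math.sqrt(cov[1][1]):.2f} N*s/m")
```

The posterior covariance quantifies how well the small dataset pins down each parameter, which is the kind of information a probabilistic model adds over a single least-squares fit.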
