Jason R. Wilson

HRI Curriculum for a Liberal Arts Education

Mar 20, 2024

Abstract: In this paper, we discuss the opportunities and challenges of teaching a human-robot interaction course at an undergraduate liberal arts college. We provide a sample syllabus adapted from a previous version of a course.

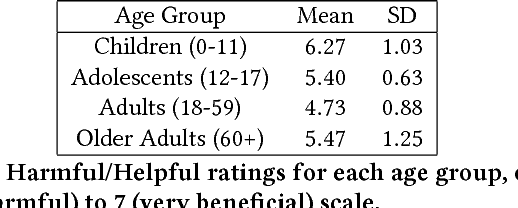

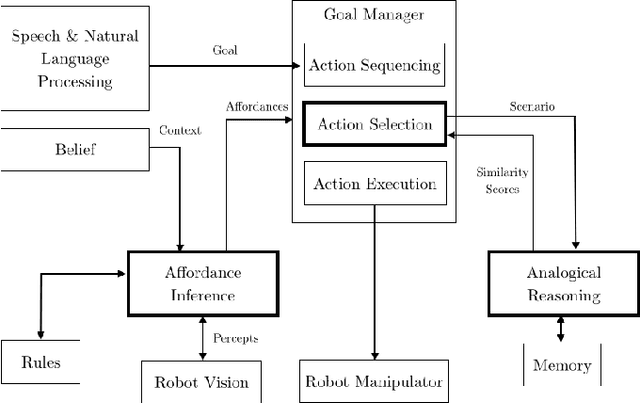

Human Autonomy as a Design Principle for Socially Assistive Robots

Nov 12, 2022

Abstract: High levels of robot autonomy are a common goal, but there is a significant risk that the greater the autonomy of the robot, the lesser the autonomy of the human working with the robot. For vulnerable populations like older adults who already have a diminished level of autonomy, this is an even greater concern. We propose that human autonomy needs to be at the center of the design for socially assistive robots. Towards this goal, we define autonomy and then provide architectural requirements for social robots to support the user's autonomy. As an example of a design effort, we describe some of the features of our Assist architecture.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2022

Sep 28, 2022

Abstract: The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration on AI theory and methods aimed at HRI since 2014. This year, following the 2021 review of the AI-HRI community's achievements over the last decade, we are focusing on a visionary theme: exploring the future of AI-HRI. Accordingly, we added a Blue Sky Ideas track to foster a forward-thinking discussion on future research at the intersection of AI and HRI. As always, we appreciate all contributions related to any topic on AI/HRI and welcome new researchers who wish to take part in this growing community. With the success of past symposia, AI-HRI impacts a variety of communities and problems, and has pioneered the discussions in recent trends and interests. This year's AI-HRI Fall Symposium aims to bring together researchers and practitioners from around the globe, representing a number of university, government, and industry laboratories. In doing so, we hope to accelerate research in the field, support technology transition and user adoption, and determine future directions for our group and our research.

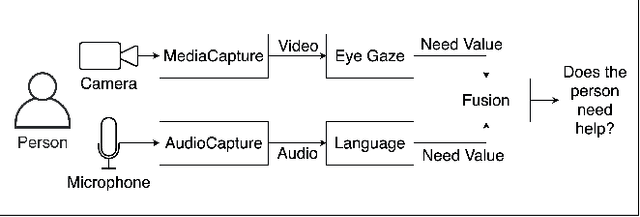

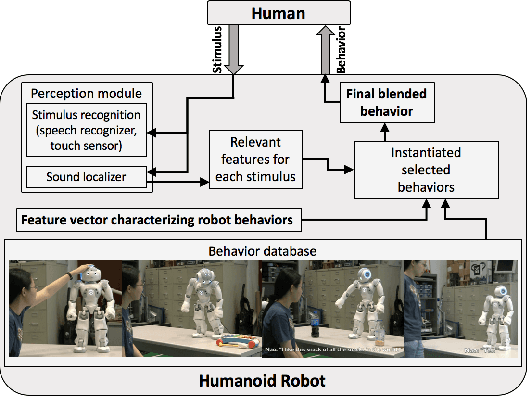

Enabling a Social Robot to Process Social Cues to Detect when to Help a User

Oct 18, 2021

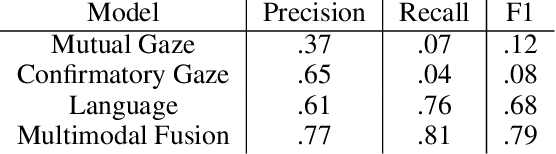

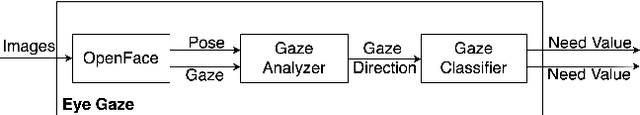

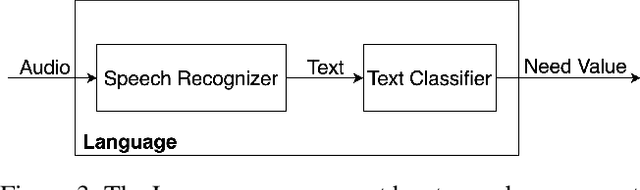

Abstract: It is important for socially assistive robots to be able to recognize when a user needs and wants help. Such robots need to be able to recognize human needs in real time so that they can provide timely assistance. We propose an architecture that uses social cues to determine when a robot should provide assistance. Using a multimodal fusion of eye gaze and language modalities, our architecture is trained and evaluated on data collected in a robot-assisted Lego building task. By focusing on social cues, our architecture has minimal dependencies on the specifics of a given task, enabling it to be applied in many different contexts. Enabling a social robot to recognize a user's needs through social cues can help it to adapt to user behaviors and preferences, which in turn will lead to improved user experiences.

Supporting User Autonomy with Multimodal Fusion to Detect when a User Needs Assistance from a Social Robot

Dec 07, 2020

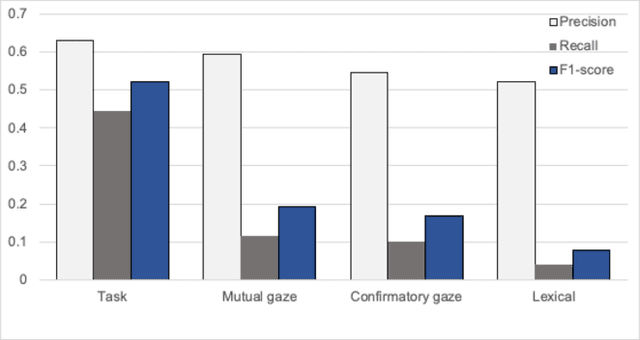

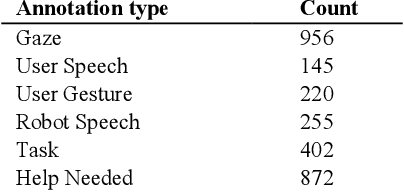

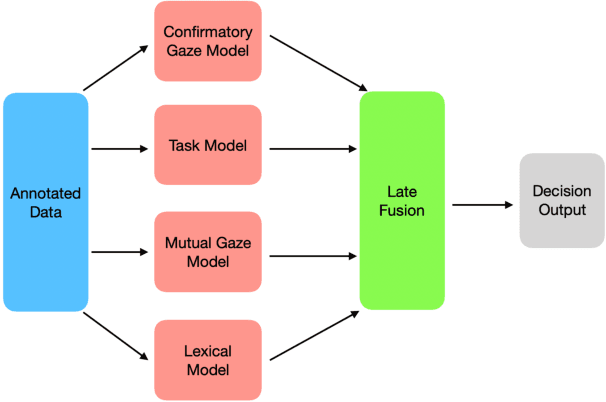

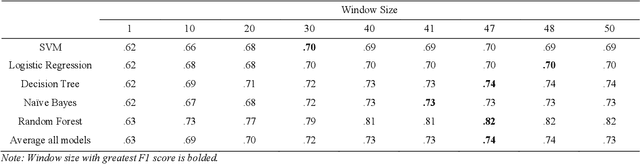

Abstract: It is crucial for any assistive robot to prioritize the autonomy of the user. For a robot working in a task setting to effectively maintain a user's autonomy, it must provide timely assistance and make accurate decisions. We use four independent high-precision, low-recall models (a mutual gaze model, a task model, a confirmatory gaze model, and a lexical model) that predict a user's need for assistance. Improving upon our four independent models, we used a sliding window method and a random forest classification algorithm to capture temporal dependencies and fuse the independent models with a late fusion approach. The late fusion approach strongly outperforms all four of the independent models, providing a more holistic approach with greater accuracy to better assist the user while maintaining their autonomy. These results can provide insight into the potential of including additional modalities and utilizing assistive robots in more task settings.
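The sliding window step mentioned in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `sliding_window_features`, the zero padding, the window size, and the example values are all assumptions made for illustration. The sketch only shows how per-time-step outputs of four independent models might be stacked over a short window so that a downstream classifier sees some temporal context.

```python
from typing import List, Sequence


def sliding_window_features(
    frames: Sequence[Sequence[int]], window: int
) -> List[List[int]]:
    """Concatenate the last `window` frames of per-model outputs into one vector.

    frames[t] holds the binary outputs of the independent models at time step t.
    Early time steps are left-padded with zeros (an assumption of this sketch)
    so that every feature vector has the same length.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    n_models = len(frames[0]) if frames else 0
    padding = [[0] * n_models for _ in range(window - 1)]
    padded = padding + [list(frame) for frame in frames]
    features = []
    for t in range(len(frames)):
        # The window ending at time step t, flattened oldest-first.
        chunk = padded[t : t + window]
        features.append([value for frame in chunk for value in frame])
    return features


# Hypothetical per-step outputs, ordered as
# (mutual gaze, task, confirmatory gaze, lexical); 1 means "needs help".
frames = [
    (0, 0, 0, 0),
    (1, 0, 0, 0),
    (1, 0, 1, 0),
    (0, 1, 1, 1),
]
features = sliding_window_features(frames, window=2)
for vector in features:
    print(vector)
```

Each resulting vector could then be passed, together with a ground-truth label, to a late-fusion classifier such as a random forest; that training step is omitted here because the abstract does not give its details.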

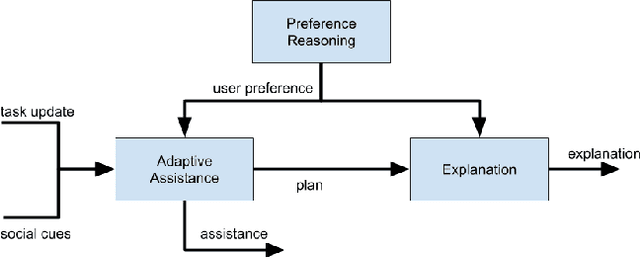

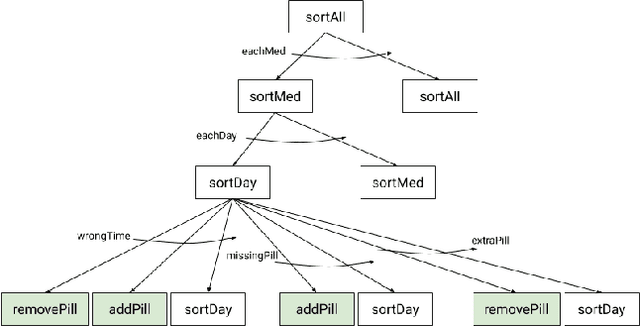

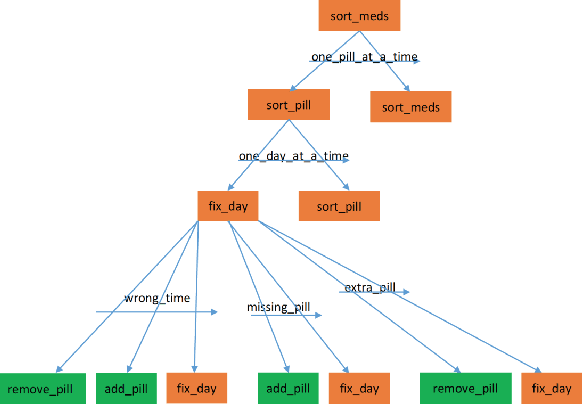

A Knowledge Driven Approach to Adaptive Assistance Using Preference Reasoning and Explanation

Dec 05, 2020

Abstract: There is a need for socially assistive robots (SARs) to provide transparency in their behavior by explaining their reasoning. Additionally, the reasoning and explanation should represent the user's preferences and goals. To work towards satisfying this need for interpretable reasoning and representations, we propose that the robot use Analogical Theory of Mind to infer what the user is trying to do and use the Hint Engine to find appropriate assistance based on that inference. If the user is unsure or confused, the robot provides the user with an explanation, generated by the Explanation Synthesizer. The explanation helps the user understand what the robot inferred about the user's preferences and why the robot decided to provide the assistance it gave. A knowledge-driven approach provides transparency to reasoning about preferences, assistance, and explanations, thereby facilitating the incorporation of user feedback and allowing the robot to learn and adapt to the user.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2020

Nov 11, 2020

Abstract: The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration since 2014. In that time, the related topic of trust in robotics has been rapidly growing, with major research efforts at universities and laboratories across the world. Indeed, many of the past participants in AI-HRI have been or are now involved with research into trust in HRI. While trust has no consensus definition, it is regularly associated with predictability, reliability, inciting confidence, and meeting expectations. Furthermore, it is generally believed that trust is crucial for adoption of both AI and robotics, particularly when transitioning technologies from the lab to industrial, social, and consumer applications. However, how does trust apply to the specific situations we encounter in the AI-HRI sphere? Is the notion of trust in AI the same as that in HRI? We see a growing need for research that lives directly at the intersection of AI and HRI that is serviced by this symposium. Over the course of the two-day meeting, we propose to create a collaborative forum for discussion of current efforts in trust for AI-HRI, with a sub-session focused on the related topic of explainable AI (XAI) for HRI.

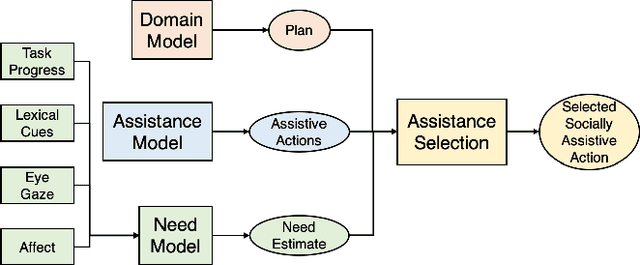

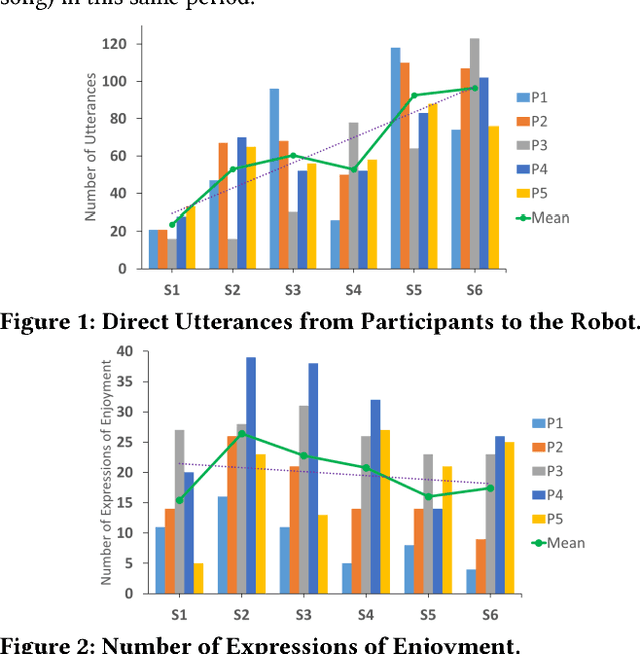

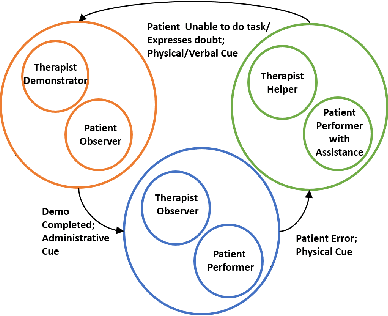

Developing Computational Models of Social Assistance to Guide Socially Assistive Robots

Sep 14, 2019

Abstract: While there are many examples in which robots provide social assistance, a lack of theory on how the robots should decide how to assist impedes progress in realizing these technologies. To address this deficiency, we propose a pair of computational models to guide a robot as it provides social assistance. The model of social autonomy helps a robot select an appropriate assistance that will help with the task at hand while also maintaining the autonomy of the person being assisted. The model of social alliance describes how to determine whether the robot and the person being assisted are cooperatively working towards the same goal. Each of these models is rooted in social reasoning between people, and we describe here our ongoing work to adapt this social reasoning to human-robot interactions.

Proceedings of the Workshop on Social Robots in Therapy: Focusing on Autonomy and Ethical Challenges

Dec 18, 2018

Abstract: Robot-Assisted Therapy (RAT) has successfully been used in HRI research by including social robots in health-care interventions by virtue of their ability to engage human users in both social and emotional dimensions. Research projects on this topic exist all over the globe in the USA, Europe, and Asia. All of these projects have the overall ambitious goal to increase the well-being of a vulnerable population. Typical work in RAT is performed using remote-controlled robots, a technique called Wizard-of-Oz (WoZ). The robot is usually controlled, unbeknownst to the patient, by a human operator. However, WoZ has been demonstrated to not be a sustainable technique in the long term. Providing the robots with autonomy (while remaining under the supervision of the therapist) has the potential to lighten the therapist's burden, not only in the therapeutic session itself but also in longer-term diagnostic tasks. Therefore, there is a need for exploring several degrees of autonomy in social robots used in therapy. Increasing the autonomy of robots might also bring about a new set of challenges. In particular, there will be a need to answer new ethical questions regarding the use of robots with a vulnerable population, as well as a need to ensure ethically compliant robot behaviours. Therefore, in this workshop we want to gather findings and explore which degree of autonomy might help to improve health-care interventions and how we can overcome the ethical challenges inherent to it.

Enabling Basic Normative HRI in a Cognitive Robotic Architecture

Feb 11, 2016

Abstract: Collaborative human activities are grounded in social and moral norms, which humans consciously and subconsciously use to guide and constrain their decision-making and behavior, thereby strengthening their interactions and preventing emotional and physical harm. This type of norm-based processing is also critical for robots in many human-robot interaction scenarios (e.g., when helping elderly and disabled persons in assisted living facilities, or assisting humans in assembly tasks in factories or even the space station). In this position paper, we will briefly describe how several components in an integrated cognitive architecture can be used to implement processes that are required for normative human-robot interactions, especially in collaborative tasks where actions and situations could potentially be perceived as threatening and thus need a change in course of action to mitigate the perceived threats.
