Emily Jensen

Large Language Models Enable Automated Formative Feedback in Human-Robot Interaction Tasks

May 25, 2024

Abstract: We claim that LLMs can be paired with formal analysis methods to provide accessible, relevant feedback for HRI tasks. While logic specifications are useful for defining and assessing a task, these representations are not easily interpreted by non-experts. Luckily, LLMs are adept at generating easy-to-understand text that explains difficult concepts. By integrating task assessment outcomes and other contextual information into an LLM prompt, we can effectively synthesize a useful set of recommendations for the learner to improve their performance.

Automated Assessment and Adaptive Multimodal Formative Feedback Improves Psychomotor Skills Training Outcomes in Quadrotor Teleoperation

May 24, 2024

Abstract: The workforce will need to continually upskill in order to meet the evolving demands of industry, especially when working with robotic and autonomous systems. Current training methods are not scalable and do not adapt to the skills that learners already possess. In this work, we develop a system that automatically assesses learner skill in a quadrotor teleoperation task using temporal logic task specifications. This assessment is used to generate multimodal feedback based on the principles of effective formative feedback. Participants perceived the feedback positively. Those receiving formative feedback viewed the feedback as more actionable than those receiving summary statistics. Participants in the multimodal feedback condition were more likely to achieve a safe landing and increased their safe landings more over the experiment compared to other feedback conditions. Finally, we identify themes to improve adaptive feedback and discuss how training for complex psychomotor tasks can be integrated with learning theories.

HRI Curriculum for a Liberal Arts Education

Mar 20, 2024

Abstract: In this paper, we discuss the opportunities and challenges of teaching a human-robot interaction course at an undergraduate liberal arts college. We provide a sample syllabus adapted from a previous version of a course.

Mathematical Models of Human Drivers Using Artificial Risk Fields

May 24, 2022

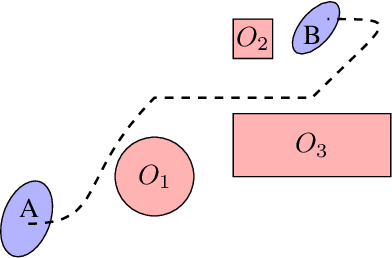

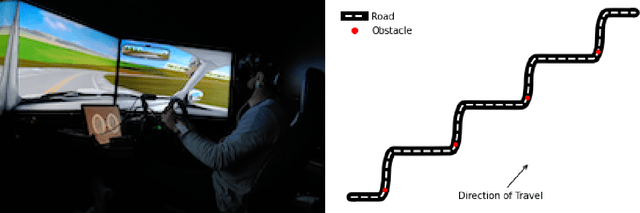

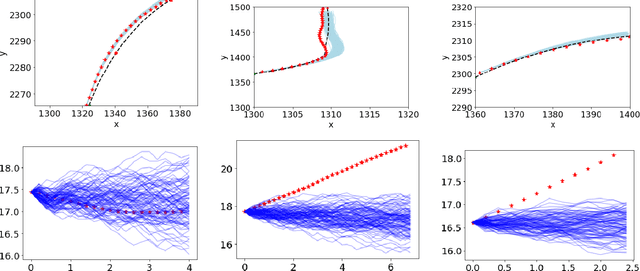

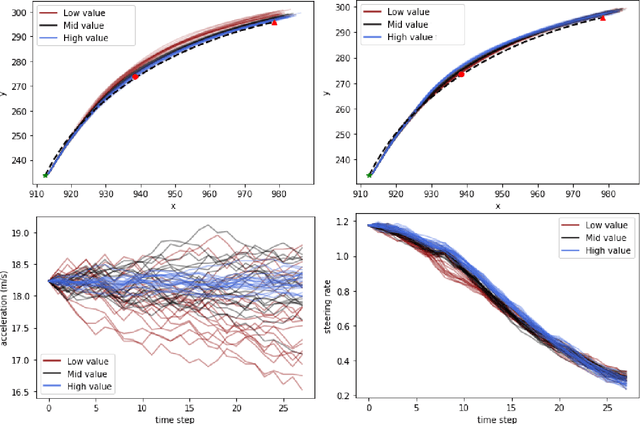

Abstract: In this paper, we use the concept of artificial risk fields to predict how human operators control a vehicle in response to upcoming road situations. A risk field assigns a non-negative risk measure to the state of the system in order to model how close that state is to violating a safety property, such as hitting an obstacle or exiting the road. Using risk fields, we construct a stochastic model of the operator that maps from states to likely actions. We demonstrate our approach on a driving task wherein human subjects are asked to drive a car inside a realistic driving simulator while avoiding obstacles placed on the road. We show that the most likely risk field given the driving data is obtained by solving a convex optimization problem. Next, we apply the inferred risk fields to generate distinct driving behaviors while comparing predicted trajectories against ground truth measurements. We observe that the risk fields predict future trajectory distributions with high accuracy for prediction horizons of up to twenty seconds. At the same time, we observe some challenges, such as the inability to account for how drivers choose to accelerate or decelerate based on the road conditions.
