Phyo Thuta Aung

Enabling a Social Robot to Process Social Cues to Detect when to Help a User

Oct 18, 2021

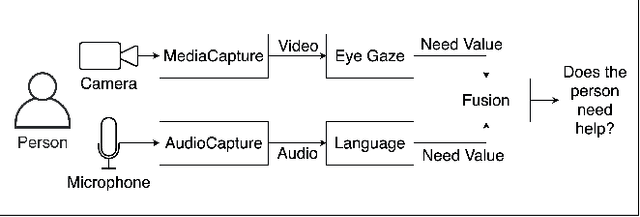

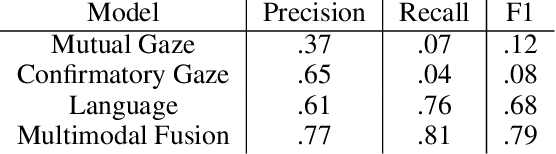

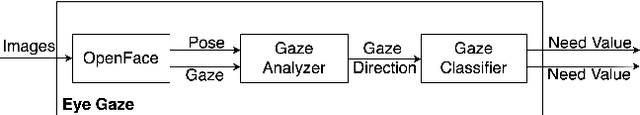

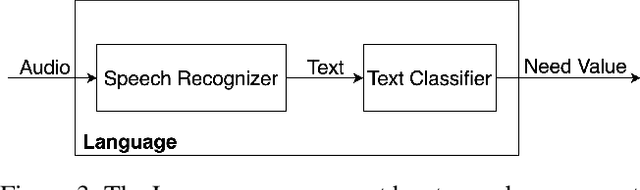

Abstract:It is important for socially assistive robots to be able to recognize when a user needs and wants help. Such robots need to be able to recognize human needs in a real-time manner so that they can provide timely assistance. We propose an architecture that uses social cues to determine when a robot should provide assistance. Based on a multimodal fusion approach upon eye gaze and language modalities, our architecture is trained and evaluated on data collected in a robot-assisted Lego building task. By focusing on social cues, our architecture has minimal dependencies on the specifics of a given task, enabling it to be applied in many different contexts. Enabling a social robot to recognize a user's needs through social cues can help it to adapt to user behaviors and preferences, which in turn will lead to improved user experiences.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge