Katrin Lohan

Proceedings of the AI-HRI Symposium at AAAI-FSS 2020

Nov 11, 2020

Abstract: The Artificial Intelligence (AI) for Human-Robot Interaction (HRI) Symposium has been a successful venue of discussion and collaboration since 2014. In that time, the related topic of trust in robotics has been rapidly growing, with major research efforts at universities and laboratories across the world. Indeed, many of the past participants in AI-HRI have been or are now involved with research into trust in HRI. While trust has no consensus definition, it is regularly associated with predictability, reliability, inciting confidence, and meeting expectations. Furthermore, it is generally believed that trust is crucial for adoption of both AI and robotics, particularly when transitioning technologies from the lab to industrial, social, and consumer applications. However, how does trust apply to the specific situations we encounter in the AI-HRI sphere? Is the notion of trust in AI the same as that in HRI? We see a growing need for research that lives directly at the intersection of AI and HRI that is serviced by this symposium. Over the course of the two-day meeting, we propose to create a collaborative forum for discussion of current efforts in trust for AI-HRI, with a sub-session focused on the related topic of explainable AI (XAI) for HRI.

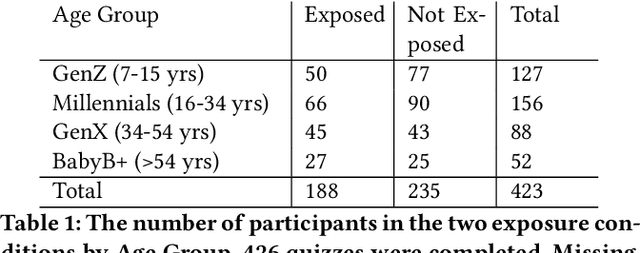

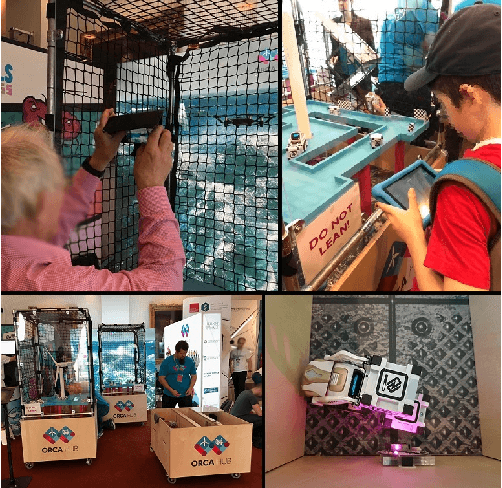

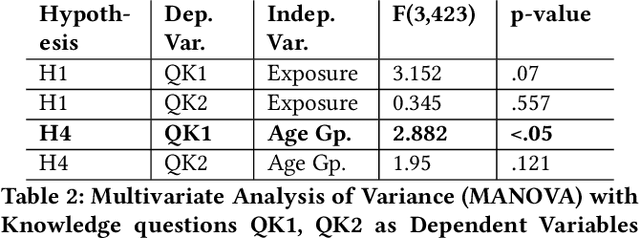

Robots in the Danger Zone: Exploring Public Perception through Engagement

Apr 01, 2020

Abstract: Public perceptions of Robotics and Artificial Intelligence (RAI) are important in the acceptance, uptake, government regulation and research funding of this technology. Recent research has shown that the public's understanding of RAI can be negative or inaccurate. We believe effective public engagement can help ensure that public opinion is better informed. In this paper, we describe our first iteration of a high-throughput in-person public engagement activity. We describe the use of a light-touch quiz-format survey instrument to integrate in-the-wild research participation into the engagement, allowing us to probe both the effectiveness of our engagement strategy and public perceptions of the future roles of robots and humans working in dangerous settings, such as in the off-shore energy sector. We critique our methods and share interesting results on generational differences within the public's view of the future of Robotics and AI in hazardous environments. These findings include that older people's views about the future of robots in hazardous environments were not swayed by exposure to our exhibit, while the views of younger people were affected by our exhibit, leading us to consider carefully in future how to more effectively engage with and inform older people.

* Accepted in HRI 2020, Keywords: Human robot interaction, robotics, artificial intelligence, public engagement, public perceptions of robots, robotics and society

Proceedings of the AI-HRI Symposium at AAAI-FSS 2019

Sep 19, 2019

Abstract: The past few years have seen rapid progress in the development of service robots. Universities and companies alike have launched major research efforts toward the deployment of ambitious systems designed to aid human operators performing a variety of tasks. These robots are intended to make those who may otherwise need to live in assisted care facilities more independent, to help workers perform their jobs, or simply to make life more convenient. Service robots provide a powerful platform on which to study Artificial Intelligence (AI) and Human-Robot Interaction (HRI) in the real world. Research sitting at the intersection of AI and HRI is crucial to the success of service robots if they are to fulfill their mission. This symposium seeks to highlight research enabling robots to effectively interact with people autonomously while modeling, planning, and reasoning about the environment that the robot operates in and the tasks that it must perform. AI-HRI deals with the challenge of interacting with humans in environments that are relatively unstructured or which are structured around people rather than machines, as well as the possibility that the robot may need to interact naturally with people rather than through teach pendants, programming, or similar interfaces.

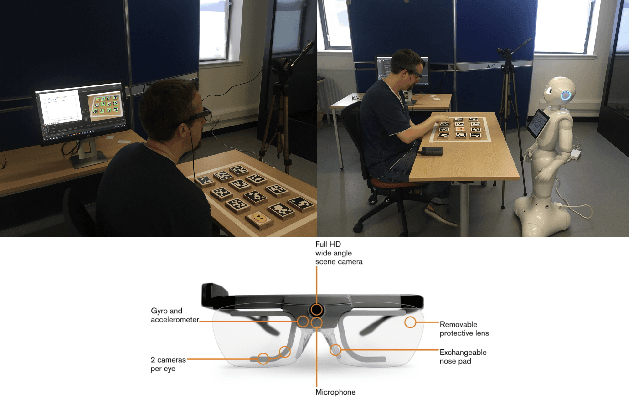

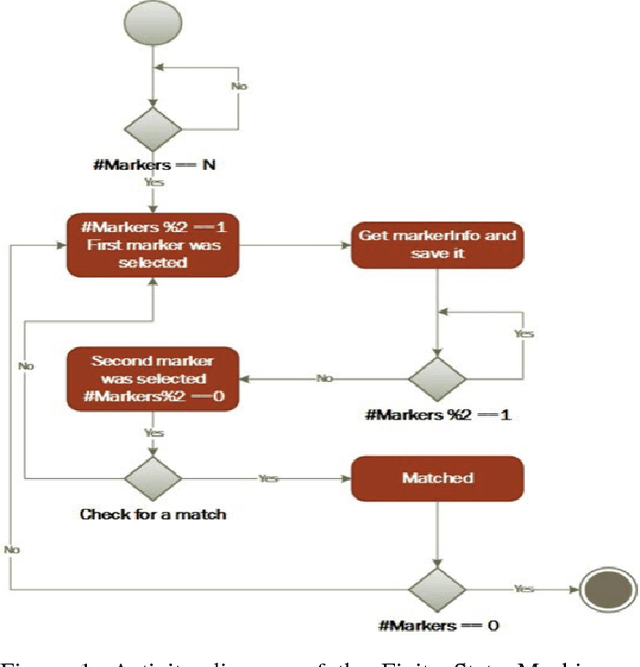

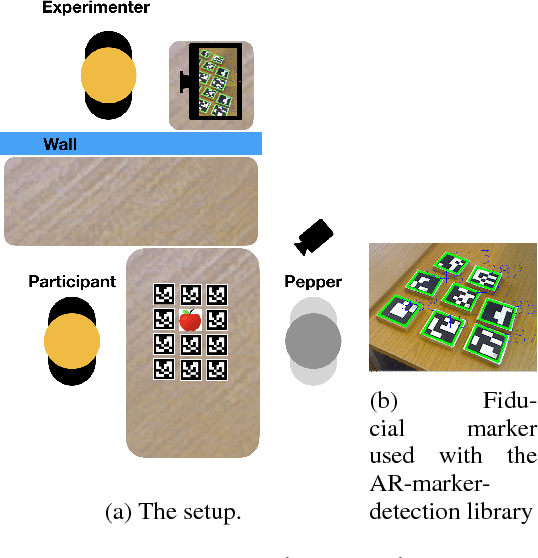

Trust and Cognitive Load During Human-Robot Interaction

Sep 11, 2019

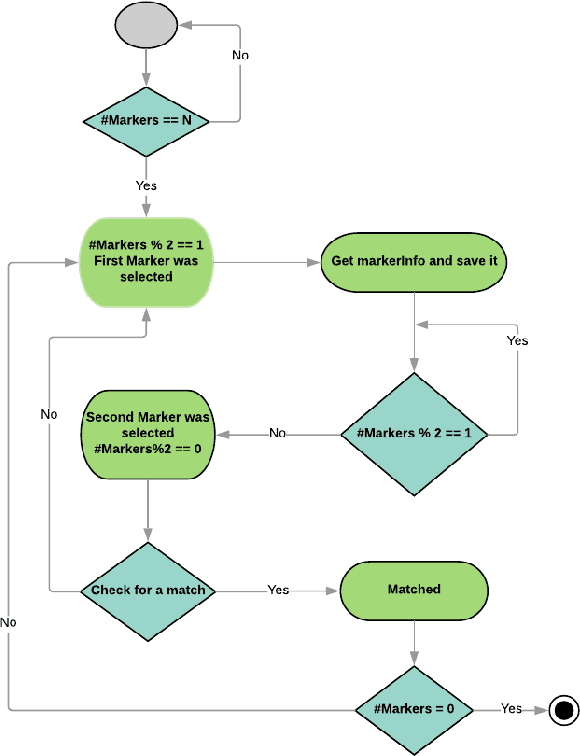

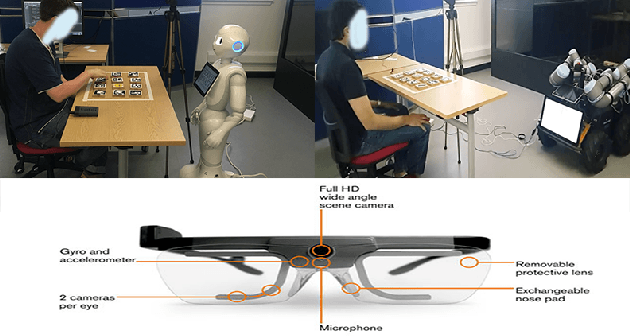

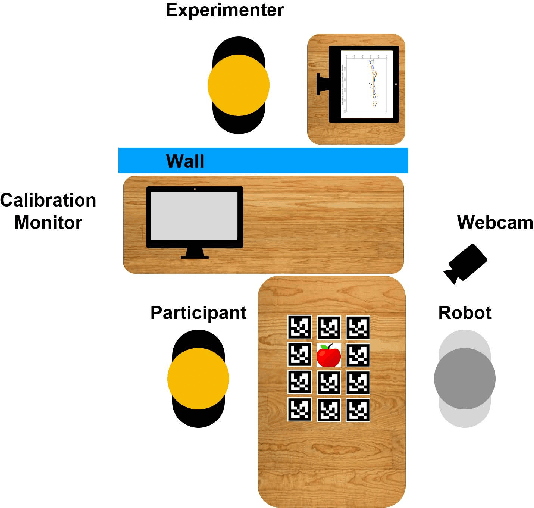

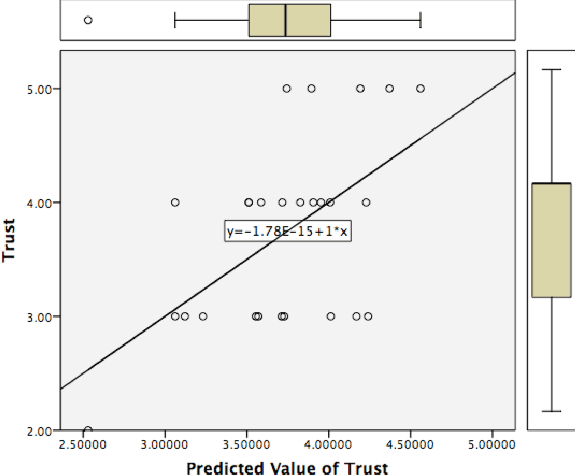

Abstract: This paper presents an exploratory study to understand the relationship between a human's cognitive load, trust, and anthropomorphism during human-robot interaction. To understand the relationship, we created a "Matching the Pair" game that participants could play collaboratively with one of two robot types, Husky or Pepper. The goal was to understand if humans would trust the robot as a teammate while being in a game-playing situation that demanded a high level of cognitive load. Using a humanoid vs. a technical robot, we also investigated the impact of physical anthropomorphism, and we furthermore tested the impact of robot error rate on subsequent judgments and behavior. Our results showed that there was an inversely proportional relationship between trust and cognitive load, suggesting that as the amount of cognitive load increased in the participants, their ratings of trust decreased. We also found a triple interaction effect between robot type, error rate, and participants' ratings of trust. We found that participants perceived Pepper to be more trustworthy in comparison with the Husky robot after playing the game with both robots under the high error-rate condition. On the contrary, Husky was perceived as more trustworthy than Pepper when it was depicted as featuring a low error rate. Our results are interesting and call for further investigation of the impact of physical anthropomorphism in combination with variable error rates of the robot.

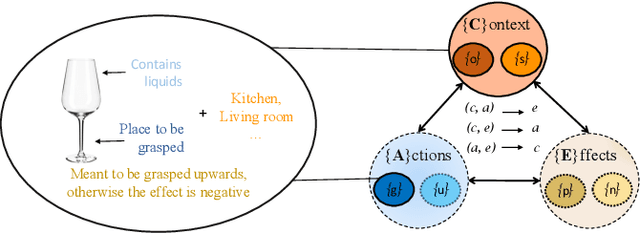

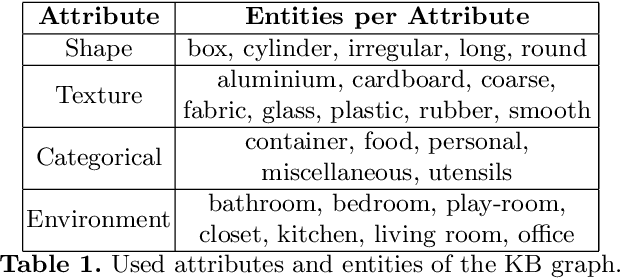

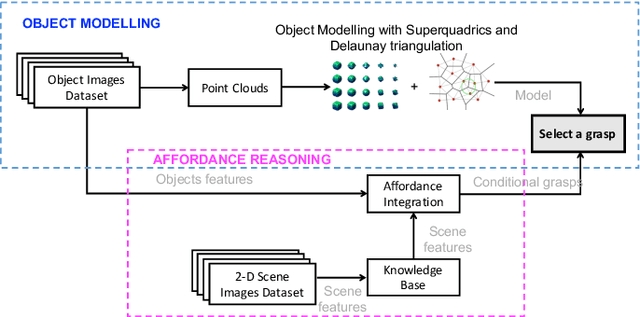

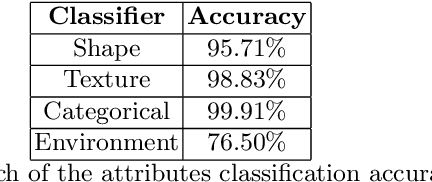

Reasoning on Grasp-Action Affordances

May 25, 2019

Abstract: Artificial intelligence is essential to succeed in challenging activities that involve dynamic environments, such as object manipulation tasks in indoor scenes. Most of the state-of-the-art literature explores robotic grasping methods by focusing exclusively on attributes of the target object. When it comes to human perceptual learning approaches, these physical qualities are not only inferred from the object, but also from the characteristics of the surroundings. This work proposes a method that includes environmental context to reason on an object affordance to then deduce its grasping regions. This affordance is reasoned using a ranked association of visual semantic attributes harvested in a knowledge base graph representation. The framework is assessed using standard learning evaluation metrics and the zero-shot affordance prediction scenario. The resulting grasping areas are compared with unseen labelled data to assess their accuracy matching percentage. The outcome of this evaluation suggests the autonomy capabilities of the proposed method for object interaction applications in indoor environments.

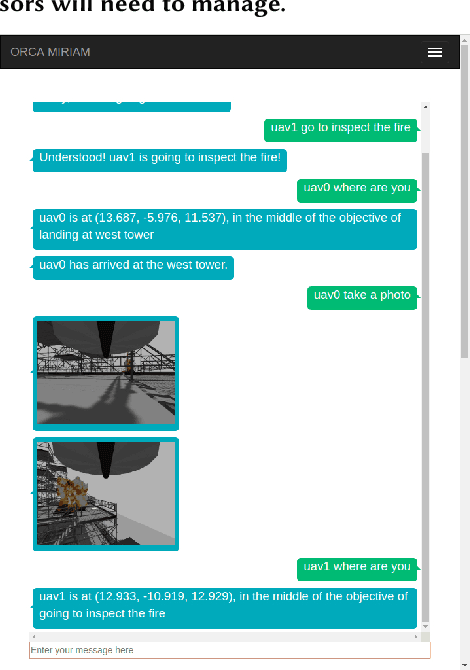

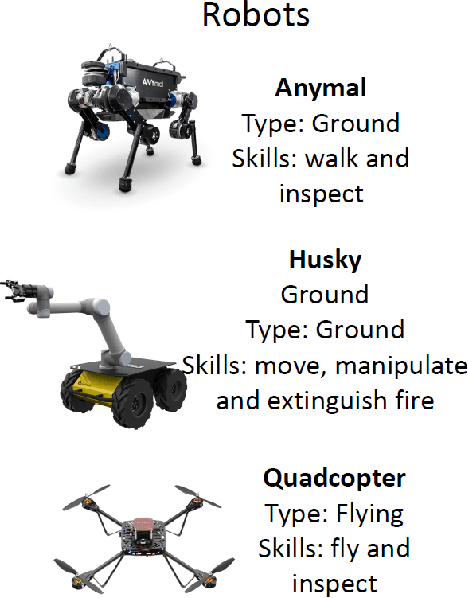

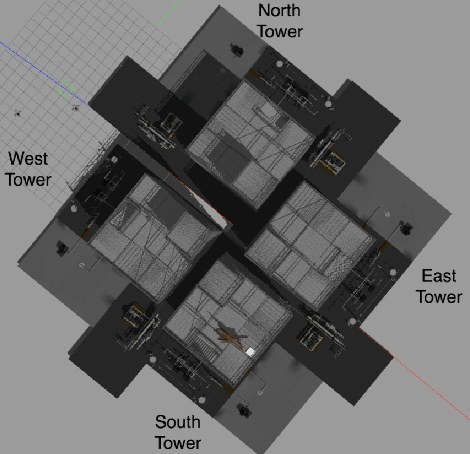

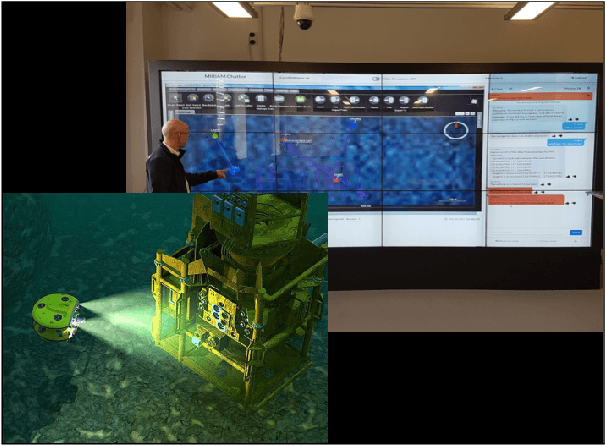

Challenges in Collaborative HRI for Remote Robot Teams

May 17, 2019

Abstract: Collaboration between human supervisors and remote teams of robots is highly challenging, particularly in high-stakes, distant, hazardous locations, such as off-shore energy platforms. In order for these teams of robots to truly be beneficial, they need to be trusted to operate autonomously, performing tasks such as inspection and emergency response, thus reducing the number of personnel placed in harm's way. As remote robots are generally trusted less than robots in close proximity, we present a solution to instil trust in the operator through a 'mediator robot' that can exhibit social skills, alongside sophisticated visualisation techniques. In this position paper, we present general challenges and then take a closer look at one challenge in particular, discussing an initial study, which investigates the relationship between the level of control the supervisor hands over to the mediator robot and how this affects their trust. We show that the supervisor is more likely to have higher trust overall if their initial experience involves handing over control of the emergency situation to the robotic assistant. We discuss this result here, as well as other challenges and interaction techniques for human-robot collaboration.

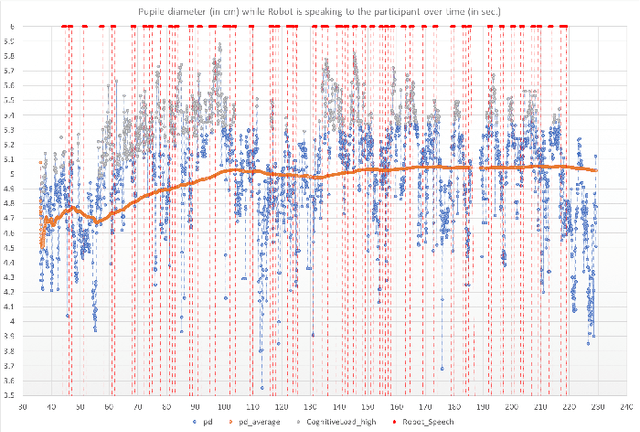

Using Pupil Diameter to Measure Cognitive Load

Nov 29, 2018

Abstract: In this paper, we present a method for measuring cognitive load and providing online real-time feedback using the Tobii Pro 2 eye-tracking glasses. The system is envisaged to be capable of estimating high cognitive load states and situations, and of adjusting human-machine interfaces to the user's needs. The system uses well-known metrics such as average pupillary size over time. Our system can provide cognitive load feedback at 17-18 Hz. We elaborate on the results of an HRI study using this tool to show its functionality.
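The abstract above names average pupillary size over time as one metric. The following is a minimal sketch of that idea, not the authors' implementation: it keeps a sliding window of pupil diameter samples and reports the relative change of the windowed mean against a resting baseline. The class name, the 18-sample window (about one second at the reported feedback rate), and the 10% threshold are all illustrative assumptions.

```python
from collections import deque
from statistics import mean


class PupilLoadEstimator:
    """Hypothetical helper: windowed mean pupil diameter versus a baseline."""

    def __init__(self, baseline_mm, window_size=18, threshold=0.10):
        # baseline_mm: resting pupil diameter, assumed to be measured beforehand.
        # window_size and threshold are illustrative values, not from the paper.
        self.baseline_mm = baseline_mm
        self.threshold = threshold
        self.window = deque(maxlen=window_size)

    def update(self, diameter_mm):
        """Add one sample; return the relative change of the windowed mean."""
        # Skip invalid samples (for example blinks reported as None or 0).
        if diameter_mm is not None and diameter_mm > 0:
            self.window.append(diameter_mm)
        if not self.window:
            return None
        return mean(self.window) / self.baseline_mm - 1.0

    def is_high_load(self, diameter_mm):
        """True when the windowed mean exceeds the baseline by the threshold."""
        change = self.update(diameter_mm)
        return change is not None and change > self.threshold
```

Calling `is_high_load` once per incoming sample would give a feedback signal at the sampling rate; a real system would also need baseline calibration and correction for lighting changes, which this sketch leaves out.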

Playing Pairs with Pepper

Oct 17, 2018

Abstract: As robots become increasingly prevalent in almost all areas of society, the factors affecting humans' trust in those robots become increasingly important. This paper is intended to investigate the factor of robot attributes, looking specifically at the relationship between anthropomorphism and human development of trust. To achieve this, an interaction game, Matching the Pairs, was designed and implemented on two robots of varying levels of anthropomorphism, Pepper and Husky. Participants completed both pre- and post-test questionnaires that were compared and analyzed predominantly with the use of quantitative methods, such as paired sample t-tests. Post-test analyses suggested a positive relationship between trust and anthropomorphism, with 80% of participants confirming that the robots' adoption of facial features assisted in establishing trust. The results also indicated a positive relationship between interaction and trust, with 90% of participants confirming this for both robots post-test.
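For readers unfamiliar with the paired sample t-test mentioned above, here is a minimal sketch of how the test statistic is computed from pre- and post-test scores of the same participants. The function name and the example scores are hypothetical and are not data from the study; a p-value would additionally require the t distribution, which is omitted here.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(pre, post):
    """Return (t, degrees_of_freedom) for paired pre/post scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    if len(pre) < 2:
        raise ValueError("at least two pairs are required")
    # Work on per-participant differences (post minus pre).
    diffs = [b - a for a, b in zip(pre, post)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    n = len(diffs)
    t = mean(diffs) / (sd / sqrt(n))
    return t, n - 1


# Hypothetical questionnaire scores for four participants.
t, df = paired_t_statistic([1, 2, 3, 4], [2, 4, 5, 5])
print(f"t = {t:.3f}, df = {df}")
```

A large positive `t` indicates that post-test scores were consistently higher than pre-test scores relative to the spread of the differences.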

Proceedings of the AI-HRI Symposium at AAAI-FSS 2018

Sep 18, 2018

Abstract: The goal of the Interactive Learning for Artificial Intelligence (AI) for Human-Robot Interaction (HRI) symposium is to bring together the large community of researchers working on interactive learning scenarios for interactive robotics. While current HRI research involves investigating ways for robots to effectively interact with people, HRI's overarching goal is to develop robots that are autonomous while intelligently modeling and learning from humans. These goals greatly overlap with some central goals of AI and interactive machine learning, such that HRI is an extremely challenging problem domain for interactive learning and will elicit fresh problem areas for robotics research. Present-day AI research still does not widely consider situations for interacting directly with humans and within human-populated environments, which present inherent uncertainty in dynamics, structure, and interaction. We believe that the HRI community already offers a rich set of principles and observations that can be used to structure new models of interaction. The human-aware AI initiative has primarily been approached through human-in-the-loop methods that use people's data and feedback to improve refinement and performance of the algorithms, learned functions, and personalization. We thus believe that HRI is an important component to furthering AI and robotics research.

The ORCA Hub: Explainable Offshore Robotics through Intelligent Interfaces

Mar 06, 2018

Abstract: We present the UK Robotics and Artificial Intelligence Hub for Offshore Robotics for Certification of Assets (ORCA Hub), a 3.5-year EPSRC-funded, multi-site project. The ORCA Hub vision is to use teams of robots and autonomous intelligent systems (AIS) to work on offshore energy platforms to enable cheaper, safer and more efficient working practices. The ORCA Hub will research, integrate, validate and deploy remote AIS solutions that can operate with existing and future offshore energy assets and sensors, interacting safely in autonomous or semi-autonomous modes in complex and cluttered environments, co-operating with remote operators. The goal is that through the use of such robotic systems offshore, the need for personnel will decrease. To enable this to happen, the remote operator will need a high level of situation awareness, and key to this is the transparency of what the autonomous systems are doing and why. This increased transparency will facilitate a trusting relationship, which is particularly key in high-stakes, hazardous situations.
