Richard G. Freedman

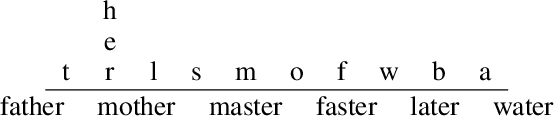

A Symbolic Representation of Human Posture for Interpretable Learning and Reasoning

Oct 17, 2022

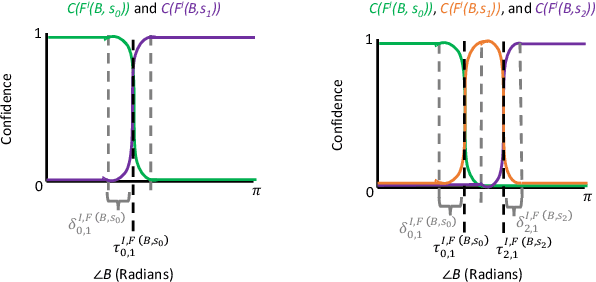

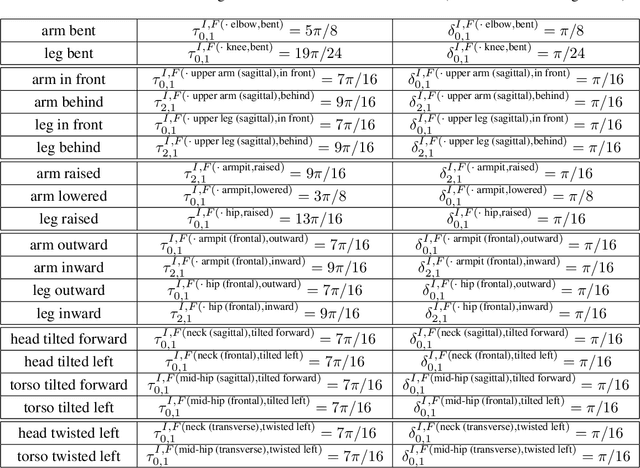

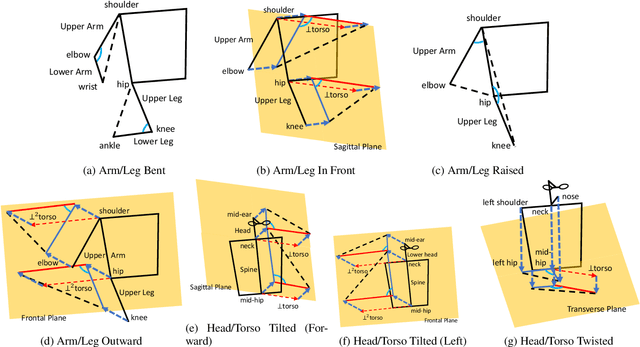

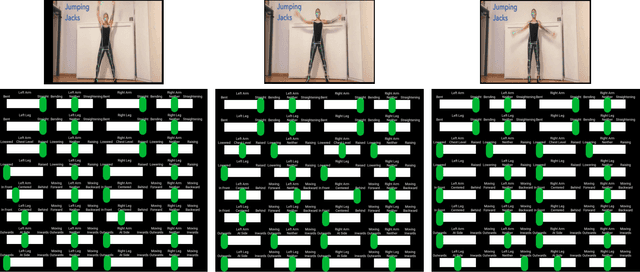

Abstract: Robots that interact with humans in a physical space or application need to think about the person's posture, which typically comes from visual sensors like cameras and infrared sensors. Artificial intelligence and machine learning algorithms use information from these sensors either directly or after some level of symbolic abstraction, and the latter usually partitions the range of observed values to discretize the continuous signal data. Although these representations have been effective in a variety of algorithms with respect to accuracy and task completion, the underlying models are rarely interpretable, which also makes their outputs more difficult to explain to people who request them. Instead of focusing on the possible sensor values that are familiar to a machine, we introduce a qualitative spatial reasoning approach that describes the human posture in terms that are more familiar to people. This paper explores the derivation of our symbolic representation at two levels of detail and its preliminary use as features for interpretable activity recognition.

Provenance-Based Assessment of Plans in Context

Nov 03, 2020

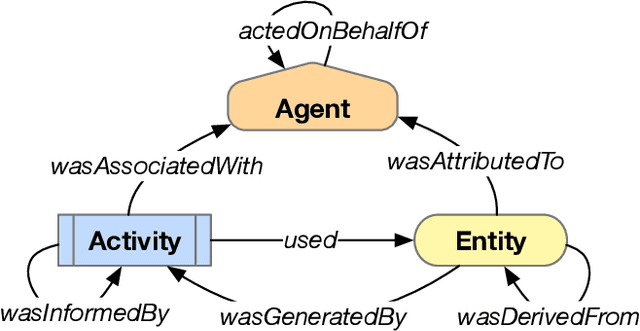

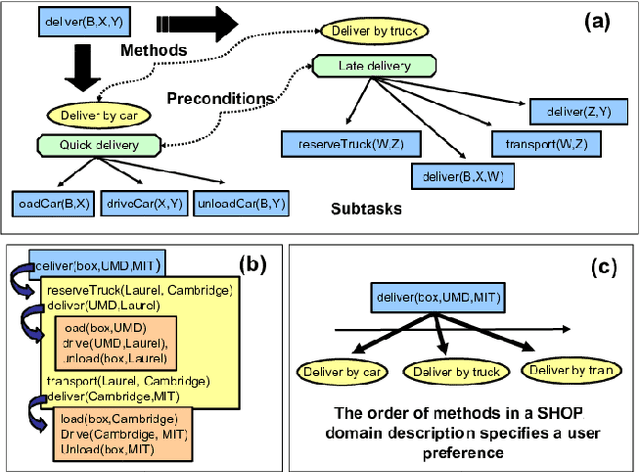

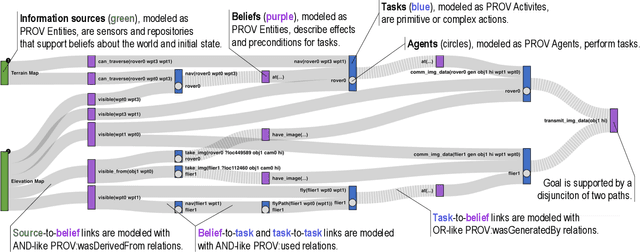

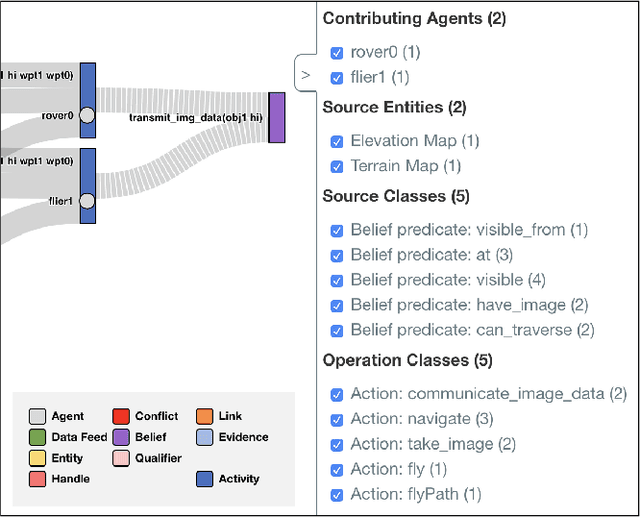

Abstract: Many real-world planning domains involve diverse information sources, external entities, and variable-reliability agents, all of which may impact the confidence, risk, and sensitivity of plans. Humans reviewing a plan may lack context about these factors; however, this information is available during the domain generation, which means it can also be interwoven into the planner and its resulting plans. This paper presents a provenance-based approach to explaining automated plans. Our approach (1) extends the SHOP3 HTN planner to generate dependency information, (2) transforms the dependency information into an established PROV-O representation, and (3) uses graph propagation and TMS-inspired algorithms to support dynamic and counter-factual assessment of information flow, confidence, and support. We qualify our approach's explanatory scope with respect to explanation targets from the automated planning literature and the information analysis literature, and we demonstrate its ability to assess a plan's pertinence, sensitivity, risk, assumption support, diversity, and relative confidence.

* 9 pages, 7 figures, included in the Proceedings of the 2020 ICAPS Workshop on Explainable AI Planning (XAIP)

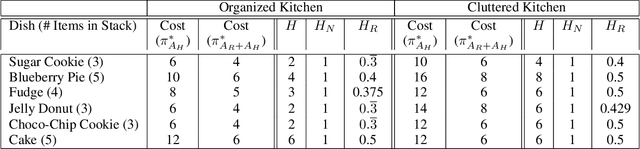

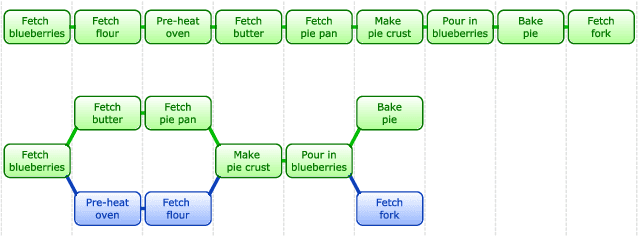

Helpfulness as a Key Metric of Human-Robot Collaboration

Oct 10, 2020

Abstract: As robotic teammates become more common in society, people will assess the robots' roles in their interactions along many dimensions. One such dimension is effectiveness: people will ask whether their robotic partners are trustworthy and effective collaborators. This raises a crucial question: how can we quantitatively measure the helpfulness of a robotic partner for a given task at hand? This paper seeks to answer this question with regard to the interactive robot's decision making. We describe a clear, concise, and task-oriented metric applicable to many different planning and execution paradigms. The proposed helpfulness metric is fundamental to assessing the benefit that a partner has on a team for a given task. In this paper, we define helpfulness, illustrate it on concrete examples from a variety of domains, discuss its properties and ramifications for planning interactions with humans, and present preliminary results.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2019

Sep 19, 2019

Abstract: The past few years have seen rapid progress in the development of service robots. Universities and companies alike have launched major research efforts toward the deployment of ambitious systems designed to aid human operators performing a variety of tasks. These robots are intended to make those who may otherwise need to live in assisted care facilities more independent, to help workers perform their jobs, or simply to make life more convenient. Service robots provide a powerful platform on which to study Artificial Intelligence (AI) and Human-Robot Interaction (HRI) in the real world. Research sitting at the intersection of AI and HRI is crucial to the success of service robots if they are to fulfill their mission. This symposium seeks to highlight research enabling robots to effectively interact with people autonomously while modeling, planning, and reasoning about the environment that the robot operates in and the tasks that it must perform. AI-HRI deals with the challenge of interacting with humans in environments that are relatively unstructured or which are structured around people rather than machines, as well as the possibility that the robot may need to interact naturally with people rather than through teach pendants, programming, or similar interfaces.

Responsive Planning and Recognition for Closed-Loop Interaction

Sep 13, 2019

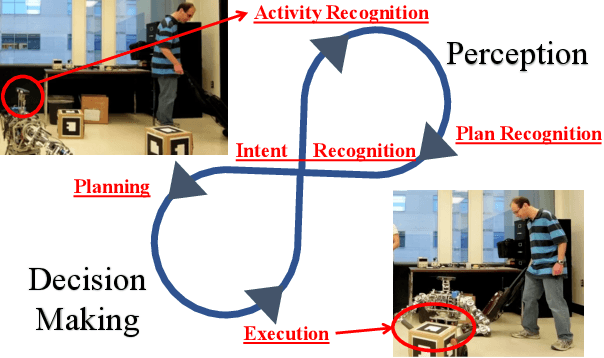

Abstract: Many intelligent systems currently interact with others using at least one of fixed communication inputs or preset responses, resulting in rigid interaction experiences and extensive efforts developing a variety of scenarios for the system. Fixed inputs limit the natural behavior of the user in order to effectively communicate, and preset responses prevent the system from adapting to the current situation unless it was specifically implemented. Closed-loop interaction instead focuses on dynamic responses that account for what the user is currently doing based on interpretations of their perceived activity. Agents employing closed-loop interaction can also monitor their interactions to ensure that the user responds as expected. We introduce a closed-loop interactive agent framework that integrates planning and recognition to predict what the user is trying to accomplish and autonomously decide on actions to take in response to these predictions. Based on a recent demonstration of such an assistive interactive agent in a turn-based simulated game, we also discuss new research challenges that are not present in the areas of artificial intelligence planning or recognition alone.

Proceedings of the AI-HRI Symposium at AAAI-FSS 2018

Sep 18, 2018

Abstract: The goal of the Interactive Learning for Artificial Intelligence (AI) for Human-Robot Interaction (HRI) symposium is to bring together the large community of researchers working on interactive learning scenarios for interactive robotics. While current HRI research involves investigating ways for robots to effectively interact with people, HRI's overarching goal is to develop robots that are autonomous while intelligently modeling and learning from humans. These goals greatly overlap with some central goals of AI and interactive machine learning, such that HRI is an extremely challenging problem domain for interactive learning and will elicit fresh problem areas for robotics research. Present-day AI research still does not widely consider situations for interacting directly with humans and within human-populated environments, which present inherent uncertainty in dynamics, structure, and interaction. We believe that the HRI community already offers a rich set of principles and observations that can be used to structure new models of interaction. The human-aware AI initiative has primarily been approached through human-in-the-loop methods that use people's data and feedback to improve refinement and performance of the algorithms, learned functions, and personalization. We thus believe that HRI is an important component to furthering AI and robotics research.

Blue Sky Ideas in Artificial Intelligence Education from the EAAI 2017 New and Future AI Educator Program

Feb 01, 2017

Abstract: The 7th Symposium on Educational Advances in Artificial Intelligence (EAAI'17, co-chaired by Sven Koenig and Eric Eaton) launched the EAAI New and Future AI Educator Program to support the training of early-career university faculty, secondary school faculty, and future educators (PhD candidates or postdocs who intend a career in academia). As part of the program, awardees were asked to address one of the following "blue sky" questions:

* How could/should Artificial Intelligence (AI) courses incorporate ethics into the curriculum?
* How could we teach AI topics at an early undergraduate or a secondary school level?
* AI has the potential for broad impact to numerous disciplines. How could we make AI education more interdisciplinary, specifically to benefit non-engineering fields?

This paper is a collection of their responses, intended to help motivate discussion around these issues in AI education.
